Talend Platform For Big Data

Exercise Book

Last updated: September 15, 2014

Talend Platform Version: 5.5.1

Any total or partial reproduction without the consent of the author or beneficiary, devisee or legatee is not allowed (law of 11 March 1957, par. 1 of article 40). Representation or reproduction, by any means, would be considered an infringement of copyright under articles 425 et seq. of the Penal Code. The law of 11 March 1957, par. 2 and 3 of article 41, allows the creation of copies and reproductions exclusively for the private use of the copier and not for collective use on the one hand, while on the other it allows analysts to use short quotes for purposes of illustration.

DISCOVERY WORKSHOP

Talend Platform for Big Data

TABLE OF CONTENTS

DEMO 1: BIG DATA SANDBOX

EXERCISE 1: CREATING METADATA

EXERCISE 2: LOADING DATA TO HDFS

EXERCISE 3: INTRODUCTION TO HDFS COMPONENTS

EXERCISE 4: CONVERTING A JOB TO USE MAP/REDUCE

EXERCISE 5: INTRODUCTION TO THE PIG COMPONENTS

EXERCISE 6: INTRODUCTION TO THE HIVE ELT COMPONENTS

Demo 1: Big Data Sandbox

Download the Big Data Sandbox:

Choose between the Cloudera, Hortonworks, or MapR distributions, in either VMware or VirtualBox format.

A 30-day Platform for Big Data evaluation license will be generated and emailed to you.

A booklet is included with setup instructions, as well as sample demo jobs.

Exercise 1: Creating Metadata

Summary:

In this exercise, you will create metadata connections to the customer and product CSV files.

Purpose:

Understand how to set up a metadata connection to a CSV file.

Step 1:

In the Repository, under Metadata, right-click on File Delimited and select Create File Delimited.

Complete the form to document this metadata. We will name this connector "Customers_BD". Filling out the purpose and description helps in understanding the job later on, and it is recommended that those fields be filled in during job creation.

Step 2:

Click Browse and point to the Customers.out file.

The Format field allows you to specify the EOL character (which is OS-dependent). In this case, it is a Unix/Linux file.

File Viewer shows an outline of the first 50 lines of the file.

Step 3: Configure the file parameters.

1. Encoding, Field separator, and Row separator: the defaults are US-ASCII, ";" and "\n". Just verify them.

2. Rows to ignore: leave this blank. It can be used to skip lines if you have comments in your data file. When you perform the action in item 4 below, the Header value will be changed to 1 to skip the heading row.

3. Escape Char Settings: select Delimited.

4. Select the checkbox for Set Heading Row as column names and click the Refresh Preview button. This automatically updates the Header field (in the area from item 2) to 1.

The Preview shows the first 50 lines extracted from the file; it uses the same code as that generated by the Jobs, so it is an accurate rendering.

The CSV mode is more resource-consuming than the Delimited mode.
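The file parameters from Step 3 can be illustrated outside the Studio with a minimal Python sketch. It is not the code Talend generates; the sample rows and the `read_delimited` helper are hypothetical, and turning off quote handling is only loosely comparable to the Delimited mode. When no heading row is used, the sketch falls back to the Column0, Column1 naming that the Studio applies to files without headers.

```python
import csv
import io

# Hypothetical sample data; the real Customers.out columns are not listed in this booklet.
SAMPLE = "CustomerNumber;Name;City\n1000001;Ada;Paris\n1000002;Bob;Lyon\n"


def read_delimited(text, field_sep=";", use_heading_row=True):
    """Split delimited text into records, one dict per data row."""
    reader = csv.reader(
        io.StringIO(text, newline=""),
        delimiter=field_sep,
        quoting=csv.QUOTE_NONE,  # plain splitting, no quote handling
    )
    rows = [row for row in reader if row]  # drop empty lines
    if not rows:
        return []
    if use_heading_row:
        # Equivalent of setting Header to 1: the first row names the columns.
        names, rows = rows[0], rows[1:]
    else:
        # No heading row: fall back to generic column names.
        names = [f"Column{i}" for i in range(len(rows[0]))]
    return [dict(zip(names, row)) for row in rows]


records = read_delimited(SAMPLE)
print(records[0])
```

Every value stays a string at this stage; typing the columns is the job of the schema in Step 4.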

Step 4: The preview produces the following schema:

Forbidden characters in the first column (the column name) are replaced with an underscore (_), if applicable.

The Key column cannot be defined automatically when reading the file and must be entered manually.

The Type column is guessed from the first 50 extracted lines. Verify that it is correct for each of the columns.

The Length and Precision columns are also determined from the first 50 extracted lines. Change the lengths for each column as needed.

The Nullable column is checked by default for every column and can be changed if necessary.

While this schema process is intended to save you time, the result should always be reviewed manually.

The Guess button lets you regenerate the schema at any time from the first 50 lines of the file. (This number of lines can also be set by the user in Window>Preferences>Talend.)

The Load and Save buttons let you load and save your schemas in XML format. To save and view one of your schemas, export it to a file path, name the file, and select an extension of your choice; then open the file and look at the simple XML structure (Menu File>Open File).

If you don't format the columns as shown above, you won't be able to use your data correctly. The CustomerNumber column should have a length of 7.
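Why the guessed schema needs a manual review can be seen in a small Python sketch of sample-based inference. This is an assumption about the general technique, not Talend's actual algorithm; `guess_schema`, the type names, and the sample rows are hypothetical.

```python
def _parses_as(value, converter):
    """Return True if converter(value) succeeds."""
    try:
        converter(value)
        return True
    except ValueError:
        return False


def guess_schema(names, rows, sample_size=50):
    """Guess type and length per column from the first sample_size rows only."""
    sample = rows[:sample_size]
    schema = []
    for index, name in enumerate(names):
        values = [row[index] for row in sample if row[index] != ""]
        if values and all(_parses_as(v, int) for v in values):
            col_type = "Integer"
        elif values and all(_parses_as(v, float) for v in values):
            col_type = "Float"
        else:
            col_type = "String"
        schema.append({
            "name": name,
            "type": col_type,
            "length": max((len(v) for v in values), default=0),
            "nullable": True,  # checked by default, as in the wizard
        })
    return schema


# 50 well-behaved rows followed by one row that breaks the guess.
rows = [["1000001", "Ada", "12.5"]] * 50 + [["10000022", "Bartholomew", "n/a"]]
for column in guess_schema(["CustomerNumber", "Name", "Amount"], rows):
    print(column)
```

In this sketch the 51st row is longer and holds a non-numeric amount, yet the guessed lengths and types only reflect the first 50 rows. That is the kind of mismatch a manual review catches.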

Step 5: Do the same to create the metadata corresponding to the Products.out file.

Exercise 2: Loading data to HDFS

Summary:

In this exercise, you will map data from the customer and product files to HDFS files.

Purpose:

Understand how to create a map.

Understand how to map a CSV file to an HDFS file.

Preparation:

In the Repository, right-click on Job Designs and select Create Job.

Fill in Name, Purpose, Description and Status. Name is required as it will appear in the Repository. Name this job "Exercise02".

Step 1:

In the Repository, select Metadata>File delimited>Customers_BD and drag-and-drop it to the Job Designer.

Choose the tFileInputDelimited component from the popup and click OK.

In the Designer, type hdfsout and double-click the tHDFSOutput component to drop it into the Designer. Place it to the right of tFileInputDelimited. The component can also be found in the Palette under Big Data->HDFS.

Right-click on tFileInputDelimited and, holding the button down, drag over to tHDFSOutput so that the (Main) row1 link appears.

Configure the tHDFSOutput component.

Select the Run tab, click on the Run button to run the job, then verify the content of the file you created.

Step 2:

In the same Job Designer window, create a similar process to load the Products records to HDFS.

This time, select Metadata>File delimited>Products as your tFileInputDelimited component.

You can copy/paste the tHDFSOutput component from the Customers subjob and connect it with a Main Row connector from the Products input. If you do this, you will need to sync the schema to match the Products schema.

The tHDFSOutput component should be configured like this: sync the target schema and change the file name.

Add an OnSubJobOk trigger to connect the Customer and Product subjobs and run the job.

Verify that both datasets have been created.

You can also use the tHDFSPut component to copy a flat file to an HDFS file. This is the preferred method if no transformations are needed.

The component would be configured as follows to load the data.

Exercise 3: Introduction to HDFS components

Summary:

In this exercise, you will create metadata connections to the HDFS files that you created in

the previous exercise. You will map data from customers and perform aggregations on the

products table to write an HDFS file containing the aggregated product information per

customer. Metadata connections are useful because you can drag and drop them into a job

and do not have to reconfigure the connection parameters every time. Also, this allows you

to define the schema one time and reuse it in multiple jobs.

Purpose:

Understand how to create an HDFS connection.

Understand how to use the tAggregateRow component.

Understand how to use the tMap component.

Preparation:

In the Repository, right-click on Job Designs and select Create Job.

Fill in Name, Purpose, Description and Status. Name is required as it will appear in the Repository. Name this job Exercise_03.

Step 1:

In the Repository, under Metadata, right-click on Hadoop Cluster and select Create Hadoop Cluster.

Complete the form to document this metadata. We will name this connector "Cloudera_Training". Filling out the purpose and description helps in understanding the job later on, and it is recommended that those fields be filled in during job creation.

Fill in the Hadoop Cluster Connection information as follows and click Finish.

Once the connection is created, right-click on the Cloudera_Training connection and select Create HDFS.

Name the HDFS connection HDFS_Training and click on Next.

Change the user name to talend and click on Finish.

Right-click on the HDFS_Training connection that you created and select Retrieve Schema.

Drill down to the location of the files that were created in Exercise 2.

Select the files and click Next.

This screen shows the files that were retrieved (customers and products) and the schemas associated with them. Most of the time, HDFS files will not be written with headers. However, you can configure the tHDFSOutput component to write out the header. Since these files were not written with a header, the column names default to Column0, Column1, etc. You can change them manually, or you can use the schema layout from the CSV files that they were created from. In this case, just accept the defaults; the next step shows how to use the schemas from the CSV files.

From the Metadata->File Delimited objects, expand Customers_BD and then double-click on the metadata object to edit it.

Select all of the columns and click on the Copy button.

Now under the Hadoop Cluster Metadata, select the customers metadata

object and edit it.

Select all of the columns and use the delete button to delete them.

Press the paste button to paste the columns that were copied from the

customers schema.

Perform the same actions to modify the columns of the products schema.

Step 2:

Create a new job and call it Exercise03. Drag the tHDFSConnection for HDFS_Training to the Designer.

Drag the tHDFSInput components for customers and products to the Designer and connect the tHDFSConnection to the customers tHDFSInput component using the OnSubJobOk trigger. Be sure to select the Use Existing Connection option for the inputs.

Add the remaining components so that the job looks like this. Each

component will be configured below.

Configure tAggregateRow as follows.

Configure the tMap component as follows.

Configure the tSortRow component as follows.

Configure the tHDFSOutput component as follows.

Run the job and verify the results.
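As a cross-check for the job's output, the same aggregate, join, and sort logic can be sketched in plain Python. The component settings appear only in screenshots, so several details here are assumptions: the column names (customernumber, amount, totalamount, ordercount) are borrowed from Exercise 6, the join is treated as an inner join, and the sort key is the customer number.

```python
from collections import defaultdict


def aggregate_products(products):
    """Sum and count amount per customer (the aggregation step)."""
    totals = defaultdict(lambda: {"totalamount": 0.0, "ordercount": 0})
    for product in products:
        entry = totals[product["customernumber"]]
        entry["totalamount"] += product["amount"]
        entry["ordercount"] += 1
    return totals


def customer_product(customers, products):
    """Join customers with their aggregates, then sort (the map and sort steps)."""
    totals = aggregate_products(products)
    joined = []
    for customer in customers:
        entry = totals.get(customer["customernumber"])
        if entry is None:
            continue  # assumed inner join: customers without products are dropped
        joined.append({**customer, **entry})
    return sorted(joined, key=lambda row: row["customernumber"])


# Hypothetical sample data.
customers = [
    {"customernumber": "1000002", "name": "Bob"},
    {"customernumber": "1000001", "name": "Ada"},
    {"customernumber": "1000003", "name": "Cy"},
]
products = [
    {"customernumber": "1000001", "amount": 10.0},
    {"customernumber": "1000001", "amount": 5.5},
    {"customernumber": "1000002", "amount": 3.0},
]
for row in customer_product(customers, products):
    print(row)
```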

Exercise 4: Converting a job to use map/reduce

Summary:

In this exercise, you will convert the job that you created in Exercise 3 to run using map/reduce code. There are two ways to convert a standard job to a map/reduce job, and this exercise shows both of them.

Purpose:

Understand how to convert a job to use map/reduce.

Understand which components are available in map/reduce.

Preparation:

Save and close the job that you created in Exercise 3. The job must be

closed to allow you to perform Step 1 below.

Step 1:

Select the job Exercise03 in the Repository under Job Designs, right-click it, and select Edit Properties. Click on the Convert to Map/Reduce job button.

Alternatively, you can drag and drop the standard job to the Map/Reduce Jobs folder.

Both of these methods result in the job being created under the Map/Reduce Jobs folder. Notice that the job is renamed to Exercise03_mr to denote that it is the Map/Reduce version of a standard job.

Select HDFS_Training as the node to provide the Hadoop configuration information.

Open the job Exercise03_mr. Notice that the tHDFSConnection component has a red X by it. This is because the tHDFSConnection component is not available in map/reduce. Processing is handled differently for a map/reduce job, and the connection is no longer needed.

Only a limited set of components is available in map/reduce. In the following steps, you will change the job to run in map/reduce.

Delete the tHDFSConnection component.

The HDFS input and output components no longer have the option to use an existing connection. However, the output folder needs to be specified in the tHDFSOutput component. Partition files will be created in that folder.

The connection information is provided on the Run tab using the Hadoop Configuration option. Change the Property Type to Repository and select the HDFS_Training connection. This provides the appropriate connection information.

In this virtual machine, the map/reduce memory configuration is set up for 1024 MB. The Set Memory option needs to be checked; otherwise the job will try to use more memory, which results in an execution error.

Click on the Toggle Subjobs icon to show the map/reduce steps and run

the job.

Verify the results of the job. If the job runs successfully, each of the

Map and Reduce steps will be filled with green.

Verify that the file was created. Notice that the file name is part-00000 and that it appears under the customers_product_mr folder. This job is running on a cluster with a single partition, so there is only one partition file. If there were multiple partitions, there would be multiple partition files.
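The relationship between partitions and part files can be sketched in a few lines of Python. This only illustrates the idea: Hadoop's own partitioner is based on Java hash codes, while the hypothetical `partition_rows` helper below uses CRC32 as a stand-in, and the file names simply follow the part-00000 pattern seen in this exercise.

```python
import zlib


def partition_rows(rows, key, num_partitions):
    """Assign each row to a part file based on a hash of its key."""
    parts = {f"part-{i:05d}": [] for i in range(num_partitions)}
    for row in rows:
        # CRC32 is a stand-in for Hadoop's hash partitioner (an assumption of this sketch).
        index = zlib.crc32(str(row[key]).encode("utf-8")) % num_partitions
        parts[f"part-{index:05d}"].append(row)
    return parts


# Hypothetical rows keyed by customer number.
rows = [{"customernumber": n} for n in (1000001, 1000002, 1000003, 1000001)]

print(sorted(partition_rows(rows, "customernumber", 1)))  # a single part file
print(sorted(partition_rows(rows, "customernumber", 3)))  # one part file per partition
```

Rows that share a key always land in the same part file, which is what lets each reducer aggregate its keys independently.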

Exercise 5: Introduction to the Pig Components

Summary:

In this exercise, you will replicate the logic from Exercise 3 using the Pig Components.

Purpose:

Understand how to use the tPigLoad component.

Understand how to use the tPigAggregate component.

Understand how to use the tPigMap component.

Understand how to use the tPigStore component.

Preparation:

In the Repository, right-click on Job Designs and select Create Job

Fill in Name, Purpose, Description and Status. Name is required as it will

appear in the Repository. Name this job Exercise_05.

Step 1:

Drag/drop the following components into the Designer and connect them

accordingly.

Configure tPigLoad_1 to reference the customers data. Use the

repository information for the connection and the schema. Fill in the

path for the input file URI.

Configure tPigLoad_2 to reference the products data. Use the repository

information for the connection and the schema. Fill in the path for the

input file URI.

Configure the tPigAggregate component.

Configure the tPigMap component.

Configure the tPigSort component.

Configure the tPigStore component. The Pig code is translated into map/reduce code when the job runs, so the result will be a partition file. Therefore, you need to specify the result folder, not the result file name.

Run the job and verify the resulting file.

To see the generated Pig code, select a component and then click on the Code tab.

In this example, the tPigAggregate component was selected and this is

what the generated code looks like.

Exercise 6: Introduction to the Hive ELT Components

Summary:

In this exercise, you will replicate the logic from Exercise 3 using the Hive ELT Components.

Purpose:

Understand how to create a Hive connection

Understand how to create Hive tables

Understand how to use the tELTHiveInput component.

Understand how to use the tELTHiveMap component.

Understand how to use the tELTHiveOutput component.

Preparation:

In the Repository, right-click on Job Designs and select Create Job

Fill in Name, Purpose, Description and Status. Name is required as it will appear in the Repository. Name this job Exercise_06.

Step 1:

Create a new Hive connection under Cloudera_Training. Name it

Hive_Training

Configure the Database Connection and press Check. Click on Finish to

save the connection.

Create a new job called Exercise_06_Create_Hive_Tables.

Drag the Hive_Training connection to the Designer and select

tHiveConnection as the component type.

Drag/drop two tHiveCreateTable components to the Designer so the job

looks like this.

Step 2:

Configure tHiveCreateTable_1 as follows. This will create a Hive table called customers_training that references any files within the folder /user/talend/data/onlinetraining/customers.

For the Schema, choose the customers metadata. The Hive schema needs to have the associated DB Types filled in. Click on the Edit schema button, select Change to built-in property, and click OK.

Select the appropriate DB Type for each column.

Click on the Advanced Settings tab and select the option to Create an external table. This creates a Hive table that references the HDFS file. If you do not select this option, a physical Hive table is created, which means that any data changes have to be loaded into the Hive table. If you create an external table, any changes made to the referenced HDFS file are visible when reading from the Hive table.
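The difference between the two kinds of table shows up in the DDL. The Python sketch below builds a HiveQL CREATE TABLE statement as a string; it is not the statement Talend generates. The `hive_create_table` helper, the column list, and the ";" field separator are assumptions made for illustration, while the table name and folder come from this step.

```python
def hive_create_table(table, columns, location=None, external=False, field_sep=";"):
    """Build a HiveQL CREATE TABLE statement for a delimited text table."""
    column_list = ",\n  ".join(f"`{name}` {db_type}" for name, db_type in columns)
    keyword = "CREATE EXTERNAL TABLE" if external else "CREATE TABLE"
    ddl = (
        f"{keyword} IF NOT EXISTS `{table}` (\n  {column_list}\n)\n"
        f"ROW FORMAT DELIMITED FIELDS TERMINATED BY '{field_sep}'\n"
        "STORED AS TEXTFILE"
    )
    if location:
        # The table reads whatever files sit in this folder.
        ddl += f"\nLOCATION '{location}'"
    return ddl


# Hypothetical columns and DB Types; pick the real ones in the schema editor.
external_ddl = hive_create_table(
    "customers_training",
    [("customernumber", "INT"), ("name", "STRING")],
    location="/user/talend/data/onlinetraining/customers",
    external=True,
)
print(external_ddl)
```

Dropping an external table removes only its definition and leaves the files in the folder, whereas dropping a table created without EXTERNAL also deletes the data that Hive manages for it.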

Configure tHiveCreateTable_2 as follows. This will create a Hive table called products that references any files within the folder /user/talend/data/onlinetraining/products. Hive expects a directory containing files that all have the same schema.

For the Schema, the products metadata should be chosen. The Hive

schema needs to have the associated DB Types filled in. Click on the

Edit schema button and then select Change to built-in property and press

OK.

Select the appropriate DB Type for each column.

Click on the Advanced Settings tab and select the option to Create an

external table. This will create a Hive table that references the HDFS

file.

Configure tHiveCreateTable_3 as follows. This will create a Hive table called customer_product. This will not be an external table, since it is an output table. Notice that Set file location is not selected.

Run the job and verify that the Hive tables are created.

Step 3:

Select the Hive_Training connection, right-click, and select Retrieve Schema.

Click on Next and then select the tables that were created in the previous step.

Click Next and verify the schemas.

Create a new job called Exercise_06_Hive_ELT.

Drag/drop a tELTHiveInput component for each of customers_training and products to the Designer.

Drag/drop a tELTHiveMap component from Hive_Training to the Designer

Drag/drop a tELTHiveOutput component for customers_product to the

Designer.

Drag/drop a tLibraryLoad component to the Designer and configure as

follows. This jar file is needed in the job, but not available with the

default jars.

Connect the components together as follows. Note that the components are connected using a Link instead of a Row.

Double-click on the Hive_Training (tELTHiveMap) component. Click on the green + on the source side to add an alias for the source tables.

Select the table and give it an alias name. The alias name can be the

same name as the table. Do this for both of the tables.

After adding both source tables, the source side of the tELTHiveMap component should look like this.

Use the Automap button to map the data from the source. The target

should now look like this.

Totalamount is the sum of amount from the products table. Ordercount is the count of amount from the products table. Type these expressions in the associated Expression window.

Set up a join on the source side by dragging customernumber from customers to products. Check the Explicit box to specify an explicit join.

Any time a Sum and/or Count is used, a Group By clause must be created. For Hive (and other relational databases), all columns that are not part of the aggregation (sum, count) must be included in the Group By clause. This is done by clicking on the button and then adding the Group By statement. The easiest way to do this is to select all of the source columns that are part of the Group By statement and drop them in the clauses window. Next, add Group By in front of them and insert a comma between the columns.

To see the SQL statement that will be used when the job is executed, click on the Generated SQL Select query tab.
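The shape of such a query can be tried out locally. The Python sketch below uses the standard-library sqlite3 module as a stand-in for Hive, so it demonstrates the join and Group By pattern rather than the exact SQL that the tELTHiveMap component generates. The sample rows and the name column are hypothetical, and the ORDER BY is added only to make the printed result deterministic.

```python
import sqlite3

QUERY = """
SELECT c.customernumber,
       c.name,
       SUM(p.amount)   AS totalamount,
       COUNT(p.amount) AS ordercount
FROM customers_training c
INNER JOIN products p ON c.customernumber = p.customernumber
GROUP BY c.customernumber, c.name  -- every non-aggregated column is listed here
ORDER BY c.customernumber
"""


def run_group_by_demo():
    """Load hypothetical sample rows into SQLite and run the aggregate query."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers_training (customernumber INTEGER, name TEXT);
        CREATE TABLE products (customernumber INTEGER, amount REAL);
        INSERT INTO customers_training VALUES (1000001, 'Ada'), (1000002, 'Bob');
        INSERT INTO products VALUES (1000001, 10.0), (1000001, 5.5), (1000002, 3.0);
    """)
    rows = conn.execute(QUERY).fetchall()
    conn.close()
    return rows


print(run_group_by_demo())
```

SQLite tolerates a select column that is missing from the Group By clause, but Hive rejects such a query, which is why the clause lists both customernumber and name.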

Run the job and verify the results.

32

Вам также может понравиться

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5782)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (890)

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (265)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (399)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (344)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2219)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (119)

- AsicДокумент2 страницыAsicImmanuel VinothОценок пока нет

- Ensis DWG2462 DataSheetДокумент1 страницаEnsis DWG2462 DataSheetaldiОценок пока нет

- 11 Edition: Mcgraw-Hill/IrwinДокумент11 страниц11 Edition: Mcgraw-Hill/IrwinmanahilОценок пока нет

- The Tobacco World Vol 30 1910Документ355 страницThe Tobacco World Vol 30 1910loliОценок пока нет

- Moment Connection HSS Knife Plate 1-24-15Документ31 страницаMoment Connection HSS Knife Plate 1-24-15Pierre du Lioncourt100% (1)

- Will The Real Body Please Stand UpДокумент18 страницWill The Real Body Please Stand UpPhilip Finlay BryanОценок пока нет

- CWDM Vs DWDM Transmission SystemsДокумент4 страницыCWDM Vs DWDM Transmission Systemsdbscri100% (1)

- BABOK v3 GlossaryДокумент16 страницBABOK v3 GlossaryDzu XuОценок пока нет

- Transnational Crime Sem 8Документ17 страницTransnational Crime Sem 8KHUSHBOO JAINОценок пока нет

- Batch Programming Basics Part-1Документ34 страницыBatch Programming Basics Part-1Shantanu VishwanadhaОценок пока нет

- Maxwell's Equations Guide Modern TechДокумент8 страницMaxwell's Equations Guide Modern Techpaulsub63Оценок пока нет

- HandNet For WindowsДокумент146 страницHandNet For WindowsKeysierОценок пока нет

- Topic 1 - Introduction To M&E Systems PDFДокумент49 страницTopic 1 - Introduction To M&E Systems PDFSyamimi FsaОценок пока нет

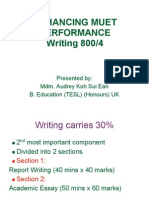

- Guidelines For Muet WritingДокумент35 страницGuidelines For Muet Writingkumutha93% (28)

- ATV303 Modbus Parameters S1A94575 01Документ14 страницATV303 Modbus Parameters S1A94575 01Đặng Cao MẫnОценок пока нет

- TwelveSky Finding Pointers and OffsetsДокумент20 страницTwelveSky Finding Pointers and OffsetsHenrik PedersenОценок пока нет

- Modelos Writing b2Документ11 страницModelos Writing b2CristinaОценок пока нет

- The Mystery of Belicena Villca / Official Version in English 2022Документ572 страницыThe Mystery of Belicena Villca / Official Version in English 2022Pablo Adolfo Santa Cruz de la Vega100% (1)

- Johor Upsr 2016 English ModuleДокумент147 страницJohor Upsr 2016 English ModulePuvanes VadiveluОценок пока нет

- Mathematics Upper Secondary4Документ5 страницMathematics Upper Secondary4fvictor1Оценок пока нет

- Frank, W. José Carlos MariáteguiДокумент4 страницыFrank, W. José Carlos MariáteguiErick Gonzalo Padilla SinchiОценок пока нет

- Data Flow Diagram (Music)Документ12 страницData Flow Diagram (Music)Brenda Cox50% (2)

- Manual (150im II)Документ222 страницыManual (150im II)Ricardo CalvoОценок пока нет

- And Founded A News Startup Called Narasi TV in 2018Документ5 страницAnd Founded A News Startup Called Narasi TV in 2018Nurul Sakinah Rifqayana AmruОценок пока нет

- HDL-BUS Pro UDP Protocal and Device Type (Eng) PDFДокумент12 страницHDL-BUS Pro UDP Protocal and Device Type (Eng) PDFAnonymous of8mnvHОценок пока нет

- MRP (Material Requirement Planning) - AДокумент30 страницMRP (Material Requirement Planning) - Amks210Оценок пока нет

- The Design of Hybrid Fabricated Girders Part 1 PDFДокумент3 страницыThe Design of Hybrid Fabricated Girders Part 1 PDFLingka100% (1)

- Sciencedirect: Mathematical Analysis of Trust Computing AlgorithmsДокумент8 страницSciencedirect: Mathematical Analysis of Trust Computing AlgorithmsThangakumar JОценок пока нет

- Progress Report Memo and Meeting Minutes Assignment - F19 35Документ2 страницыProgress Report Memo and Meeting Minutes Assignment - F19 35muhammadazharОценок пока нет

- 63 Tips To Get Rid of DepressionДокумент8 страниц63 Tips To Get Rid of Depressionalexiscooke585Оценок пока нет