
American Statistical Association, American Society For Quality, Taylor & Francis, Ltd. Technometrics


American Society for Quality

Review

Reviewed Work(s): Introductory Probability and Statistical Applications by Paul L. Meyer

Review by: William A. Ericson

Source: Technometrics, Vol. 8, No. 4 (Nov., 1966), pp. 720-722

Published by: Taylor & Francis, Ltd. on behalf of American Statistical Association and American Society for Quality

Stable URL: http://www.jstor.org/stable/1266656

Accessed: 24-01-2017 18:51 UTC

JSTOR is a not-for-profit service that helps scholars, researchers, and students discover, use, and build upon a wide range of content in a trusted digital archive. We use information technology and tools to increase productivity and facilitate new forms of scholarship. For more information about JSTOR, please contact support@jstor.org.

Your use of the JSTOR archive indicates your acceptance of the Terms & Conditions of Use, available at http://about.jstor.org/terms

American Statistical Association, American Society for Quality, Taylor & Francis, Ltd. are collaborating with JSTOR to digitize, preserve and extend access to Technometrics

This content downloaded from 14.139.155.215 on Tue, 24 Jan 2017 18:51:13 UTC

All use subject to http://about.jstor.org/terms

BOOK REVIEWS

720

require a good deal of thought. In our opinion, Henrici's book would benefit from inclusion of material on matrix computations and Gaussian integration formulas; Fröberg has very little on the propagation of round-off error. A distinctive feature of Henrici's book is that he makes distinctions between algorithms and theorems; most algorithms are accompanied by theorems which tend to emphasize the assumptions and limitations behind the algorithms. Henrici's book is valuable for its clear treatment of iteration, showing how many methods arise as improvements of the basic method of Successive Approximations. Fröberg, though less rigorous than Henrici, seems to assume a slightly greater amount of mathematical ability of his readers, except in some of the problems, when the situation is reversed.
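The remark about Successive Approximations can be illustrated with a small sketch; it is our own illustration, not code or notation from either book. It iterates x_{k+1} = g(x_k) for the hypothetical test equation x = cos x, first with g(x) = cos x and then with Newton's method written as successive approximations with an improved iteration function g(x) = x - f(x)/f'(x), where f(x) = x - cos x. The test equation, tolerance, and function names are assumptions chosen for illustration.

```python
import math
from typing import Callable, Tuple


def successive_approximations(g: Callable[[float], float], x0: float,
                              tol: float = 1e-12,
                              max_iter: int = 1000) -> Tuple[float, int]:
    """Iterate x_{k+1} = g(x_k) until successive iterates differ by less than tol.

    Returns the final iterate and the number of iterations used.
    """
    x = x0
    for k in range(1, max_iter + 1):
        x_next = g(x)
        if abs(x_next - x) < tol:
            return x_next, k
        x = x_next
    raise RuntimeError("no convergence within max_iter iterations")


# Hypothetical test equation x = cos(x), i.e. f(x) = x - cos(x) = 0.
def g_plain(x: float) -> float:
    """Basic successive approximations: x_{k+1} = cos(x_k)."""
    return math.cos(x)


def g_newton(x: float) -> float:
    """Newton's method as an improved iteration function, with f'(x) = 1 + sin(x)."""
    return x - (x - math.cos(x)) / (1.0 + math.sin(x))


if __name__ == "__main__":
    root_plain, n_plain = successive_approximations(g_plain, 1.0)
    root_newton, n_newton = successive_approximations(g_newton, 1.0)
    print(f"plain:  {root_plain:.12f} after {n_plain} iterations")
    print(f"Newton: {root_newton:.12f} after {n_newton} iterations")
```

Both iterations reach the same root; the basic iteration converges linearly (the error shrinks roughly by the factor |sin x| near the root), while the Newton form converges quadratically and needs far fewer steps.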

Duane Meeter

Florida State University

Introductory Probability and Statistical Applications, by Paul L. Meyer. Addison-Wesley Publishing Co., Inc., 1965, 339 pp., $8.75.

This text is basically designed for a one-semester introductory course in probability for engineering students who have the standard prerequisite of one year of calculus. Of the fifteen chapters, twelve concern the usual essentials of probability, one comprises a generally excellent introduction to applications in reliability theory, and the remaining three deal with statistics.

One finds here an elementary text which is distinguished by a number of excellent features, but is marred by an excessive number of errors, misprints, and omissions and by the author's failure to uniformly maintain the high quality of exposition which characterizes many sections. The very brief treatment of statistics attempts to cover too many topics and suffers thereby.

Among the commendable features one finds very lucid and perceptive discussions of many basic concepts. Examples include some of the discussion of conditional probability (page 34) and Section 7.9 on the correlation coefficient: what it measures and what it does not measure. Also, the author has included, although briefly, some discussion of models (§1.1); simulation (page 256); Taylor series approximations to means and variances (§7.7); and other topics (including brief tables of random digits, binomial and Poisson probabilities) which seem too often omitted in texts at this level. The presentation generally stays within the assumed mathematical prerequisite, and the author has included many discerning comments in the form of "notes." Finally, there are plenty of illustrative examples and over 330 unambiguously stated exercises, the majority of which are "applied," aimed by subject matter at an engineering audience, and provided with answers.

Unfortunately, one can find much to criticize. Here is a sampling:

First, the author adopts a somewhat vague version of the restrictive relative frequency view of probability, with no mention of alternatives. He is careful to point out that the observable relative frequency of an event A is, at best, an approximation to the probability, P(A), of that event (pp. 13, 16), and then refers the reader to the succeeding chapter (Chapter 2) for a discussion of how the assignment P(A) is made. In that chapter one finds only a brief discussion of the "equally likely" assumption. On page 50 the author asserts that probabilities associated with points in a sample space are in essence determined by "nature." In general, the reader is given little help in bridging the gap between the mathematical model and real applications. Finally, in many examples and problems the thoughtful student will find it difficult to reconcile the numerical probabilities (always given) with either a relative frequency interpretation or a symmetry (equally likely) assumption on the sample space.

Second, this text not only contains a large number of misprints and errors, but the author often leaves the reader with erroneous impressions. Some examples: the author gives the impression that only for continuous sample spaces does zero probability not imply the null event (p. 58); it is asserted (p. 91) that the intersection of the plane y = c with the joint density surface z = f(x, y) is precisely the conditional p.d.f., g(x | c); Theorem 6.1 (p. 93) is incorrect, since the conditional probabilities may not be defined; in Example 7.12 (p. 119), the event that exactly one resistor fails seems to have been ignored; the author fails to point out the condition of absolute convergence for the existence of E(X) in the discrete case (p. 109). Also, the author omits an independence condition or the inclusion of cross partial derivatives in Theorem 7.7 (p. 128); Theorem 8.4 (p. 158) is false as stated and its proof is left to the reader (Problem 8.18), yet the same result is correctly formulated as Problem 4.4; his example of the normal approximation to the binomial (pp. 230-231) is one where the Poisson is considerably better, and he advocates approximating the probability of zero by the normal area from -1/2 to 1/2, instead of the usual and better normal tail area; the author seems to overlook the fact that for any observable sample proportion and confidence coefficient in (0, 1) there are always two distinct roots to the quadratic which yields approximate confidence intervals for a proportion (p. 288). On page 309 there is an example of the chi-square goodness-of-fit test for normality in which the author uses maximum likelihood estimates based on ungrouped data rather than on cell frequencies. Chernoff and Lehmann [Annals of Math. Stat., 25, 579] have shown that the asymptotic distribution of the test statistic, based on such efficient estimators, is not chi-square and, in particular, for the normal case may lead to serious underestimates of the probability of a type I error.
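Two of the technical points above can be checked numerically with a short sketch. Assuming X ~ Binomial(n, p), it compares the exact P(X = 0) with the Poisson approximation, the usual continuity-corrected normal tail area P(Y < 1/2), and the interval area P(-1/2 < Y < 1/2), where Y is normal with the binomial mean and variance. It also solves the quadratic (p̂ - p)² = z²p(1 - p)/n obtained by squaring the approximate interval |p̂ - p| ≤ z·sqrt(p(1 - p)/n); we assume this is the quadratic meant on p. 288. The function names and the parameter values in the usage lines are hypothetical, not Meyer's example.

```python
import math
from typing import Dict, Tuple


def normal_cdf(z: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def p_zero_approximations(n: int, p: float) -> Dict[str, float]:
    """Exact P(X = 0) for X ~ Binomial(n, p) and three approximations to it."""
    mean = n * p
    sd = math.sqrt(n * p * (1 - p))
    exact = (1 - p) ** n
    poisson = math.exp(-mean)
    # Usual continuity-corrected tail area: P(Y < 1/2).
    normal_tail = normal_cdf((0.5 - mean) / sd)
    # Interval version P(-1/2 < Y < 1/2): drops the normal mass below -1/2.
    normal_interval = normal_tail - normal_cdf((-0.5 - mean) / sd)
    return {"exact": exact, "poisson": poisson,
            "normal_tail": normal_tail, "normal_interval": normal_interval}


def proportion_ci_roots(p_hat: float, n: int, z: float) -> Tuple[float, float]:
    """Roots in p of (p_hat - p)^2 = z^2 p (1 - p) / n, smaller root first."""
    c = z * z / n
    # Rearranged: (1 + c) p^2 - (2 p_hat + c) p + p_hat^2 = 0.
    a = 1.0 + c
    b = -(2.0 * p_hat + c)
    const = p_hat * p_hat
    # Discriminant simplifies to c * (4 p_hat (1 - p_hat) + c), which is > 0
    # for every p_hat in [0, 1] whenever z > 0: two distinct roots always.
    root = math.sqrt(b * b - 4.0 * a * const)
    return (-b - root) / (2.0 * a), (-b + root) / (2.0 * a)


if __name__ == "__main__":
    print(p_zero_approximations(20, 0.1))     # hypothetical n and p
    for p_hat in (0.0, 0.3, 1.0):             # includes the extreme proportions
        print(p_hat, proportion_ci_roots(p_hat, 50, 1.96))
```

With these hypothetical values the tail area lands closer to the exact P(X = 0) than the interval area does; which of the normal and Poisson approximations wins depends on n and p, so other values are worth trying. Even for p̂ = 0 or p̂ = 1 the quadratic has two distinct roots (for p̂ = 0 they are 0 and c/(1 + c)).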

Next, this text also contains non-standard and omitted definitions. One finds no mention here of the terms median or percentiles; on page 35 the author includes in his definition of a partition the condition that the probability of each element be positive; the geometric interpretation of the correlation coefficient (page 134) is at best confusing; and often the legitimate set of parameter values of a distribution is not mentioned, e.g., the Weibull distribution (page 216). There is a definition of the j-th largest observation (page 249), but no mention of the term "order statistic." In discussing simulation (page 256), the author describes a table of random numbers as merely "tables ... compiled in a way to make them suitable for […] purpose." (The purpose is to draw a random sample.) While the author is careful to distinguish a random variable, X, from its generic value, x, he uses the same term estimate for both the random variable and its sample value. In his chapter on hypothesis testing the only null hypotheses considered, with little discussion, are of the simple form θ = θ₀, exactly those for which either the hypothesis should not be taken literally and/or a test is silly, or at best may be misleading. Also, here one finds an operating characteristic function defined for parameter values not in either the null or the alternative hypothesis, and no mention of the term power function.

Also, the text contains inconsistencies in level of presentation, imprecisely stated theorems, a general lack of references, and awkward proofs and arguments. One finds, for example, on page 219 a detailed six-line proof of a result which is an immediate application of his Theorem 1.3 (p. 14), and on page 239 a detailed argument (including a figure!) showing that t₁ > 0 and t - t₁ > 0 imply 0 < t₁ < t; yet the derivation of multinomial coefficients (p. 28) and a proof that the 't' density approaches a unit normal density (p. 284) are "left to the reader." On page 160, the hypergeometric distribution is introduced in terms of sampling for defectives (instead of more generally, as for other distributions), the range of the random variable is never stated clearly, and the derivations of the mean, the variance, and the binomial approximation are left to the reader as Problem 8.19. With the tools the author provides, the derivation of the variance will be extremely difficult for students at this level. The discussion of the […] distribution is handled awkwardly (p. 168); a simpler approach would be to introduce the […] normal first. A central limit theorem for independent random variables is stated (p. 232) with the annoying phrase "under certain general conditions"; no references are given for […] finding these conditions. An outline proof is sketched for the special case of identical distributions, in which the existence of a moment generating function is assumed but not mentioned, reference is made to an earlier Theorem 10.3 while reference to the more crucial Theorem 1[…] is omitted, and the never-defined phrase "convergence in distribution" is used. On page 265 one finds a proof that X̄ is the minimum variance unbiased linear estimate of μ, using calculus methods (beyond the first year?) for minimizing a function of several variables subject to a constraint, where a simple and elegant algebraic demonstration could easily have been provided. While the author promises (preface) at least to sketch a proof of each theorem, Theorem 14.1 (p. 264) and others are stated without any indication of a proof or inclusion of references; in this particular case a simple application of Chebyshev's inequality would suffice to give the essence of an argument.
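As one example of the kind of algebraic demonstration meant for the sample mean (our sketch, not necessarily the argument the reviewer had in mind), assume X₁, ..., Xₙ are uncorrelated with common mean μ and variance σ², and consider linear estimates μ̂ = a₁X₁ + ... + aₙXₙ. Unbiasedness for every μ forces the weights to sum to 1, and completing the square then bounds the variance without any calculus:

```latex
\begin{align*}
E(\hat\mu) &= \mu \sum_{i=1}^{n} a_i, \qquad
\operatorname{Var}(\hat\mu) = \sigma^2 \sum_{i=1}^{n} a_i^2,\\
\sum_{i=1}^{n} a_i^2
  &= \sum_{i=1}^{n}\Bigl(a_i - \frac{1}{n}\Bigr)^2 + \frac{2}{n}\sum_{i=1}^{n} a_i - \frac{1}{n}
   = \sum_{i=1}^{n}\Bigl(a_i - \frac{1}{n}\Bigr)^2 + \frac{1}{n}
   \quad \text{when } \sum_{i=1}^{n} a_i = 1,\\
\operatorname{Var}(\hat\mu) &\ge \frac{\sigma^2}{n} = \operatorname{Var}(\bar X),
   \quad \text{with equality iff } a_i = \tfrac{1}{n} \text{ for all } i .
\end{align*}
```

The only step is the identity for the sum of squared deviations of the weights about 1/n, which first-year students can verify by expanding the square.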

Finally, here is a listing of some misprints, a number of which may cause a student moments of anguish:


p. 60, line 10: numerator should be P(1/3 < X < 1/2).

p. 98, line 22: Chapter 12 not Chapter 11.

p. 106, line 3 of Example 7.1: k > 0 not k 7 0.

p. 113, in second note: there are 3 three's and the sum should be 25.

p. 117, line 12: E(E) not E(e).

p. 127, line 3: Eq. (7.19) not Eq. (7.14).

p. 129, penultimate line: C2 = k/a2 not C = ka2.

p. 134, expression for ρ: = not -.

p. 159, definition of Z, should read: "... up to and including the first occurrence of A".

p. 178, line 8: r > 0 not r > 1.

p. 183, both divisors in expression for P(Y < 2) should be 0.1.

p. 185, expression for P(Y = k) : Xk is omitted from numerator.

p. 185, expression for f(x): term I/ao is omitted.

p. 186, line 3: term 1/a is omitted.

p. 205, problem 8C): replace My(t) by Mx(t).

p. 217, line 2: Eq. (11.5) not Eq. (11.4).

p. 221, line 18: Theorem 6.5 not Theorem 6.10.

p. 253, line 11: 0.99005 not 0.9905.

p. 254, line 9: (, - X) in second term should be squared.

p. 255, line 4 of Example 13.2: delete dx.

p. 273, expression for a: no bars over the X's.

p. 276, line 4 of Example 14.14: Eq. (14.4) not Eq. (14.2).

p. 298, first display of Case 1: uo not IA.

p. 298, line 1 of Case 2: n not a.

p. 306, last expression for D2: only the numerator is squared.

p. 333, answer to problem 9.6: E(Y) = (2/π)^(1/2).

p. 333, answer to problem 10.1: + not *.

William A. Ericson

The University of Michigan

Matrix Algebra for the Biological Sciences, by S. R. Searle. John Wiley & Sons, Inc., 1966, 296 pp., $9.95.

This is a well-written introduction to the concepts of matrix algebra and the use of these concepts in the solution of problems arising in statistics and the biological sciences. Although it is aimed primarily at the biologist, it would seem to me to be useful to anyone who is concerned with the statistical analysis of data. Although the last two chapters assume some knowledge of statistics, the main body of the book is aimed at those with a minimum background in mathematics.

The book begins with a description of matrices and vectors, and a description of the notation to be used. It then describes the algebraic operations of addition, subtraction, and multiplication of matrices. Linear transformations, quadratic forms, and variance-covariance matrices are introduced early in the book. Following a chapter on determinants, the concept of the inverse of a square matrix is presented and discussed.

With this general background as a base, the book then begins a discussion of the solution of systems of linear equations. In this connection the concepts of independence and rank are examined through the use of elementary operators. Following this is a discussion of equivalence and the reduction of a matrix to equivalent canonical form.

At this point a relatively new topic is introduced: generalized inverses. Because of the usefulness of generalized inverses in solving a number of problems which arise in statistical analysis, this chapter forms a particularly interesting part of the book. Further, it is a topic which is not often covered in books at the elementary level.
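A generalized inverse of a matrix A is any matrix G with AGA = A; if the system Ax = b is consistent, then x = Gb is a solution even when A is singular, since AGb = AGAx = Ax = b. The sketch below is our own illustration, not code or notation from Searle's book. It builds G from a nonsingular principal minor whose order equals the rank of A (one standard construction, assumed here), then uses it to solve the singular normal equations X'Xb = X'y of a small hypothetical one-way classification. Exact rational arithmetic from Python's `fractions` module avoids round-off; all names and data are illustrative.

```python
from fractions import Fraction
from typing import List, Sequence

Matrix = List[List[Fraction]]


def to_matrix(rows: Sequence[Sequence[int]]) -> Matrix:
    """Convert integer rows to a matrix of exact rationals."""
    return [[Fraction(x) for x in row] for row in rows]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Ordinary matrix product over the rationals."""
    return [[sum((a[i][k] * b[k][j] for k in range(len(b))), Fraction(0))
             for j in range(len(b[0]))] for i in range(len(a))]


def invert(m: Matrix) -> Matrix:
    """Gauss-Jordan inverse of a nonsingular square matrix (StopIteration if singular)."""
    n = len(m)
    aug = [row[:] + [Fraction(int(i == j)) for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        lead = aug[col][col]
        aug[col] = [x / lead for x in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def g_inverse_from_minor(a: Matrix, idx: Sequence[int]) -> Matrix:
    """Return G with A G A = A, built from a principal minor of A.

    Assumes the principal submatrix on rows/columns `idx` is nonsingular and
    its order equals rank(A); G holds that submatrix's inverse in the same
    positions and zeros elsewhere.
    """
    n = len(a)
    inv = invert([[a[i][j] for j in idx] for i in idx])
    g = [[Fraction(0)] * n for _ in range(n)]
    for r, i in enumerate(idx):
        for c, j in enumerate(idx):
            g[i][j] = inv[r][c]
    return g


# Hypothetical one-way classification: columns are (mean, group 1, group 2),
# with two observations in group 1 and one in group 2.
X = to_matrix([[1, 1, 0], [1, 1, 0], [1, 0, 1]])
y = to_matrix([[4], [6], [9]])
Xt = [list(col) for col in zip(*X)]
XtX = matmul(Xt, X)   # singular (rank 2), so it has no ordinary inverse
Xty = matmul(Xt, y)
G = g_inverse_from_minor(XtX, [1, 2])
b = matmul(G, Xty)    # one solution of the normal equations X'X b = X'y
```

Here the solution sets the general-mean term to zero and reproduces the two group means (5 and 9). A different generalized inverse would give a different solution vector, but estimable quantities such as the difference between the two group effects are the same for every choice.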

The rest of the main body of the book is concerned with latent roots and vectors and a number of miscellaneous topics. These include orthogonal matrices, idempotent matrices, nilpotent matrices, direct products, and direct sums. All of these topics have application in statistical analysis.

This content downloaded from 14.139.155.215 on Tue, 24 Jan 2017 18:51:13 UTC

All use subject to http://about.jstor.org/terms

Вам также может понравиться

- An Introduction to Probability and Stochastic ProcessesОт EverandAn Introduction to Probability and Stochastic ProcessesРейтинг: 4.5 из 5 звезд4.5/5 (2)

- Stationary and Related Stochastic Processes: Sample Function Properties and Their ApplicationsОт EverandStationary and Related Stochastic Processes: Sample Function Properties and Their ApplicationsРейтинг: 4 из 5 звезд4/5 (2)

- Jep 15 4 3Документ8 страницJep 15 4 3Frann AraОценок пока нет

- Topics Feb21Документ52 страницыTopics Feb21vivek thoratОценок пока нет

- 6134 Math StatsДокумент4 страницы6134 Math Statscsrajmohan2924Оценок пока нет

- The Forecasting Dictionary: J. Scott Armstrong (Ed.) : Norwell, MA: Kluwer Academic Publishers, 2001Документ62 страницыThe Forecasting Dictionary: J. Scott Armstrong (Ed.) : Norwell, MA: Kluwer Academic Publishers, 2001Rafat Al-SroujiОценок пока нет

- 1998 Material Ease 5Документ4 страницы1998 Material Ease 5Anonymous T02GVGzBОценок пока нет

- Inequalities and Extremal Problems in Probability and Statistics: Selected TopicsОт EverandInequalities and Extremal Problems in Probability and Statistics: Selected TopicsОценок пока нет

- Econometrics For Dummies by Roberto PedaДокумент6 страницEconometrics For Dummies by Roberto Pedamatias ColasoОценок пока нет

- Koenker, R., & Bassett, G. (1978) - Regression QuantilesДокумент24 страницыKoenker, R., & Bassett, G. (1978) - Regression QuantilesIlham AtmajaОценок пока нет

- Inference for Heavy-Tailed Data: Applications in Insurance and FinanceОт EverandInference for Heavy-Tailed Data: Applications in Insurance and FinanceОценок пока нет

- This Content Downloaded From 167.249.42.226 On Thu, 12 May 2022 14:24:33 976 12:34:56 UTCДокумент5 страницThis Content Downloaded From 167.249.42.226 On Thu, 12 May 2022 14:24:33 976 12:34:56 UTCFabiana BLОценок пока нет

- Interpolation and Extrapolation Optimal Designs V1: Polynomial Regression and Approximation TheoryОт EverandInterpolation and Extrapolation Optimal Designs V1: Polynomial Regression and Approximation TheoryОценок пока нет

- Cointegracion MacKinnon, - Et - Al - 1996 PDFДокумент26 страницCointegracion MacKinnon, - Et - Al - 1996 PDFAndy HernandezОценок пока нет

- FCM2063 - Printed NotesДокумент126 страницFCM2063 - Printed NotesRenuga SubramaniamОценок пока нет

- Uncertainty Quantification and Stochastic Modeling with MatlabОт EverandUncertainty Quantification and Stochastic Modeling with MatlabОценок пока нет

- Introduction to Probability Theory with Contemporary ApplicationsОт EverandIntroduction to Probability Theory with Contemporary ApplicationsРейтинг: 2 из 5 звезд2/5 (2)

- Learning Probabilistic NetworksДокумент38 страницLearning Probabilistic NetworksFrancisco AragaoОценок пока нет

- Statistical Inference for Models with Multivariate t-Distributed ErrorsОт EverandStatistical Inference for Models with Multivariate t-Distributed ErrorsОценок пока нет

- BootДокумент28 страницBootShweta SinghОценок пока нет

- Hall TheoreticalComparisonBootstrap 1988Документ28 страницHall TheoreticalComparisonBootstrap 1988pabloninoОценок пока нет

- 1897 - Yule - On The Theory of CorrelationДокумент44 страницы1897 - Yule - On The Theory of CorrelationNilotpal N SvetlanaОценок пока нет

- Evidence Contrary To The Statistical View of Boosting: David MeaseДокумент71 страницаEvidence Contrary To The Statistical View of Boosting: David Measemn720Оценок пока нет

- Wiley Journal of Applied Econometrics: This Content Downloaded From 128.143.23.241 On Sun, 08 May 2016 12:18:10 UTCДокумент19 страницWiley Journal of Applied Econometrics: This Content Downloaded From 128.143.23.241 On Sun, 08 May 2016 12:18:10 UTCmatcha ijoОценок пока нет

- Glynn 2012 CA Correspondence AnalysisДокумент47 страницGlynn 2012 CA Correspondence AnalysisHolaq Ola OlaОценок пока нет

- FCM2063 Printed Notes.Документ129 страницFCM2063 Printed Notes.Didi AdilahОценок пока нет

- Risk and Reliability AnalysisДокумент812 страницRisk and Reliability AnalysisJANGJAE LEE100% (1)

- Statistical Inference in Financial and Insurance Mathematics with RОт EverandStatistical Inference in Financial and Insurance Mathematics with RОценок пока нет

- Roger Koenker, Gilbert Bassett and Jr.1978Документ19 страницRoger Koenker, Gilbert Bassett and Jr.1978Judith Abisinia MaldonadoОценок пока нет

- Mislevy 1986Документ30 страницMislevy 1986Bulan BulawanОценок пока нет

- Donald L Smith Nuclear Data Guidelines PDFДокумент8 страницDonald L Smith Nuclear Data Guidelines PDFjradОценок пока нет

- On The Mathematical Foundations of Theoretical Statistics - Fisher - pt1921Документ61 страницаOn The Mathematical Foundations of Theoretical Statistics - Fisher - pt1921donpablorОценок пока нет

- A Manifesto For The Equifinality ThesisДокумент31 страницаA Manifesto For The Equifinality ThesisRajkanth RamanujamОценок пока нет

- This Content Downloaded From 51.148.132.89 On Sat, 24 Apr 2021 03:48:33 UTCДокумент12 страницThis Content Downloaded From 51.148.132.89 On Sat, 24 Apr 2021 03:48:33 UTCNon AktifОценок пока нет

- Estimation and Hypothesis Testing of Cointegration Vectors in Gaussian Vector Auto Regressive ModelsДокумент31 страницаEstimation and Hypothesis Testing of Cointegration Vectors in Gaussian Vector Auto Regressive ModelsMenaz AliОценок пока нет

- Dissertation Mit BachelorДокумент6 страницDissertation Mit BachelorWhereToBuyResumePaperUK100% (1)

- A Comparison of Ten Methods For Determining The Number of Factors in Exploratory Factor AnalysisДокумент15 страницA Comparison of Ten Methods For Determining The Number of Factors in Exploratory Factor Analysissignup001Оценок пока нет

- Chapter 20 Structural Reliability TheoryДокумент16 страницChapter 20 Structural Reliability Theoryjape162088Оценок пока нет

- Dembo NotesДокумент384 страницыDembo NotesAnthony Lee ZhangОценок пока нет

- Substantive Theory and Constructive Measures: A Collection of Chapters and Measurement Commentary on Causal ScienceОт EverandSubstantive Theory and Constructive Measures: A Collection of Chapters and Measurement Commentary on Causal ScienceОценок пока нет

- Verbal ModelsДокумент9 страницVerbal ModelsNilesh AsnaniОценок пока нет

- Likelihood-Ratio TestДокумент5 страницLikelihood-Ratio Testdev414Оценок пока нет

- A Guide To The Applied EconomistДокумент29 страницA Guide To The Applied EconomisthalhoshanОценок пока нет

- An Algorithmic Approach to Nonlinear Analysis and OptimizationОт EverandAn Algorithmic Approach to Nonlinear Analysis and OptimizationОценок пока нет

- The Nuts and Bolts of Proofs: An Introduction to Mathematical ProofsОт EverandThe Nuts and Bolts of Proofs: An Introduction to Mathematical ProofsРейтинг: 4.5 из 5 звезд4.5/5 (2)

- Thesis Using Linear RegressionДокумент7 страницThesis Using Linear Regressionpamelawrightvirginiabeach100% (1)

- Simplified Statistics For Small Numbers of Observations: R. B. Dean, and W. J. DixonДокумент4 страницыSimplified Statistics For Small Numbers of Observations: R. B. Dean, and W. J. Dixonmanuelq9Оценок пока нет

- Sad Song: What Ate Toijf C.sndfkjhsodfn VДокумент1 страницаSad Song: What Ate Toijf C.sndfkjhsodfn VrameshОценок пока нет

- Presentation 1Документ1 страницаPresentation 1rameshОценок пока нет

- Presentation 1Документ1 страницаPresentation 1rameshОценок пока нет

- Presentation 1Документ1 страницаPresentation 1rameshОценок пока нет

- Presentation 1Документ1 страницаPresentation 1rameshОценок пока нет

- Presentation 1Документ1 страницаPresentation 1rameshОценок пока нет

- DS, C, C++, Aptitude, Unix, RDBMS, SQL, CN, OsДокумент176 страницDS, C, C++, Aptitude, Unix, RDBMS, SQL, CN, Osapi-372652067% (3)

- Presentation 1Документ1 страницаPresentation 1rameshОценок пока нет

- Presentation 1Документ1 страницаPresentation 1rameshОценок пока нет

- Chapter XVI QuestionsДокумент3 страницыChapter XVI Questionsramesh204Оценок пока нет

- Chapter XV SolutionsДокумент1 страницаChapter XV SolutionsrameshОценок пока нет

- EE243 MEE Jan 2016 Assignment 01 FinalДокумент3 страницыEE243 MEE Jan 2016 Assignment 01 FinalrameshОценок пока нет

- Presentation 1Документ1 страницаPresentation 1rameshОценок пока нет

- Challenging Star Pattern Programs in CДокумент16 страницChallenging Star Pattern Programs in Cरवींद्र नलावडेОценок пока нет

- Quote For Faultdetection NItk 11 2Документ1 страницаQuote For Faultdetection NItk 11 2rameshОценок пока нет

- New Text DocumentДокумент1 страницаNew Text DocumentrameshОценок пока нет

- Motivation LetterДокумент1 страницаMotivation LetterrameshОценок пока нет

- Home Work and Solution For OPAMPДокумент6 страницHome Work and Solution For OPAMPrafikОценок пока нет

- Resistor ChartsДокумент5 страницResistor ChartsmarlonfatnetzeronetОценок пока нет

- Week 3Документ6 страницWeek 3rameshОценок пока нет

- How To Use Dev-C++Документ7 страницHow To Use Dev-C++QaiserОценок пока нет

- Power Flow 21Документ10 страницPower Flow 21sarathoonvОценок пока нет

- Application Form SSC MTS PostsДокумент3 страницыApplication Form SSC MTS PostsrameshОценок пока нет

- Lu Example 2Документ1 страницаLu Example 2rameshОценок пока нет

- Subspaces of R: ExampleДокумент7 страницSubspaces of R: ExamplerameshОценок пока нет

- EE243 Math For Electrical EngineersДокумент2 страницыEE243 Math For Electrical EngineersrameshОценок пока нет

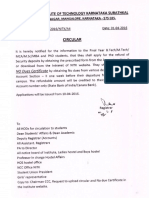

- Student Refund CircularДокумент1 страницаStudent Refund CircularrameshОценок пока нет

- Slides (Shubanga)Документ57 страницSlides (Shubanga)rameshОценок пока нет

- Steps To Install MatlabДокумент2 страницыSteps To Install MatlabShyam RadsunОценок пока нет

- Faulkner Sound and FuryДокумент3 страницыFaulkner Sound and FuryRahul Kumar100% (1)

- Muharram Sermon - Imam Ahmad Al Hassan A.SДокумент20 страницMuharram Sermon - Imam Ahmad Al Hassan A.Smali1988Оценок пока нет

- Introduction To Game Theory: Matigakis ManolisДокумент46 страницIntroduction To Game Theory: Matigakis ManolisArmoha AramohaОценок пока нет

- The Problem of EvilДокумент13 страницThe Problem of Evilroyski100% (2)

- Seeing With in The World Becoming LittlДокумент23 страницыSeeing With in The World Becoming LittlMarilia PisaniОценок пока нет

- Assessment in The Primary SchoolДокумент120 страницAssessment in The Primary SchoolMizan BobОценок пока нет

- College Graduation SpeechДокумент2 страницыCollege Graduation SpeechAndre HiyungОценок пока нет

- Physics Assessment 3 Year 11 - Vehicle Safety BeltsДокумент5 страницPhysics Assessment 3 Year 11 - Vehicle Safety Beltsparacin8131Оценок пока нет

- MAE552 Introduction To Viscous FlowsДокумент5 страницMAE552 Introduction To Viscous Flowsakiscribd1Оценок пока нет

- Electrical Theory - Learning OutcomesДокумент6 страницElectrical Theory - Learning OutcomesonaaaaangОценок пока нет

- Garry L. Hagberg-Fictional Characters, Real Problems - The Search For Ethical Content in Literature-Oxford University Press (2016) PDFДокумент402 страницыGarry L. Hagberg-Fictional Characters, Real Problems - The Search For Ethical Content in Literature-Oxford University Press (2016) PDFMaja Bulatovic100% (2)

- Organizational Development InterventionsДокумент57 страницOrganizational Development InterventionsAmr YousefОценок пока нет

- PIOT Remotely GlobalДокумент12 страницPIOT Remotely Globaletioppe50% (2)

- Aristotle Nicomachean EthicsДокумент10 страницAristotle Nicomachean EthicsCarolineKolopskyОценок пока нет

- 3 Days of DarknessДокумент2 страницы3 Days of DarknessIrisGuiang100% (3)

- Local Networks: Prepared By: Propogo, Kenneth M. Burce, Mary Jane PДокумент20 страницLocal Networks: Prepared By: Propogo, Kenneth M. Burce, Mary Jane PPatricia Nicole Doctolero CaasiОценок пока нет

- Crisis ManagementДокумент9 страницCrisis ManagementOro PlaylistОценок пока нет

- Makalah Narative Seri 2Документ9 страницMakalah Narative Seri 2Kadir JavaОценок пока нет

- Yes, Economics Is A Science - NYTimes PDFДокумент4 страницыYes, Economics Is A Science - NYTimes PDFkabuskerimОценок пока нет

- Clinical Implications of Adolescent IntrospectionДокумент8 страницClinical Implications of Adolescent IntrospectionJoshua RyanОценок пока нет

- Habit 1 - Be Proactive: Stephen CoveyДокумент7 страницHabit 1 - Be Proactive: Stephen CoveyAmitrathorОценок пока нет

- CS 213 M: Introduction: Abhiram RanadeДокумент24 страницыCS 213 M: Introduction: Abhiram RanadeTovaОценок пока нет

- David ch1 Revised1Документ18 страницDavid ch1 Revised1Abdulmajeed Al-YousifОценок пока нет

- Nieva Vs DeocampoДокумент5 страницNieva Vs DeocampofemtotОценок пока нет

- The Permanent SettlementДокумент7 страницThe Permanent Settlementsourabh singhal100% (2)

- Plato's Theory of ImitationДокумент2 страницыPlato's Theory of ImitationAnkitRoy82% (17)

- Assignment 2research Design2018Документ3 страницыAssignment 2research Design2018artmis94Оценок пока нет

- Leadership Theories and Styles: A Literature Review: January 2016Документ8 страницLeadership Theories and Styles: A Literature Review: January 2016Essa BagusОценок пока нет

- The Downfall of The Protagonist in Dr. FaustusДокумент13 страницThe Downfall of The Protagonist in Dr. FaustusRaef Sobh AzabОценок пока нет