A beginner's guide to artificial intelligence, machine

learning, and cognitive computing

M. Tim Jones June 01, 2017

Get an overview of the history of artificial intelligence as well as the latest in neural network

and deep learning approaches. Learn why, although AI and machine learning have had their

ups and downs, new approaches like deep learning and cognitive computing have significantly

raised the bar in these disciplines.

For millennia, humans have pondered the idea of building intelligent machines. Ever since, artificial

intelligence (AI) has had highs and lows, demonstrated successes and unfulfilled potential. Today,

the news is filled with the application of machine learning algorithms to new problems. From

cancer detection and prediction to image understanding and summarization and natural language

processing, AI is empowering people and changing our world.

The history of modern AI has all the elements of a great drama. Beginning in the 1950s with a

focus on thinking machines and interesting characters like Alan Turing and John von Neumann, AI

began its first rise. Decades of booms and busts and impossibly high expectations followed, but AI

and its pioneers pushed forward. AI is now exposing its true potential, focusing on applications and

delivering technologies like deep learning and cognitive computing.

This article explores some of the important aspects of AI and its subfields. Let's begin with a

timeline of AI, and then dig into each of these elements.

Timeline of modern AI

Beginning in the 1950s, modern AI focused on what was called strong AI, which referred to AI that

could generally perform any intellectual task that a human could. The lack of progress in strong AI

eventually led to what's called weak AI, or applying AI techniques to narrower problems. Until the

1980s, AI research was split between these two paradigms. But, around 1980, machine learning

became a prominent area of research, its purpose to give computers the ability to learn and build

models so that they could perform activities like prediction within specific domains.

© Copyright IBM Corporation 2017 Trademarks

A beginner's guide to artificial intelligence, machine learning, and Page 1 of 10

cognitive computing

developerWorks® ibm.com/developerWorks/

Figure 1. A timeline of modern artificial intelligence

Building on research from both AI and machine learning, deep learning emerged around 2000.

Computer scientists used neural networks in many layers with new topologies and learning

methods. This evolution of neural networks has successfully solved complex problems in various

domains.

In the past decade, cognitive computing has emerged, the goal of which is to build systems that

can learn and naturally interact with humans. IBM Watson demonstrated cognitive computing

by defeating world-class opponents at the game Jeopardy.

In this tutorial, I'll explore each of these areas and explain some of the important algorithms that

have driven their success.

Foundational AI

Research prior to 1950 introduced the idea that the brain consisted of an electrical network of

pulses that fired and somehow orchestrated thought and consciousness. Alan Turing showed that

any computation could be implemented digitally. The idea, then, was that building a machine that could

mimic the human brain couldn’t be far off.

Much early research focused on this strong aspect of AI, but this period also introduced the

foundational concepts that all machine learning and deep learning are built on today.

Figure 2. Timeline of artificial intelligence approaches to 1980

AI as search

Many problems in AI can be solved through brute-force search (such as depth-first or breadth-first

search). However, given the size of the search space for even moderate problems, basic search quickly

suffers. One of the earliest examples of AI as search was the development of a checkers-playing

program. Arthur Samuel built the first such program on the IBM 701 Electronic Data Processing

Machine, implementing an optimization to search trees called alpha-beta pruning. His program

also recorded the reward for a specific move, allowing the application to learn with each game

played (making it the first self-learning program). To increase the rate at which the program

learned, Samuel programmed it to play itself, increasing its ability to play and learn.

Although you can successfully apply search to many simple problems, the approach quickly fails

as the number of choices increases. Take the simple game of tic-tac-toe as an example. At the

start of a game, there are nine possible moves. Each move results in eight possible countermoves,

and so on. Ignoring wins that end a game early, and without removing duplicates that arise from rotation,

the full tree of moves for tic-tac-toe contains 9! = 362,880 move sequences. If you then extend this same thought experiment to chess or Go, you

quickly see the downside of search.
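
To make the size of this search space concrete, the following is a minimal sketch of an exhaustive depth-first search over tic-tac-toe. The names `has_won` and `count_game_tree` are illustrative and not from the article. Unlike the 9! upper bound, this sketch stops a branch as soon as a player wins, so it reports fewer finished games than 362,880.

```python
from math import factorial

# The eight winning lines on a 3x3 board indexed 0..8.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def has_won(board, player):
    """Return True if `player` occupies any complete line."""
    return any(board[a] == board[b] == board[c] == player for a, b, c in LINES)


def count_game_tree(board=None, player="X"):
    """Depth-first search of every legal game.

    Returns (finished_games, nodes), where nodes counts every position
    in the tree rooted at `board`, including `board` itself.
    """
    if board is None:
        board = [None] * 9
    other = "O" if player == "X" else "X"
    games, nodes = 0, 1  # count the current position
    for square in range(9):
        if board[square] is not None:
            continue
        board[square] = player
        if has_won(board, player) or all(c is not None for c in board):
            games += 1  # terminal position: a win or a full board
            nodes += 1
        else:
            sub_games, sub_nodes = count_game_tree(board, other)
            games += sub_games
            nodes += sub_nodes
        board[square] = None  # undo the move before trying the next one
    return games, nodes


games, nodes = count_game_tree()
print("upper bound 9! =", factorial(9))
print("finished games =", games, "positions visited =", nodes)
```

Even for this tiny game the search visits hundreds of thousands of positions, which is why pruning techniques such as the alpha-beta pruning mentioned above matter for larger games.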

Perceptrons

The perceptron was an early supervised learning algorithm for single-layer neural networks.

Given an input feature vector, the perceptron algorithm could learn to classify inputs as belonging

to a specific class. Using a training set, the network's weights and bias could be updated for

linear classification. The perceptron was first implemented for the IBM 704, and then on custom

hardware for image recognition.

Figure 3. Perceptron and linear classification

As a linear classifier, the perceptron could solve only linearly separable problems. The key example

of the limitations of the perceptron was its inability to learn an exclusive OR (XOR) function.

Multilayer perceptrons solved this problem and paved the way for more complex algorithms,

network topologies, and deep learning.
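
The weight and bias updates described above can be sketched in a few lines. This is a minimal illustration of the classic perceptron learning rule, not the original IBM 704 implementation; the function names, the learning rate, and the epoch limit are assumptions chosen for the example. It learns AND (which is linearly separable) but cannot learn XOR.

```python
def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, lr=1.0, max_epochs=100):
    """Perceptron learning rule on a list of (inputs, target) pairs."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)
            if error != 0:
                # Move the decision boundary toward the misclassified sample.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                mistakes += 1
        if mistakes == 0:  # every sample classified correctly
            break
    return weights, bias


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

for name, data in (("AND", AND), ("XOR", XOR)):
    w, b = train_perceptron(data)
    learned = all(predict(w, b, x) == t for x, t in data)
    print(name, "learned" if learned else "not learned")
```

Because no single straight line separates the XOR classes, training on XOR runs until the epoch limit without ever classifying all four inputs correctly.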

Clustering algorithms

With perceptrons, the approach was supervised. Users provided labeled data to train the network, and

then tested the network against new data. Clustering algorithms take a different approach called

unsupervised learning. In this model, the algorithm organizes a set of feature vectors into clusters

based on one or more attributes of the data.

Figure 4. Clustering in a two-dimensional feature space

One of the simplest algorithms that you can implement in a small amount of code is called k-means.

In this algorithm, k indicates the number of clusters to which you can assign samples. You

can initialize each cluster with a random feature vector, and then add all other samples to their closest

cluster (each sample is a feature vector, and Euclidean distance is used to

measure "distance"). As you add samples to a cluster, its centroid (that is, the center of the cluster)

is recalculated. The algorithm then checks the samples again to ensure that they exist in the

closest cluster and ends when no samples change cluster membership.

Although k-means is relatively efficient, you must specify k in advance. Depending on the data,

other approaches might be more efficient, such as hierarchical or distribution-based clustering.
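
As a rough sketch of the k-means procedure described above, the following uses only the standard library. It is a common batch variant (often called Lloyd's algorithm) that recomputes centroids after each full pass over the samples rather than after every single assignment. The function name `kmeans`, its parameters, and the sample points are assumptions made for illustration.

```python
import random


def kmeans(points, k, init=None, seed=0, max_iter=100):
    """Cluster `points` (tuples of numbers) into k groups.

    `init` optionally supplies the starting centroids; otherwise k
    random samples are used. Returns (labels, centroids).
    """
    rng = random.Random(seed)
    start = init if init is not None else rng.sample(points, k)
    centroids = [tuple(c) for c in start]
    labels = [None] * len(points)
    for _ in range(max_iter):
        # Assign each sample to the centroid with the smallest squared
        # Euclidean distance.
        new_labels = [
            min(range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])))
            for p in points
        ]
        if new_labels == labels:  # no sample changed cluster: done
            break
        labels = new_labels
        # Recompute each centroid as the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return labels, centroids


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
labels, centroids = kmeans(points, k=2, init=[points[0], points[3]])
print(labels)
print(centroids)
```

Passing explicit starting centroids makes the run reproducible; with random initialization the cluster numbering, and sometimes the clusters themselves, can differ between runs.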

Decision trees

Closely related to clustering is the decision tree. A decision tree is a predictive model about

observations that lead to some conclusion. Conclusions are represented as leaves on the tree,

while nodes are decision points where an observation diverges. Decision trees are built from

decision tree learning algorithms, where the data set is split into subsets based on attribute value

tests (through a process called recursive partitioning).

Consider the example in the following figure. In this data set, I can observe when someone was

productive based on three factors. Using a decision tree learning algorithm, I can choose the attribute to split on

by using a metric (one example is information gain). In this example, mood is a primary factor in

productivity, so I split the data set according to whether "good mood" is Yes or No. The No side is

simple: It's always nonproductive. But, the Yes side requires me to split the data set again based

on the other two attributes. I've colorized the data set to illustrate where observations led to my leaf

nodes.

Figure 5. A simple data set and resulting decision tree

A useful aspect of decision trees is their inherent organization, which gives you the ability to easily

(and graphically) explain how you classified an item. Popular decision tree learning algorithms

include C4.5 and the Classification and Regression Tree.
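
The information gain metric mentioned above can be computed directly from label counts. The sketch below is illustrative only: the six-row data set and the `slept_well` attribute are hypothetical stand-ins, not the data from Figure 5, and the function names are assumptions.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, attribute, target):
    """Reduction in entropy of `target` after splitting `rows` on `attribute`."""
    base = entropy([row[target] for row in rows])
    remainder = 0.0
    for value in {row[attribute] for row in rows}:
        subset = [row[target] for row in rows if row[attribute] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return base - remainder


# Hypothetical observations (not the article's data set).
rows = [
    {"good_mood": "yes", "slept_well": "yes", "productive": "yes"},
    {"good_mood": "yes", "slept_well": "yes", "productive": "yes"},
    {"good_mood": "yes", "slept_well": "no", "productive": "no"},
    {"good_mood": "no", "slept_well": "yes", "productive": "no"},
    {"good_mood": "no", "slept_well": "no", "productive": "no"},
    {"good_mood": "no", "slept_well": "yes", "productive": "no"},
]

for attribute in ("good_mood", "slept_well"):
    print(attribute, round(information_gain(rows, attribute, "productive"), 4))
```

In this made-up data, splitting on `good_mood` yields the larger gain, so a learner that uses this metric would split on it first and then recurse into the "yes" branch.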

Rules-based systems

The first system built on rules and inference, called Dendral, was developed in 1965, but it wasn't

until the 1970s that these so-called "expert systems" hit their stride. A rules-based system is one

that stores both knowledge and rules and uses a reasoning system to draw conclusions.

A rules-based system typically consists of a rule set, a knowledge base, an inference engine

(using forward or backward rule chaining), and a user interface. In the following figure, I use a

piece of knowledge ("Socrates was a man"), a rule ("if man, then mortal"), and an interaction on

who is mortal.

Figure 6. A rules-based system
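
The Socrates example can be sketched as a tiny forward-chaining inference engine. This is a deliberately simplified illustration: facts are (predicate, subject) pairs, each rule has a single premise, and the name `forward_chain` is an assumption rather than part of any real expert system shell.

```python
def forward_chain(facts, rules):
    """Apply rules to facts until no new facts can be derived.

    facts: iterable of (predicate, subject) pairs, e.g. ("man", "Socrates")
    rules: iterable of (premise, conclusion) pairs, e.g. ("man", "mortal")
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for predicate, subject in list(known):
                derived = (conclusion, subject)
                if predicate == premise and derived not in known:
                    known.add(derived)  # the rule fired: record the new fact
                    changed = True
    return known


knowledge = {("man", "Socrates")}   # "Socrates was a man"
rules = [("man", "mortal")]         # "if man, then mortal"

derived = forward_chain(knowledge, rules)
mortals = sorted(subject for predicate, subject in derived if predicate == "mortal")
print("Who is mortal?", mortals)
```

Backward chaining would instead start from the query ("who is mortal?") and work back through the rules to the supporting facts.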

Rules-based systems have been applied to speech recognition, planning and control, and disease

identification. One system developed in the 1990s for monitoring and diagnosing dam stability,

called Kaleidos, is still in operation today.

Machine learning

Machine learning is a subfield of AI and computer science that has its roots in statistics and

mathematical optimization. Machine learning covers techniques in supervised and unsupervised

learning for applications in prediction, analytics, and data mining. It is not restricted to deep

learning, and in this section, I explore some of the algorithms that have led to this surprisingly

efficient approach.

Figure 7. Timeline of machine learning approaches

Backpropagation

The true power of neural networks lies in their multilayer variant. Training single-layer perceptrons is

straightforward, but the resulting network is not very powerful. The question became, How can we

train networks that have multiple layers? This is where backpropagation came in.

Backpropagation is an algorithm for training neural networks that have many layers. It works in

two phases. The first phase is the propagation of inputs through a neural network to the final

layer (called feedforward). In the second phase, the algorithm computes an error, and then

backpropagates this error (adjusting the weights) from the final layer to the first.

Figure 8. Backpropagation in a nutshell

During training, intermediate layers of the network organize themselves to map portions of the

input space to the output space. Backpropagation, through supervised learning, identifies an error

in the input-to-output mapping, and then adjusts the weights accordingly (with a learning rate) to

correct this error. Backpropagation continues to be an important aspect of neural network learning.

With faster and cheaper computing resources, it continues to be applied to larger and denser

networks.
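
The two phases can be sketched with a small network trained on XOR, the problem a single-layer perceptron cannot solve. This is a minimal illustration under several assumptions that do not come from the article: one hidden layer of four sigmoid units, a squared-error loss, per-sample weight updates, and a fixed random seed. Whether the network fully learns XOR depends on those choices, so the example only reports the loss before and after training.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train_xor(hidden=4, epochs=5000, lr=0.5, seed=0):
    """Train a 2-input, `hidden`-unit, 1-output network on XOR.

    Returns (initial_loss, final_loss, predict).
    """
    rng = random.Random(seed)
    data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
    # Each hidden unit holds [weight_x1, weight_x2, bias].
    w_hidden = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(hidden)]
    # Output unit holds one weight per hidden unit, plus a trailing bias.
    w_out = [rng.uniform(-1, 1) for _ in range(hidden + 1)]

    def forward(x):
        h = [sigmoid(w[0] * x[0] + w[1] * x[1] + w[2]) for w in w_hidden]
        y = sigmoid(sum(w_out[j] * h[j] for j in range(hidden)) + w_out[hidden])
        return h, y

    def loss():
        return sum((forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    initial = loss()
    for _ in range(epochs):
        for x, t in data:
            # Phase 1: feedforward.
            h, y = forward(x)
            # Phase 2: compute the output error, then propagate it backward.
            delta_out = (y - t) * y * (1 - y)
            delta_hidden = [delta_out * w_out[j] * h[j] * (1 - h[j])
                            for j in range(hidden)]
            # Adjust weights against the gradient, scaled by the learning rate.
            for j in range(hidden):
                w_out[j] -= lr * delta_out * h[j]
            w_out[hidden] -= lr * delta_out
            for j in range(hidden):
                w_hidden[j][0] -= lr * delta_hidden[j] * x[0]
                w_hidden[j][1] -= lr * delta_hidden[j] * x[1]
                w_hidden[j][2] -= lr * delta_hidden[j]

    return initial, loss(), lambda x: forward(x)[1]


initial_loss, final_loss, predict = train_xor()
print("loss before:", round(initial_loss, 4), "after:", round(final_loss, 4))
for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, round(predict(x), 3))
```

Note that the hidden-layer deltas are computed before the output weights change, so both layers are updated from the same forward pass.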

Convolutional neural networks

Convolutional neural networks (CNNs) are multilayer neural networks that take their inspiration

from the animal visual cortex. The architecture is useful in various applications, including image

processing. The first CNN was created by Yann LeCun, and at the time, the architecture focused

on handwritten character-recognition tasks like reading postal codes.

The LeNet CNN architecture is made up of several layers that implement feature extraction,

and then classification. The image is divided into receptive fields that feed into a convolutional

layer that extracts features from the input image. The next step is pooling, which reduces the

dimensionality of the extracted features (through down-sampling) while retaining the most

important information (typically through max pooling). The algorithm then performs another

convolution and pooling step that feeds into a fully connected, multilayer perceptron. The final

output layer of this network is a set of nodes that identify features of the image (in this case, a

node per identified number). Users can train the network through backpropagation.

Figure 9. The LeNet convolutional neural network architecture
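
The convolution and pooling steps can be illustrated on a tiny image without any libraries. This sketch is not LeNet itself: the 5x5 image, the 2x2 vertical-edge kernel, and the function names are assumptions chosen to keep the arithmetic easy to follow, and the kernel here is fixed by hand rather than learned.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding).

    As in most CNN libraries, this is technically cross-correlation:
    the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Down-sample by keeping the maximum of each size x size block."""
    return [
        [
            max(feature_map[i + di][j + dj]
                for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


# A 5x5 image that is dark (0) on the left and bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# A kernel that responds to dark-to-bright vertical edges.
edge_kernel = [[-1, 1],
               [-1, 1]]

features = convolve2d(image, edge_kernel)  # 4x4 feature map
pooled = max_pool(features)                # 2x2 after 2x2 max pooling
print(features)  # each row is [0, 2, 0, 0]
print(pooled)    # [[2, 0], [2, 0]]
```

The pooled output is a quarter of the size of the feature map but still records that an edge was found in the left half of the image, which is the sense in which pooling retains the most important information.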

The use of deep layers of processing, convolutions, pooling, and a fully connected classification

layer opened the door to various new applications of neural networks. In addition to image

processing, the CNN has been successfully applied to video recognition and many tasks within

natural language processing. CNNs have also been efficiently implemented on GPUs, greatly

improving their performance.

Long short-term memory

Recall in the discussion of backpropagation that the network being trained was feedforward. In this

architecture, users feed inputs into the network and propagate them forward through the hidden

layers to the output layer. But, many other neural network topologies exist. One, which I investigate

here, allows connections between nodes to form a directed cycle. These networks are called

recurrent neural networks, and they can feed backwards to prior layers or to subsequent nodes

within their layer. This property makes these networks ideal for time series data.

In 1997, a special kind of recurrent network called the long short-term memory (LSTM)

was created. The LSTM consists of memory cells that remember values within the network for a short or

long time.

Figure 10. A long short-term memory network and memory cell

A memory cell contains gates that control how information flows into or out of the cell. The

input gate controls when new information can flow into the memory. The forget gate controls

how long an existing piece of information is retained. Finally, the output gate controls when the

information contained in the cell is used in the output from the cell. The cell also contains weights

that control each gate. The training algorithm, commonly backpropagation-through-time (a variant

of backpropagation), optimizes these weights based on the resulting error.
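
The role of each gate can be seen in a single forward step of a memory cell. The sketch below uses the commonly published LSTM equations with scalar inputs and state to stay readable; the parameter names (`wi`, `ui`, `bi`, and so on) are assumptions for illustration, and training with backpropagation-through-time is not shown.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, p):
    """One forward step of a scalar LSTM memory cell.

    x: current input, h_prev: previous output, c_prev: previous cell state,
    p: dict of weights (w* on the input, u* on the previous output, b* biases).
    Returns the new output h and the new cell state c.
    """
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value
    c = f * c_prev + i * g   # keep part of the old memory, add part of the new
    h = o * math.tanh(c)     # expose part of the memory as output
    return h, c


# Hypothetical weights: the forget gate is strongly open and the input gate
# strongly closed, so the cell should simply remember its state.
params = {"wi": 0.0, "ui": 0.0, "bi": -10.0,
          "wf": 0.0, "uf": 0.0, "bf": 10.0,
          "wo": 0.0, "uo": 0.0, "bo": 0.0,
          "wg": 1.0, "ug": 0.0, "bg": 0.0}

h, c = 0.0, 2.0
for x in [1.0, -1.0, 0.5]:
    h, c = lstm_step(x, h, c, params)
    print(round(h, 4), round(c, 4))
```

With these weights the cell state stays close to its starting value regardless of the inputs, which is the "long" memory behavior; lowering the forget-gate bias would let the stored value decay instead.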

The LSTM has been applied to speech recognition, handwriting recognition, text-to-speech

synthesis, image captioning, and various other tasks. I'll revisit the LSTM shortly.

Deep learning

Deep learning is a relatively new set of methods that's changing machine learning in fundamental

ways. Deep learning isn't an algorithm, per se, but rather a family of algorithms that implement

deep networks, trained with supervised or unsupervised learning. These networks are so deep that new methods of

computation, such as GPUs, are required to build them (in addition to clusters of compute nodes).

This article has explored two deep learning algorithms so far: CNNs and LSTMs. These algorithms

have been combined to achieve several surprisingly intelligent tasks. As shown in the following

figure, CNNs and LSTMs have been used to identify the contents of a picture or video, and then describe them in natural

language.

Figure 11. Combining convolutional neural networks and long short-term

memory networks for image description

Deep learning algorithms have also been applied to facial recognition, tuberculosis detection (with

96 percent accuracy), self-driving vehicles, and many other complex problems.

However, despite the results of applying deep learning algorithms, problems exist that we

have yet to solve. A recent application of deep learning to skin cancer detection found that the

algorithm was more accurate than a board-certified dermatologist. But, where dermatologists could

enumerate the factors that led to their diagnosis, there's no easy way to identify which factors a deep

learning program used in its classification. This is called deep learning's black box problem.

Another application, called Deep Patient, was able to successfully predict disease given a patient's

medical records. The application proved to be considerably better at forecasting disease than

physicians—even for schizophrenia, which is notoriously difficult to predict. So, even though the

models work well, no one can reach into the massive neural networks to identify why.

Cognitive computing

AI and machine learning are filled with examples of biological inspiration. And, just as early AI

focused on the grand goal of building machines that mimicked the human brain, cognitive

computing is working toward this goal as well.

Cognitive computing, building on neural networks and deep learning, is applying knowledge from

cognitive science to build systems that simulate human thought processes. However, rather than

focus on a singular set of technologies, cognitive computing covers several disciplines, including

machine learning, natural language processing, vision, and human-computer interaction.

An example of cognitive computing is IBM Watson, which demonstrated state-of-the-art question-and-answer

interactions on Jeopardy and which IBM has since extended through a set of web

services. These services expose application programming interfaces for visual recognition,

speech-to-text, and text-to-speech function; language understanding and translation; and

conversational engines to build powerful virtual agents.

Going further

This article covered just a fraction of AI's history and the latest in neural network and deep learning

approaches. Although AI and machine learning have had their ups and downs, new approaches

like deep learning and cognitive computing have significantly raised the bar in these disciplines.

A conscious machine might still be out of reach, but systems that help improve people's lives are

here today.

For more information on developing cognitive IoT solutions for anomaly detection by using deep

learning, see Introducing deep learning and long-short term memory networks.

Related topics

• IBM Watson services

• IBM Bluemix

© Copyright IBM Corporation 2017

(www.ibm.com/legal/copytrade.shtml)

Trademarks

(www.ibm.com/developerworks/ibm/trademarks/)

A beginner's guide to artificial intelligence, machine learning, and Page 10 of 10

cognitive computing

Вам также может понравиться

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (895)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- JW Museum Bible Tours - The British Museum - All Artefacts Room OrderДокумент187 страницJW Museum Bible Tours - The British Museum - All Artefacts Room Orderfatalist3100% (2)

- Enunciating The Automated Memory: Templum'Документ2 страницыEnunciating The Automated Memory: Templum'fatalist3Оценок пока нет

- cs231n 2017 Lecture15Документ154 страницыcs231n 2017 Lecture15fatalist3Оценок пока нет

- cs231n 2017 Lecture16Документ43 страницыcs231n 2017 Lecture16fatalist3Оценок пока нет

- cs231n 2017 Lecture5Документ78 страницcs231n 2017 Lecture5fatalist3Оценок пока нет

- Cheat SheetДокумент163 страницыCheat Sheetfatalist3Оценок пока нет

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (400)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (266)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (345)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2259)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (121)

- Activity 3Документ21 страницаActivity 3Rogen Mae Cabailo Pairat100% (2)

- M Davis Cover Letter and Resume RevisionДокумент8 страницM Davis Cover Letter and Resume Revisionapi-511504524Оценок пока нет

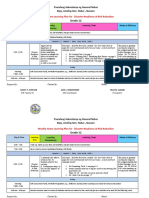

- MNN Import - Child and After Care 06 10 2021Документ9 страницMNN Import - Child and After Care 06 10 2021johnОценок пока нет

- DLP For Classroom Observation Q4Документ2 страницыDLP For Classroom Observation Q4Jannet FuentesОценок пока нет

- Sarah Ann Goodbrand, Ninewells Hospital and Medical School, DundeeДокумент1 страницаSarah Ann Goodbrand, Ninewells Hospital and Medical School, DundeeAh MagdyОценок пока нет

- Further Schools of Thought in Psychology Chapter 5 LenseДокумент7 страницFurther Schools of Thought in Psychology Chapter 5 LenseCarlos DelgadoОценок пока нет

- Mindfulness Micro-Teach PDFДокумент3 страницыMindfulness Micro-Teach PDFapi-453210284Оценок пока нет

- Scheme of Work Science Stage 6v1Документ22 страницыScheme of Work Science Stage 6v1vjahnaviОценок пока нет

- Wpu Faculty ManualДокумент144 страницыWpu Faculty ManualjscansinoОценок пока нет

- My Roles As A Student in The New NormalДокумент1 страницаMy Roles As A Student in The New NormalMariel Niña ErasmoОценок пока нет

- Mid Year Evaluation 2022Документ3 страницыMid Year Evaluation 2022api-375319204Оценок пока нет

- Grade 12: Weekly Home Learning Plan For Disaster Readiness & Risk ReductionДокумент4 страницыGrade 12: Weekly Home Learning Plan For Disaster Readiness & Risk ReductionNancy AtentarОценок пока нет

- Task 3 ProblemДокумент3 страницыTask 3 ProblemZarah Joyce SegoviaОценок пока нет

- Action PlanДокумент9 страницAction PlanJolly Mar Tabbaban MangilayaОценок пока нет

- PSYC 1205 Discussion Forum Unit 4Документ3 страницыPSYC 1205 Discussion Forum Unit 4Bukola Ayomiku AmosuОценок пока нет

- Sop Draft Utas Final-2Документ4 страницыSop Draft Utas Final-2Himanshu Waster0% (1)

- Classroom Phrasal Verbs PDFДокумент5 страницClassroom Phrasal Verbs PDFdoeste20083194Оценок пока нет

- Final Personality DevelopmentДокумент23 страницыFinal Personality DevelopmentHamza Latif100% (2)

- Reveal Math Te Grade 1 Unit SamplerДокумент86 страницReveal Math Te Grade 1 Unit SamplerGhada NabilОценок пока нет

- 158 Esa Entire Lesson PlanДокумент8 страниц158 Esa Entire Lesson Planapi-665418106Оценок пока нет

- Lesson 1 ICT Competency Standards For Philippine PreДокумент5 страницLesson 1 ICT Competency Standards For Philippine PreFrederick DomicilloОценок пока нет

- CS WAVE: Virtual Reality For Welders' TrainingДокумент6 страницCS WAVE: Virtual Reality For Welders' TrainingBOUAZIZОценок пока нет

- Ele 160 Remedial Instruction Syllabus AutosavedДокумент6 страницEle 160 Remedial Instruction Syllabus AutosavedRicky BustosОценок пока нет

- The 7 Domains of Teachers Professional PracticesДокумент8 страницThe 7 Domains of Teachers Professional PracticesRica Mitch SalamoОценок пока нет

- PGT Lesson Plan#1 - PreconfrenceДокумент1 страницаPGT Lesson Plan#1 - PreconfrenceBart T Sata'oОценок пока нет

- Deciding On Materials: Selection and Preparation of Materials For An Esp CourseДокумент44 страницыDeciding On Materials: Selection and Preparation of Materials For An Esp CourseDedek ChinmoiОценок пока нет

- Lesson Plan Profile A Country 1Документ3 страницыLesson Plan Profile A Country 1api-479660639Оценок пока нет

- Year 5 NewsletterДокумент2 страницыYear 5 NewsletterNISTYear5Оценок пока нет

- (Appendix C-03) COT-RPMS Rating Sheet For T I-III For SY 2023-2024Документ1 страница(Appendix C-03) COT-RPMS Rating Sheet For T I-III For SY 2023-2024Mishie BercillaОценок пока нет

- Letter of Concerns Re Compre ExamДокумент2 страницыLetter of Concerns Re Compre ExamKathy'totszki OrtizОценок пока нет