Академический Документы

Профессиональный Документы

Культура Документы

Aaa 25

Загружено:

mlОригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Aaa 25

Загружено:

mlАвторское право:

Доступные форматы

even with a simple product.

[4]:17–18[8] This means that the number of

defects in a software product can be very large and defects that occur

infrequently are difficult to find in testing. More significantly, non-

functional dimensions of quality (how it is supposed to be versus what it

is supposed to do)—usability, scalability, performance, compatibility,

reliability—can be highly subjective; something that constitutes

sufficient value to one person may be intolerable to another.

Software developers can't test everything, but they can use combinatorial

test design to identify the minimum number of tests needed to get the

coverage they want. Combinatorial test design enables users to get

greater test coverage with fewer tests. Whether they are looking for

speed or test depth, they can use combinatorial test design methods to

build structured variation into their test cases.[9]

Economics

A study conducted by NIST in 2002 reports that software bugs cost the

U.S. economy $59.5 billion annually. More than a third of this cost could

be avoided if better software testing was performed.[10][dubious –

discuss]

Outsourcing software testing because of costs is very common, with China,

the Philippines and India being preferred destinations.[11]

It is commonly believed that the earlier a defect is found, the cheaper

it is to fix it. The following table shows the cost of fixing the defect

depending on the stage it was found.[12] For example, if a problem in the

requirements is found only post-release, then it would cost 10–100 times

more to fix than if it had already been found by the requirements review.

With the advent of modern continuous deployment practices and cloud-based

services, the cost of re-deployment and maintenance may lessen over time.

Cost to fix a defect Time detected

Requirements Architecture Construction System test Post-

release

Time introduced Requirements 1× 3× 5–10× 10× 10–100×

Architecture – 1× 10× 15× 25–100×

Construction – – 1× 10× 10–25×

The data from which this table is extrapolated is scant. Laurent Bossavit

says in his analysis:

The "smaller projects" curve turns out to be from only two teams of

first-year students, a sample size so small that extrapolating to

"smaller projects in general" is totally indefensible. The GTE study does

not explain its data, other than to say it came from two projects, one

large and one small. The paper cited for the Bell Labs "Safeguard"

project specifically disclaims having collected the fine-grained data

that Boehm's data points suggest. The IBM study (Fagan's paper) contains

claims which seem to contradict Boehm's graph, and no numerical results

which clearly correspond to his data points.

Boehm doesn't even cite a paper for the TRW data, except when writing

for "Making Software" in 2010, and there he cited the original 1976

article. There exists a large study conducted at TRW at the right time

for Boehm to cite it, but that paper doesn't contain the sort of data

that would support Boehm's claims.[13]

Roles

Вам также может понравиться

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (895)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (345)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (121)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (400)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- Embedded Systems DesignДокумент576 страницEmbedded Systems Designnad_chadi8816100% (4)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- HSBC in A Nut ShellДокумент190 страницHSBC in A Nut Shelllanpham19842003Оценок пока нет

- State Immunity Cases With Case DigestsДокумент37 страницState Immunity Cases With Case DigestsStephanie Dawn Sibi Gok-ong100% (4)

- Fake PDFДокумент2 страницыFake PDFJessicaОценок пока нет

- 2016 066 RC - LuelcoДокумент11 страниц2016 066 RC - LuelcoJoshua GatumbatoОценок пока нет

- Basic Vibration Analysis Training-1Документ193 страницыBasic Vibration Analysis Training-1Sanjeevi Kumar SpОценок пока нет

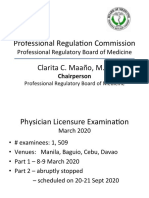

- Professional Regula/on Commission: Clarita C. Maaño, M.DДокумент31 страницаProfessional Regula/on Commission: Clarita C. Maaño, M.Dmiguel triggartОценок пока нет

- Exp. 5 - Terminal Characteristis and Parallel Operation of Single Phase Transformers.Документ7 страницExp. 5 - Terminal Characteristis and Parallel Operation of Single Phase Transformers.AbhishEk SinghОценок пока нет

- Separation PayДокумент3 страницыSeparation PayMalen Roque Saludes100% (1)

- General Financial RulesДокумент9 страницGeneral Financial RulesmskОценок пока нет

- BST Candidate Registration FormДокумент3 страницыBST Candidate Registration FormshirazОценок пока нет

- Reverse Engineering in Rapid PrototypeДокумент15 страницReverse Engineering in Rapid PrototypeChaubey Ajay67% (3)

- Interruptions - 02.03.2023Документ2 страницыInterruptions - 02.03.2023Jeff JeffОценок пока нет

- Experiment On Heat Transfer Through Fins Having Different NotchesДокумент4 страницыExperiment On Heat Transfer Through Fins Having Different NotcheskrantiОценок пока нет

- Efs151 Parts ManualДокумент78 страницEfs151 Parts ManualRafael VanegasОценок пока нет

- Ludwig Van Beethoven: Für EliseДокумент4 страницыLudwig Van Beethoven: Für Eliseelio torrezОценок пока нет

- ESG NotesДокумент16 страницESG Notesdhairya.h22Оценок пока нет

- Engineering Management (Final Exam)Документ2 страницыEngineering Management (Final Exam)Efryl Ann de GuzmanОценок пока нет

- Dialog Suntel MergerДокумент8 страницDialog Suntel MergerPrasad DilrukshanaОценок пока нет

- Audit On ERP Implementation UN PWCДокумент28 страницAudit On ERP Implementation UN PWCSamina InkandellaОценок пока нет

- Urun Katalogu 4Документ112 страницUrun Katalogu 4Jose Luis AcevedoОценок пока нет

- Reflections On Free MarketДокумент394 страницыReflections On Free MarketGRK MurtyОценок пока нет

- Delta AFC1212D-SP19Документ9 страницDelta AFC1212D-SP19Brent SmithОценок пока нет

- GL 186400 Case DigestДокумент2 страницыGL 186400 Case DigestRuss TuazonОценок пока нет

- Unit-5 Shell ProgrammingДокумент11 страницUnit-5 Shell ProgrammingLinda BrownОценок пока нет

- ATPDraw 5 User Manual UpdatesДокумент51 страницаATPDraw 5 User Manual UpdatesdoniluzОценок пока нет

- Specialty Arc Fusion Splicer: FSM-100 SeriesДокумент193 страницыSpecialty Arc Fusion Splicer: FSM-100 SeriesSFTB SoundsFromTheBirdsОценок пока нет

- ST JohnДокумент20 страницST JohnNa PeaceОценок пока нет

- Cancellation of Deed of Conditional SalДокумент5 страницCancellation of Deed of Conditional SalJohn RositoОценок пока нет

- My CoursesДокумент108 страницMy Coursesgyaniprasad49Оценок пока нет