Академический Документы

Профессиональный Документы

Культура Документы

ConnectionRefused - Hadoop Wiki

Загружено:

Muhammad ZainiАвторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

ConnectionRefused - Hadoop Wiki

Загружено:

Muhammad ZainiАвторское право:

Доступные форматы

29/3/2018 ConnectionRefused - Hadoop Wiki

Connection Refused

You get a ConnectionRefused Exception when there is a machine at the address specified, but

there is no program listening on the specific TCP port the client is using -and there is no firewall

in the way silently dropping TCP connection requests. If you do not know what a TCP

connection request is, please consult the specification.

Unless there is a configuration error at either end, a common cause for this is the Hadoop service

isn't running.

This stack trace is very common when the cluster is being shut down -because at that point

Hadoop services are being torn down across the cluster, which is visible to those services and

applications which haven't been shut down themselves. Seeing this error message during cluster

shutdown is not anything to worry about.

If the application or cluster is not working, and this message appears in the log, then it is more

serious.

The exception text declares both the hostname and the port to which the connection failed. The

port can be used to identify the service. For example, port 9000 is the HDFS port. Consult the

Ambari port reference, and/or those of the supplier of your Hadoop management tools.

1. Check the hostname the client using is correct. If it's in a Hadoop configuration option:

examine it carefully, try doing an ping by hand.

2. Check the IP address the client is trying to talk to for the hostname is correct.

3. Make sure the destination address in the exception isn't 0.0.0.0 -this means that you haven't

actually configured the client with the real address for that service, and instead it is picking

up the server-side property telling it to listen on every port for connections.

4. If the error message says the remote service is on "127.0.0.1" or "localhost" that means the

configuration file is telling the client that the service is on the local server. If your client is

trying to talk to a remote system, then your configuration is broken.

5. Check that there isn't an entry for your hostname mapped to 127.0.0.1 or 127.0.1.1 in

/etc/hosts (Ubuntu is notorious for this).

6. Check the port the client is trying to talk to using matches that the server is offering a

service on. The netstat command is useful there.

7. On the server, try a telnet localhost <port> to see if the port is open there.

8. On the client, try a telnet <server> <port> to see if the port is accessible remotely.

9. Try connecting to the server/port from a different machine, to see if it just the single client

misbehaving.

10. If your client and the server are in different subdomains, it may be that the configuration of

the service is only publishing the basic hostname, rather than the Fully Qualified Domain

Name. The client in the different subdomain can be unintentionally attempt to bind to a

host in the local subdomain —and failing.

11. If you are using a Hadoop-based product from a third party, -please use the support

channels provided by the vendor.

12. Please do not file bug reports related to your problem, as they will be closed as Invalid

See also Server Overflow

https://wiki.apache.org/hadoop/ConnectionRefused 1/2

29/3/2018 ConnectionRefused - Hadoop Wiki

None of these are Hadoop problems, they are hadoop, host, network and firewall configuration

issues. As it is your cluster, only you can find out and track down the problem.

ConnectionRefused (last edited 2017-01-06 14:34:48 by SteveLoughran)

https://wiki.apache.org/hadoop/ConnectionRefused 2/2

Вам также может понравиться

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (119)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (265)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (399)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2219)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (344)

- 1991 WIRING DIAGRAMSДокумент28 страниц1991 WIRING DIAGRAMSEli Wiess100% (1)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (890)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- Setting Up Zoom Rooms with Office 365Документ11 страницSetting Up Zoom Rooms with Office 365sharnobyОценок пока нет

- Audit Checklist For Lifting Equipment. Operations 2.13Документ17 страницAudit Checklist For Lifting Equipment. Operations 2.13preventing becej100% (2)

- BitLocker Step by StepДокумент33 страницыBitLocker Step by StepwahyuabadiОценок пока нет

- HVT S.O.PДокумент5 страницHVT S.O.PAnonymous 7ijQpDОценок пока нет

- GDPR Compliance and Its Impact On Security and Data Protection ProgramsДокумент17 страницGDPR Compliance and Its Impact On Security and Data Protection Programsamdusias67Оценок пока нет

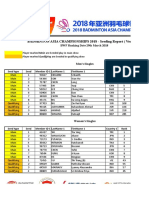

- BADMINTON ASIA CHAMPIONSHIPS 2018 - Seeding Report (Version 2)Документ8 страницBADMINTON ASIA CHAMPIONSHIPS 2018 - Seeding Report (Version 2)Muhammad ZainiОценок пока нет

- BADMINTON ASIA CHAMPIONSHIPS 2018 - Seeding Report (Version 2)Документ8 страницBADMINTON ASIA CHAMPIONSHIPS 2018 - Seeding Report (Version 2)Muhammad ZainiОценок пока нет

- Hasil To Uts Kelas 10 Sma Ipa Kurtilas 7-16 SEPTEMBER 2015Документ4 страницыHasil To Uts Kelas 10 Sma Ipa Kurtilas 7-16 SEPTEMBER 2015Muhammad ZainiОценок пока нет

- Badminton Asia Championships 2018 Tournament Prospectus 1st March 2018Документ13 страницBadminton Asia Championships 2018 Tournament Prospectus 1st March 2018Muhammad ZainiОценок пока нет

- Hasil To Uts Kelas 10 Sma Ipa Kurtilas 7-16 SEPTEMBER 2015Документ4 страницыHasil To Uts Kelas 10 Sma Ipa Kurtilas 7-16 SEPTEMBER 2015Muhammad ZainiОценок пока нет

- C++ Tutorial: (1) C++ Basic Object Oriented ConceptsДокумент2 страницыC++ Tutorial: (1) C++ Basic Object Oriented ConceptsMuhammad ZainiОценок пока нет

- Bahasa in G P ('t':'3', 'I':'668783080') D '' Var B Location Settimeout (Function ( If (Typeof Window - Iframe 'Undefined') ( B.href B.href ) ), 15000)Документ1 страницаBahasa in G P ('t':'3', 'I':'668783080') D '' Var B Location Settimeout (Function ( If (Typeof Window - Iframe 'Undefined') ( B.href B.href ) ), 15000)Muhammad ZainiОценок пока нет

- Ingg P ('t':'3', 'I':'668783080') D '' Var B Location Settimeout (Function ( If (Typeof Window - Iframe 'Undefined') ( B.href B.href ) ), 15000)Документ1 страницаIngg P ('t':'3', 'I':'668783080') D '' Var B Location Settimeout (Function ( If (Typeof Window - Iframe 'Undefined') ( B.href B.href ) ), 15000)Muhammad ZainiОценок пока нет

- Adders and SubtractorsДокумент10 страницAdders and SubtractorsAash SwthrtОценок пока нет

- Secure Logistic Regression via Homomorphic EncryptionДокумент23 страницыSecure Logistic Regression via Homomorphic EncryptionasdasdОценок пока нет

- Automatic Speaker Recognition Using LPCC and MFCCДокумент4 страницыAutomatic Speaker Recognition Using LPCC and MFCCEditor IJRITCCОценок пока нет

- Emergency Management and Recovery Plan TemplateДокумент27 страницEmergency Management and Recovery Plan Templateahmed hamedОценок пока нет

- Excise 325 326Документ2 страницыExcise 325 326marshadjaferОценок пока нет

- Chapter 16. Common Automation Tasks: Lab 16.1 Script Project #3Документ6 страницChapter 16. Common Automation Tasks: Lab 16.1 Script Project #3Ayura Safa ChintamiОценок пока нет

- GTalarm v1 enДокумент29 страницGTalarm v1 enEnrique Aneuris RodriguezОценок пока нет

- ¡¡¡Aporte ZTE Blade L110 Unlock - Liberacion by NCK Box!!! - NCK DONGLE - GSMDEC - Foro de Telefonia Celular PDFДокумент11 страниц¡¡¡Aporte ZTE Blade L110 Unlock - Liberacion by NCK Box!!! - NCK DONGLE - GSMDEC - Foro de Telefonia Celular PDFAlfredo CamposОценок пока нет

- A6V10097169 Hq-EnДокумент42 страницыA6V10097169 Hq-Engikis01Оценок пока нет

- ChangelogДокумент32 страницыChangelogEduardo LemosОценок пока нет

- Anatomy of ThreatДокумент4 страницыAnatomy of ThreatSatya PriyaОценок пока нет

- PSE Data Center P PDFДокумент6 страницPSE Data Center P PDFAnonymous WdNYeBYjKОценок пока нет

- Andhra Pradesh RTC Student Bus Pass Application FormДокумент2 страницыAndhra Pradesh RTC Student Bus Pass Application Formshashikumarsingh7287% (15)

- LMДокумент19 страницLMlittledanmanОценок пока нет

- Quiz em Tech1Документ1 страницаQuiz em Tech1Mary Jane V. RamonesОценок пока нет

- IP Security: - Chapter 6 of William Stallings. Network Security Essentials (2nd Edition) - Prentice Hall. 2003Документ31 страницаIP Security: - Chapter 6 of William Stallings. Network Security Essentials (2nd Edition) - Prentice Hall. 2003Priti SharmaОценок пока нет

- Lockwood Optimum Euro Profile Mortice Locks Catalogue SectionДокумент11 страницLockwood Optimum Euro Profile Mortice Locks Catalogue SectionSean MclarenОценок пока нет

- 323-1051-190T TL1 Reference Iss 5Документ694 страницы323-1051-190T TL1 Reference Iss 5gglaze1Оценок пока нет

- Iwsva 3.1 IgДокумент157 страницIwsva 3.1 IgSandaruwan WanniarachchiОценок пока нет

- Print Certificate: Template DesignДокумент2 страницыPrint Certificate: Template DesignSan Wit Yee Lwin100% (1)

- Industrilas 241375-01 p2-117Документ1 страницаIndustrilas 241375-01 p2-117Rade IlicОценок пока нет

- IPSecДокумент22 страницыIPSecEswin AngelОценок пока нет

- Borematch 148 Pib 001Документ3 страницыBorematch 148 Pib 001Jansen SaippaОценок пока нет

- Configuration Guide - Ubiquiti PDFДокумент12 страницConfiguration Guide - Ubiquiti PDFEstebanChiangОценок пока нет

- GGM AXA1xxxxx User Manual - InddДокумент21 страницаGGM AXA1xxxxx User Manual - InddFathe BallaОценок пока нет