02-Wordcount Mapreduce

Uploaded by: Mohammed Thawfeeq

MapReduce is the core processing component of Hadoop, a distributed processing framework written in Java. A MapReduce job runs in two phases:

Map phase: the data transformation and pre-processing step. Input data arrives as key-value pairs, and the pairs produced by the mapper are sent to the reduce phase.

Reduce phase: the mapped data is aggregated and the business logic is applied. The result is passed on to the next big data tool in the data pipeline for further processing.
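To make the two phases concrete, here is a minimal in-memory sketch in plain Java. It is not the Hadoop API, and the class and method names are hypothetical: map turns one line into (word, 1) pairs, a TreeMap stands in for the shuffle-and-sort step that Hadoop performs between the phases, and reduce sums the values collected for each key.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory sketch of the map and reduce phases for word count.
// Plain Java only: it mimics the data flow, not Hadoop's classes.
class MapReducePhases {

    // Map phase: turn one input record (a line) into (word, 1) pairs.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : line.trim().split("\\s+")) {
            if (!word.isEmpty()) {           // a blank line yields no pairs
                pairs.add(new SimpleEntry<>(word, 1));
            }
        }
        return pairs;
    }

    // Reduce phase: aggregate all values that share one key.
    // The key is unused here; it is kept to mirror reduce(key, values).
    static int reduce(String word, List<Integer> counts) {
        int sum = 0;
        for (int c : counts) {
            sum += c;
        }
        return sum;
    }

    // Map every line, group the pairs by key (the shuffle and sort Hadoop
    // performs between the phases), then reduce each group.
    static Map<String, Integer> run(List<String> lines) {
        Map<String, List<Integer>> grouped = new TreeMap<>(); // keys kept sorted
        for (String line : lines) {
            for (Map.Entry<String, Integer> pair : map(line)) {
                grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
            }
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : grouped.entrySet()) {
            result.put(group.getKey(), reduce(group.getKey(), group.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        // prints {bear=2, car=3, deer=2, river=2}
        System.out.println(run(List.of("deer bear river", "car car river", "deer car bear")));
    }
}
```

On a real cluster the grouping step is the part that is distributed across machines; the map and reduce functions themselves stay this simple.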

The standard Hadoop MapReduce model has Mappers, Reducers, Combiners, a Partitioner, and sorting, all of which manipulate the structure of the data to fit the business requirements.

To manipulate the structure of the data, the Map and Reduce phases make use of data structures such as arrays to perform the various transformation operations.
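A combiner and a partitioner act on a mapper's output before it reaches the reducers. The sketch below, in plain Java with hypothetical names, pre-sums one mapper's words the way a summing reducer does when it is also registered as the combiner, then assigns each key to a reducer using the formula of Hadoop's default HashPartitioner, (hashCode & Integer.MAX_VALUE) % numReduceTasks. It applies that formula to String.hashCode(), which differs from Hadoop's Text.hashCode(), so the partition numbers are illustrative only.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Sketch of what a combiner and a hash partitioner do to one mapper's output.
// Plain Java for illustration; Hadoop's real classes work on Writable keys.
class CombinePartitionSketch {

    // Combiner: pre-aggregate (word, 1) pairs on the map side so fewer pairs
    // cross the network. For word count this is the reducer's summation.
    static Map<String, Integer> combine(List<String> mappedWords) {
        Map<String, Integer> partial = new TreeMap<>();
        for (String w : mappedWords) {
            partial.merge(w, 1, Integer::sum);
        }
        return partial;
    }

    // Partitioner: HashPartitioner's formula, applied to String.hashCode().
    // Masking with Integer.MAX_VALUE keeps the result non-negative.
    static int partition(String key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    // Route each combined pair to the bucket of the reducer that owns its key;
    // every bucket stays sorted by key, as reducer input is.
    static List<Map<String, Integer>> route(Map<String, Integer> combined, int numReduceTasks) {
        List<Map<String, Integer>> buckets = new ArrayList<>();
        for (int i = 0; i < numReduceTasks; i++) {
            buckets.add(new TreeMap<>());
        }
        for (Map.Entry<String, Integer> e : combined.entrySet()) {
            buckets.get(partition(e.getKey(), numReduceTasks)).put(e.getKey(), e.getValue());
        }
        return buckets;
    }

    public static void main(String[] args) {
        Map<String, Integer> combined = combine(List.of("car", "car", "river", "car", "bear"));
        System.out.println(combined);            // {bear=1, car=3, river=1}
        System.out.println(route(combined, 3));  // [{river=1}, {car=3}, {bear=1}]
    }
}
```

Because the partition depends only on the key, every count for a given word ends up at the same reducer, which is what makes the final sums correct.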

Ex. No. 2:

AIM:

Word count program to demonstrate the use of Map and Reduce tasks

STEPS:

1. Analyze the input file content

2. Develop the code

a. Writing a map function

b. Writing a reduce function

c. Writing the Driver class

3. Compiling the source

4. Building the JAR file

5. Starting the DFS

6. Creating the input path in HDFS and moving the data into it

7. Executing the program

$ cd ~

$ mkdir wordcount

$ cd wordcount

$ nano WordCount.java

Wordcount program:

import java.io.IOException;
import java.util.Iterator;
import java.util.StringTokenizer;

import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapred.*; // classic MapReduce API: JobConf, JobClient, Mapper, Reducer, input/output formats

public class WordCount
{
    // Mapper: for each input line, emit (word, 1) for every whitespace-separated token
    public static class Map extends MapReduceBase
            implements Mapper<LongWritable, Text, Text, IntWritable>
    {
        private final static IntWritable one = new IntWritable(1);
        private Text word = new Text();

        public void map(LongWritable key, Text value,
                        OutputCollector<Text, IntWritable> output, Reporter reporter)
                throws IOException
        {
            String line = value.toString();
            StringTokenizer tokenizer = new StringTokenizer(line);
            while (tokenizer.hasMoreTokens())
            {
                word.set(tokenizer.nextToken());
                output.collect(word, one);
            }
        }
    }

    // Reducer (also registered as the combiner): sum all counts received for one word
    public static class Reduce extends MapReduceBase
            implements Reducer<Text, IntWritable, Text, IntWritable>
    {
        public void reduce(Text key, Iterator<IntWritable> values,
                           OutputCollector<Text, IntWritable> output, Reporter reporter)
                throws IOException
        {
            int sum = 0;
            while (values.hasNext())
            {
                sum += values.next().get();
            }
            output.collect(key, new IntWritable(sum));
        }
    }

    // Driver: configure and submit the job; args[0] = input path, args[1] = output path
    public static void main(String[] args) throws Exception
    {
        JobConf conf = new JobConf(WordCount.class);
        conf.setJobName("wordcount");
        conf.setOutputKeyClass(Text.class);
        conf.setOutputValueClass(IntWritable.class);
        conf.setMapperClass(Map.class);
        conf.setCombinerClass(Reduce.class);
        conf.setReducerClass(Reduce.class);
        conf.setInputFormat(TextInputFormat.class);
        conf.setOutputFormat(TextOutputFormat.class);
        FileInputFormat.setInputPaths(conf, new Path(args[0]));
        FileOutputFormat.setOutputPath(conf, new Path(args[1]));
        JobClient.runJob(conf);
    }
}
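The mapper splits lines with java.util.StringTokenizer, which breaks only on whitespace, so punctuation and case become part of the key: "step." and "step", or "Data" and "data", are counted as different words. The plain-Java sketch below repeats the mapper's tokenizing loop on part of the sample text used in this exercise. The normalise method is a hypothetical extension, not part of the lab program, showing one way such variants could be merged.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.StringTokenizer;

// Shows which keys the Map class emits for one line of input.
// StringTokenizer's default delimiters are space, tab, newline,
// carriage return and form feed, exactly as in the mapper.
class TokenizerCheck {

    // Same loop as WordCount.Map.map(), collecting tokens instead of emitting them.
    static List<String> mapperKeys(String line) {
        List<String> keys = new ArrayList<>();
        StringTokenizer tokenizer = new StringTokenizer(line);
        while (tokenizer.hasMoreTokens()) {
            keys.add(tokenizer.nextToken());
        }
        return keys;
    }

    // Hypothetical normalisation (not in the lab program): lower-case the token
    // and strip leading/trailing characters that are neither letters nor digits.
    static String normalise(String token) {
        return token.toLowerCase(Locale.ROOT)
                    .replaceAll("^[^\\p{L}\\p{N}]+|[^\\p{L}\\p{N}]+$", "");
    }

    public static void main(String[] args) {
        List<String> keys = mapperKeys("Data transformation and pre-processing step.");
        System.out.println(keys); // [Data, transformation, and, pre-processing, step.]
        List<String> cleaned = new ArrayList<>();
        for (String k : keys) {
            cleaned.add(normalise(k));
        }
        System.out.println(cleaned); // [data, transformation, and, pre-processing, step]
    }
}
```

Applying such a normalisation inside map() before word.set(...) would change the counts, so it is a design decision for the exercise rather than a fix.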

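Start the Hadoop daemons (HDFS and YARN)

$ start-all.sh

On Hadoop 2 and later, start-all.sh is deprecated; running start-dfs.sh and then start-yarn.sh has the same effect.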

To check the Hadoop classpath

$ hadoop classpath

Sample output (the paths depend on the installation):

/usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/*:/usr/local/hadoop/share/hadoop/common/*:/usr/local/hadoop/share/hadoop/hdfs:/usr/local/hadoop/share/hadoop/hdfs/lib/*:/usr/local/hadoop/share/hadoop/hdfs/*:/usr/local/hadoop/share/hadoop/mapreduce/*:/usr/local/hadoop/share/hadoop/yarn:/usr/local/hadoop/share/hadoop/yarn/lib/*:/usr/local/hadoop/share/hadoop/yarn/*

If the folder 'wordcount' was created with sudo, make your user its owner so you can write to it

$ sudo chown -R $USER ~/wordcount

Compile the program with javac, putting the Hadoop libraries on the classpath

$ cd ~/wordcount

$ javac -cp $(hadoop classpath) WordCount.java

Three Java class files are created: WordCount.class, WordCount$Map.class and WordCount$Reduce.class. To check, type

$ ls

Create a JAR file that bundles these class files

$ jar cf wc.jar WordCount*.class

Create the input and output directories in the Hadoop file system (HDFS)

$ hadoop fs -mkdir /input

$ hadoop fs -mkdir /output

$ hadoop fs -ls /

Create a text file and copy it to the input folder in HDFS

$ nano hello.txt

Sample contents of hello.txt:

Data transformation and pre-processing step. Data is input in terms of key value pairs and after processing is sent to the reduce phase.

$ hadoop fs -put hello.txt /input

To list all files and folders recursively

$ hadoop fs -ls -R /

(The older form hadoop fs -lsr / is deprecated.)

Execute the program

$ hadoop jar wc.jar WordCount /input /output/out1

The last two arguments are the input directory, which holds the input file, and the output directory, where the results are written into the folder 'out1'. 'out1' must not exist before the job runs; if it does, the job fails instead of overwriting it.

The output is written to the file part-00000 inside out1.

To check your output

$ hadoop fs -cat /output/out1/part-00000
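Since this job runs with a single reducer by default, all results land in part-00000, one line per word: the key, a tab, then the count, with keys in sorted order. The sketch below, in plain Java with a hypothetical class name, works out locally what that file should contain for a given input text, so the HDFS output can be checked by eye or with diff. It assumes ASCII input, for which Java's String ordering matches the byte-wise ordering Hadoop uses for Text keys.

```java
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Computes locally what part-00000 should contain for a given input text.
// Assumes ASCII text and a single reducer (hence a single output file).
class ExpectedOutput {

    static String expectedPart(String text) {
        Map<String, Integer> counts = new TreeMap<>(); // sorted keys, like reducer input
        StringTokenizer tokenizer = new StringTokenizer(text); // same tokenizer as the mapper
        while (tokenizer.hasMoreTokens()) {
            counts.merge(tokenizer.nextToken(), 1, Integer::sum);
        }
        StringBuilder out = new StringBuilder();
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            // TextOutputFormat writes the key, a tab, then the value on each line
            out.append(e.getKey()).append('\t').append(e.getValue()).append('\n');
        }
        return out.toString();
    }

    public static void main(String[] args) {
        String hello = "Data transformation and pre-processing step. Data is input in terms of key value pairs and\n"
                + "after processing is sent to the reduce phase.\n";
        System.out.print(expectedPart(hello));
    }
}
```

For the sample hello.txt, the first lines are Data 2, after 1 and and 2 (tab-separated), because uppercase letters sort before lowercase ones.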

If any errors occur

1. Edit the file hadoop-env.sh in /usr/local/hadoop/etc/hadoop and add the Hadoop native library directory to LD_LIBRARY_PATH:

export LD_LIBRARY_PATH=/usr/local/hadoop/lib/native/:$LD_LIBRARY_PATH

2. Add the native library directory to the Java library path in ~/.bashrc:

export HADOOP_OPTS="-Djava.library.path=$HADOOP_INSTALL/lib/native"

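3. For Java-related errors, add the following line to hadoop-env.sh, after the case statement "case ${HADOOP_OS_TYPE}":

export HADOOP_OPTS="${HADOOP_OPTS} --add-modules java.activation"

This option applies only to Java 9 and 10; the java.activation module was removed in Java 11.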

4. For errors in YARN, edit /usr/local/hadoop/etc/hadoop/yarn-site.xml:

sudo gedit /usr/local/hadoop/etc/hadoop/yarn-site.xml

Add the following properties inside the configuration tag of that file:

<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>
<property>
  <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
  <value>org.apache.hadoop.mapred.ShuffleHandler</value>
</property>

Steps to rectify SSH errors

If the SSH connection fails, these general tips might help:

● Enable debugging with ssh -vvv localhost and investigate the error in detail.

● Check the SSH server configuration in /etc/ssh/sshd_config, in particular the options PubkeyAuthentication (which should be set to yes) and AllowUsers (if this option is active, add the hduser user to it). If you made any changes to the SSH server configuration file, you can force a configuration reload with sudo /etc/init.d/ssh reload.

Вам также может понравиться

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- 82001Документ85 страниц82001Mohammed ThawfeeqОценок пока нет

- Interface Design: Easy To Use? Easy To Understand? Easy To Learn?Документ25 страницInterface Design: Easy To Use? Easy To Understand? Easy To Learn?Mohammed ThawfeeqОценок пока нет

- Ex - No:9 Construction of Dag: ProgramДокумент8 страницEx - No:9 Construction of Dag: ProgramMohammed ThawfeeqОценок пока нет

- EXNO: 11 Foreign Trading System Date: AIM: Mohammed Thawfeeq 311115205036Документ6 страницEXNO: 11 Foreign Trading System Date: AIM: Mohammed Thawfeeq 311115205036Mohammed ThawfeeqОценок пока нет

- T:T-Yuu,: ,.XR Q. Hi Tr,. :,: ,) DH ' TДокумент13 страницT:T-Yuu,: ,.XR Q. Hi Tr,. :,: ,) DH ' TMohammed ThawfeeqОценок пока нет

- Cs2357-Ooad Lab ManualДокумент199 страницCs2357-Ooad Lab ManualMohammed Thawfeeq0% (1)

- Cy2151-Engineering Chemistry-I Question Bank Part-B (16 Marks) Unit-I Polymer ChemistryДокумент2 страницыCy2151-Engineering Chemistry-I Question Bank Part-B (16 Marks) Unit-I Polymer ChemistryMohammed ThawfeeqОценок пока нет

- Write A Function That Returns A Pointer To The Maximum Value of An Array of Double's. If The Array Is Empty, Return NULLДокумент3 страницыWrite A Function That Returns A Pointer To The Maximum Value of An Array of Double's. If The Array Is Empty, Return NULLMohammed ThawfeeqОценок пока нет

- DBMS Lab Manual PDFДокумент83 страницыDBMS Lab Manual PDFMohammed Thawfeeq100% (1)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (400)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (895)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (266)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (345)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (121)

- SMS Text Messaging (13.50A/70) : Click On The Chapter Titles Below To View ThemДокумент56 страницSMS Text Messaging (13.50A/70) : Click On The Chapter Titles Below To View ThemtekleyОценок пока нет

- Computer Architecture Cache DesignДокумент28 страницComputer Architecture Cache Designprahallad_reddy100% (3)

- Culvert ManualДокумент124 страницыCulvert Manualpelika_sueОценок пока нет

- Welded Simple Connection: Based On Block Shear Capacity ofДокумент12 страницWelded Simple Connection: Based On Block Shear Capacity ofhazelОценок пока нет

- Terostat Ms 930-EnДокумент4 страницыTerostat Ms 930-Enken philipsОценок пока нет

- Weld PreparationДокумент4 страницыWeld PreparationHelier Valdivia GuardiaОценок пока нет

- NSN Lte ParameterДокумент44 страницыNSN Lte ParameterRocky100% (1)

- APKTOP Pagina 1Документ3 страницыAPKTOP Pagina 1r4t3t3Оценок пока нет

- G + 5 (Calculation Details)Документ104 страницыG + 5 (Calculation Details)shashikantcivil025Оценок пока нет

- Call For Applications: Young Urban Designers and Architects ProgrammeДокумент2 страницыCall For Applications: Young Urban Designers and Architects ProgrammeesamridhОценок пока нет

- The Family Handyman Handy Hints 2009Документ32 страницыThe Family Handyman Handy Hints 2009Wolf Flow Red100% (5)

- CMS Implementation PlanДокумент16 страницCMS Implementation PlanpranaypaiОценок пока нет

- Infocomm 2014 KoftinoffДокумент63 страницыInfocomm 2014 KoftinoffSellappan MuthusamyОценок пока нет

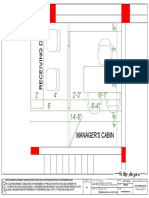

- Receiving Desk - Layout PlanДокумент1 страницаReceiving Desk - Layout Planrohit guptaОценок пока нет

- Document Management Systems - Wiki - SCN WikiДокумент4 страницыDocument Management Systems - Wiki - SCN Wikimatrix20102011Оценок пока нет

- Art Appreciation - Prelim ReviewerДокумент9 страницArt Appreciation - Prelim ReviewerSab Sadorra100% (1)

- Differential ShrinkageДокумент2 страницыDifferential ShrinkageHanamantrao KhasnisОценок пока нет

- Diskless CloningДокумент8 страницDiskless CloningArvie CaagaoОценок пока нет

- Avaya Switch ManualДокумент538 страницAvaya Switch ManualClay_BerloОценок пока нет

- Well FoundationДокумент12 страницWell FoundationNivedita Godara C-920Оценок пока нет

- Manual HLB 860 EnglishДокумент14 страницManual HLB 860 EnglishAlexandra UrruelaОценок пока нет

- English Quick Guide 2001Документ3 страницыEnglish Quick Guide 2001Dony BvsОценок пока нет

- Teradada 13 Installation GuideДокумент11 страницTeradada 13 Installation GuideAmit SharmaОценок пока нет

- Code Project Article WMICodeCreator and USB Serial FinderДокумент3 страницыCode Project Article WMICodeCreator and USB Serial FinderJoel BharathОценок пока нет

- Precast ConcreteДокумент13 страницPrecast ConcreteShokhieb Showbad CarriebОценок пока нет

- Advertising Data AnalysisДокумент12 страницAdvertising Data AnalysisVishal SharmaОценок пока нет

- ACreferencias BibliográficasДокумент3 страницыACreferencias BibliográficasSenialsell Lains GuillenОценок пока нет

- Turfpave HDДокумент2 страницыTurfpave HDAnonymous 1uGSx8bОценок пока нет

- LED Solutions Catalogue 2011Документ537 страницLED Solutions Catalogue 2011stewart_douglas574Оценок пока нет

- Beumer HD Gurtbecherwerk GBДокумент8 страницBeumer HD Gurtbecherwerk GBrimarima2barОценок пока нет