Академический Документы

Профессиональный Документы

Культура Документы

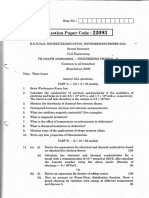

Ec1311 Nol

Загружено:

msraiИсходное описание:

Оригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Ec1311 Nol

Загружено:

msraiАвторское право:

Доступные форматы

EC1311-Communication Engineering

UNIT 1

MODULATION SYSTEMS

S.Venkatanarayanan M.E.,M.B.A.,M.Phil.,/KLNCE/EEE venjey@yahoo.co.uk

MODULATION & DEMODULATION

In telecommunications, modulation is the process of varying a periodic waveform (a tone) so that it conveys a message, much as a musician modulates the tone of an instrument by varying its volume, timing and pitch. Normally a high-frequency sinusoid is used as the carrier signal. The three key parameters of a sine wave are its amplitude ("volume"), its phase ("timing") and its frequency ("pitch"), all of which can be modified in accordance with a low-frequency information signal to obtain the modulated signal.

A device that performs modulation is known as a modulator and a device that performs

the inverse operation of modulation is known as a demodulator (sometimes detector or

demod). A device that can do both operations is a modem (short for "MOdulator-DEModulator").

A simple example: a telephone line is designed for transferring audible sounds, for example tones, and not digital bits (zeros and ones). Computers may nevertheless communicate over a telephone line by means of modems, which represent the digital bits by tones, called symbols. You can say that modems play music for each other. If there are four alternative symbols (corresponding to a musical instrument that can generate four different tones, one at a time), the first symbol may represent the bit sequence 00, the second 01, the third 10 and the fourth 11. If the modem plays a melody consisting of 1000 tones per second, the symbol rate is 1000 symbols/second, or baud. Since each tone represents a message consisting of two digital bits in this example, the bit rate is twice the symbol rate, i.e. 2000 bits per second.
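The four-tone example can be sketched in a few lines of Python. This is only an illustration: the tone frequencies in `TONES_HZ` are hypothetical values chosen to sit inside a telephone channel, and the function name is an assumption, not part of any real modem standard.

```python
def bits_to_symbols(bits, bits_per_symbol=2):
    """Group a bit string into symbol indices (00 -> 0, 01 -> 1, 10 -> 2, 11 -> 3)."""
    if len(bits) % bits_per_symbol:
        raise ValueError("bit count must be a multiple of bits_per_symbol")
    return [int(bits[i:i + bits_per_symbol], 2)
            for i in range(0, len(bits), bits_per_symbol)]

# Hypothetical tone frequencies in Hz, one per symbol (illustrative values only).
TONES_HZ = [600, 1200, 1800, 2400]

symbols = bits_to_symbols("00011011")      # four symbols, two bits each
tones = [TONES_HZ[s] for s in symbols]     # the "melody" the modem would play

symbol_rate = 1000                         # symbols per second (baud)
bit_rate = symbol_rate * 2                 # two bits carried by every tone
```

With 1000 tones per second and two bits per tone, `bit_rate` comes out as the 2000 bits per second quoted in the example.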

The aim of modulation

The aim of digital modulation is to transfer a digital bit stream over an analog bandpass

channel, for example over the public switched telephone network (where a filter limits

the frequency range to between 300 and 3400 Hz) or a limited radio frequency band.

The aim of analog modulation is to transfer an analog lowpass signal, for example an

audio signal or TV signal, over an analog bandpass channel, for example a limited radio

frequency band or a cable TV network channel.

Analog and digital modulation facilitate frequency division multiplex (FDM), where

several low pass information signals are transferred simultaneously over the same shared

physical medium, using separate bandpass channels.

The aim of digital baseband modulation methods, also known as line coding, is to

transfer a digital bit stream over a lowpass channel, typically a non-filtered copper wire

such as a serial bus or a wired local area network.

The aim of pulse modulation methods is to transfer a narrowband analog signal, for example a phone call, over a wideband lowpass channel or, in some of the schemes, as a bit stream over another digital transmission system.

Analog modulation methods

In analog modulation, the modulation is applied continuously in response to the analog

information signal.

A low-frequency message signal (top) may be carried by an AM or FM radio wave.

Common analog modulation techniques are:

• Amplitude modulation (AM) (here the amplitude of the modulated signal is varied)

  o Double-sideband modulation (DSB)

    ▪ Double-sideband modulation with unsuppressed carrier (DSB-WC) (used on the AM radio broadcasting band)

    ▪ Double-sideband suppressed-carrier transmission (DSB-SC)

    ▪ Double-sideband reduced carrier transmission (DSB-RC)

  o Single-sideband modulation (SSB, or SSB-AM)

    ▪ SSB with carrier (SSB-WC)

    ▪ SSB suppressed carrier modulation (SSB-SC)

  o Vestigial sideband modulation (VSB, or VSB-AM)

  o Quadrature amplitude modulation (QAM)

• Angle modulation

  o Frequency modulation (FM) (here the frequency of the modulated signal is varied)

  o Phase modulation (PM) (here the phase shift of the modulated signal is varied)

Digital modulation methods

In digital modulation, an analog carrier signal is modulated by a digital bit stream. Digital

modulation methods can be considered as digital-to-analog conversion, and the

corresponding demodulation or detection as analog-to-digital conversion. The changes in

the carrier signal are chosen from a finite number of M alternative symbols (the

modulation alphabet).

Fundamental digital modulation methods

These are the most fundamental digital modulation techniques:

• In the case of PSK, a finite number of phases are used.

• In the case of FSK, a finite number of frequencies are used.

• In the case of ASK, a finite number of amplitudes are used.

• In the case of QAM, a finite number of at least two phases, and at least two

amplitudes are used.

In QAM, an inphase signal (the I signal, for example a cosine waveform) and a

quadrature phase signal (the Q signal, for example a sine wave) are amplitude modulated

with a finite number of amplitudes, and summed. It can be seen as a two-channel system,

each channel using ASK. The resulting signal is equivalent to a combination of PSK and

ASK.

In all of the above methods, each of these phases, frequencies or amplitudes is assigned a unique pattern of binary bits. Usually, each phase, frequency or amplitude encodes an equal number of bits. This group of bits comprises the symbol that is represented by the particular phase, frequency or amplitude.

If the alphabet consists of $M = 2^N$ alternative symbols, each symbol represents a message consisting of $N$ bits. If the symbol rate (also known as the baud rate) is $f_S$ symbols/second (or baud), the data rate is $N f_S$ bit/second.

For example, with an alphabet consisting of 16 alternative symbols, each symbol

represents 4 bits. Thus, the data rate is four times the baud rate.
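The relation between alphabet size, symbol rate and data rate can be checked numerically. The helper names below are illustrative assumptions, and the 2400 baud figure is an arbitrary example value.

```python
def bits_per_symbol(alphabet_size):
    """Return N such that M = 2**N; M must be a power of two."""
    n = alphabet_size.bit_length() - 1
    if alphabet_size < 2 or (1 << n) != alphabet_size:
        raise ValueError("alphabet size must be a power of two, at least 2")
    return n

def data_rate(alphabet_size, symbol_rate):
    """Data rate in bit/s = N * f_S for an alphabet of M = 2**N symbols."""
    return bits_per_symbol(alphabet_size) * symbol_rate

n_16 = bits_per_symbol(16)          # bits carried by each of 16 symbols
rate_16 = data_rate(16, 2400)       # example: 16 symbols at 2400 baud
```

Here `n_16` is 4, so the data rate is four times the baud rate, as stated above.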

In the case of PSK, ASK or QAM, where the carrier frequency of the modulated signal is constant, the modulation alphabet is often conveniently represented on a constellation diagram, showing the amplitude of the I signal on the x-axis and the amplitude of the Q signal on the y-axis for each symbol.

Modulator and detector principles of operation

PSK and ASK, and sometimes also FSK, are often generated and detected using the

principle of QAM. The I and Q signals can be combined into a complex-valued signal

I+jQ (where j is the imaginary unit). The resulting so-called equivalent lowpass signal or equivalent baseband signal is a representation of the real-valued modulated physical signal (the so-called passband signal or RF signal).

These are the general steps used by the modulator to transmit data:

1. Group the incoming data bits into codewords, one for each symbol that will be transmitted.

2. Map the codewords to attributes, for example amplitudes of the I and Q signals (the equivalent lowpass signal), or frequency or phase values.

3. Apply pulse shaping or some other filtering to limit the bandwidth and shape the spectrum of the equivalent lowpass signal, typically using digital signal processing.

4. Perform digital-to-analog conversion (DAC) of the I and Q signals (since today all of the above is normally achieved using digital signal processing, DSP).

5. Generate a high-frequency sine wave carrier and, where needed, a cosine quadrature component. Carry out the modulation, for example by multiplying the sine and cosine waveforms by the I and Q signals, so that the equivalent lowpass signal is frequency shifted into a modulated passband signal or RF signal. Sometimes this is achieved using DSP technology, for example direct digital synthesis using a waveform table, instead of analog signal processing. In that case the DAC step above is performed after this step.

6. Amplify the signal and apply analog bandpass filtering to avoid harmonic distortion and a periodic spectrum.
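Steps 1, 2 and 5 above can be sketched for QPSK as follows. This is a minimal illustration under stated assumptions: the Gray-coded mapping in `QPSK_MAP` is one common choice rather than anything prescribed here, the pulse shape is a plain rectangle (step 3 is skipped), and the DAC, amplifier and filter stages are not modelled.

```python
import math

# Assumed Gray-coded QPSK mapping: 2-bit codeword -> (I, Q) amplitudes.
QPSK_MAP = {"00": (1, 1), "01": (-1, 1), "11": (-1, -1), "10": (1, -1)}

def qpsk_modulate(bits, carrier_hz, symbol_rate, sample_rate):
    """Return passband samples s(t) = I*cos(2*pi*fc*t) - Q*sin(2*pi*fc*t)."""
    if len(bits) % 2:
        raise ValueError("QPSK needs an even number of bits")
    samples_per_symbol = sample_rate // symbol_rate
    out = []
    for k in range(0, len(bits), 2):                 # step 1: group bits into codewords
        i_amp, q_amp = QPSK_MAP[bits[k:k + 2]]       # step 2: map codeword to I and Q
        for n in range(samples_per_symbol):          # rectangular pulse, no shaping filter
            t = (k // 2 * samples_per_symbol + n) / sample_rate
            phase = 2 * math.pi * carrier_hz * t
            # step 5: multiply the carriers by I and Q and sum
            out.append(i_amp * math.cos(phase) - q_amp * math.sin(phase))
    return out

signal = qpsk_modulate("0011", carrier_hz=2000, symbol_rate=1000, sample_rate=16000)
```

Because every (I, Q) pair in this mapping has the same magnitude, the envelope of `signal` stays constant and only the carrier phase changes from symbol to symbol.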

At the receiver side, the demodulator typically performs:

1. Bandpass filtering.

2. Automatic gain control, AGC (to compensate for attenuation, for example fading).

3. Frequency shifting of the RF signal to the equivalent baseband I and Q signals, or to an intermediate frequency (IF) signal, by multiplying the RF signal by local oscillator sine and cosine waves (see the superheterodyne receiver principle).

4. Sampling and analog-to-digital conversion (ADC) (sometimes before or instead of the above step, for example by means of undersampling).

5. Equalization filtering, for example a matched filter, compensation for multipath propagation, time spreading, phase distortion and frequency selective fading, to avoid intersymbol interference and symbol distortion.

6. Detection of the amplitudes of the I and Q signals, or the frequency or phase of the IF signal.

7. Quantization of the amplitudes, frequencies or phases to the nearest allowed symbol values.

8. Mapping of the quantized amplitudes, frequencies or phases to codewords (bit groups).

9. Parallel-to-serial conversion of the codewords into a bit stream.

10. Passing the resultant bit stream on for further processing, such as removal of any error-correcting codes.
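Steps 7 to 9 of the receiver can be illustrated with a nearest-symbol decision. The sketch assumes the I and Q amplitudes have already been recovered by the earlier stages, and it reuses an assumed Gray-coded QPSK mapping; the received values are made up for the example.

```python
# Assumed Gray-coded QPSK mapping: 2-bit codeword -> ideal (I, Q) amplitudes.
QPSK_MAP = {"00": (1, 1), "01": (-1, 1), "11": (-1, -1), "10": (1, -1)}

def detect(iq_samples):
    """Quantize each noisy (I, Q) pair to the nearest symbol and emit its codeword."""
    codewords = []
    for i_rx, q_rx in iq_samples:
        nearest = min(
            QPSK_MAP,
            key=lambda cw: (QPSK_MAP[cw][0] - i_rx) ** 2 + (QPSK_MAP[cw][1] - q_rx) ** 2,
        )
        codewords.append(nearest)        # step 8: quantized symbol -> codeword
    return "".join(codewords)            # step 9: codewords -> serial bit stream

# Illustrative noisy measurements, one (I, Q) pair per received symbol.
received = [(0.9, 1.2), (-1.1, 0.7), (-0.8, -1.3), (0.6, -0.9)]
bit_stream = detect(received)
```

Each measurement falls closest to a different constellation point, so `bit_stream` is the concatenation of the four codewords in the order they were received.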

As is common to all digital communication systems, the design of both the modulator and

demodulator must be done simultaneously. Digital modulation schemes are possible

because the transmitter-receiver pair have prior knowledge of how data is encoded and

represented in the communications system. In all digital communication systems, both

the modulator at the transmitter and the demodulator at the receiver are structured so that

they perform inverse operations.

Non-coherent modulation methods do not require a receiver reference clock signal that is

phase synchronized with the sender carrier wave. In this case, modulation symbols (rather

than bits, characters, or data packets) are asynchronously transferred. The opposite is

coherent modulation.

List of common digital modulation techniques

The most common digital modulation techniques are:

• Phase-shift keying (PSK):

o Binary PSK (BPSK), using M=2 symbols

o Quadrature PSK (QPSK), using M=4 symbols

o 8PSK, using M=8 symbols

o 16PSK, using M=16 symbols

o Differential PSK (DPSK)

o Differential QPSK (DQPSK)

o Offset QPSK (OQPSK)

o π/4–QPSK

• Frequency-shift keying (FSK):

o Audio frequency-shift keying (AFSK)

o Multi-frequency shift keying (M-ary FSK or MFSK)

o Dual-tone multi-frequency (DTMF)

o Continuous-phase frequency-shift keying (CPFSK)

• Amplitude-shift keying (ASK):

o On-off keying (OOK), the most common ASK form

o M-ary vestigial sideband modulation, for example 8VSB

• Quadrature amplitude modulation (QAM) - a combination of PSK and ASK:

o Polar modulation, which like QAM is a combination of PSK and ASK

• Continuous phase modulation (CPM) methods:

o Minimum-shift keying (MSK)

o Gaussian minimum-shift keying (GMSK)

• Orthogonal frequency division multiplexing (OFDM) modulation:

o discrete multitone (DMT) - including adaptive modulation and bit-loading.

• Wavelet modulation

• Trellis coded modulation (TCM), also known as trellis modulation

See also spread spectrum and digital pulse modulation methods.

MSK and GMSK are particular cases of continuous phase modulation (CPM). Indeed,

MSK is a particular case of the sub-family of CPM known as continuous-phase

frequency-shift keying (CPFSK) which is defined by a rectangular frequency pulse (i.e. a

linearly increasing phase pulse) of one symbol-time duration (total response signaling).

OFDM is based on the idea of Frequency Division Multiplex (FDM), but is utilized as a

digital modulation scheme. The bit stream is split into several parallel data streams, each

transferred over its own sub-carrier using some conventional digital modulation scheme.

The modulated sub-carriers are summed to form an OFDM signal. OFDM is considered

as a modulation technique rather than a multiplex technique, since it transfers one bit

stream over one communication channel using one sequence of so-called OFDM

symbols. OFDM can be extended to a multi-user channel access method in the Orthogonal Frequency Division Multiple Access (OFDMA) and MC-OFDM schemes, allowing several users to share the same physical medium by giving different sub-carriers or spreading codes to different users.

Of the two kinds of RF power amplifier, switching amplifiers (Class C amplifiers) cost less and use less battery power than linear amplifiers of the same output power. However, they only work with relatively constant-amplitude modulation signals such as angle modulation (FSK or PSK) and CDMA, but not with QAM and OFDM. Nevertheless, even though switching amplifiers are completely unsuitable for normal QAM constellations, the QAM modulation principle is often used to drive switching amplifiers with these FM and other waveforms, and QAM demodulators are sometimes used to receive the signals put out by these switching amplifiers.

Digital baseband modulation or line coding

The term digital baseband modulation is synonymous with line codes, which are methods to transfer a digital bit stream over an analog lowpass channel using a pulse train, i.e. a discrete number of signal levels, by directly modulating the voltage or current on a cable. Common examples are unipolar, non-return-to-zero (NRZ), Manchester and alternate mark inversion (AMI) coding.
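The line codes named above can be sketched as simple bit-to-level mappings. The signal levels (+1, 0, -1) are normalized illustration values, and the Manchester polarity follows the IEEE 802.3 convention, which is an assumption since other texts use the opposite one.

```python
def unipolar(bits):
    """Unipolar: 1 -> +1, 0 -> 0."""
    return [1 if b == "1" else 0 for b in bits]

def nrz(bits):
    """Polar NRZ: 1 -> +1, 0 -> -1, level held for the whole bit period."""
    return [1 if b == "1" else -1 for b in bits]

def manchester(bits):
    """Two half-bit levels per bit (IEEE 802.3 convention assumed):
    1 -> low then high, 0 -> high then low."""
    out = []
    for b in bits:
        out += [-1, 1] if b == "1" else [1, -1]
    return out

def ami(bits):
    """Alternate mark inversion: 0 -> 0, successive 1s alternate between +1 and -1."""
    out, polarity = [], 1
    for b in bits:
        if b == "1":
            out.append(polarity)
            polarity = -polarity
        else:
            out.append(0)
    return out
```

For the bit string `"1011"`, AMI produces the levels +1, 0, -1, +1, which keeps the average voltage on the cable close to zero; Manchester achieves the same by guaranteeing a transition in the middle of every bit.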

Pulse modulation methods

Pulse modulation schemes aim at transferring a narrowband analog signal over an analog

lowpass channel as a two-level quantized signal, by modulating a pulse train. Some pulse

modulation schemes also allow the narrowband analog signal to be transferred as a digital

signal (i.e. as a quantized discrete-time signal) with a fixed bit rate, which can be

transferred over an underlying digital transmission system, for example some line code.

They are not modulation schemes in the conventional sense since they are not channel

coding schemes, but should be considered as source coding schemes, and in some cases

analog-to-digital conversion techniques.

• Pulse-code modulation (PCM) (Analog-over-digital)

• Pulse-width modulation (PWM) (Analog-over-analog)

• Pulse-amplitude modulation (PAM) (Analog-over-analog)

• Pulse-position modulation (PPM) (Analog-over-analog)

• Pulse-density modulation (PDM) (Analog-over-analog)

• Sigma-delta modulation (ΣΔ) (Analog-over-digital)

• Adaptive-delta modulation (ADM) (Analog-over-digital)

Direct-sequence spread spectrum (DSSS) is based on pulse-amplitude modulation.

UNIT 2

TRANSMISSION MEDIUM

A transmission line is the material medium or structure that forms all or part of a path

from one place to another for directing the transmission of energy, such as

electromagnetic waves or acoustic waves, as well as electric power transmission.

Components of transmission lines include wires, coaxial cables, dielectric slabs, optical

fibers, electric power lines, and waveguides.

The four terminal model

Variations on the schematic electronic symbol for a transmission line.

For the purposes of analysis, an electrical transmission line can be modelled as a two-port

network (also called a quadrupole network), as follows:

In the simplest case, the network is assumed to be linear (i.e. the complex voltage across

either port is proportional to the complex current flowing into it when there are no

reflections), and the two ports are assumed to be interchangeable. If the transmission line

is uniform along its length, then its behaviour is largely described by a single parameter

called the characteristic impedance, symbol Z0. This is the ratio of the complex voltage

of a given wave to the complex current of the same wave at any point on the line. Typical

values of Z0 are 50 or 75 ohms for a coaxial cable, about 100 ohms for a twisted pair of

wires, and about 300 ohms for a common type of untwisted pair used in radio

transmission.

When sending power down a transmission line, it is usually desirable that as much power

as possible will be absorbed by the load and as little as possible will be reflected back to

the source. This can be ensured by making the source and load impedances equal to Z0, in which case the transmission line is said to be matched.
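How much power a mismatched load reflects can be estimated with the standard voltage reflection coefficient, Γ = (ZL − Z0) / (ZL + Z0). This relation is not stated in the text above and is brought in here as an assumption; the function names and the 50 ohm default are illustrative.

```python
def reflection_coefficient(z_load, z0=50.0):
    """Voltage reflection coefficient at the load; accepts real or complex impedances."""
    return (z_load - z0) / (z_load + z0)

def reflected_power_fraction(z_load, z0=50.0):
    """Fraction of the incident power reflected back toward the source."""
    return abs(reflection_coefficient(z_load, z0)) ** 2

matched = reflected_power_fraction(50.0)        # load equal to Z0
mismatched = reflected_power_fraction(75.0)     # 75 ohm load on a 50 ohm line
```

A load equal to Z0 reflects nothing, while a 75 ohm load on a 50 ohm line reflects about 4% of the incident power.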

Some of the power that is fed into a transmission line is lost because of its resistance.

This effect is called ohmic or resistive loss (see ohmic heating). At high frequencies,

another effect called dielectric loss becomes significant, adding to the losses caused by

resistance. Dielectric loss is caused when the insulating material inside the transmission

line absorbs energy from the alternating electric field and converts it to heat (see

dielectric heating).

The total loss of power in a transmission line is often specified in decibels per metre

(dB/m), and usually depends on the frequency of the signal. The manufacturer often

supplies a chart showing the loss in dB/m at a range of frequencies. A loss of 3 dB

corresponds approximately to a halving of the power.
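Converting a dB/m figure into the fraction of power that survives a given cable run is a one-line calculation. The loss value of 0.1 dB/m below is a made-up example, not a figure for any particular cable.

```python
def power_fraction_remaining(loss_db_per_m, length_m):
    """Fraction of input power left after a line with the given loss per metre."""
    total_loss_db = loss_db_per_m * length_m
    return 10 ** (-total_loss_db / 10)

after_30_m = power_fraction_remaining(0.1, 30)     # 3 dB total loss
after_100_m = power_fraction_remaining(0.1, 100)   # 10 dB total loss
```

A total loss of 3 dB leaves just over half of the power, and 10 dB leaves one tenth.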

High-frequency transmission lines can be defined as transmission lines that are designed

to carry electromagnetic waves whose wavelengths are shorter than or comparable to the

length of the line. Under these conditions, the approximations useful for calculations at

lower frequencies are no longer accurate. This often occurs with radio, microwave and

optical signals, and with the signals found in high-speed digital circuits.

LOSSES IN TRANSMISSION LINES

The discussion of transmission lines so far has not directly addressed

LINE LOSSES; actually some line losses occur in all lines. Line losses

may be any of three types - COPPER, DIELECTRIC, and RADIATION or

INDUCTION LOSSES.

NOTE: Transmission lines are sometimes referred to as rf lines. In this

text the terms are used interchangeably.

Copper Losses

One type of copper loss is I²R LOSS. In rf lines the resistance of the

conductors is never equal to zero. Whenever current flows through one

of these conductors, some energy is dissipated in the form of heat.

This heat loss is a POWER LOSS. With copper braid, which has a

resistance higher than solid tubing, this power loss is higher.

Another type of copper loss is due to SKIN EFFECT. When dc flows

through a conductor, the movement of electrons through the conductor's

cross section is uniform. The situation is somewhat different when ac

is applied. The expanding and collapsing fields about each electron

encircle other electrons. This phenomenon, called SELF INDUCTION,

retards the movement of the encircled electrons. The flux density at

the center is so great that electron movement at this point is reduced.

As frequency is increased, the opposition to the flow of current in the

center of the wire increases. Current in the center of the wire becomes

smaller and most of the electron flow is on the wire surface. When the

frequency applied is 100 megahertz or higher, the electron movement in

the center is so small that the center of the wire could be removed

without any noticeable effect on current. You should be able to see

that the effective cross-sectional area decreases as the frequency

increases. Since resistance is inversely proportional to the cross-

sectional area, the resistance will increase as the frequency is

increased. Also, since power loss increases as resistance increases,

power losses increase with an increase in frequency because of skin

effect.
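As a rough numerical illustration of skin effect, the sketch below uses the standard skin-depth expression δ = sqrt(ρ / (π f μ)). That expression is not given in the text above and is assumed here, together with textbook values for the resistivity of copper and the permeability of free space.

```python
import math

COPPER_RESISTIVITY = 1.68e-8        # ohm-metre, approximate room-temperature value
MU_0 = 4 * math.pi * 1e-7           # H/m, permeability of free space

def skin_depth_m(frequency_hz, resistivity=COPPER_RESISTIVITY, mu=MU_0):
    """Depth at which the current density falls to 1/e of its surface value."""
    return math.sqrt(resistivity / (math.pi * frequency_hz * mu))

depth_60_hz = skin_depth_m(60)          # power-line frequency
depth_100_mhz = skin_depth_m(100e6)     # the 100 megahertz case mentioned above
```

Under these assumptions the skin depth in copper is several millimetres at 60 Hz but only a few micrometres at 100 MHz, which is consistent with the remark that the center of the wire carries almost no current at that frequency.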

Copper losses can be minimized and conductivity increased in an rf line

by plating the line with silver. Since silver is a better conductor

than copper, most of the current will flow through the silver layer.

The tubing then serves primarily as a mechanical support.

STANDING WAVES

STANDING WAVES ON A TRANSMISSION LINE

There is a large variety of terminations for rf lines. Each type of termination has a characteristic effect on the standing waves on the line. From the nature of the standing waves, you can determine the type of termination that produces the waves.

TERMINATION IN Z0

Termination in Z0 (characteristic impedance) will cause a constant reading on an ac meter when it is moved along the length of the line. As illustrated in figure 3-34, view A, the curve, provided there are no losses in the line, will be a straight line. If there are losses in the line, the amplitude of the voltage and current will diminish as they move down the line (view B). The losses are due to dc resistance in the line itself.

IMPEDANCE MATCHING

Impedance matching is the practice of attempting to make the output impedance ZS of a

source equal to the input impedance ZL of the load to which it is ultimately connected,

usually in order to maximize the power transfer and minimize reflections from the load.

This only applies when both are linear devices.

The concept of impedance matching was originally developed for electrical power, but

can be applied to any other field where a form of energy (not just electrical) is transferred

between a source and a load.

RADIO PROPAGATION

Radio propagation is a term used to explain how radio waves behave when they are

transmitted, or are propagated from one point on the Earth to another.

This is an illustration showing how radio signals are split into two components (the ordinary component in red and the extraordinary component in green) when penetrating into the ionosphere. Two separate signals of differing transmitted elevation angles are broadcast from the transmitter at the left toward the receiver (triangle on base of grid) at the right.

In free space, all electromagnetic waves (radio, light, X-rays, etc.) obey the inverse-square law, which states that the power density of an electromagnetic wave is proportional to the inverse of the square of r (where r is the distance [radius] from the source), or:

$\rho_P \propto \dfrac{1}{r^2}$

Doubling the distance from a transmitter means that the power density of the radiated

wave at that new location is reduced to one-quarter of its previous value.

The far-field magnitudes of the electric and magnetic field components of

electromagnetic radiation are equal, and their field strengths are inversely proportional to

distance. The power density per surface unit is proportional to the product of the two field

strengths, which are expressed in linear units. Thus, doubling the propagation path

distance from the transmitter reduces their received field strengths over a free-space path

by one-half.
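The two scaling rules above can be checked with a short calculation. The sketch assumes an isotropic radiator, for which the power density is P / (4πr²), and uses the free-space wave impedance of about 376.73 ohms to convert power density to electric field strength; the 100 W transmitter power is an arbitrary example value.

```python
import math

FREE_SPACE_IMPEDANCE = 376.73      # ohms, approximate impedance of free space

def power_density(tx_power_w, distance_m):
    """Power density in W/m^2 of an isotropic radiator in free space."""
    return tx_power_w / (4 * math.pi * distance_m ** 2)

def field_strength(tx_power_w, distance_m):
    """Far-field RMS electric field strength in V/m."""
    return math.sqrt(power_density(tx_power_w, distance_m) * FREE_SPACE_IMPEDANCE)

density_ratio = power_density(100, 2000) / power_density(100, 1000)
field_ratio = field_strength(100, 2000) / field_strength(100, 1000)
```

Doubling the distance from 1 km to 2 km gives a power density ratio of one quarter and a field strength ratio of one half.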

Electromagnetic wave propagation is also affected by several other factors determined by

its path from point to point. This path can be a direct line of sight path or an over-the-

horizon path aided by refraction in the ionosphere. Factors influencing ionospheric radio

signal propagation can include sporadic-E, spread-F, solar flares, geomagnetic storms,

ionospheric layer tilts, and solar proton events.

Lower frequencies (between 30 and 3,000 kHz) have the property of following the curvature of the earth via groundwave propagation in most cases. The interaction of radio waves with the ionized regions of the atmosphere makes radio propagation more complex to predict and analyze than in free space. Ionospheric radio propagation has a strong connection to space weather.

Since radio propagation is somewhat unpredictable, such services as emergency locator

transmitters, in-flight communication with ocean-crossing aircraft, and some television

broadcasting have been moved to satellite transmitters. A satellite link, though expensive,

can offer highly predictable and stable line of sight coverage of a given area (see Google

Maps for a "real-world" application).

A sudden ionospheric disturbance or shortwave fadeout is observed when the x-rays

associated with a solar flare ionize the ionospheric D-region. Enhanced ionization in that

region increases the absorption of radio signals passing through it. During the strongest

solar x-ray flares, complete absorption of virtually all ionospherically propagated radio

signals in the sunlit hemisphere can occur. These solar flares can disrupt HF radio

propagation and affect GPS accuracy.

SPACE (DIRECT) WAVE PROPAGATION

Space Waves, also known as direct waves, are radio waves that travel directly from the

transmitting antenna to the receiving antenna. In order for this to occur, the two antennas

must be able to “see” each other; that is there must be a line of sight path between them.

The diagram on the next page shows a typical line of sight. The maximum line of sight

distance between two antennas depends on the height of each antenna. If the heights are

measured in feet, the maximum line of sight, in miles, is given by:
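A commonly quoted approximation for this distance, assumed here because the expression itself is not reproduced in these notes, is d ≈ sqrt(2·h1) + sqrt(2·h2), with the antenna heights h1 and h2 in feet and d in miles. It corresponds to the radio horizon under standard atmospheric refraction, and the sketch below should be read with that assumption in mind.

```python
import math

def line_of_sight_miles(h1_ft, h2_ft):
    """Approximate maximum line-of-sight distance in miles for two antenna
    heights in feet (assumed radio-horizon rule: sqrt(2*h1) + sqrt(2*h2))."""
    return math.sqrt(2 * h1_ft) + math.sqrt(2 * h2_ft)

distance = line_of_sight_miles(200, 50)    # 200 ft tower to a 50 ft mast
```

With a 200 ft tower and a 50 ft mast the two horizon distances are 20 and 10 miles, so the antennas can be roughly 30 miles apart.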

Because a typical transmission path is filled with buildings, hills and other obstacles, it is

possible for radio waves to be reflected by these obstacles, resulting in radio waves that

arrive at the receive antenna from several different directions. Because the length of each

path is different, the waves will not arrive in phase. They may reinforce each other or

cancel each other, depending on the phase differences. This situation is known as

multipath propagation. It can cause major distortion to certain types of signals. Ghost

images seen on broadcast TV signals are the result of multipath – one picture arrives

slightly later than the other and is shifted in position on the screen. Multipath is very

troublesome for mobile communications. When the transmitter and/or receiver are in motion, the path lengths are continuously changing and the signal fluctuates wildly in amplitude. For this reason, narrowband FM (NBFM) is used almost exclusively for mobile communications. Amplitude variations caused by multipath that make AM unreadable are eliminated by the limiter stage in an NBFM receiver.

An interesting example of direct communications is satellite communications. If a satellite is placed in an orbit about 22,000 miles above the equator, it appears to stand still in the sky, as viewed from the ground. A high gain antenna can be pointed at the satellite to transmit signals to it. The satellite is used as a relay station, from which up to about 42% of the earth's surface is geometrically visible. The satellite receives signals from the ground at one frequency, known as the uplink frequency, translates this frequency to a different frequency, known as the downlink frequency, and retransmits the signal. Because two frequencies are used, the reception and transmission can happen simultaneously. A satellite operating in this way is known as a transponder. The satellite has a tremendous line of sight from its vantage point in space and many ground stations can communicate through a single satellite.
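The orbit height at which a satellite appears stationary follows from requiring one orbit per rotation of the Earth. The sketch below applies Kepler's third law for a circular orbit with standard values for the Earth's gravitational parameter, sidereal day and equatorial radius. These constants and the spherical-Earth visibility formula are assumptions brought in for the illustration.

```python
import math

GM_EARTH = 3.986004418e14       # m^3/s^2, Earth's gravitational parameter
SIDEREAL_DAY_S = 86164.0905     # s, one rotation of the Earth
EARTH_RADIUS_M = 6378.137e3     # m, equatorial radius
METERS_PER_MILE = 1609.344

def geostationary_altitude_m():
    """Altitude of a circular orbit whose period equals one sidereal day."""
    orbit_radius = (GM_EARTH * SIDEREAL_DAY_S ** 2 / (4 * math.pi ** 2)) ** (1 / 3)
    return orbit_radius - EARTH_RADIUS_M

def visible_fraction(altitude_m):
    """Fraction of a spherical Earth's surface in geometric line of sight."""
    return 0.5 * (1 - EARTH_RADIUS_M / (EARTH_RADIUS_M + altitude_m))

altitude = geostationary_altitude_m()
altitude_miles = altitude / METERS_PER_MILE
coverage = visible_fraction(altitude)
```

The result is an altitude of a little over 22,000 miles. The geometric coverage figure is an upper bound, since ground stations near the edge of the visible disc would have to point their antennas at the horizon.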

Ground wave propagation

In physics, surface wave can refer to a mechanical wave that propagates along the

interface between differing media, usually two fluids with different densities. A surface

wave can also be an electromagnetic wave guided by a refractive index gradient. In radio

transmission, a ground wave is a surface wave that propagates close to the surface of the

Earth.

PATH LOSS:

Path loss (or path attenuation) is the reduction in power density (attenuation) of an

electromagnetic wave as it propagates through space. Path loss is a major component in

the analysis and design of the link budget of a telecommunication system.

This term is commonly used in wireless communications and signal propagation. Path

loss may be due to many effects, such as free-space loss, refraction, diffraction,

reflection, aperture-medium coupling loss, and absorption. Path loss is also influenced by

terrain contours, environment (urban or rural, vegetation and foliage), propagation

medium (dry or moist air), the distance between the transmitter and the receiver, and the

height and location of antennas.

Path loss normally includes propagation losses caused by the natural expansion of the

radio wave front in free space (which usually takes the shape of an ever-increasing

sphere), absorption losses (sometimes called penetration losses), when the signal passes

through media not transparent to electromagnetic waves, diffraction losses when part of

the radiowave front is obstructed by an opaque obstacle, and losses caused by other

phenomena.
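The free-space component of path loss is usually the first term in a link budget. A minimal sketch, assuming isotropic antennas at both ends and the standard free-space path loss expression 20·log10(4πdf/c), which is not given in the text above:

```python
import math

SPEED_OF_LIGHT = 299_792_458.0     # m/s

def free_space_path_loss_db(distance_m, frequency_hz):
    """Free-space path loss in dB between two isotropic antennas."""
    return 20 * math.log10(4 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT)

loss_1_km = free_space_path_loss_db(1_000, 2.4e9)    # example: 1 km at 2.4 GHz
loss_2_km = free_space_path_loss_db(2_000, 2.4e9)
```

For this example the loss is about 100 dB at 1 km, and doubling the distance adds roughly 6 dB, which is the inverse-square law expressed in decibels. Absorption, diffraction and the other effects listed above would add to this figure.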

The signal radiated by a transmitter may also travel along many and different paths to a

receiver simultaneously; this effect is called multipath. Multipath can either increase or

decrease received signal strength, depending on whether the individual multipath

wavefronts interfere constructively or destructively. The total power of interfering waves

in a Rayleigh fading scenario varies quickly as a function of space (which is known as

small scale fading), resulting in fast fades which are very sensitive to receiver position.
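
The constructive and destructive cases can be sketched with just two paths. The model below is a deliberate simplification, not a Rayleigh fading simulation: it assumes a direct path of unit amplitude plus one delayed copy, and the function names are hypothetical.

```python
import cmath
import math


def phase_from_path_difference(extra_path_m: float, wavelength_m: float) -> float:
    """Phase lag (radians) of a copy that travels extra_path_m further."""
    return 2.0 * math.pi * extra_path_m / wavelength_m


def two_path_amplitude(relative_gain: float, phase_difference_rad: float) -> float:
    """Amplitude of a unit direct wave plus one delayed copy."""
    direct = 1.0 + 0.0j
    delayed = relative_gain * cmath.exp(-1j * phase_difference_rad)
    return abs(direct + delayed)
```

With equal path gains, an extra path of one full wavelength doubles the amplitude, while an extra half wavelength cancels it almost completely. This is why moving the receiver by a fraction of a wavelength can change the received level so sharply.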

WHITE GAUSSIAN NOISE

In communications, the additive white Gaussian noise (AWGN) channel model is one

in which the only impairment is the linear addition of wideband or white noise with a

constant spectral density (expressed as watts per hertz of bandwidth) and a Gaussian

distribution of amplitude. The model does not account for the phenomena of fading,

frequency selectivity, interference, nonlinearity or dispersion. However, it produces

simple, tractable mathematical models which are useful for gaining insight into the

underlying behavior of a system before these other phenomena are considered.
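
A minimal sketch of the AWGN model for a sampled signal is given below. It assumes zero-mean noise with a chosen standard deviation added independently to each sample; the function name and the seed parameter are illustrative, and fading, interference and the other effects listed above are deliberately left out, as in the model itself.

```python
import random


def add_awgn(signal, noise_std, seed=None):
    """Return a copy of signal with independent Gaussian noise added to each sample."""
    rng = random.Random(seed)  # a seed makes the noise repeatable for experiments
    return [sample + rng.gauss(0.0, noise_std) for sample in signal]
```

Calling add_awgn([0.0] * 10000, 1.0, seed=1) yields samples whose mean is close to 0 and whose variance is close to 1.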

Wideband Gaussian noise comes from many natural sources, such as the thermal

vibrations of atoms in antennas (referred to as thermal noise or Johnson-Nyquist noise),

shot noise, black body radiation from the earth and other warm objects, and from celestial

sources such as the Sun.

The AWGN channel is a good model for many satellite and deep space communication

links. It is not a good model for most terrestrial links because of multipath, terrain

blocking, interference, etc. However for terrestrial path modeling, AWGN is commonly

used to simulate background noise of the channel under study, in addition to multipath,

terrain blocking, interference, ground clutter and self interference that modern radio

systems encounter in terrestrial operation.

UNIT 3

DIGITAL

COMMUNICATION

PULSE-CODE MODULATION (PCM)

Pulse-code modulation (PCM) is a digital representation of an analog signal where the

magnitude of the signal is sampled regularly at uniform intervals, then quantized to a

series of symbols in a numeric (usually binary) code. PCM has been used in digital

telephone systems and 1980s-era electronic musical keyboards. It is also the standard

form for digital audio in computers and the compact disc "red book" format. It is also

standard in digital video, for example, using ITU-R BT.601. However, straight PCM is

not typically used for video in standard definition consumer applications such as DVD or

DVR because the bit rate required is far too high. Very frequently, PCM encoding

facilitates digital transmission from one point to another (within a given system, or

geographically) in serial form.

In the diagram, a sine wave (red curve) is sampled and quantized for PCM. The sine

wave is sampled at regular intervals, shown as ticks on the x-axis. For each sample, one

of the available values (ticks on the y-axis) is chosen by some algorithm (in this case, the

floor function is used). This produces a fully discrete representation of the input signal

(shaded area) that can be easily encoded as digital data for storage or manipulation. For

the sine wave example at right, we can verify that the quantized values at the sampling

moments are 7, 9, 11, 12, 13, 14, 14, 15, 15, 15, 14, etc. Encoding these values as binary

numbers would result in the following set of nibbles: 0111, 1001, 1011, 1100, 1101,

1110, 1110, 1111, 1111, 1111, 1110, etc. These digital values could then be further

processed or analyzed by a purpose-specific digital signal processor or general purpose

CPU. Several Pulse Code Modulation streams could also be multiplexed into a larger

aggregate data stream, generally for transmission of multiple streams over a single

physical link. This technique is called time-division multiplexing, or TDM, and is widely

used, notably in the modern public telephone system.
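
The sample-quantize-encode sequence described above can be sketched as follows. The 4-bit word length matches the nibbles in the example; the input range of -1.0 to 1.0 and the function names are assumptions made for illustration, and the floor function is used as in the text.

```python
import math


def pcm_encode(samples, bits=4, v_min=-1.0, v_max=1.0):
    """Quantize analog sample values to integer codes using the floor function."""
    levels = 2 ** bits
    step = (v_max - v_min) / levels
    codes = []
    for x in samples:
        code = math.floor((x - v_min) / step)
        # Clamp so that a full-scale input still fits in the available levels.
        codes.append(min(max(code, 0), levels - 1))
    return codes


def to_binary_words(codes, bits=4):
    """Write each integer code as a fixed-width binary word."""
    return [format(code, f"0{bits}b") for code in codes]
```

Applied to the quantized values quoted above, to_binary_words([7, 9, 11, 12]) returns the nibbles 0111, 1001, 1011 and 1100.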

There are many ways to implement a real device that performs this task. In real systems,

such a device is commonly implemented on a single integrated circuit that lacks only the

clock necessary for sampling, and is generally referred to as an ADC (Analog-to-Digital

converter). These devices will produce on their output a binary representation of the input

whenever they are triggered by a clock signal, which would then be read by a processor

of some sort.

Demodulation

To produce output from the sampled data, the procedure of modulation is applied in

reverse. After each sampling period has passed, the next value is read and the output of

the system is shifted instantaneously (in an idealized system) to the new value. As a result

of these instantaneous transitions, the discrete signal will have a significant amount of

inherent high frequency energy, mostly harmonics of the sampling frequency (see square

wave). To smooth out the signal and remove these undesirable harmonics, the signal

would be passed through analog filters that suppress artifacts outside the expected

frequency range (i.e., greater than $f_s/2$, the maximum resolvable frequency, where $f_s$ is the sampling frequency). Some systems

use digital filtering to remove the lowest and largest harmonics. In some systems, no

explicit filtering is done at all; as it's impossible for any system to reproduce a signal with

infinite bandwidth, inherent losses in the system compensate for the artifacts — or the

system simply does not require much precision. The sampling theorem suggests that

practical PCM devices, provided a sampling frequency that is sufficiently greater than

that of the input signal, can operate without introducing significant distortions within

their designed frequency bands.

The electronics involved in producing an accurate analog signal from the discrete data are

similar to those used for generating the digital signal. These devices are DACs (digital-to-

analog converters), and operate similarly to ADCs. They produce on their output a

voltage or current (depending on type) that represents the value presented on their inputs.

This output would then generally be filtered and amplified for use.

Limitations

There are two sources of impairment implicit in any PCM system:

• Choosing a discrete value near the analog signal for each sample (quantization

error)

• Between samples no measurement of the signal is made; by the sampling

theorem, any frequency component at or above $f_s/2$ ($f_s$ being the sampling

frequency) is distorted or lost completely (aliasing error). The frequency

$f_s/2$ is also called the Nyquist frequency.
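
The aliasing limitation can be made concrete with the usual frequency-folding relation, which is not given in the notes and is used here as an assumption. The sketch returns the frequency at which a pure tone appears after sampling; the function name is illustrative.

```python
def alias_frequency(f_signal, f_sample):
    """Apparent frequency of a tone at f_signal when sampled at f_sample."""
    if f_sample <= 0 or f_signal < 0:
        raise ValueError("frequencies must be non-negative and f_sample positive")
    folded = f_signal % f_sample
    # Components above half the sampling frequency fold back below it.
    return min(folded, f_sample - folded)
```

At a sampling frequency of 8000 Hz, a 3000 Hz tone is preserved, whereas a 5000 Hz tone also shows up at 3000 Hz and can no longer be told apart from it.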

As samples are dependent on time, an accurate clock is required for accurate

reproduction. If either the encoding or decoding clock is not stable, its frequency drift

will directly affect the output quality of the device. A slight difference between the

encoding and decoding clock frequencies is not generally a major concern; a small

constant error is not noticeable. Clock error does become a major issue if the clock is not

stable, however. A drifting clock, even with a relatively small error, will cause very

obvious distortions in audio and video signals, for example.

Time-Division Multiplexing (TDM) is a type of digital or (rarely) analog multiplexing

in which two or more signals or bit streams are transferred apparently simultaneously as

sub-channels in one communication channel, but are physically taking turns on the

channel. The time domain is divided into several recurrent timeslots of fixed length, one

for each sub-channel. A sample byte or data block of sub-channel 1 is transmitted during

timeslot 1, sub-channel 2 during timeslot 2, etc. One TDM frame consists of one timeslot

per sub-channel. After the last sub-channel the cycle starts all over again with a new

frame, starting with the second sample, byte or data block from sub-channel 1, etc.
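
The turn-taking described above can be sketched as a simple interleaver. This is a minimal illustration that assumes every sub-channel supplies the same number of data blocks; the function names are hypothetical.

```python
def tdm_multiplex(subchannels):
    """Interleave equal-length sub-channel streams into a list of TDM frames."""
    if len({len(channel) for channel in subchannels}) != 1:
        raise ValueError("all sub-channels must carry the same number of blocks")
    # Frame k holds block k of sub-channel 1, then block k of sub-channel 2, etc.
    return [list(frame) for frame in zip(*subchannels)]


def tdm_demultiplex(frames):
    """Recover the sub-channel streams from a list of TDM frames."""
    return [list(channel) for channel in zip(*frames)]
```

With three sub-channels carrying ["a1", "a2"], ["b1", "b2"] and ["c1", "c2"], the first frame is ["a1", "b1", "c1"] and the second is ["a2", "b2", "c2"].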

Transmission using Time Division Multiplexing (TDM)

In circuit switched networks such as the Public Switched Telephone Network (PSTN)

there exists the need to transmit multiple subscribers’ calls along the same transmission

medium.[2] To accomplish this, network designers make use of TDM. TDM allows

switches to create channels, also known as tributaries, within a transmission stream.[2] A

standard DS0 voice signal has a data bit rate of 64 kbit/s, determined using Nyquist’s

Sampling Criterion.[2][3] TDM takes frames of the voice signals and multiplexes them into

a TDM frame which runs at a higher bandwidth. So if the TDM frame consists of n voice

frames, the bandwidth will be n*64 kbit/s.[2]

Each voice frame in the TDM frame is called a channel or tributary.[2] In European

systems, TDM frames contain 30 digital voice frames and in American systems, TDM

frames contain 24 digital voice frames.[2] Both of the standards also contain extra space

for signalling (see Signaling System 7) and synchronisation data.[2]

Multiplexing more than 24 or 30 digital voice frames is called Higher Order

Multiplexing.[2] Higher Order Multiplexing is accomplished by multiplexing the standard

TDM frames.[2] For example, a European 120 channel TDM frame is formed by

multiplexing four standard 30 channel TDM frames.[2] At each higher order multiplex,

four TDM frames from the immediate lower order are combined, creating multiplexes

with a bandwidth of n x 64 kbit/s, where n = 120, 480, 1920, etc.[2]
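
The n × 64 kbit/s arithmetic for the European hierarchy can be tabulated with a short sketch. It counts voice payload only and ignores the signalling and synchronisation space mentioned above, so the figures are lower than real line rates; the function name is illustrative.

```python
DS0_RATE_KBIT_S = 64  # one digital voice channel


def multiplex_levels(base_channels=30, factor=4, levels=4):
    """Return (channels, payload rate in kbit/s) for each multiplexing order."""
    table = []
    channels = base_channels
    for _ in range(levels):
        table.append((channels, channels * DS0_RATE_KBIT_S))
        channels *= factor  # four lower-order frames form the next order
    return table
```

The default call lists 30, 120, 480 and 1920 channels, with payload rates of 1920, 7680, 30720 and 122880 kbit/s.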

Synchronous Digital Hierarchy (SDH)

Plesiochronous Digital Hierarchy (PDH) was developed as a standard for multiplexing

higher order frames.[2][3] PDH created larger numbers of channels by multiplexing the

standard European 30-channel TDM frames.[2] This solution worked for a while;

however PDH suffered from several inherent drawbacks which ultimately resulted in the

development of the Synchronous Digital Hierarchy (SDH). The requirements which

drove the development of SDH were as follows:[2][3]

• Be synchronous – All clocks in the system must align with a reference clock.

• Be service-oriented – SDH must route traffic from End Exchange to End

Exchange without worrying about exchanges in between, where the bandwidth

can be reserved at a fixed level for a fixed period of time.

• Allow frames of any size to be removed or inserted into an SDH frame of any

size.

• Easily manageable with the capability of transferring management data across

links.

• Provide high levels of recovery from faults.

• Provide high data rates by multiplexing any size frame, limited only by

technology.

• Give reduced bit error rates.

SDH has become the primary transmission protocol in most PSTN networks. [2][3] It was

developed to allow streams of 1.544 Mbit/s and above to be multiplexed, so as to create

larger SDH frames known as Synchronous Transport Modules (STM).[2] The STM-1

frame consists of smaller streams that are multiplexed to create a 155.52 Mbit/s frame.[2][3]

SDH can also multiplex packet based frames such as Ethernet, PPP and ATM.[2]

While SDH is considered to be a transmission protocol (Layer 1 in the OSI Reference

Model), it also performs some switching functions, as stated in the third bullet point

requirement listed above.[2] The most common SDH Networking functions are as follows:

• SDH Crossconnect – The SDH Crossconnect is the SDH version of a Time-

Space-Time crosspoint switch. It connects any channel on any of its inputs to any

channel on any of its outputs. The SDH Crossconnect is used in Transit

Exchanges, where all inputs and outputs are connected to other exchanges.[2]

• SDH Add-Drop Multiplexer – The SDH Add-Drop Multiplexer (ADM) can add or

remove any multiplexed frame down to 1.544 Mbit/s. Below this level, standard

TDM can be performed. SDH ADMs can also perform the task of an SDH

Crossconnect and are used in End Exchanges where the channels from subscribers

are connected to the core PSTN network.[2]

SDH Network functions are connected using high-speed Optic Fibre. Optic Fibre uses

light pulses to transmit data and is therefore extremely fast.[2] Modern optic fibre

transmission makes use of Wavelength Division Multiplexing (WDM) where signals

transmitted across the fibre are transmitted at different wavelengths, creating additional

channels for transmission.[2][3] This increases the speed and capacity of the link, which in

turn reduces both unit and total costs.[2]

Statistical Time-division Multiplexing (STDM)

STDM is an advanced version of TDM in which both the address of the terminal and the

data itself are transmitted together for better routing. Using STDM allows bandwidth to

be split over 1 line. Many college and corporate campuses use this type of TDM to

logically distribute bandwidth.

If there is one 10 Mbit/s line coming into the building, STDM can be used to provide 178

terminals with a dedicated 56 kbit/s connection (178 × 56 kbit/s = 9.968 Mbit/s). A more common use

however is to only grant the bandwidth when that much is needed. STDM does not

reserve a time slot for each terminal, rather it assigns a slot when the terminal is requiring

data to be sent or received.
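
Both ideas in this passage, the capacity arithmetic and on-demand slot assignment, can be sketched briefly. The queue layout, slot count and function names are assumptions made for illustration; a real statistical multiplexer would also rotate its starting terminal so that none is starved.

```python
def max_dedicated_terminals(line_rate_bit_s, terminal_rate_bit_s):
    """How many fixed-rate terminals fit on the line if each gets a dedicated share."""
    return line_rate_bit_s // terminal_rate_bit_s


def stdm_frame(queues, slots_per_frame):
    """Build one frame of (address, data) pairs from terminals that have data waiting.

    queues maps a terminal address to a list of pending data blocks.
    Idle terminals consume no slot, unlike in plain TDM.
    """
    frame = []
    for address, pending in queues.items():
        if len(frame) == slots_per_frame:
            break
        if pending:
            frame.append((address, pending.pop(0)))
    return frame
```

max_dedicated_terminals(10_000_000, 56_000) reproduces the figure of 178 terminals. With queues {"A": ["a1"], "B": [], "C": ["c1", "c2"]} and two slots, the frame carries ("A", "a1") and ("C", "c1"), and idle terminal B uses no capacity.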

Digital T-carrier systems

The T-carrier system, introduced by the Bell System in the U.S. in the 1960s, was the

first successful system that supported digitized voice transmission. The original

transmission rate (1.544 Mbps) in the T1 line is in common use today in Internet service

provider (ISP) connections to the Internet. Another level, the T3 line, providing 44.736

Mbps, is also commonly used by Internet service providers.

The T-carrier system is entirely digital, using pulse code modulation (PCM) and time-

division multiplexing (TDM). The system uses four wires and provides duplex capability

(two wires for receiving and two for sending at the same time). The T1 digital stream

consists of 24 64-Kbps channels that are multiplexed. (The standardized 64 Kbps channel

is based on the bandwidth required for a voice conversation.) The four wires were

originally a pair of twisted pair copper wires, but can now also include coaxial cable,

optical fiber, digital microwave, and other media. A number of variations on the number

and use of channels are possible.

A T1 line in which each channel serves a different application is known as integrated T1

or channelized T1. Another commonly installed service is a fractional T1, which is the

rental of some portion of the 24 channels in a T1 line, with the other channels going

unused.

In the T1 system, voice or other analog signals are sampled 8,000 times a second and

each sample is digitized into an 8-bit word. With 24 channels being digitized at the same

time, a 192-bit frame (24 channels each with an 8-bit word) is thus being transmitted

8,000 times a second. Each frame is separated from the next by a single bit, making a

193-bit block. The 192 bit frame multiplied by 8,000 and the additional 8,000 framing

bits make up the T1's 1.544 Mbps data rate. When signaling is carried in-band, it uses the

least significant bit of each channel's word in every sixth frame.

Existing Frequency-division multiplexing carrier systems worked well for connections

between distant cities, but required expensive modulators, demodulators and filters for

every voice channel. For connections within metropolitan areas, Bell Labs in the late

1950s sought cheaper terminal equipment. Pulse-code modulation allowed sharing a

coder and decoder among several voice trunks, so this method was chosen for the T1

system introduced into local use in 1961. In later decades, the cost of digital electronics

declined to the point that an individual codec per voice channel became commonplace,

but by then the other advantages of digital transmission had become entrenched.

The most common legacy of this system is the line rate speeds. "T1" now seems to mean

any data circuit that runs at the original 1.544 Mbit/s line rate. Originally the T1 format

carried 24 pulse-code modulated, time-division multiplexed speech signals each encoded

in 64 kbit/s streams, leaving 8 kbit/s of framing information which facilitates the

synchronization and demultiplexing at the receiver. T2 and T3 circuit channels carry

multiple T1 channels multiplexed, resulting in transmission rates of 6.312 and 44.736

Mbit/s, respectively.

Supposedly, the 1.544 Mbit/s rate was chosen because tests done by AT&T Long Lines

in Chicago were conducted underground. To accommodate loading coils, cable vault

manholes were physically 6600 feet apart, and so the optimum bit rate was chosen

empirically--the capacity was increased until the failure rate was unacceptable, then

reduced to leave a margin. Companding allowed acceptable audio performance with only

seven bits per PCM sample in this original T1/D1 system. The later D3 and D4 channel

banks had an extended frame format, allowing eight bits per sample, reduced to seven

every sixth sample or frame when one bit was "robbed" for signaling the state of the

channel. The standard does not allow an all zero sample which would produce a long

string of binary zeros and cause the repeaters to lose bit sync. However, when carrying

data (Switched 56) there could be long strings of zeroes, so one bit per sample is set to

"1" (jam bit 7) leaving 7 bits x 8000 frames per second for data.

A more common understanding of how the rate of 1.544 Mbit/s was achieved is as

follows. (This explanation glosses over T1 voice communications, and deals mainly with

the numbers involved.) Given that the highest voice frequency which the telephone

system transmits is 4000 Hz, the required digital sampling rate is 8000 Hz (see Nyquist

rate). Since each T1 frame contains 1 byte of voice data for each of the 24 channels, that

system needs then 8000 frames per second to maintain those 24 simultaneous voice

channels. Because each frame of a T1 is 193 bits in length (24 channels × 8 bits per

channel + 1 framing bit = 193 bits), 8000 frames per second is multiplied by 193 bits to

yield a transfer rate of 1.544 Mbit/s (8000 × 193 = 1,544,000).
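
The frame arithmetic can be checked with a small sketch that assembles one 193-bit frame from 24 eight-bit samples. Placing the framing bit at the start of the frame and sending each sample most significant bit first are assumptions of this illustration, as are the function names.

```python
CHANNELS = 24
BITS_PER_SAMPLE = 8
FRAMING_BITS = 1
FRAMES_PER_SECOND = 8000


def build_t1_frame(samples, framing_bit):
    """Return the frame as a list of bits: one framing bit, then 24 samples."""
    if len(samples) != CHANNELS:
        raise ValueError("a T1 frame carries exactly 24 samples")
    bits = [framing_bit & 1]
    for sample in samples:
        if not 0 <= sample < 2 ** BITS_PER_SAMPLE:
            raise ValueError("each sample must fit in 8 bits")
        bits.extend((sample >> shift) & 1 for shift in range(BITS_PER_SAMPLE - 1, -1, -1))
    return bits


def t1_line_rate_bit_s():
    """Bits per frame multiplied by frames per second."""
    return (CHANNELS * BITS_PER_SAMPLE + FRAMING_BITS) * FRAMES_PER_SECOND
```

The frame built this way has 193 entries, and t1_line_rate_bit_s() evaluates to 1,544,000 bit/s.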

Initially, T1 used Alternate Mark Inversion (AMI) to reduce bandwidth and eliminate

the DC component of the signal. Later B8ZS became common practice. For AMI, each

mark pulse had the opposite polarity of the previous one and each space was at a level of

zero, resulting in a three level signal which however only carried binary data. Similar

British 23 channel systems at 1.536 Mbaud in the 1970s were equipped with ternary

signal repeaters, in anticipation of using a 3B2T or 4B3T code to increase the number of

voice channels in future, but in the 1980s the systems were merely replaced with

European standard ones. American T-carriers could only work in AMI or B8ZS mode.

The AMI or B8ZS signal allowed a simple error rate measurement. The D bank in the

central office could detect a bit with the wrong polarity, or "bipolarity violation" and

sound an alarm. Later systems could count the number of violations and reframes and

otherwise measure signal quality.
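
A minimal sketch of AMI coding and of the bipolar-violation check is given below. Making the first mark positive is an arbitrary choice for this illustration, the function names are hypothetical, and B8ZS substitution is not modelled.

```python
def ami_encode(bits):
    """Encode bits as AMI levels: spaces are 0, marks alternate between +1 and -1."""
    symbols = []
    last_mark = -1  # chosen so that the first mark comes out as +1
    for bit in bits:
        if bit:
            last_mark = -last_mark
            symbols.append(last_mark)
        else:
            symbols.append(0)
    return symbols


def count_bipolar_violations(symbols):
    """Count marks that repeat the polarity of the previous mark."""
    violations = 0
    last_mark = 0
    for symbol in symbols:
        if symbol != 0:
            if symbol == last_mark:
                violations += 1
            last_mark = symbol
    return violations
```

A correctly encoded stream has no violations, so any nonzero count at the receiver points to a line error. This is the simple error-rate measurement the text refers to.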

Historical Note on the 193-bit T1 frame

The decision to use a 193-bit frame was made in 1958, during the early stages of T1

system design. To allow for the identification of information bits within a frame, two

alternatives were considered. Assign (a) just one extra bit, or (b) additional 8 bits per

frame. The 8-bit choice is cleaner, resulting in a 200-bit frame, 25 8-bit channels, of

which 24 are traffic and 1 8-bit channel available for operations, administration, and

maintenance (OA&M). The single bit per frame was chosen, not because a single bit

saves bandwidth (by a trivial amount 1.544 vs 1.6 Mbit/s), but from a comment from

AT&T Marketing. They claimed that "if 8 bits were chosen for OA&M function, someone

would then try to sell this as a voice channel and you wind up with nothing."

Soon after commercial success of T1 in 1962, the T1 engineering team realized the

mistake of having only one bit to serve the increasing demand for housekeeping

functions. They petitioned AT&T management to change to 8-bit framing. This was flatly

turned down because it would make installed systems obsolete.

Having this hindsight, some ten years later, CEPT chose 8 bits for framing the European

E1.

Higher T

In the late 1960s and early 1970s Bell Labs developed higher rate systems. T-1C with a

more sophisticated modulation scheme carried 3 Mbit/s, on those balanced pair cables

that could support it. T-2 carried 6.312 Mbit/s, requiring a special low-capacitance cable

with foam insulation. This was standard for Picturephone. T-4 and T-5 used coaxial

cables, similar to the old L-carriers used by AT&T Long Lines. TD microwave radio

relay systems were also fitted with high rate modems to allow them to carry a DS1 signal

in a portion of their FM spectrum that had too poor quality for voice service. Later they

carried DS3 and DS4 signals. Later optical fiber, typically using SONET transmission

scheme, overtook them.

Digital modulation methods

In digital modulation, an analog carrier signal is modulated by a digital bit stream. Digital

modulation methods can be considered as digital-to-analog conversion, and the

corresponding demodulation or detection as analog-to-digital conversion. The changes in

the carrier signal are chosen from a finite number of M alternative symbols (the

modulation alphabet).

Fundamental digital modulation methods

These are the most fundamental digital modulation techniques:

• In the case of PSK, a finite number of phases are used.

• In the case of FSK, a finite number of frequencies are used.

• In the case of ASK, a finite number of amplitudes are used.

• In the case of QAM, a finite number of at least two phases, and at least two

amplitudes are used.

In QAM, an inphase signal (the I signal, for example a cosine waveform) and a

quadrature phase signal (the Q signal, for example a sine wave) are amplitude modulated

with a finite number of amplitudes, and summed. It can be seen as a two-channel system,

each channel using ASK. The resulting signal is equivalent to a combination of PSK and

ASK.

In all of the above methods, each of these phases, frequencies or amplitudes are assigned

a unique pattern of binary bits. Usually, each phase, frequency or amplitude encodes an

equal number of bits. This number of bits comprises the symbol that is represented by the

particular phase, frequency or amplitude.

If the alphabet consists of $M = 2^N$ alternative symbols, each symbol represents a message

consisting of $N$ bits. If the symbol rate (also known as the baud rate) is $f_S$ symbols/second

(or baud), the data rate is $N f_S$ bit/second.

For example, with an alphabet consisting of 16 alternative symbols, each symbol

represents 4 bits. Thus, the data rate is four times the baud rate.
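
The relation between alphabet size, symbol rate and data rate can be written out as a short sketch. The function names and the 2400 baud figure in the usage note are illustrative assumptions, not values from the notes.

```python
def bits_per_symbol(alphabet_size):
    """Number of bits N carried by each symbol of an alphabet with 2**N symbols."""
    n = alphabet_size.bit_length() - 1
    if alphabet_size < 2 or 2 ** n != alphabet_size:
        raise ValueError("alphabet size must be a power of two, at least 2")
    return n


def data_rate_bit_s(alphabet_size, symbol_rate_baud):
    """Data rate = bits per symbol multiplied by the symbol rate."""
    return bits_per_symbol(alphabet_size) * symbol_rate_baud
```

For the 16-symbol alphabet above, bits_per_symbol(16) is 4, so a symbol rate of 2400 baud would carry 9600 bit/s.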

In the case of PSK, ASK or QAM, where the carrier frequency of the modulated signal is

constant, the modulation alphabet is often conveniently represented on a constellation

diagram, showing the amplitude of the I signal at the x-axis, and the amplitude of the Q

signal at the y-axis, for each symbol.

Modulator and detector principles of operation

PSK and ASK, and sometimes also FSK, are often generated and detected using the

principle of QAM. The I and Q signals can be combined into a complex-valued signal

I+jQ (where j is the imaginary unit). The resulting so-called equivalent lowpass signal or

equivalent baseband signal is a representation of the real-valued modulated physical

signal (the so-called passband signal or RF signal).

These are the general steps used by the modulator to transmit data:

1. Group the incoming data bits into codewords, one for each symbol that will be

transmitted.

2. Map the codewords to attributes, for example amplitudes of the I and Q signals

(the equivalent low pass signal), or frequency or phase values.

3. Adapt pulse shaping or some other filtering to limit the bandwidth and form the

spectrum of the equivalent low pass signal, typically using digital signal

processing.

4. Perform digital-to-analog conversion (DAC) of the I and Q signals (since today

all of the above is normally achieved using digital signal processing, DSP).

5. Generate a high-frequency sine wave carrier waveform, and perhaps also a cosine

quadrature component. Carry out the modulation, for example by multiplying the

sine and cosine waveforms by the I and Q signals, so that the

equivalent low pass signal is frequency shifted into a modulated passband signal

or RF signal. Sometimes this is achieved using DSP technology, for example

direct digital synthesis using a waveform table, instead of analog signal

processing. In that case the above DAC step should be done after this step.

6. Amplification and analog bandpass filtering to avoid harmonic distortion and

periodic spectrum.
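
Steps 1, 2 and 5 of the list above can be sketched for a four-symbol alphabet. The Gray-coded QPSK mapping, the sign convention s(t) = I cos(2πft) - Q sin(2πft) and all names below are assumptions chosen for illustration. Pulse shaping, digital-to-analog conversion and amplification (steps 3, 4 and 6) are omitted.

```python
import math

# Hypothetical Gray-coded QPSK alphabet: 2-bit codeword -> (I, Q) amplitudes.
QPSK_MAP = {
    (0, 0): (1.0, 1.0),
    (0, 1): (-1.0, 1.0),
    (1, 1): (-1.0, -1.0),
    (1, 0): (1.0, -1.0),
}


def group_bits(bits, bits_per_symbol):
    """Step 1: split the bit stream into codewords, one per symbol."""
    if len(bits) % bits_per_symbol:
        raise ValueError("bit count must be a multiple of bits_per_symbol")
    return [tuple(bits[i:i + bits_per_symbol])
            for i in range(0, len(bits), bits_per_symbol)]


def map_symbols(bits):
    """Step 2: map each codeword to I and Q amplitudes."""
    return [QPSK_MAP[codeword] for codeword in group_bits(bits, 2)]


def passband_sample(i_amp, q_amp, carrier_hz, t):
    """Step 5: mix I and Q with the cosine and sine carriers at time t."""
    angle = 2.0 * math.pi * carrier_hz * t
    return i_amp * math.cos(angle) - q_amp * math.sin(angle)
```

For example, map_symbols([0, 0, 1, 1]) returns [(1.0, 1.0), (-1.0, -1.0)], and each pair can then be passed to passband_sample for as many time instants as the symbol lasts.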

At the receiver side, the demodulator typically performs:

1. Bandpass filtering.

2. Automatic gain control, AGC (to compensate for attenuation, for example

fading).

3. Frequency shifting of the RF signal to the equivalent baseband I and Q signals, or

to an intermediate frequency (IF) signal, by multiplying the RF signal with a local

oscillator sine wave and cosine wave (see the superheterodyne receiver

principle).

4. Sampling and analog-to-digital conversion (ADC) (Sometimes before or instead

of the above point, for example by means of undersampling).

5. Equalization filtering, for example a matched filter, compensation for multipath

propagation, time spreading, phase distortion and frequency selective fading, to

avoid intersymbol interference and symbol distortion.

6. Detection of the amplitudes of the I and Q signals, or the frequency or phase of

the IF signal.

7. Quantization of the amplitudes, frequencies or phases to the nearest allowed

symbol values.

8. Mapping of the quantized amplitudes, frequencies or phases to codewords (bit

groups).

9. Parallel-to-serial conversion of the codewords into a bit stream.

10. Pass the resultant bit stream on for further processing such as removal of any

error-correcting codes.
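
Steps 7 to 9 of the receiver list can be sketched as a nearest-symbol decision followed by mapping back to bits. The sketch assumes a hypothetical Gray-coded QPSK alphabet and already equalized I and Q amplitudes; filtering, gain control, frequency shifting and sampling are not modelled.

```python
# Hypothetical Gray-coded QPSK alphabet: 2-bit codeword -> (I, Q) amplitudes.
QPSK_MAP = {
    (0, 0): (1.0, 1.0),
    (0, 1): (-1.0, 1.0),
    (1, 1): (-1.0, -1.0),
    (1, 0): (1.0, -1.0),
}


def nearest_codeword(i_amp, q_amp):
    """Steps 7 and 8: pick the allowed symbol closest to the received point."""
    def squared_distance(codeword):
        ref_i, ref_q = QPSK_MAP[codeword]
        return (ref_i - i_amp) ** 2 + (ref_q - q_amp) ** 2
    return min(QPSK_MAP, key=squared_distance)


def detect_bits(received_points):
    """Step 9: concatenate the detected codewords into a serial bit stream."""
    bits = []
    for i_amp, q_amp in received_points:
        bits.extend(nearest_codeword(i_amp, q_amp))
    return bits
```

Noisy points such as (0.9, 1.2) and (-0.7, -1.1) are still decided as the codewords 00 and 11, which shows how a digital receiver tolerates moderate noise without bit errors.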

As is common to all digital communication systems, the design of both the modulator and

demodulator must be done simultaneously. Digital modulation schemes are possible

because the transmitter-receiver pair have prior knowledge of how data is encoded and

represented in the communications system. In all digital communication systems, both

the modulator at the transmitter and the demodulator at the receiver are structured so that

they perform inverse operations.

Non-coherent modulation methods do not require a receiver reference clock signal that is

phase synchronized with the sender carrier wave. In this case, modulation symbols (rather

than bits, characters, or data packets) are asynchronously transferred. The opposite is

coherent modulation.

List of common digital modulation techniques

The most common digital modulation techniques are:

• Phase-shift keying (PSK):

o Binary PSK (BPSK), using M=2 symbols

o Quadrature PSK (QPSK), using M=4 symbols

o 8PSK, using M=8 symbols

o 16PSK, using M=16 symbols

o Differential PSK (DPSK)

o Differential QPSK (DQPSK)

o Offset QPSK (OQPSK)

o π/4–QPSK

• Frequency-shift keying (FSK):

o Audio frequency-shift keying (AFSK)

o Multi-frequency shift keying (M-ary FSK or MFSK)

o Dual-tone multi-frequency (DTMF)

o Continuous-phase frequency-shift keying (CPFSK)

• Amplitude-shift keying (ASK):

o On-off keying (OOK), the most common ASK form

o M-ary vestigial sideband modulation, for example 8VSB

Unit 4

DATA COMMUNICATION

& NETWORK PROTOCOL

DATA COMMUNICATION CODE

In communications, a code is a rule for converting a piece of information (for example, a

letter, word, or phrase) into another form or representation, not necessarily of the same

type. In communications and information processing, encoding is the process by which

information from a source is converted into symbols to be communicated. Decoding is

the reverse process, converting these code symbols back into information understandable

by a receiver.

One reason for coding is to enable communication in places where ordinary spoken or

written language is difficult or impossible. For example, a cable code replaces words

(e.g., ship or invoice) with shorter words, allowing the same information to be sent with

fewer characters, more quickly, and most important, less expensively. Another example

is the use of semaphore, where the configuration of flags held by a signaller or the arms

of a semaphore tower encodes parts of the message, typically individual letters and

numbers. Another person standing a great distance away can interpret the flags and

reproduce the words sent.
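
Encoding and decoding with a shared codebook can be sketched in a few lines. The two-letter code words below are invented purely for illustration and do not come from any real cable code; words that are not in the codebook are passed through unchanged.

```python
# Hypothetical codebook, invented for illustration only.
CODEBOOK = {"ship": "SH", "invoice": "IV"}
DECODEBOOK = {code: word for word, code in CODEBOOK.items()}


def encode(message):
    """Replace each known word by its shorter code word."""
    return " ".join(CODEBOOK.get(word, word) for word in message.split())


def decode(message):
    """Reverse the substitution using the same codebook."""
    return " ".join(DECODEBOOK.get(word, word) for word in message.split())
```

encode("ship invoice today") gives "SH IV today", and decode restores the original message, provided sender and receiver hold the same codebook.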

SERIAL INTERFACE

VOCAL offers a comprehensive and fully optimized modem software library, based on over 20 years

of success on a wide variety of platforms. Data modulations include V.92 (client/server), V.90

(client/server), V.34, V.32bis/V.32, and V.22bis/V.22/V.23/V.21. Automatic modulation

determination procedures (Automode) include those of V.8, V.8bis and PN-2330. V.92/V.90 digital

client is also available for specialized server requirements (i.e. self-test), as well as analog client

V.92/V.90 support.

The higher data protocol layers include V.42 (including MNP2-4), V.42bis, V.44 and MNP-5. PPP

framing support is provided as a runtime option. The application interface of this software can support

an industry standard AT command set or may be used directly by an application. The modulation layer

of this software can provide a HDLC, V.14 or a direct binary framing layer. The line interface may be

an analog front end (codec and DAA) or a digital interface such as T1/E1, xDSL, ATM, and ISDN.

VOCAL's embedded software libraries include a complete range of ETSI / ITU / IEEE compliant

algorithms, in addition to many other standard and proprietary algorithms. Our software is optimized

for execution on ANSI C and leading DSP architectures (TI, ADI, AMD, ARM, MIPS, CEVA, LSI

Logic ZSP, etc.). These libraries are modular and can be executed as a single task under a variety of

operating systems or standalone with their own microkernel.

The public switched telephone network (PSTN) is the network of the world's public

circuit-switched telephone networks, in much the same way that the Internet is the

network of the world's public IP-based packet-switched networks. Originally a network

of fixed-line analog telephone systems, the PSTN is now almost entirely digital, and now

includes mobile as well as fixed telephones.

The PSTN is largely governed by technical standards created by the ITU-T, and uses

E.163/E.164 addresses (more commonly known as telephone numbers) for addressing.

Architecture and context

The PSTN was the earliest example of traffic engineering to deliver Quality of Service

(QoS) guarantees. A.K. Erlang (1878–1929) is credited with establishing the

mathematical foundations of methods required to determine the amount and configuration

of equipment and the number of personnel required to deliver a specific level of service.
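As an illustration of this kind of dimensioning, the sketch below uses the Erlang B formula, one of the results attributed to Erlang. The text above does not name a specific formula, so its choice here is an assumption, as are the function names `erlang_b` and `trunks_needed`. Erlang B gives the probability that a call is blocked when a given traffic load (in erlangs) is offered to a fixed number of circuits and blocked calls are dropped.

```python
def erlang_b(offered_erlangs: float, trunks: int) -> float:
    """Blocking probability for `offered_erlangs` of traffic on `trunks` circuits.

    Uses the standard recurrence B(E, 0) = 1,
    B(E, m) = E * B(E, m-1) / (m + E * B(E, m-1)),
    which avoids computing large factorials directly.
    """
    blocking = 1.0
    for m in range(1, trunks + 1):
        blocking = offered_erlangs * blocking / (m + offered_erlangs * blocking)
    return blocking


def trunks_needed(offered_erlangs: float, max_blocking: float) -> int:
    """Smallest number of circuits whose blocking does not exceed `max_blocking`."""
    if not 0.0 < max_blocking <= 1.0:
        raise ValueError("max_blocking must be in (0, 1]")
    trunks = 0
    while erlang_b(offered_erlangs, trunks) > max_blocking:
        trunks += 1
    return trunks


# Example: circuits required to carry 10 erlangs with at most 1% of calls blocked.
print(trunks_needed(10.0, 0.01))
```

The "specific level of service" is expressed here as a maximum blocking probability. Other service measures, such as waiting time in a queue, would call for a different formula.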

In the 1970s the telecommunications industry assumed that digital services would

follow much the same pattern as voice services, and conceived a vision of end-to-end

circuit switched services, known as the Broadband Integrated Services Digital Network

(B-ISDN). The B-ISDN vision has been overtaken by the disruptive technology of the

Internet. Only the oldest parts of the telephone network still use analog technology for

anything other than the last mile loop to the end user, and in recent years digital services

have been increasingly rolled out to end users using services such as DSL, ISDN, FTTP

and cable modem systems.

Many observers believe that the long term future of the PSTN is to be just one application

of the Internet; however, the Internet has some way to go before this transition can be

made. The QoS guarantee is one aspect that needs to be improved in the Voice over IP

(VoIP) technology.

There are a number of large private telephone networks which are not linked to the

PSTN, usually for military purposes. There are also private networks run by large

companies which are linked to the PSTN only through limited gateways, like a large

private branch exchange (PBX).

Early history

The first telephones had no network but were in private use, wired together in pairs.

Users who wanted to talk to different people had as many telephones as necessary for the

purpose. A user who wished to speak whistled into the transmitter until the other party

heard. Soon, however, a bell was added for signalling, and then a switchhook, and

telephones took advantage of the exchange principle already employed in telegraph

networks. Each telephone was wired to a local telephone exchange, and the exchanges

were wired together with trunks. Networks were connected together in a hierarchical

manner until they spanned cities, countries, continents and oceans. This was the

beginning of the PSTN, though the term was unknown for many decades.

Automation introduced pulse dialing between the phone and the exchange, and then

among exchanges, followed by more sophisticated address signaling including

multi-frequency, culminating in the SS7 network that connected most exchanges by the end of

the 20th century.

Digital Channel

Although the network was created using analog voice connections through manual

switchboards, automated telephone exchanges replaced most switchboards, and later

digital switch technologies were used. Most switches now use digital circuits between

exchanges, with analog two-wire circuits still used to connect to most telephones.

The basic digital circuit in the PSTN is a 64-kilobits-per-second channel, originally

designed by Bell Labs, called Digital Signal 0 (DS0). To carry a typical phone call from a

calling party to a called party, the audio sound is digitized at an 8 kHz sample rate using

8-bit pulse code modulation (PCM). The call is then transmitted from one end to another

via telephone exchanges. The call is switched using a signaling protocol (SS7) between

the telephone exchanges under an overall routing strategy.
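The 64 kbit/s figure follows directly from the sampling parameters: 8000 samples per second times 8 bits per sample. The sketch below shows that arithmetic and digitizes one second of a test tone. It assumes a plain uniform quantizer for simplicity, whereas telephone PCM in practice uses companded (mu-law or A-law) coding; the function names are illustrative only.

```python
import math

SAMPLE_RATE_HZ = 8000   # samples per second, as stated in the text
BITS_PER_SAMPLE = 8     # 8-bit PCM, as stated in the text


def ds0_bit_rate() -> int:
    """Bit rate of one DS0 channel in bits per second."""
    return SAMPLE_RATE_HZ * BITS_PER_SAMPLE


def quantize_uniform(sample: float) -> int:
    """Map a sample in [-1.0, 1.0] to an unsigned 8-bit code.

    Simplifying assumption: uniform steps, not the companded coding
    used on real telephone circuits.
    """
    sample = max(-1.0, min(1.0, sample))
    levels = 2 ** BITS_PER_SAMPLE
    return min(levels - 1, int((sample + 1.0) / 2.0 * levels))


def digitize_tone(freq_hz: float, seconds: float) -> bytes:
    """Sample a sine tone at 8 kHz and return one byte per sample."""
    count = int(SAMPLE_RATE_HZ * seconds)
    return bytes(
        quantize_uniform(math.sin(2.0 * math.pi * freq_hz * k / SAMPLE_RATE_HZ))
        for k in range(count)
    )


one_second = digitize_tone(1000.0, 1.0)
print(ds0_bit_rate())       # bits per second for one channel
print(len(one_second) * 8)  # bits produced by one second of audio
```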

The DS0s are the basic granularity at which switching takes place in a telephone

exchange. DS0s are also known as timeslots because they are multiplexed together using

time-division multiplexing (TDM). Multiple DS0s are multiplexed together on higher

capacity circuits into a DS1 signal, carrying 24 DS0s on a North American or Japanese

T1 line, or 32 DS0s (30 for calls plus two for framing and signalling) on an E1 line used

in most other countries. In modern networks, this multiplexing is moved as close to the

end user as possible, usually into cabinets at the roadside in residential areas, or into large

business premises.
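A minimal sketch of the multiplexing described above: each frame carries one byte from every DS0 in turn, and 8000 frames are sent per second. The function names are illustrative. The single framing bit per T1 frame is a detail not stated in the text above; it is included here because it accounts for the usual 1.544 Mbit/s T1 line rate.

```python
from __future__ import annotations

FRAMES_PER_SECOND = 8000  # one frame per PCM sampling interval
BITS_PER_TIMESLOT = 8     # one 8-bit sample per DS0 per frame


def t1_line_rate() -> int:
    """T1: 24 timeslots plus 1 framing bit per frame (framing bit not in the text)."""
    return (24 * BITS_PER_TIMESLOT + 1) * FRAMES_PER_SECOND


def e1_line_rate() -> int:
    """E1: 32 timeslots per frame, two of them used for framing and signalling."""
    return 32 * BITS_PER_TIMESLOT * FRAMES_PER_SECOND


def multiplex(channels: list[bytes]) -> bytes:
    """Byte-interleave equal-length channels, one byte per timeslot per frame."""
    if len({len(channel) for channel in channels}) > 1:
        raise ValueError("all channels must carry the same number of samples")
    return bytes(byte for frame in zip(*channels) for byte in frame)


def demultiplex(stream: bytes, n_channels: int) -> list[bytes]:
    """Recover each channel by taking every n-th byte from its timeslot offset."""
    return [stream[slot::n_channels] for slot in range(n_channels)]


frames = multiplex([b"AAA", b"BBB", b"CCC"])
print(frames)                  # timeslots interleaved frame by frame
print(demultiplex(frames, 3))  # original channels restored
print(t1_line_rate(), e1_line_rate())
```

Because every channel owns a fixed position in each frame, the receiver needs no per-byte addressing, only frame alignment. This is why DS0s are also called timeslots.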

The timeslots are conveyed from the initial multiplexer to the exchange over a set of

equipment collectively known as the access network. The access network and

inter-exchange transport of the PSTN use synchronous optical transmission (SONET and

SDH) technology, although some parts still use the older PDH technology.

Within the access network, there are a number of reference points defined. Most of these

are of interest mainly to ISDN but one – the V reference point – is of more general

interest. This is the reference point between a primary multiplexer and an exchange. The

protocols at this reference point were standardised in ETSI areas as the V5 interface.

LAN:

A local-area network is a computer network covering a small geographic area, like a

home, office, or group of buildings (e.g., a school). The defining characteristics of LANs, in

contrast to wide-area networks (WANs), include their much higher data-transfer rates,

smaller geographic range, and lack of a need for leased telecommunication lines.