Академический Документы

Профессиональный Документы

Культура Документы

Restaurant Data Processing Engine

Загружено:

RKАвторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Restaurant Data Processing Engine

Загружено:

RKАвторское право:

Доступные форматы

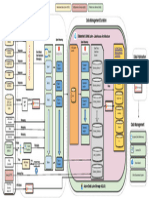

Data Processing Engine Using Kafka & K-Strems

Rajakrishna Reddy | 02 August 2019

Processing state to the state topic

Stored Data Categorized by Store or Multiple Stores

Saves the current

Push the data to Kafka grouped by store state to one of the

Core Processing Engine core topics for fault

tolerance

Store A Processor A Semi Processed A Processor A(2..n)

SemiProcsd

Semi

Semi

Procsd A( (Level2)

Level2)

Procsd A A( Level2)

Finalizer A Final A Grabs the analysis

Legends: Store-Data: Stored in Kafka

identified by Store, and also can be

grouped by Stores, partitioned in the

same way.

Collection of records that get stored

in this topic (These could be related

a single store or multiple stores.)

Processor which processes the

stored data on the fly using the K-

Strems

Transformer that could transform

the data to anther format or could

enrich the existing data by

requirement.

Problem:

A large fast food chain wants you to generate forecast for 2000 restaurants of this fast food chain. Each restaurant has close to 500 items that they sell.

The fast food chain provides data for last 3 years at a store, item, day level. The ask is provide forecast out for the following year.

Assume the data is static. Data Scientist look at the problem and have figured out a solution that provides the best forecast.

The data scientist have built an algorithm that takes all data at a store level and produce forecasted output at the store level.

It takes them 15 minutes to process each store.

Solution:

I've planned to use Kafka and K-Streams for this solution as we need, a data storage and fast computing power. Furnished my solutions for all under each question.

Questions:

1) Given the input data is static. What is the right technology to store the data and what would be the partitioning strategy?

As we are using Kafka for this, the data would get stored as part of the Kafka itself and the partitioning could be store wise or we can group multiple stores data in each partition (This

would be the better approach as we have 2k+ stores), based on our computing requirement as computing is done based on the partitions.

2) Each store takes 15 minutes, how would you design the system to orchestrate the compute faster - so the entire compute can finish this in < 5hrs

We can reduce the computing time by allowing the system to run in parallel for each store or group of stores based on the number of partitions, as each partition data can be run in parallel.

Here we can make use of the K-Streams which could transform/analyze the data on the fly and also has the capability to run custom analytical algorithms on the data flow. If you could see

my design doc, there are multiple levels and intermediate topics in each store's data processing, those processes could run our custom analytical algorithms and yield the results and store

them in a final topic.

Вам также может понравиться

- StoreOnce Deepdive ISV IntegrationДокумент77 страницStoreOnce Deepdive ISV IntegrationGuillermo García GándaraОценок пока нет

- Real Time Analytics With Apache Kafka and Spark: @rahuldausaДокумент54 страницыReal Time Analytics With Apache Kafka and Spark: @rahuldausaOzioma IhekwoabaОценок пока нет

- Presto: Sql-On-Anything: Dataworks Summit, San Jose 2017Документ31 страницаPresto: Sql-On-Anything: Dataworks Summit, San Jose 2017DheepikaОценок пока нет

- Impairment Modelling Using R v1.0Документ43 страницыImpairment Modelling Using R v1.0Anita LucyanaОценок пока нет

- Developing Web Applications with Apache, MySQL, memcached, and PerlОт EverandDeveloping Web Applications with Apache, MySQL, memcached, and PerlОценок пока нет

- CPA CacheДокумент11 страницCPA CacheguiovannimОценок пока нет

- BGS Survey Folder StructureДокумент6 страницBGS Survey Folder Structureeplan drawingsОценок пока нет

- Spark Vs Hadoop Features SparkДокумент9 страницSpark Vs Hadoop Features SparkconsaniaОценок пока нет

- 08u 1Документ31 страница08u 1Anonymous G1iPoNOKОценок пока нет

- DBA2 PremmДокумент36 страницDBA2 Premmafour98Оценок пока нет

- 09 - 1 - An Efficient and Modular Approach For Formally Verifying Cache ImplementationsДокумент9 страниц09 - 1 - An Efficient and Modular Approach For Formally Verifying Cache Implementationsamitpatel1991Оценок пока нет

- XI30 CPA Cache: Dennis KroppДокумент8 страницXI30 CPA Cache: Dennis KroppAlexОценок пока нет

- Real Time Analytics With Apache Kafka and Spark: Rahul JainДокумент54 страницыReal Time Analytics With Apache Kafka and Spark: Rahul JainSudhanshoo SaxenaОценок пока нет

- Spark和YARN:最好一起工作 讲话Документ22 страницыSpark和YARN:最好一起工作 讲话NavneetKambojОценок пока нет

- Compute Caches: NtroductionДокумент12 страницCompute Caches: NtroductionGoblenОценок пока нет

- Delta Lake Landscape 2022 Target State Architecture 1.5Документ1 страницаDelta Lake Landscape 2022 Target State Architecture 1.5Le VietОценок пока нет

- Extreme Scale 15min IntroДокумент18 страницExtreme Scale 15min IntroYakura CoffeeОценок пока нет

- BRK2272 SAP Migration To AzureДокумент34 страницыBRK2272 SAP Migration To Azurepatip9Оценок пока нет

- Cheat Sheet 1Документ2 страницыCheat Sheet 1Anusha GuptaОценок пока нет

- Cloud Native London HopsworksДокумент33 страницыCloud Native London HopsworksJim DowlingОценок пока нет

- Integrated Space Planning Data ExtractioДокумент7 страницIntegrated Space Planning Data Extractiosusmita jenaОценок пока нет

- Light: A Scalable, High-Performance and Fully-Compatible User-Level TCP StackДокумент27 страницLight: A Scalable, High-Performance and Fully-Compatible User-Level TCP StackhomeparaОценок пока нет

- Abinitio-MaterialДокумент11 страницAbinitio-Materialinderjeetkumar singhОценок пока нет

- SmartBabyincub DrawioДокумент1 страницаSmartBabyincub DrawioYOSEF AbdoОценок пока нет

- System Architecture: Client/server Processes Instances ClientsДокумент8 страницSystem Architecture: Client/server Processes Instances ClientsDr. Amresh NikamОценок пока нет

- Data Platform and Analytics Foundational Training: (Speaker Name)Документ14 страницData Platform and Analytics Foundational Training: (Speaker Name)Kathalina SuarezОценок пока нет

- ZopzopДокумент1 страницаZopzopMaciel VierОценок пока нет

- PowerHA - 3 Basic ConfigurationДокумент52 страницыPowerHA - 3 Basic ConfigurationRobin LiОценок пока нет

- Java MaterialДокумент123 страницыJava MaterialSai Krishna BОценок пока нет

- Spring XD and Sqoop (Dec 2014)Документ26 страницSpring XD and Sqoop (Dec 2014)Krishna JandhyalaОценок пока нет

- Bda Unit-4 PDFДокумент63 страницыBda Unit-4 PDFHarryОценок пока нет

- 5 Spark Kafka Cassandra Slides PDFДокумент20 страниц5 Spark Kafka Cassandra Slides PDFusernameusernaОценок пока нет

- JVM ArchitectureДокумент4 страницыJVM ArchitecturePavan ReddyОценок пока нет

- Eden EMS ArchitectureДокумент5 страницEden EMS ArchitectureKin KawОценок пока нет

- Big Data Meets HPCДокумент3 страницыBig Data Meets HPCPeterJohn32Оценок пока нет

- Technical Notes: Emc Recoverpoint Deploying Recoverpoint With Santap and San-OsДокумент63 страницыTechnical Notes: Emc Recoverpoint Deploying Recoverpoint With Santap and San-Osluna5330Оценок пока нет

- Infineon-S25fl128p 128 Mbit 3.0 V Flash Memory-datasheet-V14 00-EnДокумент44 страницыInfineon-S25fl128p 128 Mbit 3.0 V Flash Memory-datasheet-V14 00-EnAlexОценок пока нет

- High Availability Oracle DatabaseДокумент15 страницHigh Availability Oracle DatabaseAswin HadinataОценок пока нет

- Strand7 Feature Summary - USAДокумент1 страницаStrand7 Feature Summary - USADave LiОценок пока нет

- DPDKДокумент10 страницDPDKGabriel FrancischiniОценок пока нет

- Enable Greater Data Reduction and Storage Performance With The Dell EMC PowerStore 7000X Storage ArrayДокумент1 страницаEnable Greater Data Reduction and Storage Performance With The Dell EMC PowerStore 7000X Storage ArrayPrincipled TechnologiesОценок пока нет

- Performance Comparison of FPGA, GPU and CPU in Image ProcessingДокумент7 страницPerformance Comparison of FPGA, GPU and CPU in Image ProcessingA. HОценок пока нет

- Object Oriented Programming JavaДокумент29 страницObject Oriented Programming JavaanshikaОценок пока нет

- Top 5 Reasons To Choose Zwcad: Create Amazing ThingsДокумент1 страницаTop 5 Reasons To Choose Zwcad: Create Amazing ThingsMuflihMuhammadОценок пока нет

- OC HUG 2014-10-4x3 Apache PhoenixДокумент58 страницOC HUG 2014-10-4x3 Apache PhoenixshivasudhakarОценок пока нет

- DMO Introduction SPS 28Документ22 страницыDMO Introduction SPS 28tahirОценок пока нет

- TCS Big Data Lake Presentation - VIL - 17apr2019Документ21 страницаTCS Big Data Lake Presentation - VIL - 17apr20191977amОценок пока нет

- Database Migration As Part of SUM 1641000052Документ21 страницаDatabase Migration As Part of SUM 1641000052Nor Azly Mohd NorОценок пока нет

- Hardwareanforderungen VISUS JiveX PACSДокумент1 страницаHardwareanforderungen VISUS JiveX PACSInan SahinОценок пока нет

- NVMe Developer Days - December 2018 - The NVMe Managemant Interface NVMe MI - Overview and New DevelopmentsДокумент22 страницыNVMe Developer Days - December 2018 - The NVMe Managemant Interface NVMe MI - Overview and New DevelopmentsIvan PetrovОценок пока нет

- POSTER - Laboratory Software That Connects To Your Labs EcosystemДокумент1 страницаPOSTER - Laboratory Software That Connects To Your Labs Ecosystemmicrobehunter007Оценок пока нет

- Ux402 04 SSGДокумент32 страницыUx402 04 SSGahmedОценок пока нет

- The Two Types of Modes Are: 1) Normal Mode in Which For Every Record A Separate DML STMT Will Be Prepared and ExecutedДокумент6 страницThe Two Types of Modes Are: 1) Normal Mode in Which For Every Record A Separate DML STMT Will Be Prepared and ExecutedRajesh RaiОценок пока нет

- CoreJava NotesДокумент25 страницCoreJava Notesnikita thombreОценок пока нет

- Always Ready For Your Next Challenge: The All-New Raptor Tank Gauging SystemДокумент11 страницAlways Ready For Your Next Challenge: The All-New Raptor Tank Gauging SystemAna-Maria GligorОценок пока нет

- Aws Backup (Per 15 Minute) : Reverse FeedДокумент1 страницаAws Backup (Per 15 Minute) : Reverse FeedsiyakinОценок пока нет

- PostgresChina2018 刘东明 PostgreSQL并行查询Документ36 страницPostgresChina2018 刘东明 PostgreSQL并行查询Thoa NhuОценок пока нет

- 2-Transaction-Aware SSD Cache Allocation For The Virtualization EnvironmentДокумент6 страниц2-Transaction-Aware SSD Cache Allocation For The Virtualization EnvironmentJOSE GABRIEL CORTAZAR OCAMPOОценок пока нет

- Managing Services in The Enterprise Services Repository: WarningДокумент15 страницManaging Services in The Enterprise Services Repository: WarningSujith KumarОценок пока нет

- Homework 1Документ3 страницыHomework 1Aditya MishraОценок пока нет

- CSI201 Syllabus Fall2015Документ6 страницCSI201 Syllabus Fall2015lolОценок пока нет

- Step Replace Module Flexi BSC NokiaДокумент19 страницStep Replace Module Flexi BSC Nokiarudibenget0% (1)

- MBA (DS) - Introduction To Python ProgrammingДокумент144 страницыMBA (DS) - Introduction To Python ProgrammingSyed AmeenОценок пока нет

- Exception Handling in C#Документ42 страницыException Handling in C#asrat yehombaworkОценок пока нет

- Lapp - Pro217en - PT FSL 5x075 - Db0034302en - DatasheetДокумент1 страницаLapp - Pro217en - PT FSL 5x075 - Db0034302en - DatasheetMihai BancuОценок пока нет

- Thanks For Downloading OperaДокумент2 страницыThanks For Downloading OperaAlexis KarampasОценок пока нет

- Rab CCTVДокумент10 страницRab CCTVMjska rakaОценок пока нет

- Rickert Excel PDFДокумент11 страницRickert Excel PDFKirito NajdОценок пока нет

- Proizvodnja Krompira - DR Petar MaksimovicДокумент57 страницProizvodnja Krompira - DR Petar MaksimovicDejan PanticОценок пока нет

- SPSS Lesson 1Документ10 страницSPSS Lesson 1maundumiОценок пока нет

- Femap Basic - Day 1Документ129 страницFemap Basic - Day 1spylogo3Оценок пока нет

- 3-D Numerical Analysis of Orthogonal Cutting Process Via Mesh-Free MethodДокумент16 страниц3-D Numerical Analysis of Orthogonal Cutting Process Via Mesh-Free MethodpramodgowdruОценок пока нет

- Install and Configure Windows 2008 R2 VPNДокумент76 страницInstall and Configure Windows 2008 R2 VPNVîrban DoinaОценок пока нет

- RecoveryДокумент14 страницRecoveryprofahmedОценок пока нет

- Bugfender AlternativeДокумент6 страницBugfender AlternativeramapandОценок пока нет

- STQA Introduction PPT 3 (Unit 3)Документ43 страницыSTQA Introduction PPT 3 (Unit 3)Gowtham RajuОценок пока нет

- 4 - Design of I2C Master Core With AHB Protocol For High Performance InterfaceДокумент72 страницы4 - Design of I2C Master Core With AHB Protocol For High Performance Interfacevsangvai26Оценок пока нет

- Timeline of C++ CourseДокумент9 страницTimeline of C++ CoursesohamОценок пока нет

- Designjet HP 111Документ18 страницDesignjet HP 111Javier Francia AlcazarОценок пока нет

- CT122-3-2-BIS - Individual Assignment Question - 2024Документ5 страницCT122-3-2-BIS - Individual Assignment Question - 2024Spam UseОценок пока нет

- CharmДокумент660 страницCharmanirbanisonlineОценок пока нет

- PMP 49 ProcessesДокумент1 страницаPMP 49 ProcessesRai Shaheryar BhattiОценок пока нет

- Iso 554-1976 - 2Документ1 страницаIso 554-1976 - 2Oleg LipskiyОценок пока нет

- Regional SettingsДокумент7 страницRegional SettingsSumana VenkateshОценок пока нет

- ABB-Welcome System and ABB-Welcome IP System: Door Entry SystemsДокумент1 страницаABB-Welcome System and ABB-Welcome IP System: Door Entry SystemspeteatkoОценок пока нет

- 2015-Questionnaire-Tourists' Intention To Visit A Destination The Role of Augmented Reality (AR) Application For A Heritage SiteДокумент12 страниц2015-Questionnaire-Tourists' Intention To Visit A Destination The Role of Augmented Reality (AR) Application For A Heritage SiteesrОценок пока нет

- Firepower System EStreamer Integration Guide v6 1Документ709 страницFirepower System EStreamer Integration Guide v6 1Leonardo Francia RiosОценок пока нет

- Turbiquant - MerckДокумент20 страницTurbiquant - MerckHedho SuryoОценок пока нет

- 1 s2.0 S2095809918301887 MainДокумент15 страниц1 s2.0 S2095809918301887 MainBudi Utami FahnunОценок пока нет