Академический Документы

Профессиональный Документы

Культура Документы

Memory Hierarchy Design

Загружено:

Omkar SanjayОригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Memory Hierarchy Design

Загружено:

Omkar SanjayАвторское право:

Доступные форматы

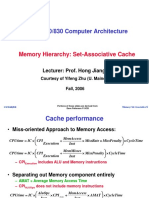

Computer Architecture

A Quantitative Approach, Fifth Edition

Chapter 2

Memory Hierarchy Design

Copyright © 2012, Elsevier Inc. All rights reserved. 1

Introduction

Introduction

Programmers want unlimited amounts of memory with

low latency

Fast memory technology is more expensive per bit than

slower memory

Solution: organize memory system into a hierarchy

Entire addressable memory space available in largest, slowest

memory

Incrementally smaller and faster memories, each containing a

subset of the memory below it, proceed in steps up toward the

processor

Temporal and spatial locality insures that nearly all

references can be found in smaller memories

Gives the illusion of a large, fast memory being presented to the

processor

Copyright © 2012, Elsevier Inc. All rights reserved. 2

Introduction

Memory Hierarchy

Copyright © 2012, Elsevier Inc. All rights reserved. 3

Introduction

Memory Performance Gap

Increase in

memory Increase in

requests/sec memory

acesses/sec

Copyright © 2012, Elsevier Inc. All rights reserved. 4

Introduction

Memory Hierarchy Design

Memory hierarchy design becomes more crucial

with recent multi-core processors:

Aggregate peak bandwidth grows with # cores:

Intel Core i7 can generate two references per core per clock

Four cores and 3.2 GHz clock

25.6 billion 64-bit data references/second +

12.8 billion 128-bit instruction references

= 409.6 GB/s!

DRAM bandwidth is only 6% of this (25 GB/s)

Requires:

Multi-port, pipelined caches

Two levels of cache per core

Shared third-level cache on chip

Copyright © 2012, Elsevier Inc. All rights reserved. 5

Introduction

Performance and Power

High-end microprocessors have >10 MB on-chip

cache

Consumes large amount of area and power budget

Static power due to leakage when not operating and

dynamic power when performing reads/writes.

Power consumption in PMDs due to caches can be

between 25% and 50% of the total power

consumption.

Copyright © 2012, Elsevier Inc. All rights reserved. 6

Introduction

Memory Hierarchy Basics

When a word is not found in the cache, a miss

occurs:

Fetch word from lower level in hierarchy, requiring a

higher latency reference

Lower level may be another cache or the main

memory

Also fetch the other words contained within the block

Takes advantage of spatial locality

Place block into cache in any location within its set,

determined by address

block address MOD number of sets in the cache

Copyright © 2012, Elsevier Inc. All rights reserved. 7

Introduction

Memory Hierarchy Basics

CPU

Read 007

CACHE MISS! CACHE

000 001 003 004

005 006 007 008

MEMORY

009 010 011 012

013 014 015 016

017 018 019 020

021 022 023 024

Copyright © 2013, Daniel A. Menasce. All rights reserved. 8

Introduction

Memory Hierarchy Basics

CPU

007

005 006 007 008

CACHE

005 006 007 008

000 001 003 004

005 006 007 008

MEMORY

009 010 011 012

013 014 015 016

017 018 019 020

021 022 023 024

Copyright © 2013, Daniel A. Menasce. All rights reserved. 9

Introduction

Memory Hierarchy Basics

CPU

Read 005

005 006 007 008

CACHE HIT! CACHE

000 001 003 004

005 006 007 008

MEMORY

009 010 011 012

013 014 015 016

017 018 019 020

021 022 023 024

Copyright © 2013, Daniel A. Menasce. All rights reserved. 10

Introduction

Memory Hierarchy Basics

CPU

005

005 006 007 008

CACHE

000 001 003 004

005 006 007 008

MEMORY

009 010 011 012

013 014 015 016

017 018 019 020

021 022 023 024

Copyright © 2013, Daniel A. Menasce. All rights reserved. 11

Introduction

Memory Hierarchy Basics

CPU

Read 018

005 006 007 008

CACHE MISS! CACHE

000 001 003 004

005 006 007 008

MEMORY

009 010 011 012

013 014 015 016

017 018 019 020

021 022 023 024

Copyright © 2013, Daniel A. Menasce. All rights reserved. 12

Introduction

Memory Hierarchy Basics

CPU

018

005 006 007 008

CACHE

017 018 019 020

CACHE MISS!

017 018 019 020

000 001 003 004

005 006 007 008

MEMORY

009 010 011 012

013 014 015 016

017 018 019 020

021 022 023 024

Copyright © 2013, Daniel A. Menasce. All rights reserved. 13

Introduction

Memory Hierarchy Basics

Placement of blocks in a cache

Set associative: block is mapped into a set and the

block is placed anywhere in the set

Finding a block: map block address to set and search

set (usually in parallel) to find block.

n blocks in a set: n-way associative cache

One block per set (n=1): direct-mapped cache

One set per cache: fully associative cache

Copyright © 2012, Elsevier Inc. All rights reserved. 14

Introduction

Fully Associative Cache

Memory block 3 can go anywhere in the cache.

Block 0

Block 1

Block 2

CACHE

Block 3

Block 0 000 001 003 004

Block 1 005 006 007 008

MEMORY

Block 2 009 010 011 012

Block 3 013 014 015 016

Block 4 017 018 019 020

Block 5 021 022 023 024

Copyright © 2013, Daniel A. Menasce. All rights reserved. 15

Introduction

2-Way Associative Cache

Memory block 3 can only go into cache set (3 mod 2) = 1

in the cache.

Block 0

SET 0 Block 1

Block 2

CACHE

SET 1

Block 3

Block 0 000 001 003 004

Block 1 005 006 007 008

MEMORY

Block 2 009 010 011 012

Block 3 013 014 015 016

Block 4 017 018 019 020

Block 5 021 022 023 024

Copyright © 2013, Daniel A. Menasce. All rights reserved. 16

Introduction

Direct-mapped Cache

Memory block 5 can only go into cache block (5 mod 4) = 1

in the cache.

SET 0 Block 0

SET 1

SET 2

Block 1

Block 2

CACHE

SET 3 Block 3

Block 0 000 001 003 004

Block 1 005 006 007 008

MEMORY

Block 2 009 010 011 012

Block 3 013 014 015 016

Block 4 017 018 019 020

Block 5 021 022 023 024

Copyright © 2013, Daniel A. Menasce. All rights reserved. 17

Introduction

Processor/Cache Addressing

2-way associative cache.

MEMORY

Processor Set 0: B0 0000

0001

0010

V Cache data Set 1: B1

1 1 0 0 0011

B TAG 0100

Set 0: B2

1 11 01110010 10100011 0101

0 0110

1 10 11101111 10001001 Set 1: B3

0111

Set 0: B4

11101111 1000

1 10001001 1001

1010

Set 1: B5

CACHE 1011

01110010 1100

Set 0: B6

<2> <1> <1> 10100011 1101

1110

Block Set 1: B7

1111

TAG Index

Offset <8>

address

INDEX: selects set

TAG: used to check for cache hit if valid bit (VB) is 1.

Copyright © 2013, Daniel A. Menasce. All rights reserved. 18

Introduction

Processor/Cache Addressing

2-way associative cache.

MEMORY

Processor Set 0: B0 0000

0001

0010

V Cache data Set 1: B1

1 1 0 0 0011

B TAG 0100

Set 0: B2

1 11 01110010 10100011 0101

0 0110

1 10 11101111 10001001 Set 1: B3

0111

= Set 0: B4

11101111 1000

1 10001001 1001

1010

CACHE HIT Set 1: B5

CACHE 1011

01110010 1100

Set 0: B6

<2> <1> <1> 10100011 1101

1110

Block Set 1: B7

1111

TAG Index

Offset <8>

address

INDEX: selects set

TAG: used to check for cache hit if valid bit (VB) is 1.

Copyright © 2013, Daniel A. Menasce. All rights reserved. 19

Introduction

Processor/Cache Addressing

2-way associative cache.

MEMORY

Processor Set 0: B0 0000

0001

0010

V Cache data Set 1: B1

1 1 0 0 0011

B TAG 0100

Set 0: B2

0 11 01001110 10110111 0101

0 0110

1 10 11101111 10001001 Set 1: B3

0111

= Set 0: B4

11101111 1000

1 10001001 1001

1010

CACHE MISS Set 1: B5

WHY?

CACHE 1011

01110010 1100

Set 0: B6

<2> <1> <1> 10100011 1101

1110

Block Set 1: B7

1111

TAG Index

Offset <8>

address

INDEX: selects set

TAG: used to check for cache hit if valid bit (VB) is 1.

Copyright © 2013, Daniel A. Menasce. All rights reserved. 20

Introduction

Processor/Cache Addressing

2-way associative cache.

MEMORY

Processor Set 0: B0 0000

0001

0010

V Cache data Set 1: B1

0 1 0 0 0011

B TAG 0100

Set 0: B2

0 11 01001110 10110111 0101

0 0110

1 10 11101111 10001001 Set 1: B3

0111

≠ Set 0: B4

11101111 1000

1 10001001 1001

1010

CACHE MISS Set 1: B5

CACHE 1011

01110010 1100

Set 0: B6

<2> <1> <1> 10100011 1101

1110

Block Set 1: B7

1111

TAG Index

Offset <8>

address

INDEX: selects set

TAG: used to check for cache hit if valid bit (VB) is 1.

Copyright © 2013, Daniel A. Menasce. All rights reserved. 21

Introduction

Cache Miss: Block Replacement

Which Block to Replace?

Direct-mapped cache:

Only one option: replace the block in the location where the

incoming block has to go.

Fully Associative or Set Associative

Random: spreads allocation uniformly.

Least Recently Used (LRU): the block replaced is the one

that has been unused for the longest time. Can be expensive

to implement in hardware. Pseudo-LRU provides an

approximation using bits associated to each set to record

when blocks in a set were accessed.

First In First Out (FIFO): selects the oldest rather than the

LRU block.

Copyright © 2012, Elsevier Inc. All rights reserved. 22

Introduction

Memory Hierarchy Basics

Writing to cache: two strategies

Write-through

Immediately update lower levels of hierarchy

Write-back

Only update lower levels of hierarchy when an updated block

is replaced

Both strategies use write buffer to make writes

asynchronous

Copyright © 2012, Elsevier Inc. All rights reserved. 23

Introduction

Write-through

CPU

Write 018

Block 0 005 006 007 008

CACHE

Block 1 017 018 019 020

CACHE HIT!

Block 2

Block 3

018

000 001 003 004

005 006 007 008

MEMORY

009 010 011 012

013 014 015 016

017 018 019 020

021 022 023 024

Copyright © 2013, Daniel A. Menasce. All rights reserved. 24

Introduction

Write-back – Part I

CPU

Write 018

Block 0 005 006 007 008

CACHE

Block 1 017 018 019 020

CACHE HIT!

Block 2

Block 3

000 001 003 004

005 006 007 008

MEMORY

009 010 011 012

013 014 015 016

017 018 019 020

021 022 023 024

Copyright © 2013, Daniel A. Menasce. All rights reserved. 25

Introduction

Write-back – Part II

CPU

Read 024

Block 0 005 006 007 008

CACHE

Block 1 017 018 019 020

CACHE MISS!

Block 2

Block 3

018

000 001 003 004

005 006 007 008

MEMORY

009 010 011 012

013 014 015 016

017 018 019 020

021 022 023 024

Copyright © 2013, Daniel A. Menasce. All rights reserved. 26

Introduction

Write-back – Part III

CPU

BLOCK 1

REPLACEMENT

Read 024 024

Block 0 005 006 007 008

CACHE

Block 1 021 022 023 024

Block 2

Block 3

021 022 023 024

000 001 003 004

005 006 007 008

MEMORY

009 010 011 012

013 014 015 016

017 018 019 020

021 022 023 024

Copyright © 2013, Daniel A. Menasce. All rights reserved. 27

Introduction

Memory Hierarchy Basics

Miss rate

Fraction of cache access that result in a miss

Causes of misses (3 C’s)

Compulsory

First reference to a block. Would happen even for infinite

caches.

Capacity

Blocks discarded and later retrieved

Conflict

Program makes repeated references to multiple addresses

from different blocks that map to the same location in the

cache

Copyright © 2012, Elsevier Inc. All rights reserved. 28

Introduction

Memory Hierarchy Basics

MemoryStallCycles = NumberOfMisses × MissPenalty =

Misses

= IC × × MissPenalty

Instruction

MemoryAccesses

= IC × × MissRate × MissPenalty

Instruction

Note that speculative and multithreaded

processors

€ may execute other instructions during

a miss

Reduces performance impact of misses

Copyright © 2012, Elsevier Inc. All rights reserved. 29

Introduction

Memory Performance

Example: Assume that the CPI of a computer is 1.0 when all memory

accesses hit in the cache. Data access instructions (loads and stores)

account for 50% of all instructions. The miss penalty is 25 cycles and the

miss rate is 4%. Assume that 70% instructions are cache hits.

What is the execution time in clock cycles (CC) and Instruction Counts (IC)?

Instruction Data Cache Hit/Miss Probability No. CCs

0.04 miss 0.014 1+25=26

0.5 memory

0.96 hit 0.336 1

0.7 Hit

1

0.5 no memory 0.35

1

0.04 miss 0.006 25+25+1=51

0.5 memory

0.96 hit 0.144 25+1=26

0.3 Miss

25+1=26

0.5 no memory 0.15

25+1=26

IC*(0.014*26+(0.336+0.35)*1+0.006*51+(0.144+0.15)*26)*CC = 9 * IC * CC

Copyright © 2013, Daniel A. Menasce All rights reserved. 30

Introduction

Memory Performance

Example: How would the execution time in the previous example

change if the miss rate were reduced to 2%?

Instruction Data Cache Hit/Miss Probability No. CCs

0.02 miss 0.007 1+25=26

0.5 memory

0.98 0.343 1

0.7 Hit

1

0.5 no memory 0.35

1

0.02 0.003 25+25+1=51

0.5 memory

0.98 0.147 25+1=26

0.3 Miss

25+1=26

0.5 no memory 0.15

25+1=26

IC*(0.007*26+(0.334+0.35)*1+0.003*51+(0.147+0.15)*26)*CC = 8.741 * IC * CC

Copyright © 2013, Daniel A. Menasce All rights reserved. 31

Introduction

Memory Performance

Example: What is the miss rate were kept at 4% but memory access

time were improved so that the miss penalty were reduced to 10 cycles?

Instruction Data Cache Hit/Miss Probability No. CCs

0.04 miss 0.014 1+10=11

0.5 memory

0.96 hit 0.336 1

0.7 Hit

1

0.5 no memory 0.35

1

0.04 miss 0.006 10+10+1=21

0.5 memory

0.96 hit 0.144 10+1=11

0.3 Miss

10+1=11

0.5 no memory 0.15

10+1=11

IC*(0.014*11+(0.336+0.35)*1+0.006*21+(0.144+0.15)*11)*CC = 4.2 * IC * CC

Avg. of 3.2 stall cycles/instruction

Copyright © 2013, Daniel A. Menasce All rights reserved. 32

Introduction

Memory Hierarchy Basics

Six basic cache optimizations:

Larger block size

Reduces compulsory misses

Increases capacity and conflict misses, increases miss penalty

Larger total cache capacity to reduce miss rate

Increases hit time, increases power consumption

Higher associativity

Reduces conflict misses

Increases hit time, increases power consumption

Higher number of cache levels (Multi-level caches)

Reduces overall memory access time:

HitTime L1 + MissRate L1 × (HitTime L2 + MissRate L2 × MissPenalty L2 )

Giving priority to read misses over writes

Reduces miss penalty

€ Avoiding address translation in cache indexing

Reduces hit time

Copyright © 2012, Elsevier Inc. All rights reserved. 33

Advanced Optimizations

Ten Advanced Optimizations

Based on improvements on

Hit time

Miss rate

Miss penalty

Cache bandwidth

Power consumption

Copyright © 2012, Elsevier Inc. All rights reserved. 34

Advanced Optimizations

Ten Advanced Optimizations (1)

Small and simple first level caches

Critical timing path:

addressing tag memory, then

comparing tags, then

selecting correct set

Direct-mapped caches can overlap tag compare and

transmission of data

Lower associativity reduces power because fewer

cache lines are accessed

Copyright © 2012, Elsevier Inc. All rights reserved. 35

Advanced Optimizations

L1 Size and Associativity

Access time vs. size and associativity

Copyright © 2012, Elsevier Inc. All rights reserved. 36

Advanced Optimizations

L1 Size and Associativity

Energy per read vs. size and associativity

Copyright © 2012, Elsevier Inc. All rights reserved. 37

Introduction

Memory Performance Example

Determine if a 32-KB 4-way set associative L1 cache has a faster memory

access time than a 32-KB 2-way set associative L1 cache. Assume that

the miss penalty to L2 is 15 clock cycles and that the hit time for the

the faster L1 cache is 1 clock cycle. Ignore misses beyond the L2 cache

and assume miss rates for the L1 cache as below.

Miss rate for 2-way associative = 0.038

Miss rate for 4-way associative = 0.037

Copyright © 2013, Daniel A. Menasce All rights reserved. 38

Introduction

Memory Performance Example

Determine if a 32-KB 4-way set associative L1 cache has a faster memory

access time than a 32-KB 2-way set associative L1 cache. Assume that

the miss penalty to L2 is 15 clock cycles and that the hit time for the

the faster L1 cache is 1 clock cycle. Ignore misses beyond the L2 cache

and assume miss rates for the L1 cache as below.

Miss rate for 2-way associative = 0.038

Miss rate for 4-way associative = 0.037

Hit time 2-way associative = 1.

Avg. access time for 2-way associative = HitTime + MissRate * MissPenalty

= 1 + 0.038 * 15 = 1.57

The figure on slide 36 shows that the access time for the 4-way L1 cache is approximately 1.4 that of

2-way associative.

Avg. access time for 4-way associative = HitTime + MissRate * MissPenalty

= 1.4 * 1 + 0.037 * 15 = 1.955

Copyright © 2013, Daniel A. Menasce All rights reserved. 39

Advanced Optimizations

Way Prediction (2)

To improve hit time, predict the way to pre-set

mux (i.e., the block within the set of next cache

accesses).

Mis-prediction gives longer hit time

Prediction accuracy

> 90% for two-way

> 80% for four-way

I-cache has better accuracy than D-cache

First used on MIPS R10000 in mid-90s

Used on ARM Cortex-A8

Copyright © 2012, Elsevier Inc. All rights reserved. 40

Advanced Optimizations

Pipelining Cache (3)

Pipeline cache access to improve bandwidth

Examples:

Pentium: 1 cycle

Pentium Pro – Pentium III: 2 cycles

Pentium 4 – Core i7: 4 cycles

Increases branch mis-prediction penalty

Makes it easier to increase associativity

Copyright © 2012, Elsevier Inc. All rights reserved. 41

Advanced Optimizations

Nonblocking Caches (4)

Allow hits before

previous misses

complete

“Hit under miss”

“Hit under multiple

miss”

L2 must support this

In general,

processors can hide

L1 miss penalty but

not L2 miss penalty

Copyright © 2012, Elsevier Inc. All rights reserved. 42

Advanced Optimizations

Multibanked Caches (5)

Organize cache as independent banks to

support simultaneous access

ARM Cortex-A8 supports 1-4 banks for L2

Intel i7 supports 4 banks for L1 and 8 banks for L2

Interleave banks according to block address

Copyright © 2012, Elsevier Inc. All rights reserved. 43

Advanced Optimizations

Critical Word First, Early Restart (6)

Critical word first

Request missed word from memory first

Send it to the processor as soon as it arrives

Early restart

Request words in normal order

Send missed work to the processor as soon as it

arrives

Effectiveness of these strategies depends on

block size and likelihood of another access to

the portion of the block that has not yet been

fetched

Copyright © 2012, Elsevier Inc. All rights reserved. 44

Advanced Optimizations

Merging Write Buffer (7)

When storing to a block that is already pending in the

write buffer, update write buffer

Reduces stalls due to full write buffer

Do not apply to I/O addresses

No write

buffering

Write buffering

Copyright © 2012, Elsevier Inc. All rights reserved. 45

Advanced Optimizations

Compiler Optimizations (8)

Loop Interchange

Swap nested loops to access memory in

sequential order

Blocking (see examples on pages 89-90)

Instead of accessing entire rows or columns,

subdivide matrices into blocks

Requires more memory accesses but improves

locality of accesses

Copyright © 2012, Elsevier Inc. All rights reserved. 46

Advanced Optimizations

Loop Interchange

Assume [5000,100] array stored in row major

order (i.e., laid out by rows)

Before: x[0,0], x[1,0], x[2,0] …

for (j=0; j < 100; j = j + 1)

for (i=0; i < 5000; i = i + 1)

x[i][j] = 2 * x[i][j];

After: x[0,0], x[0,1], x[0,1], …

for (i=0; i < 5000; i = i + 1)

for (j=0; j < 100; j = j + 1)

x[i][j] = 2 * x[i][j];

Which one produces less misses?

Copyright © 2012, Elsevier Inc. All rights reserved. 47

Advanced Optimizations

Hardware Prefetching (9)

Fetch two blocks on miss (include next

sequential block)

Pentium 4 Pre-fetching (subset of SPEC 2000 programs)

Copyright © 2012, Elsevier Inc. All rights reserved. 48

Advanced Optimizations

Compiler Prefetching (10)

Insert prefetch instructions before data is needed

Non-faulting: prefetch doesn’t cause exceptions

Register prefetch

Loads data into register

Cache prefetch

Loads data into cache

Combine with loop unrolling and software

pipelining

Copyright © 2012, Elsevier Inc. All rights reserved. 49

Advanced Optimizations

Summary

Copyright © 2012, Elsevier Inc. All rights reserved. 50

Memory Technology

Main Memory Technology

Performance metrics

Latency is concern of cache

Bandwidth is concern of multiprocessors and I/O

Access time

Time between read request and when desired word

arrives

Cycle time

Minimum time between unrelated requests to memory

DRAM used for main memory, SRAM used

for cache

Copyright © 2012, Elsevier Inc. All rights reserved. 51

Memory Technology

Memory Technology

SRAM

Does not require refresh

Requires low power to retain bit

Requires 6 transistors/bit

DRAM (used for main memory)

Must be re-written after being read

Must also be periodically refreshed

Every ~ 8 ms

Each row can be refreshed simultaneously by reading

the row

One transistor/bit

Address lines are multiplexed:

Upper half of address: row access strobe (RAS)

Lower half of address: column access strobe (CAS)

Copyright © 2012, Elsevier Inc. All rights reserved. 52

Memory Technology

Memory Technology

Access time

Time between a memory request and when

the desired word arrives.

Cycle time

Minimum time between unrelated request

to memory

SRAMs don’t need to refresh => cycle

time and access times are very close.

Copyright © 2012, Elsevier Inc. All rights reserved. 53

Memory Technology

Memory Technology

Amdahl:

Memory capacity should grow linearly with processor speed

Unfortunately, memory capacity and speed has not kept

pace with processors

Some optimizations:

Multiple accesses to same row (using row buffer)

Synchronous DRAM (SDRAM)

Added clock to DRAM interface

Burst mode with critical word first

Wider interfaces (DDR2 and DDR3)

Double data rate (DDR): transfer data at the rising and falling

edges of the clock cycle.

Multiple independent banks on each DRAM device =>

address = (bank #, row #, column #)

Copyright © 2012, Elsevier Inc. All rights reserved. 54

Memory Technology

Memory Optimizations

Copyright © 2012, Elsevier Inc. All rights reserved. 55

Memory Technology

Memory Optimizations

Copyright © 2012, Elsevier Inc. All rights reserved. 56

Memory Technology

Memory Optimizations

DDR:

DDR2

Lower power (2.5 V -> 1.8 V)

Higher clock rates (266 MHz, 333 MHz, 400 MHz)

DDR3

1.5 V

Maximum clock frequency: 800 MHz

DDR4

1-1.2 V

Maximum clock frequency: 1600 MHz

GDDR5 is graphics memory based on DDR3

Used in GPUs.

Copyright © 2012, Elsevier Inc. All rights reserved. 57

Memory Technology

Memory Optimizations

Graphics memory:

Achieve 2-5 X bandwidth per DRAM vs. DDR3

Wider interfaces (32 vs. 16 bit)

Higher clock rate

Possible because they are attached via soldering instead of

socketted DIMM modules

Reducing power in SDRAMs:

Lower voltage (1.35V or 1.5V)

Low power mode (ignores clock, continues to

refresh)

Copyright © 2012, Elsevier Inc. All rights reserved. 58

Memory Technology

Memory Power Consumption

Micron 1.5 V 2Gb DDR3-1066 SDRAM

Copyright © 2012, Elsevier Inc. All rights reserved. 59

Memory Technology

Flash Memory

Type of EEPROM (Electronically Erasable

Programmable Read-Only Memory)

Must be erased (in blocks) before being

overwritten

Non volatile

Limited number of write cycles

Cheaper than SDRAM, more expensive than

disk

Slower than SRAM, faster than disk

Copyright © 2012, Elsevier Inc. All rights reserved. 60

Memory Technology

Memory Dependability

Memory is susceptible to cosmic rays

Soft errors: dynamic errors

Detected and fixed by error correcting codes

(ECC)

Hard errors: permanent errors

Use sparse rows to replace defective rows

Chipkill: a RAID-like error recovery

technique:

Data and ECC is distributed so that loss of a

single memory chip can be tolerated and its

content reconstructed from the others.

Copyright © 2012, Elsevier Inc. All rights reserved. 61

Virtual Memory and Virtual Machines

Virtual Memory Basics

The address space of a process is divided

into equal sized pages (e.g., 4KB in size).

Main memory is divided into page frames that

can hold one page each.

The size of a process’ address space can be

larger than main memory.

At any time, some pages of a process may

not be in main memory. All pages are on

the paging disk.

When a page that is not in memory is

referenced, it must be brought from disk to

memory. This is a page fault.

Copyright © 2013, D.A. Menasce. All rights reserved. 62

Virtual Memory and Virtual Machines

Virtual Memory Basics

If all page frames are occupied when a page

fault occurs, a page has to evicted from

memory using a page replacement algorithm.

Least Recently Used (LRU) is an example

of a PRA.

There is a Page Table per process,

maintained by the operating system.

Translation from a virtual address to a

physical address is done by the

hardware.

A page fault invokes the operating

system.

Copyright © 2013, D.A. Menasce. All rights reserved. 63

Virtual Memory and Virtual Machines

Virtual Memory Basics

Virtual Address

A Page 0

C Page Frame 0

B Page 1

VPN Page Offset P Page Frame 1 C Page 2

N Page Frame 2 D Page 3

B Page Frame 3 E Page 4

Main memory F Page 5

G Page 6

H Page 7

Physical Address I Page 8

PFN Page Offset J Page 9

K Page 10

VPN Frame Number L Page 11

Page 0 M Page 12

Page 1 3 N Page 13

Page 2 0 0 Page 14

… … P Page 15

Paging Disk

Page 13 2

(process address

Page 14

Page 15 1 space)

Page Table

Copyright © 2013, D.A. Menasce. All rights reserved. 64

Virtual Memory and Virtual Machines

Virtual Memory Basics

Virtual Address

VPN A Page 0

C Page Frame 0

B Page 1

2 Page Offset P Page Frame 1 C Page 2

N Page Frame 2 D Page 3

B Page Frame 3 E Page 4

Main memory F Page 5

G Page 6

H Page 7

Physical Address I Page 8

0 Page Offset J Page 9

K Page 10

VPN Frame Number L Page 11

Page 0 - M Page 12

Page 1 3 N Page 13

Page 2 0 0 Page 14

… … P Page 15

Paging Disk

Page 13 2

(process address

Page 14 -

Page 15 1 space)

Page Table

Copyright © 2013, D.A. Menasce. All rights reserved. 65

Virtual Memory and Virtual Machines

Virtual Memory Basics

Virtual Address

VPN A Page 0

C Page Frame 0

B Page 1

14 Page Offset P Page Frame 1 C Page 2

N Page Frame 2 D Page 3

B Page Frame 3 E Page 4

Main memory F Page 5

G Page 6

H Page 7

Physical Address I Page 8

Page Offset J Page 9

K Page 10

VPN Frame Number L Page 11

Page 0 - M Page 12

Page 1 3 N Page 13

Page 2 0 0 Page 14

… … P Page 15

Paging Disk

Page 13 2

(process address

Page 14 - Page Fault!

Page 15 1 space)

Page Table

Copyright © 2013, D.A. Menasce. All rights reserved. 66

Virtual Memory and Virtual Machines

Virtual Memory Basics

Virtual Address

VPN A Page 0

C Page Frame 0

B Page 1

14 Page Offset P Page Frame 1 C Page 2

O Page Frame 2 D Page 3

B Page Frame 3 E Page 4

Main memory F Page 5

G Page 6

H Page 7

Physical Address I Page 8

2 Page Offset J Page 9

K Page 10

VPN Frame Number L Page 11

Page 0 - M Page 12

Page 1 3 N Page 13

Page 2 0 0 Page 14

… … P Page 15

Paging Disk

Page 13 -

(process address

Page 14 2

Page 15 1 space)

Page Table

Copyright © 2013, D.A. Menasce. All rights reserved. 67

Virtual Memory and Virtual Machines

Virtual Memory Example

A computer system has 4 GB of main

memory and a virtual address space equal to

64 GB. Each page is 8 KB long.

How many pages are there in the virtual

address space?

How many page frames are there in memory?

How many bits are needed for the VPN field

of an address?

How many bits are needed for the page frame

bit of an address?

How many bits are needed for the page offset

field?

Copyright © 2013, D.A. Menasce. All rights reserved. 68

Virtual Memory and Virtual Machines

Virtual Memory Example

A computer system has 4 GB of main

memory and a virtual address space equal to

64 GB. Each page is 8 KB long.

How many pages are there in the virtual

address space?

64 * 2^30 / (8 * 2^10) = 8 * 2^20 = 2^23 pages

Copyright © 2013, D.A. Menasce. All rights reserved. 69

Virtual Memory and Virtual Machines

Virtual Memory Example

A computer system has 4 GB of main

memory and a virtual address space equal to

64 GB. Each page is 8 KB long.

How many page frames are there in memory?

4 * 2^30 / (8 * 2^10) = 4 * 2^17 = 2^15 page

frames

Copyright © 2013, D.A. Menasce All rights reserved. 70

Virtual Memory and Virtual Machines

Virtual Memory Example

A computer system has 4 GB of main

memory and a virtual address space equal to

64 GB. Each page is 8 KB long.

How many bits are needed for the VPN field

of an address?

2^23 pages => 23 bits

Copyright © 2013, D.A. Menasce. All rights reserved. 71

Virtual Memory and Virtual Machines

Virtual Memory Example

A computer system has 4 GB of main

memory and a virtual address space equal to

64 GB. Each page is 8 KB long.

How many bits are needed for the page frame

bit of an address?

2^15 page frames => 15 bits

Copyright © 2013, D.A. Menasce. All rights reserved. 72

Virtual Memory and Virtual Machines

Virtual Memory Example

A computer system has 4 GB of main

memory and a virtual address space equal to

64 GB. Each page is 8 KB long.

How many bits are needed for the page offset

field?

If a word is 8 bytes long, then each page has

2^3 * 2^10 / 2^3 = 2^10 words

=> 10 bits are needed for the offset

Copyright © 2013, D.A. Menasce. All rights reserved. 73

Virtual Memory and Virtual Machines

Virtual Memory

Protection via virtual memory

Keeps processes in their own memory space

Role of architecture:

Provide user mode and supervisor mode

Protect certain aspects of CPU state

Provide mechanisms for switching between user

mode and supervisor mode

Provide mechanisms to limit memory accesses

Provide TLB to translate addresses

Copyright © 2012, Elsevier Inc. All rights reserved. 74

Virtual Memory and Virtual Machines

Virtual Machines

Supports isolation and security

Sharing a computer among many unrelated users

Enabled by raw speed of processors, making the

overhead more acceptable

Allows different ISAs and operating systems to be

presented to user programs

“System Virtual Machines”

SVM software is called “virtual machine monitor” or

“hypervisor”

Individual virtual machines run under the monitor are called

“guest VMs”

Copyright © 2012, Elsevier Inc. All rights reserved. 75

Virtual Memory and Virtual Machines

Impact of VMs on Virtual Memory

Each guest OS maintains its own set of page

tables

VMM adds a level of memory between physical

and virtual memory called “real memory”

VMM maintains shadow page table that maps

guest virtual addresses to physical addresses

Requires VMM to detect guest’s changes to its own page

table

Occurs naturally if accessing the page table pointer is a

privileged operation

Copyright © 2012, Elsevier Inc. All rights reserved. 76

Вам также может понравиться

- CMOS IC Layout: Concepts, Methodologies, and ToolsОт EverandCMOS IC Layout: Concepts, Methodologies, and ToolsРейтинг: 4.5 из 5 звезд4.5/5 (3)

- Cache 1 54Документ54 страницыCache 1 54thk jainОценок пока нет

- Server Architectures: Multiprocessors, Clusters, Parallel Systems, Web Servers, Storage SolutionsОт EverandServer Architectures: Multiprocessors, Clusters, Parallel Systems, Web Servers, Storage SolutionsОценок пока нет

- Chapter 09Документ88 страницChapter 09md anik hasanОценок пока нет

- Memory Hierarchy Design: A Quantitative Approach, Fifth EditionДокумент17 страницMemory Hierarchy Design: A Quantitative Approach, Fifth EditionAniruddha ShindeОценок пока нет

- Memory Hierarchy Design: A Quantitative Approach, Fifth EditionДокумент22 страницыMemory Hierarchy Design: A Quantitative Approach, Fifth EditionAbhishek singhОценок пока нет

- Operating Systems Implementation COMP3300 A Modern Computer SystemДокумент6 страницOperating Systems Implementation COMP3300 A Modern Computer SystemShyam VenkataramanОценок пока нет

- Oracle Cloud Block Volume 100Документ23 страницыOracle Cloud Block Volume 100Dharmesh BОценок пока нет

- Memory Hierarchy Design: A Quantitative Approach, Fifth EditionДокумент112 страницMemory Hierarchy Design: A Quantitative Approach, Fifth EditionErz SeОценок пока нет

- h19559 Memory Population Rules For Intel Xeon Scalable Processors On PoweredgeДокумент12 страницh19559 Memory Population Rules For Intel Xeon Scalable Processors On Poweredgefirewall822Оценок пока нет

- Memory StructureДокумент15 страницMemory StructureDlool ALmutawaОценок пока нет

- Double Wide Half Mini 2 Pcie Solid State Drive (SSD)Документ1 страницаDouble Wide Half Mini 2 Pcie Solid State Drive (SSD)Edwin CentenoОценок пока нет

- 3 Step Guide To Overclock Your Core I3, I5, or I7 - Updated! - OverclockersДокумент18 страниц3 Step Guide To Overclock Your Core I3, I5, or I7 - Updated! - OverclockersMário ApolinárioОценок пока нет

- HP S700 Pro 2.5 SpecificationsДокумент2 страницыHP S700 Pro 2.5 Specificationsvisorxst1Оценок пока нет

- Exos 7E8: Trusted. Efficient. VersatileДокумент8 страницExos 7E8: Trusted. Efficient. VersatileSebastian AceroОценок пока нет

- CH 07Документ57 страницCH 07chandu mОценок пока нет

- Atto Silicondisk™ Ram-Based Storage Appliance: Extremely Low Latency Storage For 100gbe Enterprise Fabric ArchitecturesДокумент2 страницыAtto Silicondisk™ Ram-Based Storage Appliance: Extremely Low Latency Storage For 100gbe Enterprise Fabric ArchitecturesMONICA LOHОценок пока нет

- 800 UpgrdДокумент12 страниц800 UpgrdJorge VegaОценок пока нет

- Cache Performance Research PaperДокумент96 страницCache Performance Research Papersharad6546Оценок пока нет

- Lesson 05 Course Advanced StorageДокумент36 страницLesson 05 Course Advanced StorageKrish LeeОценок пока нет

- Sesion 6 - Memorias Ram Y Optane: Inst. Rafael G. Ticona CarbajalДокумент16 страницSesion 6 - Memorias Ram Y Optane: Inst. Rafael G. Ticona CarbajalAngela Correa C.Оценок пока нет

- Digital Design and Computer Architecture, 2: EditionДокумент87 страницDigital Design and Computer Architecture, 2: EditionСергей КапустаОценок пока нет

- Cache Memory Virtual MemoryДокумент40 страницCache Memory Virtual MemoryNeha RaoОценок пока нет

- Ready To Build The Next Big Thing.: Precision 3650 TowerДокумент8 страницReady To Build The Next Big Thing.: Precision 3650 TowerKlentiB.ÇukariОценок пока нет

- Oceanstor Dorado 5000V6: Hands-On / Transferência de ConhecimentoДокумент617 страницOceanstor Dorado 5000V6: Hands-On / Transferência de ConhecimentoLuis Fernando Lima DuarteОценок пока нет

- Kit Instructions: Memory UpgradeДокумент15 страницKit Instructions: Memory Upgradejaime acevedoОценок пока нет

- Design ExamplesДокумент21 страницаDesign Examplesdata24365Оценок пока нет

- Topic MemoryManagementДокумент84 страницыTopic MemoryManagementSanthosh SgraoОценок пока нет

- MySQL Perfornance Tuning Overview JPДокумент42 страницыMySQL Perfornance Tuning Overview JPquaesonОценок пока нет

- Detailed Specifications - Thinkcentre A58 (Type 7515, 7523, 7569, 7611, 7706, 7719, 7749, 7774)Документ3 страницыDetailed Specifications - Thinkcentre A58 (Type 7515, 7523, 7569, 7611, 7706, 7719, 7749, 7774)Pavel VeselyОценок пока нет

- CSEN 402 - Ch5 Exploiting Memory Hierarchy - 4749Документ28 страницCSEN 402 - Ch5 Exploiting Memory Hierarchy - 4749Peter SarwatОценок пока нет

- Tips For Performance Schlumberger Petrel 2010-2011: Processor MemoryДокумент4 страницыTips For Performance Schlumberger Petrel 2010-2011: Processor MemoryMohanad HussienОценок пока нет

- Azure Iaas Deep DiveДокумент36 страницAzure Iaas Deep DivespringleeОценок пока нет

- Petrel Tips2Документ4 страницыPetrel Tips2blacklotus21Оценок пока нет

- High Availability: Craig RyanДокумент32 страницыHigh Availability: Craig RyanVishwas RautОценок пока нет

- Computer Architecture: Multiprocessors Shared Memory Architectures Prof. Jerry Breecher CSCI 240 Fall 2003Документ24 страницыComputer Architecture: Multiprocessors Shared Memory Architectures Prof. Jerry Breecher CSCI 240 Fall 2003nighat_princesОценок пока нет

- Mysql Replication: Pros and ConsДокумент51 страницаMysql Replication: Pros and Consdyake04Оценок пока нет

- Core I5 ReportДокумент13 страницCore I5 ReportAmelia AndreaОценок пока нет

- Les 07Документ22 страницыLes 07sherif hassan younisОценок пока нет

- Tech Pack Downhole Memory CameraДокумент7 страницTech Pack Downhole Memory Cameraluis castroОценок пока нет

- Flash MemoryДокумент12 страницFlash MemoryPriyadarshini SahooОценок пока нет

- Module 4: Intelligent Storage System 1Документ31 страницаModule 4: Intelligent Storage System 1Dr.S.P.Balakannan IT Dept, KLUОценок пока нет

- Lecture 3 Architectureofthe8051microcontrollerДокумент32 страницыLecture 3 Architectureofthe8051microcontrollerrosestrikesОценок пока нет

- Index: Power Quotient International Co., LTDДокумент73 страницыIndex: Power Quotient International Co., LTDSsr ShaОценок пока нет

- ISM Chapter 2Документ24 страницыISM Chapter 2Sarvesh ShindeОценок пока нет

- Mpi Unit 2Документ18 страницMpi Unit 2godhanipriyank8Оценок пока нет

- UntitledДокумент63 страницыUntitledshreyas nОценок пока нет

- WHKETaz Wa FPFT XBXДокумент281 страницаWHKETaz Wa FPFT XBXVenkat MurariОценок пока нет

- Computer Architecture: Memory Hierarchy DesignДокумент60 страницComputer Architecture: Memory Hierarchy Designapi-26594847Оценок пока нет

- IEC-IM03 Series: Key FeaturesДокумент1 страницаIEC-IM03 Series: Key FeaturesNestor Junior LarezОценок пока нет

- How To Fix Memory Leaks in Java - JavalobbyДокумент16 страницHow To Fix Memory Leaks in Java - JavalobbyPriyank PaliwalОценок пока нет

- FB12 BowmanДокумент22 страницыFB12 BowmanAmit PuriОценок пока нет

- ESGB5251 Unity SA Flash SoltnsДокумент16 страницESGB5251 Unity SA Flash SoltnsImtiazuddin MohammedОценок пока нет

- Bangladesh University of Professionals: Eng 1005 Computer FundamentalsДокумент33 страницыBangladesh University of Professionals: Eng 1005 Computer FundamentalsFahim AhmedОценок пока нет

- Introduction To Vlsi TechnologyДокумент70 страницIntroduction To Vlsi TechnologylinushereОценок пока нет

- Dell Laptop Led CodesДокумент2 страницыDell Laptop Led Codesmvolk03043100% (1)

- Chapter 4: Memory Management: Part 1: Mechanisms For Managing MemoryДокумент35 страницChapter 4: Memory Management: Part 1: Mechanisms For Managing MemoryArsalan ShaikhОценок пока нет

- Multi-Channel Memory Architecture: Jump To Navigation Jump To SearchДокумент3 страницыMulti-Channel Memory Architecture: Jump To Navigation Jump To SearchDexterОценок пока нет

- SUSHWANTH Dynamic Cache Management TechniqueДокумент33 страницыSUSHWANTH Dynamic Cache Management TechniqueSunil ReddyОценок пока нет

- Chöông 1 Moâ Hình Heä Thoáng Duøng VI Xöû Lyù: ©2009, CE DepartmentДокумент28 страницChöông 1 Moâ Hình Heä Thoáng Duøng VI Xöû Lyù: ©2009, CE DepartmentTuyet NhiОценок пока нет

- Wi-Fi Networks 2023-06-28-14-44-39Документ3 страницыWi-Fi Networks 2023-06-28-14-44-39Michal PuskasОценок пока нет

- Low Power DesignДокумент14 страницLow Power DesignYasser NaguibОценок пока нет

- SK Hynix - h5tq2g83cfr-h9cДокумент33 страницыSK Hynix - h5tq2g83cfr-h9cNegckОценок пока нет

- Curriculam Vitae: Job ObjectivesДокумент6 страницCurriculam Vitae: Job ObjectivesSridhar SriОценок пока нет

- Backup in MAXDBДокумент3 страницыBackup in MAXDBHari KiranОценок пока нет

- Google Pulsus Specification SheetДокумент2 страницыGoogle Pulsus Specification Sheetapi-558619907Оценок пока нет

- MS-DOS and PC-DOS - A Practical GuideДокумент193 страницыMS-DOS and PC-DOS - A Practical GuideJoseph KachereОценок пока нет

- OXUF934DSB 514902 DatasheetДокумент3 страницыOXUF934DSB 514902 DatasheetkarkeraОценок пока нет

- TCG EFI Platform Specification: For TPM Family 1.1 or 1.2Документ43 страницыTCG EFI Platform Specification: For TPM Family 1.1 or 1.2Bob DengОценок пока нет

- Acn Chap 1 To4 (Tech) Book (Searchable)Документ79 страницAcn Chap 1 To4 (Tech) Book (Searchable)Anant SinghОценок пока нет

- SPARC M8 Server Installation Specialist Online AssessmentДокумент61 страницаSPARC M8 Server Installation Specialist Online AssessmentMỹ Linh NguyễnОценок пока нет

- 3rd Yr Intern - Ivan Kakeeto FinalДокумент63 страницы3rd Yr Intern - Ivan Kakeeto FinalDave SeynabouОценок пока нет

- Cronologia de Lenguajes ProceduralesДокумент3 страницыCronologia de Lenguajes ProceduralesFershixxОценок пока нет

- ImageresДокумент4 страницыImageresNikoLa BluZer NeŠićОценок пока нет

- 8051 Instruction SetДокумент98 страниц8051 Instruction SetSmt.N.Vasantha Gowri Assistant ProfessorОценок пока нет

- 808D Diagnostics Manual 0615 en en-USДокумент566 страниц808D Diagnostics Manual 0615 en en-USSatish KumarОценок пока нет

- 1-5G Basic Acronym and AbbreviationДокумент3 страницы1-5G Basic Acronym and AbbreviationIyesusgetanewОценок пока нет

- SystemVerilogForVerification WoquizДокумент218 страницSystemVerilogForVerification WoquizKrishna Kishore100% (5)

- Install Android StudioДокумент3 страницыInstall Android StudioDesno Demitrius BokimnasiОценок пока нет

- 14 Pin Compaq Power Supply Connector For Power Supply Model PDPДокумент2 страницы14 Pin Compaq Power Supply Connector For Power Supply Model PDPprestalaОценок пока нет

- Day One:: Deploying BGP Rib Sharding and Update ThreadingДокумент41 страницаDay One:: Deploying BGP Rib Sharding and Update Threadingmahmoud mohamedОценок пока нет

- Beep CodesДокумент5 страницBeep CodesAkibo Tunde Williamson DaviesОценок пока нет

- EN - SG - Power - Supplies - LoRes (3) - 10 PDFДокумент1 страницаEN - SG - Power - Supplies - LoRes (3) - 10 PDFSamAzigh Up SbbОценок пока нет

- The Model-View-Viewmodel (MVVM) Design Pattern For WPFДокумент13 страницThe Model-View-Viewmodel (MVVM) Design Pattern For WPFrickyarya2000Оценок пока нет

- Control - Unit DesignДокумент26 страницControl - Unit DesignRonnel AgultoОценок пока нет

- Setting Up A Scheduled Task in Windows Server 2008Документ8 страницSetting Up A Scheduled Task in Windows Server 2008Naveen JaishankarОценок пока нет

- HPE Aruba 2540 Quick Setup Guide enДокумент14 страницHPE Aruba 2540 Quick Setup Guide enLuis Yefferson Tica QuispeОценок пока нет

- PSS Pash DSP 00001Документ3 страницыPSS Pash DSP 00001Regis RomaryОценок пока нет

- Software EngineeringДокумент5 страницSoftware EngineeringtesttttОценок пока нет

- Spring Cloud SleuthДокумент31 страницаSpring Cloud SleuthtejpremiumОценок пока нет