The Limits of Technology

Grady Booch January 15, 2003

from The Rational Edge: Booch briefly examines factors -- from fundamental to human -- that

limit what technology can achieve.

From fundamental to human, these are the factors that define the limits of technology:

• The laws of physics

• The laws of software

• The challenge of algorithms

• The difficulty of distribution

• The problems of design

• The problems of functionality

• The importance of organization

• The impact of economics

• The influence of politics

The Laws of Physics

Software is a flexible medium, but not so much so that we can ignore the laws of physics. In

particular, the speed of light is a given, and that fact has pragmatic implications for distributed

systems: Star Trek notwithstanding, you can't send a message faster than the speed of light.

Furthermore, there are relativistic effects such that, across space, there is no such thing as

absolute time. As a result, perfect time synchronization in a distributed system is impossible.

Quantum effects also apply, meaning that there are theoretical and practical limits to information

density: you cannot store more bits of data than there are elementary particles in the

universe. Finally, there exist thermodynamic effects. Although software itself is weightless, the

containers that deploy software do have mass and therefore any computation conducted against

the data in those containers will dissipate heat, a very pragmatic implication for any deeply

embedded systems such as one might find in a spacecraft.
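
To put a number on the speed-of-light limit, the sketch below computes the smallest possible one-way and round-trip delay over a given distance. The function names and the 10,000 km link are illustrative assumptions, not figures from the article; real networks are slower still, since signals in optical fiber travel at roughly two-thirds of the speed of light and routes are never straight lines.

```python
# Lower bound on message latency imposed by the speed of light.
# Function names and the sample distance are illustrative assumptions.

SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def min_one_way_delay_ms(distance_km: float) -> float:
    """Smallest possible one-way delay, in milliseconds, over distance_km."""
    seconds = distance_km * 1_000 / SPEED_OF_LIGHT_M_PER_S
    return seconds * 1_000


def min_round_trip_ms(distance_km: float) -> float:
    """Smallest possible request/response delay, in milliseconds."""
    return 2 * min_one_way_delay_ms(distance_km)


if __name__ == "__main__":
    distance_km = 10_000  # a hypothetical intercontinental link
    print(f"one-way    >= {min_one_way_delay_ms(distance_km):.1f} ms")
    print(f"round trip >= {min_round_trip_ms(distance_km):.1f} ms")
```

No protocol, however clever, can bring the round trip below this bound; it can only hide the delay through caching, batching, or speculation.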

The Laws of Software

Just as inviolate as the laws of physics, there are also some fundamental laws of software:

relative to a given computation, sometimes we can't do it, sometimes we can't afford to do it, and

sometimes we just don't know how to do it (these categories and their examples come from David

Harel's delightful book, Computers, Ltd.).

© Copyright IBM Corporation 2003 Trademarks

The Limits of Technology Page 1 of 8

developerWorks® ibm.com/developerWorks/

Sometimes we simply cannot solve a problem. There exist a number of non-computable problems,

such as the halting problem. Such problems are undecidable, because no algorithm can be

followed to form a solution.
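
The classic argument for why the halting problem is undecidable can be sketched in a few lines. Given any function that claims to predict whether a program halts, we can build a program that does the opposite of the prediction. The names make_contrarian and pessimist are hypothetical, and this is only an informal illustration of the diagonal argument, not a proof.

```python
# Informal sketch of the diagonal argument behind the halting problem.
# make_contrarian and pessimist are hypothetical names for illustration.

def make_contrarian(claimed_halts):
    """Build a program that does the opposite of what claimed_halts predicts."""
    def contrarian():
        if claimed_halts(contrarian):
            while True:       # predicted to halt, so loop forever
                pass
        return "halted"       # predicted to loop, so halt at once
    return contrarian


if __name__ == "__main__":
    # A candidate decider that claims no program ever halts.
    pessimist = lambda program: False
    program = make_contrarian(pessimist)
    print(program())  # the program halts, so the decider was wrong about it
```

A decider that answered True instead would be wrong in the other direction: the contrarian program would loop forever, which is why that case is not run here. Whatever the candidate decider answers about the contrarian program, it is wrong.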

Sometimes we can't afford to solve a problem. Many problems, such as sorting a list of values,

lend themselves to a variety of algorithmic approaches, each with varying time and space

complexity. Some naive sorting algorithms run in quadratic time (meaning that their execution

time is proportional to the square of the number of items to be sorted), whereas other, more

sophisticated algorithms run in N log N time. Such algorithms may be tedious, but they

are nonetheless still tractable. However, there exist other classes of algorithms whose time

complexity is exponential. For example, the classic Towers of Hanoi problem as well as various

graph traversal problems cannot be solved in better than exponential time. Such problems are

considered intractable: they have an algorithmic solution, but their time or space complexity is

such that, even with a relatively small value for N, running that algorithm would take centuries (or

more) to complete.
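
The Towers of Hanoi case is easy to check directly. The sketch below, in which hanoi_moves is an illustrative helper rather than anything from the article, generates the full move list and shows that N disks need 2**N - 1 moves, so each extra disk doubles the work.

```python
# Count the moves needed to solve Towers of Hanoi with n disks.
# hanoi_moves is an illustrative helper, not from the original article.

def hanoi_moves(n: int, src: str = "A", dst: str = "C",
                via: str = "B") -> list[tuple[str, str]]:
    """Return the list of (from_peg, to_peg) moves that solves n disks."""
    if n == 0:
        return []
    return (
        hanoi_moves(n - 1, src, via, dst)    # park n-1 disks on the spare peg
        + [(src, dst)]                       # move the largest disk
        + hanoi_moves(n - 1, via, dst, src)  # bring the n-1 disks back on top
    )


if __name__ == "__main__":
    for n in (1, 2, 3, 10):
        print(n, len(hanoi_moves(n)))  # the count is always 2**n - 1

    # At one move per second, 64 disks would need 2**64 - 1 seconds.
    seconds_per_year = 60 * 60 * 24 * 365
    print(f"64 disks: about {(2**64 - 1) / seconds_per_year:.2e} years")
```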

Sometimes we just don't know how to do it. There exists a large class of problems -- finding the

shortest tour that visits every node in a graph, finding the optimal way to pack a bin with

various-shaped objects, or matching class schedules to students and instructors, for example --

that are solvable in principle but NP-complete: we just don't know whether these problems can be

solved in better than exponential time. At present, the best we can do is seek good, near-optimal

solutions, often by applying some simplifying assumptions or trying more exotic approaches such

as Monte Carlo methods, intense parallelism, or genetic programming.
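
As an example of a simplifying heuristic, the sketch below applies first-fit decreasing to a one-dimensional version of bin packing. This is a deliberate simplification of the various-shaped objects mentioned above, and the function name and sample sizes are assumptions. The heuristic runs quickly and usually gives a good packing, but it does not guarantee the fewest possible bins.

```python
# First-fit decreasing: a fast heuristic for one-dimensional bin packing.
# It gives a good answer quickly but does not guarantee the optimum.
# The function name and the sample data are illustrative assumptions.

def first_fit_decreasing(sizes: list[int], capacity: int) -> list[list[int]]:
    """Pack items into bins of the given capacity; return the bins."""
    bins: list[list[int]] = []
    for size in sorted(sizes, reverse=True):  # place the largest items first
        if size > capacity:
            raise ValueError(f"item of size {size} exceeds bin capacity")
        for contents in bins:
            if sum(contents) + size <= capacity:
                contents.append(size)         # first bin with enough room
                break
        else:
            bins.append([size])               # no bin had room: open a new one
    return bins


if __name__ == "__main__":
    packed = first_fit_decreasing([4, 8, 1, 4, 2, 1], capacity=10)
    print(len(packed), "bins:", packed)
```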

These laws of software are demanding enough, but the problem is even worse as we consider the

implications of non-continuous systems. For example, if we toss a ball into the air, we can reliably

predict its path because we know that under normal conditions, certain laws of physics apply. We

would be very surprised if just because we threw the ball a little harder, halfway through its flight it

suddenly stopped and shot straight up into the sky. In a not-quite-debugged software simulation of

this ball's motion, exactly that kind of behavior can easily occur.

Within a large application, there may be hundreds or even thousands of variables as well as

multiple threads of control. The entire collection of these variables, their current values, and the

current address and calling stack of each process and thread within the system constitute the

present state of the system. Because we execute our software on digital computers, we have a

system with discrete states. By contrast, analog systems such as the motion of the tossed ball

are continuous systems. Parnas suggests that "when we say that a system is described by a

continuous function, we are saying that it can contain no hidden surprises. Small changes in

inputs will always cause correspondingly small changes in outputs." On the other hand, discrete

systems by their very nature have a finite number of possible states; in large systems, there is a

combinatorial explosion that makes this number very large. We try to design our systems with a

separation of concerns, so that the behavior in one part of a system has minimal impact upon the

behavior in another. However, the fact remains that the phase transitions among discrete states

cannot be modeled by continuous functions. Each event external to a software system has the

potential of placing that system in a new state, and furthermore, the mapping from state to state

is not always deterministic. In the worst circumstances, an external event may corrupt the state

of a system, because its designers failed to take into account certain interactions among events.

For example, imagine a commercial airplane whose flight surfaces and cabin environment are

managed by a single computer. We would be very unhappy if, as a result of a passenger in seat

38J turning on an overhead light, the plane immediately executed a sharp dive. In continuous

systems this kind of behavior would be unlikely, but in discrete systems all external events can

affect any part of the system's internal state. Certainly, this is the primary motivation for vigorous

testing of our systems, but for all except the most trivial systems, exhaustive testing is impossible.

Since we have neither the formal mathematical tools nor the intellectual capacity to model the

complete behavior of large discrete systems, we must be content with acceptable levels of

confidence regarding their correctness.
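
A rough calculation shows why exhaustive testing is out of reach. The sketch assumes, purely for illustration, a system whose entire state is a set of independent on/off flags and a test rig that checks one billion states per second; both the model and the rate are hypothetical.

```python
# Back-of-the-envelope estimate of the cost of exhaustive state testing.
# The flag-based state model and the test rate are illustrative assumptions.

SECONDS_PER_YEAR = 60 * 60 * 24 * 365


def state_count(boolean_flags: int) -> int:
    """Number of distinct states of a system made of independent on/off flags."""
    return 2 ** boolean_flags


def years_to_test(boolean_flags: int,
                  tests_per_second: int = 1_000_000_000) -> float:
    """Years needed to visit every state once at the given test rate."""
    return state_count(boolean_flags) / tests_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    for flags in (10, 50, 100):
        print(f"{flags:>3} flags: {state_count(flags):.3e} states, "
              f"{years_to_test(flags):.3e} years")
```

Even this toy model ignores threads, call stacks, and input ordering, all of which multiply the number of states further.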

The Challenge of Algorithms

As the previous section indicated, there are certain classes of problems that are solvable yet

exponentially complex. There also exist other tractable, albeit larger, problems for which we

can glimpse a solution but for which we have not yet settled on a reasonable algorithm: data

compression and photorealistic rendering are two such problems.

In the case of compression algorithms, we can state the theoretical limits of compressing an

image, a waveform, video, or some raw stream of bits, but even so there are a myriad of choices

if we allow some degree of information loss. The more we know about the use and form of the

information we seek to compress, the closer we can get to this theoretical limit: it's largely a bit

of hard work, some hairy mathematics, and some trial and error to find a suitable compression

algorithm for a given domain.
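
For lossless compression, one way to make the theoretical limit concrete is Shannon entropy. Under the simplifying assumption that each byte is drawn independently from a fixed distribution (a model chosen here for illustration, not one the article names), the entropy gives the minimum average number of bits needed per byte. The function name is hypothetical.

```python
# Shannon entropy of a byte string: a lower bound on average bits per byte
# for lossless coding, assuming bytes are independent and identically
# distributed. The function name is an illustrative assumption.

import math
from collections import Counter


def entropy_bits_per_symbol(data: bytes) -> float:
    """Return the empirical Shannon entropy of data, in bits per byte."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


if __name__ == "__main__":
    for sample in (b"aaaaaaaa", b"abababab", b"abcdabcd"):
        print(sample, entropy_bits_per_symbol(sample), "bits per byte")
```

The sample b"abababab" scores one bit per byte under this model even though its strict alternation makes it far more compressible. That is the point made above: the more we know about the form of the data, the closer a domain-specific algorithm can get to the true limit.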

In the case of photorealistic rendering, the field is also characterized by hard work, hairy

mathematics, and some trial and error. A few decades ago, it was quite an accomplishment just to

model pitchers and cups -- and even then, the results looked artificial and plastic. Today, the field

has gotten much better -- we can model scenes down to the level of individual hairs on a beast

and we can do a fairly good job of biologically real movement. However, rendering human faces,

ice, and water is not quite to the point where a careful observer can be fooled. There will likely

come a time when we can do all these things, and when we do, the solution will look simple, but in

the meantime, our lack of perfect knowledge adds complexity and compromise to our systems.

The Difficulty of Distribution

When we think of an algorithm, we typically think of a flow of control that weaves its way through

a single pile of code living on exactly one processor. However, when we think of systems, it's rare

to find one that is so isolated that it stands apart from all others. Make no mistake about it: building

a distributed system is materially harder than building a monolithic one. As Leslie Lamport once

observed, "A distributed system is one in which the failure of a computer you didn't even know

existed can render your computer unusable."

We'd like to believe that building distributed systems is only moderately harder than building a

non-distributed one, but it is decidedly not, because the reality of the real world intrudes. As Peter

Deutsch once noted, there are eight fallacies of distributed computing: we'd like to believe that

these are all true, but they are definitely not:

• The network is reliable

• Latency is zero

• Bandwidth is infinite

• The network is secure

• Topology doesn't change

• There is one administrator

• Transport cost is zero

• The network is homogeneous

The fact that these elements are not true means that we have to add all sorts of protocols and

mechanisms to our systems so that our applications run as if they were true.
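
As one small example of such a mechanism, the sketch below wraps an unreliable call in retries with exponential backoff, so that its caller can proceed as if the network were reliable. The function name, the default values, and the simulated failure are all assumptions for illustration; production code would also need timeouts, jitter, and idempotent operations.

```python
# Retry with exponential backoff: one mechanism that compensates for the
# fallacy "the network is reliable". Names and defaults are assumptions.

import time


def call_with_retries(operation, attempts=4, base_delay=0.1, sleep=time.sleep):
    """Call operation(); on ConnectionError, wait and retry, doubling the wait."""
    for attempt in range(attempts):
        try:
            return operation()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of retries: surface the failure to the caller
            sleep(base_delay * 2 ** attempt)


if __name__ == "__main__":
    # Simulate a link that drops the first two requests.
    outcomes = iter([True, True, False])

    def flaky_request():
        if next(outcomes):
            raise ConnectionError("simulated dropped packet")
        return "ok"

    print(call_with_retries(flaky_request))
```

The sleep parameter is injectable so that the backoff schedule can be observed or skipped in tests without actually waiting.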

The Problems of Design

Simplicity is an elusive thing. Consider the design of just about any relevant Web-centric system:

it probably consists of tens of thousands of lines of custom code on top of hundreds of thousands

of lines of middleware code on top of several million lines of operating system code. From the

perspective of its end users, simplicity manifests itself in terms of a user experience made up of

a small set of concepts that can be manipulated predictably. From the perspective of those who

deploy that system, simplicity manifests itself in terms of an installation process that addresses

the most common path directly while at the same time makes alternative paths accessible and

intuitive. From the perspective of the developers who build that system, simplicity manifests itself

in terms of an architecture that is shaped by a manageable set of patterns that act upon a self-

consistent, regular, and logical model of the domain. From the perspective of the developers who

maintain that system, simplicity manifests itself in the principle of least astonishment, namely, the

ability to touch one part of the system without causing other distant parts to fall off.

Simplicity is most often expressed in terms of Occam's Razor. William of Occam, a 14th-century

logician and Franciscan friar, stated, "Entities should not be multiplied unnecessarily." Isaac

Newton projected Occam's work into physics by noting, "We are to admit no more causes

of natural things than such are both true and sufficient to explain their appearances." Put in

contemporary terms, physicists often observe, "When you have two competing theories which

make exactly the same predictions, the one that is simpler is the better." Finally, Albert Einstein

declared that "Everything should be made as simple as possible, but not simpler."

Often, you'll hear programmers talk about "elegance" and "beauty," both of which are projections

of simplicity in design. Don Knuth's work on literate programming -- wherein code reads like a

well-written novel -- attempts to bring beauty to code. Richard Gabriel's work on the "quality with

no name," building upon architect Christopher Alexander's work, also seeks to bring beauty and

elegance to systems. In fact, the very essence of the patterns movement encourages simplicity

in the presence of overwhelming complexity by the application of common solutions to common

problems.

The entire history of software engineering can perhaps be told by the languages, methods,

and tools that help us raise the level of abstraction within our systems, for abstraction is the

primary means whereby we can engineer the illusion of simplicity. At the level of our programming

languages, we seek idioms that codify beautiful writing. At the level of our designs, we seek good

classes and in turn good design patterns that yield a good separation of concerns and a balanced

distribution of responsibilities. At the level of our systems, we seek architectural mechanisms that

regulate societies of these classes and patterns.

The difficulty of design, therefore, is choosing which design and architectural patterns we should

use to best balance the forces that make software development complex. To put it in terms of the

laws of software, this general problem of design is probably NP-complete: there likely exists some

absolutely optimal design for any given problem in context, but pragmatically, we have to settle

for good enough. As we as an industry gain more experience with specific genres of problems,

then we collectively begin to understand a set of design and architectural patterns that are good

enough and that have proven themselves in practice. Thus, designing a new version of an old kind

of system is easier, because we have some idea of how to break it into meaningful parts. However,

designing a new version of a new kind of system with new kinds of forces is fundamentally hard,

because we really don't know the best way to break it into meaningful parts. The best we can do is

create a design based upon past experiences, plagiarize from parts that worked in similar kinds of

situations, and iterate until we get it good enough.

The Problems of Functionality

Consider the requirements for the avionics of a multi-engine aircraft, a cellular phone switching

system, or an autonomous robot. The raw functionality of such systems is difficult enough to

comprehend, but now add all of the (often implicit) nonfunctional requirements such as usability,

survivability, and adaptability. These unrestrained, potentially contradictory, external requirements

are what form the arbitrary complexity about which Brooks writes. This external complexity

usually springs from the "impedance mismatch" that exists between the users of a system and its

developers: users generally find it very hard to give precise expression to their needs in a form

that developers can understand. In extreme cases, users may have only the vaguest ideas of what

they want in a software system. Moreover, developers may not even know exactly the expectations

of its user base, especially in the case of emerging, rapidly changing markets. This is not so

much the fault of either the users or the developers of a system; rather, it occurs because each

group generally lacks expertise in the domain of the other. Users and developers have different

perspectives on the nature of the problem and make different assumptions regarding the nature of

the solution. Actually, even if users had perfect knowledge of their needs, it is intrinsically difficult

to communicate those requirements precisely and efficiently. At one extreme, the development

team may capture requirements in the form of large volumes of text, occasionally accompanied by

a few drawings. Such documents are difficult to comprehend, are open to varying interpretations,

and too often contain elements that are designs rather than essential requirements. At the other

extreme, there will be no stated requirements, only general visions. This may work for exploratory

development, but it is absolutely impossible to manage a development process against invisible

requirements.

A further complication is the fact that, for industrial-strength software, there are typically a large

number of stakeholders who shape the development process, most of whom are completely

unimpressed by the underlying technology for technology's sake. These stakeholders will bring to

the table a multitude of hidden and not-so-hidden economic, strategic, and political agendas that

often warp the development process through the presence of competing concerns.

For software that matters, the requirements of a system will typically change during its

development, not just because of technology churn or resilience, but also because

the very existence of a software development project alters the rules of the problem. Seeing early

products, such as design documents and prototypes, and then using a system once it is installed

and operational, are forcing functions that lead users to better understand and articulate their real

needs. At the same time, this process helps developers master the problem domain, enabling

them to ask better questions that illuminate the dark corners of a system's desired behavior.

Because a large software system is a capital investment, we cannot afford to scrap an existing

system every time its requirements change. Planned or not, large systems tend to evolve

continuously over time, a condition that is often incorrectly labeled software maintenance. To

be more precise, it is maintenance when we correct errors; it is evolution when we respond to

changing requirements; it is preservation when we continue to use extraordinary means to keep an

ancient and decaying piece of software in operation. Unfortunately, experience suggests that an

inordinate percentage of software development resources are spent on software preservation.

The Importance of Organization

Size is no great virtue in a software system. In design, we strive to write less code by inventing

clever and more powerful mechanisms that yield the illusion of simplicity, as well as by reusing

patterns and frameworks of existing designs and code. Indeed, at the limit, the best way to reduce

the risk of a software development project is to simply not write any code at all. However, the

sheer volume of a system's requirements is often inescapable, forcing us either to write a large

amount of new software or to glue together existing software in novel ways. Just three decades

ago, assembly language programs of only a few thousand lines of code stressed the limits of our

software engineering abilities. Today, it is not unusual to find delivered systems whose size is

measured in hundreds of thousands, or even millions of lines of code (and all of that in a high-

order programming language, as well). No one person can ever understand such a system

completely. Even if we decompose our implementation in meaningful ways, we still end up with

hundreds and sometimes thousands of separate components. This amount of work demands that

we use a team of developers, and ideally, as small a team as possible. Furthermore, for software

systems that drive an entire enterprise, one typically must manage teams of teams, which may

be geographically distributed from one another. More developers mean more complex

communication and hence more difficult coordination, particularly if the team is geographically

dispersed. With a team of developers, the key management challenge is always to maintain a

unity and integrity of design. In the small, agile approaches to development leverage the social

dynamics of the small, hyperproductive team. In the medium and large, however, you need more

control because the stakes are higher and the paths of communication among stakeholders are far

more complex.

In contemporary software development organizations, the problem is made worse by the reality

that the complete development team typically requires a large mix of skills. For example, in most

Web-centric projects, not only do you have your typical code warriors, but you often have a set

of them who speak different languages (e.g. HTML, XML, Visual Basic, Java, C++, C#, Perl,

Python, VBScript, JavaScript, Active Server Pages, Java Server Pages, SQL, and so on). On top

of that, you'll also have graphic designers who know a lot about HTML and technologies such as

Flash, but very little about traditional software engineering. Database administrators and security

managers will have their own empires with their own languages, as will the network engineers

who control the underlying hardware topology upon which everything runs. As such, the typical

development team is often quite fractured, making it challenging to form a real sense of team.

Not only are there issues of jelling the team, there are also points of friction from the perspective

of the individual developer, friction that eats away at the individual's productivity in subtle ways.

Specifically, there exist the following points of friction:

• The cost of startup and ongoing working space organization

• The schedule and mental impact of work product collaboration, including the energy spent in

negotiating with different stakeholders

• The friction of maintaining effective group communication, including the sharing of knowledge,

project status, and project memory

• Time starvation

• Time lost from software and hardware that doesn't work

We call these points of friction because energy is lost in their execution which otherwise could

be directed to more creative activities that contribute directly to the completion of the project's

mission.

Thus, all meaningful development is formed by the resonance of activities that beat at different

rhythms: the activities of the individual developer, the social dynamics among small sets of

developers, and the dynamics among teams of teams. Much like the problem of design, finding the

optimal organization at each of these three levels and deciding upon the right set of artifacts for

each to produce using the best workflows is challenging, and is deeply impacted by the specific

forces upon your project, its domain, and the current development culture. To some degree, every

team is self-organizing -- but always within the structure imposed and encouraged by its context,

and that means the organization as a whole and its management. Choosing that organizational

structure is not a technical problem but a human one, which by its very nature is

complex. Because it is a human problem, all the usual human dramas naturally play out, often

amplified by the stresses of development. Ultimately, however, what drives the structure of an

organization is its surrounding economics.

The Impact of Economics

Developing software costs money. Barry Boehm, in his classic work on software engineering

economics, based upon 20 years of empirical evidence, concludes that the performance of a

project can be predicted according to the following equation:

Performance = (Complexity**Process) * Team * Tools

where

• Performance means effort or time

• Complexity means volume of human-generated code

• Process means maturity of process and notation

• Team means skill set, experience, and motivation

• Tools means software tools automation

From this equation, we can observe that the complexity of a system can either be amplified by a

bad process or dampened by a good process and that the nature of a team and its tools are equal

contributors to the performance of a project.
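
The shape of this equation can be explored with a few lines of code. The values below are hypothetical and uncalibrated, chosen only to show the direction of each effect: Process acts as an exponent on Complexity, while Team and Tools are plain multipliers. Since Performance here means effort or time, a smaller result is better.

```python
# Performance = (Complexity ** Process) * Team * Tools, as given in the text.
# All numeric values below are hypothetical and uncalibrated.

def performance(complexity: float, process: float,
                team: float, tools: float) -> float:
    """Effort or time predicted by the equation; smaller is better."""
    return (complexity ** process) * team * tools


if __name__ == "__main__":
    size = 100.0  # hypothetical volume of human-generated code

    # A process exponent above 1 amplifies complexity; below 1 dampens it
    # (for complexity values greater than 1).
    print("bad process :", performance(size, 1.2, 1.0, 1.0))
    print("neutral     :", performance(size, 1.0, 1.0, 1.0))
    print("good process:", performance(size, 0.9, 1.0, 1.0))

    # Team and tools enter symmetrically: halving either halves the result.
    print("better team :", performance(size, 1.0, 0.5, 1.0))
    print("better tools:", performance(size, 1.0, 1.0, 0.5))
```

Because Process sits in the exponent, its effect grows with the size of the system, whereas improvements in Team or Tools scale the result by the same factor at any size.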

During the dot com mania around the turn of the millennium, software economics was pretty much

ignored -- and thus contributed to the dot bomb collapse. Developing software costs money, and

thus for any sustainable business activity, any investment in software development must provide a

good return on that investment. Thus, we might dream up suspicious uses of software that have no

fundamental economic value, but to do so will ultimately end in economic collapse. Alternatively,

we might dream up meaningful uses of software, and to the degree we can develop that software

efficiently and use that software as a strategic weapon in our business, the effort will yield business

success.

The Influence of Politics

Speaking of success, the degree to which success as defined by the software development team

is misaligned with success as defined by the organization is a measure of that organization's

dysfunctionality. Sometimes, an organization will use software as a pawn, putting its developers

on a death march and sacrificing their health for the short-term good of the company. That's an

approach that will quickly burn out your development team. Other times, the organization will be

clueless as to how it can leverage its software resources. That's an approach that will, over time,

undermine the morale and value of the development team.

In the best of worlds -- which unfortunately is a fairly narrow space -- an organization will leverage

its investment in software development so that software is in fact a strategic weapon for the

company. Anything less and the software development team will be limited in the great things it

could have provided.

(The article is excerpted from the forthcoming third edition of Object-Oriented Analysis and Design

with Applications).

© Copyright IBM Corporation 2003

(www.ibm.com/legal/copytrade.shtml)

Trademarks

(www.ibm.com/developerworks/ibm/trademarks/)

The Limits of Technology Page 8 of 8

Вам также может понравиться

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (895)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (266)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (400)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (345)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2259)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (121)

- Starterset Manual 21gbДокумент86 страницStarterset Manual 21gbOvidiu CiobanuОценок пока нет

- AIA CPC XML Documentation PDFДокумент38 страницAIA CPC XML Documentation PDFAnonymous 9I5YIasIpОценок пока нет

- Introduction To QBasicДокумент27 страницIntroduction To QBasicMBHladilek100% (1)

- LanguageДокумент160 страницLanguagesabinaa20Оценок пока нет

- Wirless Lan TechnologyДокумент22 страницыWirless Lan Technologypachava vaibhaviОценок пока нет

- Risks and Benefits of Business Intelligence in The CloudДокумент10 страницRisks and Benefits of Business Intelligence in The CloudElena CanajОценок пока нет

- Mini Project ReportДокумент13 страницMini Project ReportS Rajput0% (1)

- Preprocessor Preprocessor: List of Preprocessor CommandsДокумент8 страницPreprocessor Preprocessor: List of Preprocessor Commandsbalakrishna3283Оценок пока нет

- Carlos Hernandez ResumeДокумент1 страницаCarlos Hernandez Resumecarloshdz9Оценок пока нет

- Transact-SQL User-Defined Functions For MSSQL ServerДокумент479 страницTransact-SQL User-Defined Functions For MSSQL ServerNedimHolle100% (1)

- Trajectory 2Документ5 страницTrajectory 2Sreelal SreeОценок пока нет

- Configuring Tomcat With Apache or IIS For Load BalancingДокумент9 страницConfiguring Tomcat With Apache or IIS For Load BalancingGeorge AnadranistakisОценок пока нет

- Resolving Motion Correspondence For Densely Moving Points: by C.J. Veenman, M.J.T. Reinders, and E. BackerДокумент12 страницResolving Motion Correspondence For Densely Moving Points: by C.J. Veenman, M.J.T. Reinders, and E. BackerHisham KolalyОценок пока нет

- FilesДокумент154 страницыFilesBhanuОценок пока нет

- CS-871-Lecture 1Документ41 страницаCS-871-Lecture 1Yasir NiaziОценок пока нет

- Artificial Intelligence SlidesДокумент291 страницаArtificial Intelligence SlidesSumit ChaudhuriОценок пока нет

- Discrete Fourier Transform (DFT) PairsДокумент23 страницыDiscrete Fourier Transform (DFT) PairsgoutamkrsahooОценок пока нет

- Ace: A Syntax-Driven C Preprocessor: James Gosling July, 1989Документ11 страницAce: A Syntax-Driven C Preprocessor: James Gosling July, 1989MarcelojapaОценок пока нет

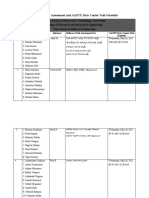

- Software Need Assessment and AASTU Data Center Visit ScheduleДокумент9 страницSoftware Need Assessment and AASTU Data Center Visit ScheduleDagmawiОценок пока нет

- Blackberry Case StudyДокумент5 страницBlackberry Case StudycoolabhishekxyzОценок пока нет

- Data Privacy ActДокумент10 страницData Privacy ActMica ValenzuelaОценок пока нет

- Tawteen EBrochure Casing and Tubing Running ServicesДокумент6 страницTawteen EBrochure Casing and Tubing Running Servicesa.hasan670Оценок пока нет

- Department of Computer Science, Liverpool Hope University (Huangr, Tawfikh, Nagara) @hope - Ac.ukДокумент8 страницDepartment of Computer Science, Liverpool Hope University (Huangr, Tawfikh, Nagara) @hope - Ac.ukUnnikrishnan MuraleedharanОценок пока нет

- 690+ Series AC Drive: Software Product ManualДокумент242 страницы690+ Series AC Drive: Software Product ManualDanilo CarvalhoОценок пока нет

- (This Post Is Written by Gandhi ManaluДокумент6 страниц(This Post Is Written by Gandhi ManaluSurendra SainiОценок пока нет

- Hardware Configuration Changes in RUN ModeДокумент4 страницыHardware Configuration Changes in RUN ModeAdarsh BalakrishnanОценок пока нет

- JDF BookДокумент1 168 страницJDF BookPere GranjaОценок пока нет

- Lecture On Computer Programming 4: JAVA Programming Alex P. Pasion, MS InstructorДокумент12 страницLecture On Computer Programming 4: JAVA Programming Alex P. Pasion, MS InstructorChristian Acab GraciaОценок пока нет

- MobcfappsДокумент48 страницMobcfappsHarshitha ShivalingacharОценок пока нет

- SAP Printer Setup Dot MatrixДокумент8 страницSAP Printer Setup Dot MatrixAti Siti FathiahОценок пока нет