Академический Документы

Профессиональный Документы

Культура Документы

Estimating The Survival Rate of Mutants

Загружено:

mriwa benabdelaliИсходное описание:

Оригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Estimating The Survival Rate of Mutants

Загружено:

mriwa benabdelaliАвторское право:

Доступные форматы

Estimating the Survival Rate of Mutants

Imen Marsit1 , Mohamed Nazih Omri1 and Ali Mili2

1

Faculty of Science of Monastir, Tunisia

2

New Jersey Institute of Technology, Newark NJ

{imeni4@yahoo.fr, mohamednazih.omri@fsm.rnu.tn, mili@njit.edu}

Keywords: Mutation Testing, Mutant Survival Rate, Semantic metrics

Abstract: Mutation testing is often used to assess the quality of a test suite by analyzing its ability to distinguish between

a base program and its mutants. The main threat to the validity/ reliability of this assessment approach is that

many mutants may be syntactically distinct from the base, yet functionally equivalent to it. The problem of

identifying equivalent mutants and excluding them from consideration is the focus of much recent research.

In this paper we argue that it is not necessary to identify individual equivalent mutants and count them; rather

it is sufficient to estimate their number. To do so, we consider the question: what makes a program prone

to produce equivalent mutants? Our answer is: redundancy does. Consequently, we introduce a number of

program metrics that capture various dimensions of redundancy in a program, and show empirically that they

are statistically linked to the rate of equivalent mutants.

1 Equivalent Mutants In this paper, we propose an alternative approach,

which does not seek to identify which mutants are

equivalent to the base, but merely to estimate their

Mutation testing is typically used to assess the ef- number. To do so, we consider the research question

fectiveness of test suites, by analyzing to what extent (RQ3) raised by Yao et al (Yao et al., 2014): What

a test suite T can distinguish between a base program are the causes of mutant equivalence? Our answer:

P and a set of mutants thereof, say P1 , P2 , ... PN Redundancy in the program. Consequently, we de-

(Debroy and Wong, 2010; Debroy and Wong, 2013; fine a number of software metrics that capture various

V. and W.E., 2010; Ma et al., 2005; Zemı́n et al., forms of redundancy, discuss why we believe they are

2015). A recurring source of aggravation in mutation prone to produce equivalent mutants, then run an ex-

testing is the presence of equivalent mutants: some periment that appears to bear out conjecture out. This

mutants may be syntactically distinct from the base is work in progress; we are fairly confident that our

program, yet be functionally indistinguishable from analytical arguments are sound, and we are encour-

it (i.e. compute the same function). Equivalent mu- aged by the preliminary empirical results.

tants distort the analysis of the test suite’s effective-

ness, because when a test suite fails to distinguish a Following common usage, we say that a mutant

mutant Pi from the base program P, we do not know has been killed by a test data set T if and only if exe-

whether this is because Pi is equivalent to P, or be- cution of the mutant on T exhibits a distinct behavior

cause T is not sufficiently effective at detecting faults. from the original program P; consequently, when a

Ideally, we want to quantify the effectiveness of a mutant goes through test data T and shows the same

test suite T , not by the ratio of the mutants it dis- behavior as P, we say that it has survived the test.

tinguishes over the total number of mutants (N), but Also, we use the term survival rate of a program P

rather by the ratio of the mutants it distinguishes over to designate the ratio (or percentage) of mutants of P

the number of non-equivalent (distinguishable) mu- that are found to survive the execution of test data T .

tants. Many researchers (Aadamopoulos et al., 2004; For the purpose of our study, we use the seman-

Papadakis et al., 2014; Schuler and Zeller, 2010; Just tic metrics introduced in (Mili et al., 2014), which we

et al., 2013; Nica and Wotawa, 2012) have addressed briefly review in section 2. In section 3 we discuss

this problem by proposing means to identify (and ex- why we feel that the semantic metrics introduced in

clude from consideration) mutants that are equivalent section 2 are good indicators of the number of poten-

to the base program. tially equivalent mutants that a program P may yield.

In sections 4 and 5 we present the details of our em- tinguish between the initial state redundancy and the

pirical study by discussing in turn the experimental final state redundancy. If we let S, σI and σF be (re-

set up we have put together, then the preliminary re- spectively) the random variable that ranges over the

sults we have found. Finally in section 6 we assess declared space, the initial actual space and the final

our prelimnary findings and sketch directions of fur- actual space, then we define:

ther research. • Initial state redundancy: κI (P) = H(S) − H(σI ).

• Final state redundancy: κF (P) = H(S) − H(σF ).

2 Semantic Metrics 2.2 Functional Redundancy

Whereas traditional software metrics usually cap- In the fault tolerant computing literature, it is common

ture properties of the source code of a program, se- to see references to modular redundancy, whereby

mantic software metrics reflect functional properties the same function is computed by different modules

of programs and specifications, regardless of how and their results compared to detect failures (for dou-

these programs and specifications are represented ble modular redundancy) and recover from them (for

(Mili et al., 2014; Morell and Voas, 1993; Voas and triple modular redundancy, or higher). But we do not

Miller, 1993; Gall et al., 2008; Etzkorn and Ghol- need deliberate, pre-planned, dependability-driven

ston, 2002). We consider four semantic metrics, due measures to have functional redundancy; we consider

to (Mili et al., 2014), and we discuss in the next sec- that we observe functional redundancy whenever a

tion why we believe that these metrics may give us function produces more information than is strictly

some indication on the survival rate of the mutants of necessary to represent its result. If we let Y be the

a program. To discuss these metrics, we consider a random variable that represents the range of the pro-

program P on space S, where S is defined by the vari- gram’s function, then the functional redundancy of

able declarations that appear in program P; elements program P is denoted by φ and defined by:

of S are denoted by lower case s, and are referred to

H(S) − H(Y)

as states of the program; by abuse of notation, we use φ(P) = .

the same symbol (P) to denote the program as well as H(Y )

the function that the program defines from its initial For double modular redundancy, this formula yields

states to its final states. We also consider a specifi- φ(P) = 1 and for triple modular redundancy it yields

cation R in the form of a binary relation on S, which φ(P) = 2; but it takes arbitrary (not necessarily inte-

represents the (initial state, final state) pairs that are ger) non-negative values.

considered correct by R. All the metrics we discuss

are based on the entropy function, with which we as- 2.3 Non-Injectivity

sume the reader to be familiar (Csiszar and Koerner,

2011). The injectivity of a function is the property of the

function to change its image as its argument changes;

2.1 State Redundancy the non-injectivity of a function is the property of

the function to map several distinct arguments onto

It is very common for programs to have a state space the same image. Programs are usually vastly non-

that is much larger than the range of values that it injective: a routine that sorts an array of size N maps

takes in any execution. Examples abound: we declare (N!) distinct arrays (corresponding to (N!) distinct

an integer variable (232 values) to represent a boolean permutations of an array of N distinct cells) into a

variable (2 values); we declare an integer variable single (sorted) permutation. We consider program P

(232 values) to represent the age of a person in years and we let X be the random variable that represents

(120 values, to be optimistic); we declare three integer the domain of P. A natural way to quantify the non-

variables (296 ≈ 1029 values) to represent the current injectivity of function P is to consider the conditional

year, birth year, and current age of a person (about 104 entropy of X given P(X); in other words, if we know

values if we consider that one of the three variables is what P(X) is, how much uncertainty do we have about

redundant, and take 120 for maximum age and 100 for X. If P is injective then the conditional entropy is

longevity of the application). The state redundancy of zero, since P(X) uniquely determines X; but if P is

a program is the difference between the entropy of the not injective then the conditional entropy reflects the

declared state and the entropy of the actual state; be- number of antecedents that Y = P(X) may have; in the

cause the entropy of the actual state may change (de- case of the sorting routine, for example, if we look

crease) during the execution of the program, we dis- at the output of the routine in the form of a sorted

array, then we know that the input could be any (of redundancy is better able to recover from deviations

the (N!)) permutations of this array. Hence we define caused by a mutated statement. Even in the absence

non-injectivity as: θ(P) = H(X|P(X)). of deliberate fault tolerance measures, increased func-

tional redundancy means increased likelihood that the

2.4 Non Determinacy final state is determined by non-affected functional in-

formation.

A specification is non-deterministic if and only if it

assigns multiple final states to a given initial state; let 3.3 Non Injectivity

S be the space defined by three variables, say x, y and

z, and let R be a specification of the form The non-injectivity of a program reflects the breadth

′ ′ ′ ′

R0 = {(s, s )|x = fx (s) ∧ y = fy (s) ∧ z = fz (s)}, of distinct initial (or intermediate) states that map to

the same final state. The higher the non-injectivity

where s stands for the aggregate hx, y, zi and fx (), fy (), of a program, the greater the likelihood that a state

fz () are three functions that can be evaluated for any generated by a base program and a state generated by

s in S. Then R0 is deterministic since for each s it a mutant are mapped to the same final state.

assigns a single s′ . The following three specifications

are increasingly non-deterministic, since they restrict 3.4 Non Determinacy

final states less and less, hence allow more and more

final states.

Let S be the space defined by three integer variables

R1 = {(s, s′ )|x′ = fx (s) ∧ y′ = fy (s)},

x, y and z, and let R and R′ be the following specifica-

R2 = {(s, s′ )|x′ = fx (s)},

tions on S:

R3 = {(s, s′ )|true }.

R = {(s, s′ )|x′ = y ∧ y′ = x}.

If we let X be a random variable that takes its values

R′ = {(s, s′ )|x′ = y ∧ y′ = x ∧ z′ = x}.

in the domain of R, and let R(X) be the set of images

Let P (base) and M (mutant) be the following pro-

of X by R, then we define the non-determinacy of R

grams on space S:

as the conditional entropy of R(X) given X, i.e. how

p: {z=x; x=y; y=z;}

much uncertainty we have about the image of X if we

m: {z=y; y=x; x=z;}

know X: χ(R) = H(R(X)|X).

Whether M is found to be equivalent to P or not de-

pends on which oracle we use to test M: If we use

specification R (which focuses on x and y) then we

3 Analytical Study find it to be equivalent, and if we use R′ we find it to

be distinct. Specification R differs from R′ in its de-

The question that we address here is: what reason gree of non-determinacy:

do we have to believe that the semantic metrics we χ(R) = 32 bits,

have discussed in the previous section have any rela- χ(R′ ) = 0 bits.

tion with the survival rate of mutants of a program? As the non-determinacy of a specification increases

the determination of equivalence weakens, and more

3.1 State Redundancy programs are considered equivalent.

State redundancy reflects to what extent the aggregate

of program variables carry more bits than necessary

to represent the actual program state; the increase in

4 Empirical Study

state redundancy yields an increase in the volume of

bits that have no effect on the execution of the pro- 4.1 Computing Survival Rates

gram. Hence with an increase in state redundancy, we

may expect an increase in the likelihood that the ex- In order to validate our conjecture that the seman-

ecution of a mutant will affect irrelevant parts of the tic metrics are correlated to the survival rate of a

state, hence will yield equivalent behavior. program’s mutants, we consider a number of pro-

grams, compute their semantic metrics, then we de-

3.2 Functional Redundancy ploy mutant generators to produce a set of mutants

for each program, and check how many mutants sur-

To the extent that added functional redundancy is vive the test in each case. To this effect, we con-

equated with greater tolerance to faults, it is normal sider a sample of thirteen (13) programs from the

to expect that a program that has greater functional Common Math Library, a library of open source Java

projects, along with their corresponding JUnit auto- 5 Statistical Observations

mated unit test data sets. For each class in the li-

brary, say Myclass.java, corresponds a test class 5.1 Correlations

MyclassTest.java, which contains a number of test

cases TestMethod() for each method of the class. Table 2 shows the correlation between the five factors

In order to generate mutants, we use the Pitest tool of table 1; of greatest interest to us is the correlation

(Coles, 2017), which integrates smoothly with JU- between the survival rate and the semantic metrics.

nit; we use Maven (Inozemtseva and Holmes, 2014) As shown in the rightmost column of table 2 these

to build and run tests. First, we specify to Pitest correlations are very high, ranging from 0.728 for ini-

which class to mutate, along with the corresponding tial state redundancy to 0.764 for final state redun-

test class; upon deployment, it generates mutants ac- dancy to 0.802 for functional redundancy to 0.921 for

cording to the specified parameters and runs the se- non-injectivity; this bears out our conjectures.

lected test suite on each one of them. Then it pro-

duces a report that shows: the line of code it mutates 5.2 Regression

for each mutant; the total number of mutants; and the

number of killed and live mutants. Because we have We run a linear regression on this data, using the sur-

no control over the test oracle (this is handled auto- vival rate as a dependent variable and the semantic

matically by the test environment) we do not include metrics as the independent variables, and we find the

non-determinacy in our current study. following equation:

SR = 2.417 + 0.012 × SRi + 0.001 × SR f

+0.116 × FR + 0.172 × NI,

where SR, SRi , SR f , FR and NI represent, respec-

4.2 Computing Semantic Metrics tively, the survival rate, the initial state redundancy,

the final state redundancy, the functional redundancy

and the non-injectivity. As far as regression statistics,

Given a random variable X that ranges over a set of we find 0.881 for R2 and 0.821 for adjusted R2 . Table

values {x1 , x2 , ...xN }, the entropy of X is defined with 3 shows the comparison between the estimated values

respect to a probability distribution, say π(), which and the actual values of the survival rates (as percent-

assigns a probability of occurrence π(xi ) to each value ages), along with the residuals. We find the average

of X. The formula of the entropy is: of residuals to be -0.132 and the standard devitaion to

H(X) = − ∑Ni=1 π(xi ) log(π(xi )), be 0.516; except for some outliers,most residuals are

and is given in bits. When the probability distribution within 20%, and many are well within 10%.

π() is uniform, we have π(xi ) = N1 for all i, which

yields H(X) = log(N). For a random variable X that

takes values of a 32-bit integer, N equals 232 and 6 Assessment and Prospects

log(N) is then merely equal to 32 bits. For the sake of

simplicity, we assume uniform probability through- Mutation testing is commonly used as a means to

out, so that the entropy of any program variable is assess the effectiveness of a test data set: Given a pro-

basically the number of bits in that variable. As for gram P and a test data set T , if we generate N mu-

computing conditional entropies, we use the defini- tants of P and run them on data T and find that they

tion H(X|Y ) = H(X,Y ) − H(Y ), and the property that all exhibit a different behavior from P, we can con-

if Y is a function of X then H(X,Y ) = H(X), so that clude that T is very effective at detecting faults in P.

the conditional entropy in these cases is H(X|Y ) = But if test data T can only distinguish, say 60% of the

H(X) − H(Y ). mutants from P, we have no way to tell whether it is

because T is ineffective or because 40% of the gen-

erated mutants are equivalent to P.In order to distin-

guish between these two situations, we need to know

4.3 Raw Data how many mutants of P are distinguishable from P,

and assess the effectiveness of T with respect to this

number, rather than with respect to N.

The raw data stemming from our experiment is given While most other approaches address the prob-

in table 1. Columns 1 to 4 are filled using the data lem of equivalent mutants by trying to characterize

collected in section 4.1 and the last column is filled / identify equivalent mutants and counting them, we

using the methods described in section 4.2. attempt to estimate how many equivalent mutants a

Initial State Final State Functional Non- Survival

Function Redundancy Redundancy Redundancy Injectivity Rate

Vector1D 229.44 293.44 11.04 128.00 33.33

subandcheck 50.72 114.72 8.63 64.00 16.67

addandcheck 50.72 32.00 2.40 64.00 33.33

multiplyentry 681.68 685.00 8.25 128.00 33.33

trigamma 55.36 57.36 8.63 64.00 20.83

distanceinf 688.32 792.12 19.80 192.00 50.00

pow 114.72 121.36 0.90 128.00 11.11

getreducedfraction 50.20 82.71 6.22 64.00 11.76

linearCombination 1092.00 1509.44 56.83 256.00 66.67

gamma 234.08 313.36 2.32 64.00 18.75

normalizeArray 702.00 812.88 15.90 0.00 6.25

addSub 101.72 447.80 13.84 64.00 16.67

fraction 545.00 609.00 1.58 64.00 28.12

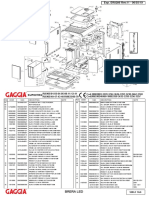

Figure 1: Raw Data

Initial State Final State Functional Non- Survival

Redundancy Redundancy Redundancy Injectivity Rate

Initial State

Redundancy 1 0.985752 0.722747 0.573626 0.727965

Final State

Redundancy 1 0.80808 0.621823 0.763944

Functional

Redundancy 1 0.704976 0.801607

Non-

Injectivity 1 0.92079

Survival

Rate 1

Figure 2: Correlation Table

Actual Estimated Relative

Function Survival Rate Survival Rate Residual

Vector1D 33.33 28.760 0.137

subandcheck 16.67 15.149 0.091

addandcheck 16.67 14.344 0.139

multiplyentry 33.33 34.255 -0.028

trigamma 20.83 15.148 0.273

distanceinf 50.00 46.789 0.064

pow 11.11 26.035 -1.343

getreducedfraction 11.76 14.831 -0.261

linearCombination 66.67 67.654 -0.015

gamma 18.75 16.816 0.103

normalizeArray 6.25 13.498 -1.159

addSub 16.67 16.353 0.019

fraction 28.12 20.757 0.262

Figure 3: Actuals, Estimates, and Residuals

program is prone to produce; in other words, we do Debroy, V. and Wong, W. E. (2010). Using mutations to

not need to know which mutants are equivalent, all automatically suggest fixes for faulty programs. In

we need to know is how many equivalent mutants we Proceedings, ICST, pages 65–74.

think there are. Our approach proceeds by analyz- Debroy, V. and Wong, W. E. (2013). Combining mutation

ing several forms of redundancy within the program, and fault localization for automated program debug-

ging. Journal of Systems and Software, 90:45–60.

which can be quantified by a set of semantic metrics.

Etzkorn, L. H. and Gholston, S. (2002). A semantic entropy

In this paper, we present the semantic metrics in ques- metric. Journal of Software Maintenance Evolution:

tion, discuss why we believe that they are related to research and Practice, 14:293–310.

the survival rate of mutants of a program, then show Gall, C. S., Lukins, S., Etzkorn, L., Gholston, L., Farring-

empirical evidence to support our conjecture, and pro- ton, P., Utley, D., Fortune, J., and Virani, S. (2008).

vide an approximate formula that estimates the sur- Semantic software metrics computed from natural lan-

vival rate of a program as a function of its semantic guage design specifications. IET Software, 2(1).

metrics. We envision the following extensions to our Inozemtseva, L. and Holmes, R. (2014). Coverage is not

work: strongly correlated with test suite effectiveness. In

Procedings, 36th International Conference on Soft-

• Because the dependent variable is normalized (a ware Engineering. ACM Press.

percentage), we argue that the independent vari-

Just, R., Ernst, M. D., and Fraser, G. (2013). Using state

ables ought to be normalized as well; hence we infection conditions to detect equivalent mutants and

envision to redo the statistical analysis using nor- sped up mutation analysis. In Proceedings, Dagstuhl

malized metrics (most can be normalized to the Seminar 13021: Symbolic Methods in Testing.

entropy of the declared state of the program). Ma, Y.-S., Offutt, J., and Kwon, Y.-R. (2005). Mujava : An

• Building a larger base of programs, involving automated class mutation system. Journal of Software

Testing, Verification and Reliability, 15(2):97–133.

larger size programs.

Mili, A., Jaoua, A., Frias, M., and Helali, R. G. (2014).

• Validate the new regression formula on a realistic Semantic metrics for software products. Innovations

set of programs, distinct from the programs with in Systems and Software Engineering, 10(3):203–217.

which we build the predictive model. Morell, L. J. and Voas, J. M. (1993). A framework for defin-

• Automating the calculation of the semantic met- ing semantic metrics. Journal of Systems and Soft-

ware, 20(3):245–251.

rics, ideally by the generation of a compiler that

analyzes the source code of the program to com- Nica, S. and Wotawa, F. (2012). Using constraints for equiv-

alent mutant detection. In Andres, C. and Llana, L.,

pute its semantic metrics. editors, Second Workshop on Formal methods in the

• Consider the oracle that is used in identify- Development of Software, EPTCS, pages 1–8.

ing equivalent mutants, and integrate the non- Papadakis, M., Delamaro, M., and LeTraon, Y. (2014).

determinacy of the oracle in the statistical model. Mitigating the effects of equivalent mutants with mu-

tant clasification strategies. Science of Computer Pro-

• Consider a possible standardization of the test gramming, 95(P3):298–319.

data we use to test mutants: currently, we are re- Schuler, D. and Zeller, A. (2010). (un-)covering equiva-

lying on the test data provided by the Commons lent mutants. In Proceedings, International Confer-

Math Library. It is possible that some test classes ence on Software Testing, Verification and Validation,

are more thorough than others; yet in order for our pages 45–54.

statistical study to be meaningful, all test classes V., D. and W.E., W. (2010). Using mutation to automatically

need to be equally thorough; we need to define suggest fixes to faulty programs. In Proceedings, ICST

standards across classes. 2010, pages 65–74.

Voas, J. M. and Miller, K. (1993). Semantic metrics for

software testability. Journal of Systems and Software,

20(3):207–216.

REFERENCES Yao, X., Harman, M., and Jia, Y. (2014). A study of equiv-

alent and stubborn mutation operators using human

Aadamopoulos, K., Harman, M., and Hierons, R. (2004). analysis of equivalence. In Proceedings, ICSE.

How to overcome the equivalent mutant problem and Zemı́n, L., Guttiérrez, S., de Rosso, S. P., Aguirre, N., Mili,

achieve tailored selective mutation using co-evolution. A., Jaoua, A., and Frias, M. (2015). Stryker: Scaling

In Proceedings, Genetic and Evolutionary Computa- specification-based program repair by pruning infeasi-

tion, volume 3103 of LNCS, pages 1338–1349. ble mutants with sat. Technical report, ITBA, Buenos

Coles, H. (2017). Real world mutation testing. Technical Aires, Argentina.

report, pitest.org.

Csiszar, I. and Koerner, J. (2011). Information Theory:

Coding Theorems for Discrete Memoryless Systems.

Cambridge University Press.

Вам также может понравиться

- Residual analysis using probability-scale residualsДокумент27 страницResidual analysis using probability-scale residualsRomualdoОценок пока нет

- A Step Towards An Intelligent Debugger: Cristinel Mateis and Markus Stumptner and Dominik Wieland and Franz WotawaДокумент7 страницA Step Towards An Intelligent Debugger: Cristinel Mateis and Markus Stumptner and Dominik Wieland and Franz WotawaaresnicketyОценок пока нет

- Modelo en Lógica Difusa de PercepciónДокумент13 страницModelo en Lógica Difusa de PercepcióndanielОценок пока нет

- Use of Informational UncertaintyДокумент6 страницUse of Informational UncertaintyGennady MankoОценок пока нет

- A Proof-Theoretic Assessment of Runtime Type ErrorsДокумент16 страницA Proof-Theoretic Assessment of Runtime Type ErrorsbromlantОценок пока нет

- Detectors and Correctors: A Theory of Fault-Tolerance ComponentsДокумент29 страницDetectors and Correctors: A Theory of Fault-Tolerance ComponentsGreis lormudaОценок пока нет

- A Practical and Complete Aproach To Predicate Refinement (JAHALA, R. McMILLAN, K. L., 2005)Документ15 страницA Practical and Complete Aproach To Predicate Refinement (JAHALA, R. McMILLAN, K. L., 2005)GaKeGuiОценок пока нет

- What Is A Fault and Why Does It Matter 2015Документ26 страницWhat Is A Fault and Why Does It Matter 2015mriwa benabdelaliОценок пока нет

- Classical Test Theory as a First-Order Item Response TheoryДокумент45 страницClassical Test Theory as a First-Order Item Response TheoryAnggoro BonongОценок пока нет

- Between-Study Heterogeneity: Doing Meta-Analysis in RДокумент23 страницыBetween-Study Heterogeneity: Doing Meta-Analysis in RNira NusaibaОценок пока нет

- Npar LDДокумент23 страницыNpar LDThaiane NolascoОценок пока нет

- Chi-Square Pearson SpearmanДокумент8 страницChi-Square Pearson SpearmanTristan da CunhaОценок пока нет

- Evaluating Verbal Models Using Statistical TechniquesДокумент9 страницEvaluating Verbal Models Using Statistical TechniquesNilesh AsnaniОценок пока нет

- Opposition-Based Differential EvolutionДокумент16 страницOpposition-Based Differential EvolutionYogesh SharmaОценок пока нет

- Comparacion GarchДокумент17 страницComparacion GarchJuan DiegoОценок пока нет

- (UM20MB502) - Unit 4 Hypothesis Testing and Linear Regression - Notes 1. Define Regression Analysis and List Their CharacteristicsДокумент6 страниц(UM20MB502) - Unit 4 Hypothesis Testing and Linear Regression - Notes 1. Define Regression Analysis and List Their CharacteristicsPrajwalОценок пока нет

- Hypothesis testing with confidence intervals and P values in PLS-SEMДокумент6 страницHypothesis testing with confidence intervals and P values in PLS-SEMSukhdeepОценок пока нет

- On Faults and Faulty Programs 2014Документ17 страницOn Faults and Faulty Programs 2014mriwa benabdelaliОценок пока нет

- Unifying Theories of Programming: A Tutorial Introduction To CSP inДокумент50 страницUnifying Theories of Programming: A Tutorial Introduction To CSP inP6E7P7Оценок пока нет

- JKBDSSKДокумент19 страницJKBDSSKcamilo4838Оценок пока нет

- Journal of Statistical Software: Power: A Reproducible Research Tool To EaseДокумент44 страницыJournal of Statistical Software: Power: A Reproducible Research Tool To Easemaf2014Оценок пока нет

- Machine Learning Methods Predict Psychology PhenomenaДокумент37 страницMachine Learning Methods Predict Psychology Phenomenaaparna gattimiОценок пока нет

- Research Paper Multiple Regression AnalysisДокумент8 страницResearch Paper Multiple Regression Analysisc9rz4vrm100% (1)

- Statistical Power As A Function of Cronbach Alpha of Instrument Questionnaire ItemsДокумент9 страницStatistical Power As A Function of Cronbach Alpha of Instrument Questionnaire ItemsAngelo PeraОценок пока нет

- Assessing Software Reliability Using Modified Genetic Algorithm: Inflection S-Shaped ModelДокумент6 страницAssessing Software Reliability Using Modified Genetic Algorithm: Inflection S-Shaped ModelAnonymous lPvvgiQjRОценок пока нет

- SRE-Yamada2011-A Bivariate Software Reliability Model With ChangeДокумент8 страницSRE-Yamada2011-A Bivariate Software Reliability Model With ChangeTirișcă AlexandruОценок пока нет

- Software Quality Measurement: Magne JørgensenДокумент11 страницSoftware Quality Measurement: Magne Jørgensenm_mubeen80Оценок пока нет

- Score (Statistics)Документ5 страницScore (Statistics)dev414Оценок пока нет

- Mme 8201-4-Linear Regression ModelsДокумент24 страницыMme 8201-4-Linear Regression ModelsJosephat KalanziОценок пока нет

- Estrategias de Control - ProcesosДокумент9 страницEstrategias de Control - ProcesosEdgar Maya PerezОценок пока нет

- Atm 08 16 982Документ8 страницAtm 08 16 982JorgeОценок пока нет

- Chapter 7 AnovaДокумент20 страницChapter 7 AnovaSolomonОценок пока нет

- R Function Analyzes One and Two SamplesДокумент17 страницR Function Analyzes One and Two SampleseuriionОценок пока нет

- CHYS 3P15 Final Exam ReviewДокумент7 страницCHYS 3P15 Final Exam ReviewAmanda ScottОценок пока нет

- 304BA AdvancedStatisticalMethodsUsingRДокумент31 страница304BA AdvancedStatisticalMethodsUsingRshubhamatilkar04Оценок пока нет

- Theoretical Computer Science Definability StudyДокумент66 страницTheoretical Computer Science Definability StudyAssaf KfouryОценок пока нет

- Statistical Testing and Prediction Using Linear Regression: AbstractДокумент10 страницStatistical Testing and Prediction Using Linear Regression: AbstractDheeraj ChavanОценок пока нет

- Factor Analysis: Statistical ModelДокумент12 страницFactor Analysis: Statistical ModelSubindu HalderОценок пока нет

- FMD PRACTICAL FILEДокумент61 страницаFMD PRACTICAL FILEMuskan AroraОценок пока нет

- Statistics ProjectДокумент13 страницStatistics ProjectFred KruegerОценок пока нет

- Approximate Reasoning in First-Order Logic Theories: Johan Wittocx and Maarten Mari en and Marc DeneckerДокумент9 страницApproximate Reasoning in First-Order Logic Theories: Johan Wittocx and Maarten Mari en and Marc DeneckergberioОценок пока нет

- 1-2b Introduction To Probability TheoryДокумент24 страницы1-2b Introduction To Probability TheoryDwi Mulyanti DwimulyantishopОценок пока нет

- One-Way Analysis of Variance F-Tests PDFДокумент18 страницOne-Way Analysis of Variance F-Tests PDFSri RamОценок пока нет

- Evco 1993 1 1 PDFДокумент25 страницEvco 1993 1 1 PDFRo HenОценок пока нет

- Effect of Uncertainty in Input and Parameter Values On Model Prediction ErrorДокумент9 страницEffect of Uncertainty in Input and Parameter Values On Model Prediction ErrorFábia Michelle da Silveira PereiraОценок пока нет

- A Tutorial On Correlation CoefficientsДокумент13 страницA Tutorial On Correlation CoefficientsJust MahasiswaОценок пока нет

- Ho - Lca - Latent Class AnalysisДокумент8 страницHo - Lca - Latent Class AnalysisYingChun PatОценок пока нет

- Gower 1966Документ15 страницGower 1966Ale CLОценок пока нет

- Evaluation JMLT Postprint-ColorДокумент28 страницEvaluation JMLT Postprint-Colorimene chatlaОценок пока нет

- B-Value Similar To The One in Our Sample (Because There Is Little Variation Across Samples)Документ4 страницыB-Value Similar To The One in Our Sample (Because There Is Little Variation Across Samples)TahleelAyazОценок пока нет

- Fast and Robust Bootstrap for Multivariate Inference: The R Package FRBДокумент32 страницыFast and Robust Bootstrap for Multivariate Inference: The R Package FRBD PОценок пока нет

- Chapter 9 Multiple Regression Analysis: The Problem of InferenceДокумент10 страницChapter 9 Multiple Regression Analysis: The Problem of InferenceAmirul Hakim Nor AzmanОценок пока нет

- Inference in HIV Dynamics Models Via Hierarchical LikelihoodДокумент27 страницInference in HIV Dynamics Models Via Hierarchical LikelihoodMsb BruceeОценок пока нет

- Generating random samples from user-defined distributionsДокумент6 страницGenerating random samples from user-defined distributionsNam LêОценок пока нет

- Stats With PythonДокумент4 страницыStats With PythonAnkur SinghОценок пока нет

- Evaluation From Precision, Recall and F MeasureДокумент28 страницEvaluation From Precision, Recall and F MeasureprobuОценок пока нет

- Imeko WC 2012 TC21 O10Документ5 страницImeko WC 2012 TC21 O10mcastillogzОценок пока нет

- Journal of Statistical Computation and SimulationДокумент18 страницJournal of Statistical Computation and SimulationAli HamiehОценок пока нет

- BAB 7 Multiple Regression and Other Extensions of The SimpleДокумент17 страницBAB 7 Multiple Regression and Other Extensions of The SimpleClaudia AndrianiОценок пока нет

- Process Performance Models: Statistical, Probabilistic & SimulationОт EverandProcess Performance Models: Statistical, Probabilistic & SimulationОценок пока нет

- Nouveau Document TexteДокумент1 страницаNouveau Document Textemriwa benabdelaliОценок пока нет

- Projecting Programs On Specifications Definitions and ApplicationsДокумент35 страницProjecting Programs On Specifications Definitions and Applicationsmriwa benabdelaliОценок пока нет

- Test-Driven Synthesis: Daniel Perelman Sumit Gulwani Dan GrossmanДокумент11 страницTest-Driven Synthesis: Daniel Perelman Sumit Gulwani Dan Grossmanmriwa benabdelaliОценок пока нет

- These de Doctorat: Universit e de Tunis Institut Sup Erieur de GestionДокумент39 страницThese de Doctorat: Universit e de Tunis Institut Sup Erieur de Gestionmriwa benabdelaliОценок пока нет

- Relational Mathematics For Relative Correctness Nov2015Документ19 страницRelational Mathematics For Relative Correctness Nov2015mriwa benabdelaliОценок пока нет

- Programming Without Refinement: Aleksandr ZakharchenkoДокумент20 страницProgramming Without Refinement: Aleksandr Zakharchenkomriwa benabdelaliОценок пока нет

- Toward A New Classi Cation of Non-Functional Requirements For Service Oriented Product LineДокумент6 страницToward A New Classi Cation of Non-Functional Requirements For Service Oriented Product LinemarwaОценок пока нет

- Projecting Programs On Specifications Definition and ImplicationsДокумент34 страницыProjecting Programs On Specifications Definition and Implicationsmriwa benabdelaliОценок пока нет

- The Z/EVES Reference Manual (For Version 1.5)Документ74 страницыThe Z/EVES Reference Manual (For Version 1.5)mriwa benabdelaliОценок пока нет

- What Is A Fault? and Why Does It Matter?Документ21 страницаWhat Is A Fault? and Why Does It Matter?mriwa benabdelaliОценок пока нет

- Proofs - RelativeCorrectness Junary 2014 Teresa MarquesДокумент18 страницProofs - RelativeCorrectness Junary 2014 Teresa Marquesmriwa benabdelaliОценок пока нет

- (R P) L RL: Description R Refine R'Документ2 страницы(R P) L RL: Description R Refine R'mriwa benabdelaliОценок пока нет

- Software Evolution by Correctness EnhancementДокумент6 страницSoftware Evolution by Correctness Enhancementmriwa benabdelaliОценок пока нет

- Correctness Enhancement, A Pervasive SEPДокумент8 страницCorrectness Enhancement, A Pervasive SEPmriwa benabdelaliОценок пока нет

- Convergence: Integrating Termination and Abort-Freedom: Nafi DialloДокумент43 страницыConvergence: Integrating Termination and Abort-Freedom: Nafi Diallomriwa benabdelaliОценок пока нет

- What Is A Fault and Why Does It Matter 2015Документ26 страницWhat Is A Fault and Why Does It Matter 2015mriwa benabdelaliОценок пока нет

- Defining Software Faults: Why It MattersДокумент11 страницDefining Software Faults: Why It Mattersmriwa benabdelaliОценок пока нет

- What Is A Fault and Why Does It Matter Nouv2015Документ14 страницWhat Is A Fault and Why Does It Matter Nouv2015mriwa benabdelaliОценок пока нет

- Bar PDFДокумент7 страницBar PDFmriwa benabdelaliОценок пока нет

- Ramics2015porto Mili AlДокумент22 страницыRamics2015porto Mili Almriwa benabdelaliОценок пока нет

- Verifying While Loops With Invariant Relations Mars 2013Документ23 страницыVerifying While Loops With Invariant Relations Mars 2013mriwa benabdelaliОценок пока нет

- Relational Mathematics For Relative Correctness Nov2015Документ19 страницRelational Mathematics For Relative Correctness Nov2015mriwa benabdelaliОценок пока нет

- On Faults and Faulty Programs 2014Документ17 страницOn Faults and Faulty Programs 2014mriwa benabdelaliОценок пока нет

- On The Lattice of Specifications Applications To A Specification Methodology 1992Документ28 страницOn The Lattice of Specifications Applications To A Specification Methodology 1992mriwa benabdelaliОценок пока нет

- Software Evolution by Correctness EnhancementДокумент6 страницSoftware Evolution by Correctness Enhancementmriwa benabdelaliОценок пока нет

- Correctness Enhancement, A Pervasive SEPДокумент8 страницCorrectness Enhancement, A Pervasive SEPmriwa benabdelaliОценок пока нет

- Defining and Applying Measures of Distance Between Specifications 2001Документ31 страницаDefining and Applying Measures of Distance Between Specifications 2001mriwa benabdelaliОценок пока нет

- Programming Without Refinement: Aleksandr ZakharchenkoДокумент20 страницProgramming Without Refinement: Aleksandr Zakharchenkomriwa benabdelaliОценок пока нет

- Correctness and Relative Correctness Mai2015 PDFДокумент4 страницыCorrectness and Relative Correctness Mai2015 PDFmriwa benabdelaliОценок пока нет

- Design and Fanrication of Tricycle For HandicappedДокумент4 страницыDesign and Fanrication of Tricycle For HandicappedshilpaОценок пока нет

- Adamu Abubakar Sidiq: Bachelor of Engineering (Chemical)Документ2 страницыAdamu Abubakar Sidiq: Bachelor of Engineering (Chemical)Abdulmumin AliyuОценок пока нет

- Blessdajwett TloДокумент2 страницыBlessdajwett TloLàürâ BøyОценок пока нет

- 434424026-Prolink-Ups-Brochure - STABILIZERДокумент2 страницы434424026-Prolink-Ups-Brochure - STABILIZERbramy santosaОценок пока нет

- Asme Section II A-2 Sa-587Документ8 страницAsme Section II A-2 Sa-587Anonymous GhPzn1xОценок пока нет

- 2019 3N MatricesДокумент11 страниц2019 3N MatricesTeow JeffОценок пока нет

- Lab 5 SQLДокумент3 страницыLab 5 SQLSHAIK ALAM (RA2111026010482)Оценок пока нет

- 12 Inch Full-Range Passive Speaker Key Features:: Marquis Bar SeriesДокумент2 страницы12 Inch Full-Range Passive Speaker Key Features:: Marquis Bar Serieslu zimmerОценок пока нет

- Power Electronics Laboratory User Manual: Revised: March 18, 2009Документ51 страницаPower Electronics Laboratory User Manual: Revised: March 18, 2009srinivasulupadarthi100% (1)

- Esxi IssuesДокумент25 страницEsxi Issuesapi-319592042Оценок пока нет

- SUP037RG: Brera LedДокумент7 страницSUP037RG: Brera LedElizabeta JakimovskaОценок пока нет

- Catalog Tecumseh CompresoresДокумент10 страницCatalog Tecumseh Compresorescesardsc1Оценок пока нет

- Quickstart Guide for EWI5000 Wireless InstrumentДокумент40 страницQuickstart Guide for EWI5000 Wireless InstrumentAdolfo LinaresОценок пока нет

- Flying™ RTK Solution As Effective Enhancement of Conventional Float RTKДокумент19 страницFlying™ RTK Solution As Effective Enhancement of Conventional Float RTKMariamОценок пока нет

- Computers As Information and Communication TechnologyДокумент23 страницыComputers As Information and Communication TechnologyRaynalie DispoОценок пока нет

- An 12705Документ35 страницAn 12705ignacioinda39Оценок пока нет

- I&m Hpto12wДокумент50 страницI&m Hpto12wdaniel100% (9)

- Shamlan Water Industry: Industrial Training ReportДокумент9 страницShamlan Water Industry: Industrial Training ReportAbu Mohammad Ebrahim AlrohmiОценок пока нет

- Chapter 1Документ8 страницChapter 1Hazel Mae HerreraОценок пока нет

- Lesson Navigation: Network Access Control List (NACL)Документ13 страницLesson Navigation: Network Access Control List (NACL)SUNNY SINGH0% (1)

- BOOK. DEMOCRACY Electronic Democracy, Mobilisation, Organisation and ParticipationДокумент222 страницыBOOK. DEMOCRACY Electronic Democracy, Mobilisation, Organisation and ParticipationMayssa BougherraОценок пока нет

- IEC 60092-352 Choice & Installation of Cables For Low Voltage Power SystemsДокумент70 страницIEC 60092-352 Choice & Installation of Cables For Low Voltage Power SystemsNguyễn Nhật ÁnhОценок пока нет

- Elcometer 456Документ72 страницыElcometer 456fajar_92Оценок пока нет

- ZFL - SouthAfrica - Hazard - RSDroughtDetection and AnalysisДокумент39 страницZFL - SouthAfrica - Hazard - RSDroughtDetection and AnalysisAsrofiОценок пока нет

- Te 1444 WebДокумент336 страницTe 1444 Webmohammed gwailОценок пока нет

- Samsung TV 2020Документ357 страницSamsung TV 2020Andreas LorentsenОценок пока нет

- WILLSEMI Will Semicon ESD5311N 2 TR - C153721 PDFДокумент5 страницWILLSEMI Will Semicon ESD5311N 2 TR - C153721 PDFPippoОценок пока нет

- Minerval A12 - EN-en-18491-1Документ2 страницыMinerval A12 - EN-en-18491-1sr2011glassОценок пока нет

- Informatica PowerCenter 9.0 Transformation Language ReferenceДокумент223 страницыInformatica PowerCenter 9.0 Transformation Language ReferenceDipankar100% (2)

- Guideline 0.2 Commissioning Process For Existing Systems and Assemblies 2015Документ80 страницGuideline 0.2 Commissioning Process For Existing Systems and Assemblies 2015Abd Alhadi100% (2)