Draft: Keynote Talk at Int. Conf. On Data Engineering 2003.

Out-of-the-Box Data Engineering

Events in Heterogeneous Data Environments

Ramesh Jain

Electrical and Computer Engineering, and

College of Computing

Georgia Institute of Technology,

Atlanta, GA 30332-0250

jain@ece.gatech.edu

Abstract

Data has changed significantly over the last few decades. Computing systems that

initially dealt with data and computation rapidly moved to information and

communication. The next step on the evolutionary scale is insight and experience.

Applications are demanding the use of live, spatio-temporal, heterogeneous data. Data

engineering must keep pace by designing experiential environments that let users apply

their senses to observe data and information about an event and to interact with aspects of

the event that are of particular interest. I call this out-of-the-box data engineering because

it means we must think beyond many of our timeworn perspectives and technical

approaches.

1.0 Introduction

Data engineering has evolved and continues to evolve faster than most people ever

imagined. While computing in the early 1970s handled only letters and numbers,

technology now furnishes its users with an unprecedented volume and variety of

data—from encyclopedia pages to clips of the latest music, from a spreadsheet to a real-

time recording of a triple bypass. And access methods and requirements are evolving at

the same pace.

To keep pace with the demand for live, spatio-temporal, heterogeneous data, data

engineering must let go of old paradigms. They have outlived their application. It is time

to think out of the box—to consider what the operating environments of these new

systems should look like. How can we build something that is experiential, not

information-centric?

Equally interesting is that user expectations of the data system have changed more

rapidly than the data itself. To keep up with these changes, we must consider what the

operating environments of future systems would be and how to realize those

environments rather than how to accommodate new functionality in our existing

paradigm.

In this paper, I look at the changing nature of applications by considering a few novel

applications that use large volumes of data and then discuss the functionality expected

from these systems. That computing systems have evolved to follow user demand and

application development is an important insight in this discussion: applications initially

focused on data and computation, then information and communication, and now insight

and experience. Most techniques in data engineering were developed to meet the needs of

data systems of the last quarter of the 20th century. Data engineers must now address the

needs of this century.

© Ramesh Jain and IEEE

2.0 Emerging Applications

Some of us are old enough to remember the gentler times of database engineering. To

define the requirements and structure of a database application, we merely looked at the

corporate database. An entity, usually an employee, consisted of alphanumeric fields,

each of which represented some attribute. Users posed a query to discover an employee

attribute or to find all employees that satisfied certain attribute-related predicates.

But although 2003 users have vastly different expectations, most databases still

have the 1970s philosophy: Users ask queries to get answers in an information-centric

environment. This works well as long as all the users have the same requirements. The

database is a resource for many people and provides a well-defined environment for

articulating queries.

Problems come when user requirements differ or when users don’t know enough

to ask the right questions. I see some of these problems already in applications that at first

glance don’t appear to be database applications. But that’s why we need out-of-the-box

thinking—to recognize that these applications are in fact the future of databases.

2.1 Personalized Event Experience

Suppose you are interested in cricket. A match lasts for five days and may not result in a

decision, even after 30 hours of play. You may be an avid fan, but you don’t want to

spend five days watching a game video. You want to watch specific events in the game,

how specific players perform, all scoring or all defensive highlights of a specific team, or

any player comments on a specific play. You may also want to understand how the game

evolved by seeing a fast animation of statistics related to different categories, or see a

particular event from several camera perspectives or listen to different commentators

describe the same event.

All these desires translate to query types that the game database must answer, and

it must present the answer in a way that lets you enjoy the game. Current portals isolate

information in silos so completely that users spend more time trying to navigate within

and across silos than in enjoying the game. Also, users, not portals, should determine the

events of interest.

2.2 EventWeb

Web search engines, for example, are notorious for their lack of discrimination. XML has

not solved these problems because ultimately no search engine can anticipate a user’s

exact needs. The semantic Web is receiving a lot of press and people are pinning many

expectations on personal agents to help find the right information and services.

I’m not convinced that this will solve the problem. The semantic Web still follows

the legacy of Gutenberg. It is a web of “pages” that are predominantly prepared in a

document mode. Again, this is fine if all you want are descriptions. But we can do so

much more. Visualize instead a web of events, in which each node represents an event,

past or present. Each event is not just someone’s description of the event or some

statistics related to it. It is the event, brought to you by one or more cameras,

microphones, infrared sensors, or any other technology that lets you experience the event.

For each event, all the information from sensors, documents, and other sources is united

and presented to the user independently of the source. The user then experiences the

preferred parts of a particular event in the preferred medium.

In this vision, events are treated equally. The archived video of a news event is

accessible in the same way as the CEO’s Web cast or your son’s first football game. The

source can be anything from CNN to the local elementary school—whatever or whoever

generates events worth archiving. And perhaps most important, because it is not text

centered, the event Web will reach the 90% of humanity who either cannot grasp or

cannot access current information and communication technology.

I see the rudiments of this vision already. Stores are stocking Web cams in every

shape and size at prices that even students can afford. Sensors that were once discrete are

now being connected to form networks for various Internet applications, from a sushi bar

in San Francisco to an ant colony in Lansing, Michigan. Multimedia phones with built-in

cameras will be next. In short, we are witnessing the beginnings of the event Web

explosion, just as decades ago we saw the document Web transform.

2.3 Scientific Applications and Data Warehouses

Transforming data from disparate sources to a sensory medium that people can

experience provides the opportunity for deeper insight into a problem or situation. This

was the basis for the use of the oscilloscope and many similar instruments. Visualization

techniques—a more modern “oscilloscope”—emerged as a powerful analysis and insight

generation environment. Now data for many applications, from customer transactions in a

retail outlet to bioinformatics, is collected and stored in data warehouses.

The data sources for these are diverse and the volumes very large. To use the data

in data warehouses effectively, we need tools and techniques to transform disparate data

into a form that will let people experience and gain insights into the situation. Current

database technology was developed for applications that were challenging three decades

ago. With the completely changed landscape of data, we require new technology to

explore this data, in most cases to generate insights into an event. We cannot do this in a

query-centric environment. The oscilloscope brought an experiential environment to

science and technology in the last century. We require an oscilloscope to bring

experience to computing in this century.

2.4 Situation Monitoring and Analysis

An increasing number of applications require that we establish a large network of

disparate data sources, including both sensors and human-entered data systems, to

produce a data stream. The data from these sources must be interpreted and combined to

provide an overall picture of the situation. This data is used to warn of potentially

disastrous events, to provide the status of activities at different locations, and to analyze

the causes of past events. In many cases, what-if analyses must be run on a real past

situation to develop solutions for similar situations. In all such

applications, real-time data analysis must combine with real-time data assimilation from

all sensors to provide a unified model of the situation. Users should not see the situation

as raw data from different sensors, but as the evolving big picture of the situation. Thus,

in an emergency situation, users see not just isolated sensor streams from different

locations, but a situation characterized as needing medical help, fire engines, or police. In

all these applications, users are interested in the real-world situation from a user’s

perspective, not the data from a specific source. Sensor data is but one of several sources

that form the model of the situation.

3.0 Common Data Characteristics

On the surface the requirements for experiential applications seem very different, but

upon closer examination they have important similarities:

• Spatio-temporal data is important.

• Different data sources provide information to form the holistic picture.

• Users are not concerned with the data source. They want to know the result of the

data assimilation (the big picture of the event).

• Real-time data processing is the only way to extract meaningful information.

• Exploration, not querying, is the predominant mode of interaction, which makes

context and state critical.

• The user is interested in experience and information, independent of the medium and

the source.

Data sources are broadly of two types, precise and imprecise, and user requirements for

the data fall into roughly two categories, insight and information. The matrix in Figure 1

captures the tensions between these four characteristics. In many situations, I know the

data source precisely, even though it may be distributed. In other cases, I know only that

what I need is available from somewhere. Likewise, in some situations, I am trying to

gain insights into the behavior of a system, event, or concept, so my primary need is to

explore and understand. In other situations, I need information and I want a specific

answer.

Predictably, databases are in the intersection of precise and information, the

bottom left quadrant. Nothing beats them as a means of getting information from a

precise source. In the top left quadrant are visualization environments and tools,

promising ways to gain insight from a precise source. In the bottom right quadrant are

search engines. Few people will dispute that search engines are an imprecise source.

However, their intention is to provide information, not further exploration. Unfortunately,

exploration does occur, but it is usually to find a suitable match for the query, not to explore

an event further. Finally, in the top right quadrant is the intersection of insight and

imprecise source. This intersection produces what I call the experiential environment, a

new way of presenting data that will become increasingly common in most data-intensive

applications. This will then improve techniques in the other three quadrants.

Insight      | Visualization     | Experiential Environments

Information  | Current Databases | Search Engines

             | Precise Source    | Imprecise Source

Figure 1. Data sources and access goals

4.0 Experiential Environment

An experiential environment is the collection of sensors and other data sources presented

in a unified model that lets the user directly apply his senses to observe data and

information of interest related to an event and to interact with the data according to his

interests.

Current database systems are essentially stateless, which lets them interact with

multiple users in multiple contexts as efficiently as possible. The drawback is that people

must adapt to the machine’s way of doing things. In the early days of computing, people

were in awe of the machine, so they were willing to rearrange their perspectives and

activities to be part of its environment. Now they are far less reverent and demand that

the machine make the adjustments. My Yahoo, My AOL, and other personalized Web

pages reflect this “my way” shift. People expect their systems to remember what they

like, where they went, what they need to do next, and where they like to shop. The

system must remember how they got to a particular state, answer questions in the

context of that state, and evolve to another state in a kind of symbiotic partnership with

the user. E-commerce recognizes this shift, which is why Amazon suggests other books

you might enjoy and displays books and other products that you most recently browsed.

Ironically, this relationship brings out the best in both partners. Humans are

efficient conceptual and perceptual analysts, but relatively poor in mathematical and

logical evaluation; computers are exactly the opposite. Computers can perform mathematical

and logical operations millions of times faster than any person, but their perceptual

capabilities—even after all the progress of the last 40 years—remain relatively primitive.

Yet current databases present sequential and logical information to humans and

expect computers to detect complex patterns. The powerful synergy of human and

machine is short-circuited. If we use computers and users synergistically, we can develop

the experiential environment.

4.1 Emphasis on natural

We are interaction-oriented creatures. It is how we learn about our environment. We

perform an action, see its effect on the environment, and act in response to that. In the

typical query system, however, we articulate a query, wait for the system to provide an

answer, analyze the response to see if the system understood what we really wanted, and

more often than not, formulate a new query and start all over again. This process is

painfully out of synch with our natural desire to learn through interaction. In experiential

environments, users get data that they can easily and rapidly interpret using their natural

senses. Once they interpret the data, they can interact with the data set either to get a

modified data set or to perform certain actions. At any given time, the data set from the

previous interaction is available and the user interacts with the system as a result of this

holistic information.

4.2 Similar query and presentation spaces

Most current systems use different query and presentation spaces. A query environment

helps users articulate their queries; the system then computes the results of the query and

presents them in a very different form. Search engines provide a box for the user to enter

keywords and the system responds with a list of thousands of entries spanning hundreds

of pages. A user has no idea how the entries on the first page relate to the entries on the

113th page (if she gets that far), how many times the same entry appears, and often how

entries on the same page can possibly have anything in common. Contrast this to video

games, in which the player formulates a query by selecting some action on the screen and

the system presents the result as some change on the same screen. Here query and

presentation spaces are the same, and the relationships among all relevant objects are

clearly presented in a form that is obvious to the user.

4.3 State and context

People don’t willingly change their physical and mental states abruptly. A gradual shift in

context is much preferred, even in natural language, which is why we take pains to insert

transitions like “on the other hand” to signal a contrast or “similarly” to signal a

comparison. People simply operate better in known contexts because they can understand

enough about the relationships among different objects in space and time to draw

inferences about them or create models of them. We live in a world that is continuous in

both space and time, so we are most comfortable organizing our knowledge of our

surroundings in that manner.

The space-time continuum is foreign to databases, and information systems in general.

How can a stateless system respect the demands of spatio-temporal data? Databases may

be efficient, but being stateless has distinct disadvantages. Latency is a big one. Not only

is the system slow, it doesn’t even give feedback about its lack of progress. The perpetual

hourglass, the bar that takes an agonizing amount of time to fill, or the endless flitting

pages are the only indications that the system hasn’t completely abandoned its task. Some

Web sites try to reveal the number of bytes left, which is marginally useful as long as

traffic allows. Nothing, however, will induce users to explore if it takes too long to move

from place to place. When latency is low, on the other hand, exploration is much more

pleasurable. Video games are an example. Their appeal is due in part to their near-zero

latency.

4.4 Multimedia immersion

Video games are also popular because they provide a powerful visual environment, and

in some cases tactile inputs. Early computing environments were strongly text oriented

because they had to be. The technology couldn’t support any alternative form. There is no

longer a reason to avoid powerful visual and audio presentations. Other senses may also

become a familiar part of the computing environment. In some cases, like chemical

industry or culinary applications, smell could make a more compelling and immersive

presentation.

I allude to video games often because they are a powerful example of a small-scale

experiential environment. Anyone can use them, even children who cannot yet read or

write. A video game can keep even these young users engaged for hours, a testimony to

its natural interactive ability.

5.0 Assimilating Data into Unified Information and Knowledge

In the experiential environment, data can be anything—audio, video, text, alphanumerics,

infrared—depending on the sensors employed. Current databases and information

systems were designed using data obtained and mediated by people, so predictably they

ended up in alphanumeric form or in text. Database designers developed techniques to

organize such data and deal with it effectively. New applications require not just the data

repository, but complete environments for information and insight.

This complete environment requires rethinking the way we index data. Current

indexing techniques for different data types depend on metadata for that particular type.

Metadata plays a key role in introducing semantics and is important in determining how

data will be used. Schemas provide semantics in relational tables.

XML has become very popular for introducing semantics in text. Ironically,

XML came about because researchers were trying to develop automatic approaches to

deduce semantics from the data. When it became clear that reaching this goal was far

more complex than they had thought, researchers turned to a mark-up approach to

semantics and threw in languages as well. I fail to understand the degree of excitement

about XML. Clearly, it will solve some interesting problems, but it is not the panacea

many people believe.

XML’s utility is limited to the introduction of semantics in strongly human-

mediated environments. For sensory data like audio and video, feature-based techniques

are much more useful. Here the goal is to identify some features in data that will serve as

a bridge between data and semantics. The idea of using clearly detectable attributes as

features to infer semantics seems an obvious solution. For images, commonly used

features are color histograms and simple measures of texture and structure. Most

techniques measure whole images, not objects within images, yet people are most often

interested in objects. How to get to those objects is a problem that most data engineers

are ignoring. The same is true of most other signals in medical, seismic, and other

applications. Signals are usually indexed using features that capture global or semi-global

characteristics, while semantics usually requires the structure of local features.

Unfortunately, these techniques are still immature, primarily because researchers

are interested in developing general-purpose techniques rather than restricting their

system to a specific context. Researchers can learn from the success of natural language

or speech recognition systems—all successful systems work in a specific context.
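
The global color-histogram feature mentioned above can be illustrated with a minimal Python sketch. It is not taken from any particular system: the function names, the bin count, and the toy pixel lists are assumptions made for illustration, and histogram intersection is simply one common way to compare two histograms. The sketch shows why such a feature is global: two images with the same colors in different positions receive identical descriptions.

```python
from collections import Counter


def color_histogram(pixels, bins_per_channel=4):
    """Normalized global color histogram of a list of (r, g, b) pixels (0-255)."""
    if not pixels:
        return {}
    # Assumes bins_per_channel divides 256 evenly (2, 4, 8, ...).
    step = 256 // bins_per_channel
    counts = Counter((r // step, g // step, b // step) for r, g, b in pixels)
    return {bin_id: n / len(pixels) for bin_id, n in counts.items()}


def histogram_intersection(h1, h2):
    """Sum of per-bin minima; 1.0 means identical color distributions."""
    return sum(min(share, h2.get(bin_id, 0.0)) for bin_id, share in h1.items())


# Toy "images" as flat pixel lists (made-up data).
red_left = [(255, 0, 0)] * 3 + [(0, 0, 255)]
red_right = [(0, 0, 255)] + [(250, 10, 5)] * 3   # same colors, different layout
all_green = [(0, 255, 0)] * 4

same = histogram_intersection(color_histogram(red_left), color_histogram(red_right))
different = histogram_intersection(color_histogram(red_left), color_histogram(all_green))
print(same, different)
```

Because the histogram discards position, it cannot say which object in the image is red, which is the gap between global features and object-level semantics described above.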

5.1 Breaking down silos

Everyone gets information about objects and events from different sources in different

data types. What I know about the war against terrorism is based on what I saw on TV,

read in newspapers and magazines, heard on the radio, and discussed with my friends.

That is, my perception is based on information I’ve assimilated from multiple data

sources. Somehow, and quite naturally, I’ve assimilated all this information and

represented it in a unified form that is independent of the individual data source.

Information systems, in contrast, create data silos. The metadata is defined and

introduced for data of a particular type, which is indexed and neatly stashed in its own

place. A video collection cannot interact with a text collection to produce a videotext

collection. Indeed, the silo structure is strongly defined with little or no interaction among

silos, as Figure 2 shows.

Video | Audio | Data and Statistics | Text  | Sensors

Index | Index | Index               | Index | Index

Figure 2. Different data sources have different indexing mechanisms, but these sources

live in their own silos.

The challenge to the database community, then, is to break down these silos to unify

information. This requires more out-of-the-box thinking because most data sources are

designed to behave like independent silos. Their creators assume that after the integration

system analyzes the silos and extracts their metadata, it will somehow combine the

metadata to provide correct results. Indeed, many current research efforts are aimed at

this kind of solution.

Researchers also form strong silos. I know from experience in many research areas,

including image and video database research, that tunnel vision is common. Just as the

six blind men had vastly different ideas about the size, shape, and function of an elephant,

so the database, computer vision, and information retrieval communities have diverse

(and equally stubborn) views of an image database. Having all these people develop

systems without communicating is no more productive than having five students in

separate rooms attempt to produce a coherent thesis.

Perhaps the challenge is to break down both kinds of silos.

5.2 Information Assimilation

Many system engineers, particularly designers of control and communication systems,

use a strong, domain-based information-assimilation approach to estimate system

parameters that uses many disjoint and disparate information sources. The parameters of

the mathematical system model are successively refined by observing the data as it

becomes available. In this approach, each data source is just one more source that

contributes to the model’s refinement, and the goal is to get the most precise model

possible. At some point, it is possible to completely ignore data from a specific source.

Thus, a data source is just that, a data source, and the model represents the current

knowledge about the system, knowledge that in turn is based on evidence from all the

data sources. Conceptually this approach is very different from current information

integration, in which the system analyzes a particular data source and then combines its

results with those of other data sources.
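
As a rough illustration of successive refinement, the following Python sketch keeps a single model parameter as an estimate with an uncertainty and refines it with each measurement, whatever its source. The update rule is the standard scalar Kalman measurement update for a static quantity, chosen here for illustration; the paper does not prescribe a specific estimator. The names `Estimate`, `assimilate`, and `max_noise`, and all readings, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    mean: float       # current best value of the model parameter
    variance: float   # current uncertainty about that value


def assimilate(model, measurement, noise_variance, max_noise=None):
    """Refine the model with one measurement from any source.

    Sources noisier than max_noise are ignored (an assumed, simple gating rule).
    """
    if max_noise is not None and noise_variance > max_noise:
        return model
    gain = model.variance / (model.variance + noise_variance)
    mean = model.mean + gain * (measurement - model.mean)
    variance = (1.0 - gain) * model.variance
    return Estimate(mean, variance)


# Made-up readings: (source, value, noise variance of that source).
model = Estimate(mean=20.0, variance=100.0)
readings = [
    ("thermometer", 22.0, 1.0),
    ("human_report", 25.0, 25.0),
    ("faulty_sensor", 90.0, 400.0),   # too noisy, will be ignored
]
for source, value, noise in readings:
    model = assimilate(model, value, noise, max_noise=100.0)
print(model)
```

The source name plays no role in the update: each reading only adjusts the shared model in proportion to how trustworthy it is, and a sufficiently unreliable source contributes nothing.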

A very important result of the assimilation approach is that the system can

efficiently deal with real-time data by keeping only what is important for the goal of the

system. Most applications collect real-time data, and it is very important to know that not all

data is equal and that its importance is context dependent. Data-engineering systems will

have to learn to ignore data.

This approach also allows a very smooth and effective introduction of semantics

in the process. Here the semantics will be brought by modeling the data and information

flow in the system, representing states and state transitions, and the role of different

objects in different states. Thus, this modeling process will help in representing data as

well as in analyzing the data as it comes to extract only meaningful information.

5.3 Event Graphs for Unified Indexing

An approach to data silo breakdown, which my colleagues and I at PRAJA and at UC

San Diego’s Electrical and Computer Engineering Department developed, is to build a

unifying indexing system that introduces a layer on top of the metadata layer of each data

silo, or disparate data source. The layer uses an event-based domain model and metadata

to construct a new index that is independent of data type. We decided to use the event—a

significant occurrence or happening located at a single point in space and time—as the

basic organizational entity for the unifying index because it has many applications and

theories in human memory organization. An application domain can be modeled in terms

of events and objects. Events are hierarchical and have all the desirable characteristics that

have made objects so popular in software development. In fact, events could be

considered objects whose primary attributes are time and space.
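
One way to picture an event as an object whose primary attributes are time and space is the following Python sketch. It is an illustration only: the `Event` class, its field names, the use of a start/end interval rather than a single instant, and the sample match data are all assumptions, not the representation used in the system described here.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Event:
    name: str
    event_class: str
    start: datetime
    end: datetime
    location: tuple                                  # assumed (latitude, longitude)
    subevents: list = field(default_factory=list)    # hierarchy of contained events

    def add_subevent(self, child):
        """Attach a child event, which must fall within the parent's time span."""
        if not (self.start <= child.start and child.end <= self.end):
            raise ValueError("a sub-event must fall within its parent's time span")
        self.subevents.append(child)
        return child

    def walk(self, depth=0):
        """Yield (depth, event) pairs for this event and everything below it."""
        yield depth, self
        for child in self.subevents:
            yield from child.walk(depth + 1)


# Made-up example data.
ground = (28.6, 77.2)
match = Event("Test match", "match", datetime(2003, 3, 1, 10), datetime(2003, 3, 5, 18), ground)
day1 = match.add_subevent(
    Event("Day 1", "session", datetime(2003, 3, 1, 10), datetime(2003, 3, 1, 18), ground))
day1.add_subevent(
    Event("Boundary", "boundary", datetime(2003, 3, 1, 11, 5), datetime(2003, 3, 1, 11, 6), ground))

outline = [(depth, event.name) for depth, event in match.walk()]
print(outline)
```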

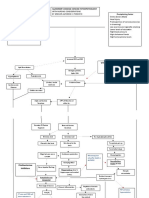

In our approach, an event graph parses the data as it arrives and assimilates

data to build an environment model that reflects knowledge about the event on the basis

of information collected so far. As Figure 3 shows, event graphs essentially create a list

of spatio-temporal events as they take place. This becomes the database that describes

domain semantics and links these events to individual data streams. Users can study as

many or as few of these as they want or they can visit the entire stream to experience the

full event. Event graphs also capture the entities and their roles in the event, the event’s

location and time, and event-transition information. They capture causality in an event-

transition mechanism.

An event base stores the name and nature of the event and all other relevant

information. The relevant information may not be available at the time the event is

created. If so, when it becomes available, the system attaches it to the event. For example,

comments in the local newspaper about the CEO’s talk can be linked to the talk when

they become available.

Thus, the event base is an organic database that keeps growing as a result of many

different processes running. It differs from the current database form in this respect.

The event base also stores links to original data sources, which means the system

can present the appropriate media in the context of a particular event. Thus, when the

cricket fan accesses the match to see all Virender Sehwag’s boundaries in the first innings,

the system can suppress all other shots and show only what the user wants.
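
The behavior of an event base described above (events stored with their entities and roles, information attached as it becomes available, and links back to the original media) can be sketched in a few lines of Python. This is a hypothetical toy, not the actual event base: `EventRecord`, `EventBase`, the method names, and the media locators are all invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class EventRecord:
    event_id: str
    event_class: str
    entities: dict                                     # role -> entity name
    media_links: list = field(default_factory=list)   # (source, locator) pairs


class EventBase:
    def __init__(self):
        self._events = {}

    def add(self, record):
        self._events[record.event_id] = record

    def attach(self, event_id, source, locator):
        """Link information that arrived after the event was created."""
        self._events[event_id].media_links.append((source, locator))

    def find(self, event_class, **roles):
        """Return events of a class whose entities fill the given roles."""
        return [e for e in self._events.values()
                if e.event_class == event_class
                and all(e.entities.get(role) == name for role, name in roles.items())]


# Made-up events and locators.
base = EventBase()
base.add(EventRecord("e1", "boundary", {"batsman": "Sehwag"}))
base.add(EventRecord("e2", "wicket", {"batsman": "Sehwag"}))
base.add(EventRecord("e3", "boundary", {"batsman": "Sehwag"}))
base.add(EventRecord("e4", "boundary", {"batsman": "Another player"}))

# Later, as sources are processed, media is linked to existing events.
base.attach("e1", "video", "camera2#t=1200")
base.attach("e1", "newspaper", "match-report-paragraph-3")

hits = base.find("boundary", batsman="Sehwag")
print([e.event_id for e in hits], hits[0].media_links)
```

The user's request touches only the event records; the media links are followed afterward to fetch just the matching shots from the original sources.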

The user interacts with the event base directly, and the event base uses original

data sources as required. This has several important implications: The system can

preprocess important information related to events and objects according to its domain

knowledge. It can present information using domain-based visualization, and it can

provide unified access to all information related to an event, independent of the time the

data became available or was entered in an associated database.

Because of these characteristics, unified indexing is the backbone of an

experiential environment.

[Diagram labels: DataSources, Eventbase]

Figure 3. Event graphs unify different data sources by providing a semantic indexing and

linking approach.

6.0 WYSIWYG Search

As Figure 4 shows, an event has three dimensions: what it is (its name and class),

where it took place, and when it took place. A user navigating through an event base is

interested in finding all events in a certain class that occurred at a particular location and

time. The event name captures the event’s purpose and identity; the event class is

organized in an ontology defined for the application. The ‘What’ part on the screen (top

left) presents a list of all events modeled in the system. Location can be specified in some

kind of map—geographic, schematic, or conceptual. Time is organized as a timeline.

Figure 4. An approach to show events and create a WYSIWYG search environment.

Clear What-When-Where areas provide a multidimensional WYSIWYG search

environment.

Users can select one or two event classes or navigate through class ontology hierarchies

endlessly; there is no theoretical limit on the subclass structure. The depth of the

hierarchy depends on the model used in the application and the data available. Selecting a

class automatically selects all its subclasses. To navigate through event location and time,

users either zoom or move in different directions, similar to the way video game players

select parts of a map, from a room to an entire world. The event timeline could be

anything from microseconds to centuries, or even longer.
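
A What-When-Where selection of this kind can be approximated by the following Python sketch, in which selecting a class also selects all of its subclasses and the location and time selections act as simple ranges. The ontology, the `Event` fields, the year-level time granularity, and the sample events are assumptions made purely for illustration.

```python
from dataclasses import dataclass

# Hypothetical class ontology: child class -> parent class.
ONTOLOGY = {
    "inventory": "logistics",
    "shipment": "logistics",
    "late_shipment": "shipment",
}


def with_subclasses(selected, ontology):
    """Return the selected class plus every class that descends from it."""
    result = {selected}
    changed = True
    while changed:
        changed = False
        for child, parent in ontology.items():
            if parent in result and child not in result:
                result.add(child)
                changed = True
    return result


@dataclass
class Event:
    name: str
    event_class: str
    lat: float
    lon: float
    year: int


def search(events, event_class, lat_range, lon_range, year_range, ontology=ONTOLOGY):
    """Filter events by class (with subclasses), map window, and time window."""
    classes = with_subclasses(event_class, ontology)
    return [e for e in events
            if e.event_class in classes
            and lat_range[0] <= e.lat <= lat_range[1]
            and lon_range[0] <= e.lon <= lon_range[1]
            and year_range[0] <= e.year <= year_range[1]]


# Made-up events.
events = [
    Event("Warehouse A stock count", "inventory", 33.7, -84.4, 2001),
    Event("Warehouse B stock count", "inventory", 51.5, -0.1, 2001),
    Event("Late delivery", "late_shipment", 33.7, -84.4, 2001),
    Event("Old stock count", "inventory", 33.7, -84.4, 1999),
]

worldwide = search(events, "inventory", (-90, 90), (-180, 180), (2001, 2001))
zoomed = search(events, "logistics", (30, 40), (-90, -80), (2001, 2001))
print([e.name for e in worldwide], [e.name for e in zoomed])
```

Zooming the map or the timeline corresponds to narrowing the ranges passed to `search`; the class selection is unaffected.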

At all points of the search the user experiences What-You-See-Is-What-You-Get

(WYSIWYG) characteristics. Once a user selects event classes, part of the map, and time,

the system presents all events and their selected attributes at all three places. In the figure,

the user has selected the inventory class for SBU accessories worldwide in 2001, which is

akin to the text query, “Show me inventory status of all the SBU accessories worldwide

in 2001.” The event list (bottom half of the screen) shows the details of the inventories.

The colored dots on the map show the location and status of the inventory: needs

immediate attention, needs some attention, okay. To avoid confusion, this example does

not show a color-code list and timeline, but if the user selects an item in the list, the

display will change color to highlight that selection and its corresponding symbols in the

time and location areas. The exact mix of color and symbols depends on the application.

By displaying events on a map as well as on a timeline, the WYSIWYG approach

maintains event context. The user can then refine the search through any window, say by

zooming into and out of the map or timeline or going left or right. A change in one part

automatically updates results in the other windows. Consequently, the query and

presentation spaces remain equal. Also, as users change the search criteria, they get

immediate results with minimal latency. In most applications, the results can be

instantaneous. Users can experiment with the data set on their own terms and develop

insights at their own pace, always with the event’s context. The system displays results

continuously, making it easier to hypothesize about data relationships. It will be possible

to test a hypothesis by linking such a system to data-mining tools that would let the user

explore large data warehouses.
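One way to picture how a change in one window updates the others is a single shared query state that notifies every registered view. This is a sketch under stated assumptions: the `LinkedSearch` class, the dictionary-shaped events, and the exact-match criteria are invented for illustration and do not describe the actual implementation.

```python
class LinkedSearch:
    """Holds one shared query; every registered view is refreshed on change."""

    def __init__(self, events):
        self.events = events   # list of dicts with "cls", "region", "year"
        self.criteria = {}     # current what/where/when selection
        self.views = []        # callables that receive the result list

    def register(self, view):
        self.views.append(view)

    def refine(self, **changes):
        """Update any criterion, recompute once, and push results to all views."""
        self.criteria.update(changes)
        results = [e for e in self.events
                   if all(e[k] == v for k, v in self.criteria.items())]
        for view in self.views:
            view(results)
        return results


# Hypothetical data; the two lists stand in for the map and event-list windows.
events = [
    {"cls": "inventory", "region": "EU", "year": 2001},
    {"cls": "inventory", "region": "US", "year": 2001},
    {"cls": "sales", "region": "US", "year": 2001},
]
map_view, list_view = [], []
search = LinkedSearch(events)
search.register(lambda r: map_view.append(len(r)))
search.register(lambda r: list_view.append(len(r)))
search.refine(cls="inventory")   # refine through the "what" window
search.refine(region="US")       # refine through the "where" window
print(map_view, list_view)       # [2, 1] [2, 1]
```

Both views receive the same result set after each refinement, which is the sense in which the query and presentation spaces stay in step.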

If a user wants to know more about an event, they can explore it by double-clicking in

any of the three windows (what, when, or where). The system then provides all the data

sources (audio, video, or text) and any other event characteristics packaged in an event

envelope, which the system automatically generates on the basis of the information

assimilated in the event base as it is created. The user can launch a variety of sources

from the envelope, and they will open in the desired mode, either in the window

the user originally selected or in a different one. The system accesses and

appropriately presents much of the information in the event envelope through links to

original sources, such as programs launched to present results of a particular dataset or a

simulation.
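An event envelope of this kind can be sketched as a record that bundles an event's attributes with links to its original sources. The field names, the `build_envelope` helper, and the sample URIs below are all hypothetical; they only illustrate the idea of generating the envelope from what the event base already holds.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class EventEnvelope:
    event_id: str
    attributes: dict                              # what/where/when and other characteristics
    sources: list = field(default_factory=list)   # links to original media, not copies


def build_envelope(event_id, event_base, source_index):
    """Assemble an envelope from records already held in the event base."""
    return EventEnvelope(
        event_id=event_id,
        attributes=dict(event_base[event_id]),
        sources=list(source_index.get(event_id, [])),
    )


# Hypothetical event base and source index, invented for illustration.
event_base = {"ev-1": {"class": "inventory", "where": "Atlanta", "when": "2001-03-01"}}
source_index = {"ev-1": [{"kind": "video", "uri": "file:///media/ev-1.mpg"},
                         {"kind": "text", "uri": "file:///reports/ev-1.txt"}]}

envelope = build_envelope("ev-1", event_base, source_index)
print(json.dumps(asdict(envelope), indent=2))
```

Storing links rather than copies keeps the envelope small enough to save or exchange, while the original sources remain where they are.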

An event envelope is a powerful mechanism that unifies the results of many complex

operations. If selected variables have dynamic attributes, the event envelope can present


historical attributes for those variables. Users can then save an event envelope as a

snapshot (the particular state of an event) and compare it with other snapshots representing

later states. The snapshot button (top right in Figure 4) lists all event envelopes the user

has saved. An event envelope can be sent through e-mail and hence can help build

communities around specific themes. Amateur astronomers, for example, scan the

sky for near-Earth objects such as comets. Clubs could exchange event envelopes and

commentary about the images in the same e-mails. Moreover, the envelopes would

contain links to details like magnitude and angular distance from a known star. This kind

of rich communication increases both individual and community knowledge.

7.0 Applications

Here we briefly describe two applications to give an idea of what could be done in

this environment.

7.1 Football

[Figure 5 diagram labels: Game; Quarter 1 through Quarter 4; under each quarter, Team A: Drive

and Team B: Drive; downs 1st, 2nd, 3rd, 4th within a drive; drive outcomes TD, FG, TO]

Figure 5: Event graph of a football game

The graph in Figure 5 is of the events in a football game. The text shows several levels

of event hierarchies, from the complete game to a particular drive. For simplicity, the

figure does not show levels below a drive. The graph also shows potential transitions

from one event to the other in the game in terms of downs. Thus, the graph represents a

subset of an event-transition diagram for the game.


The model is generic for the game. The sequence of events generated depends on the

particular game. Figure 5 shows only a small subset of the event model to give a

flavor of the application.

Our data sources for the game included video (plus audio) from multiple cameras;

play-by-play information, which various companies generated and made available as a

data stream; and a player and statistics database. A rule-based system decided if a

particular play (an event) would be of interest to anyone and thus whether or not to save

the related video.
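The paper does not list the rules themselves, so the following is only a sketch of how such a rule base might look: each rule is a labeled predicate over a play, and the video is kept if any rule fires. The rule set, thresholds, and play fields are assumptions made for illustration.

```python
# Each rule is a (label, predicate) pair; a play is kept if any rule fires.
RULES = [
    ("scoring play", lambda p: p["result"] in {"TD", "FG"}),
    ("turnover", lambda p: p["result"] == "TO"),
    ("long gain", lambda p: p["yards"] >= 20),
]


def reasons_to_keep(play):
    """Return the labels of all rules that mark this play as interesting."""
    return [label for label, predicate in RULES if predicate(play)]


# Hypothetical play records parsed from a play-by-play stream.
plays = [
    {"id": 1, "result": "none", "yards": 3},
    {"id": 2, "result": "TD", "yards": 35},
    {"id": 3, "result": "TO", "yards": 0},
]
keep = {p["id"]: reasons_to_keep(p) for p in plays if reasons_to_keep(p)}
print(keep)  # {2: ['scoring play', 'long gain'], 3: ['turnover']}
```

Keeping the reasons alongside the decision also gives the event base a ready-made annotation for each saved clip.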

The system parsed the play-by-play data stream, applied the rule base, and prepared an

event base for the game. As Figure 6 shows, the event base appeared to the user as a

“time machine,” in that users could go to any moment and see all the related statistics,

including score and timeouts left, rushing yards, and first downs for each team at that

particular time.
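The "time machine" behavior can be sketched as a fold over the play-by-play records up to the selected instant. The record layout, the sample plays, and the `state_at` helper are hypothetical; a production system would likely cache cumulative totals rather than recompute them on every query.

```python
from bisect import bisect_right

# Hypothetical play-by-play records:
# (seconds elapsed, team, rushing yards, points scored, first down?)
PLAYS = [
    (30, "A", 4, 0, False),
    (75, "A", 12, 0, True),
    (140, "A", 0, 3, False),
    (200, "B", 7, 0, False),
    (260, "B", 15, 7, True),
]
TIMES = [p[0] for p in PLAYS]  # play timestamps, already in ascending order


def state_at(t):
    """Fold all plays up to and including time t into per-team totals."""
    state = {team: {"score": 0, "rushing_yards": 0, "first_downs": 0}
             for team in ("A", "B")}
    for _, team, yards, points, first_down in PLAYS[:bisect_right(TIMES, t)]:
        state[team]["score"] += points
        state[team]["rushing_yards"] += yards
        state[team]["first_downs"] += int(first_down)
    return state


print(state_at(150))
# {'A': {'score': 3, 'rushing_yards': 16, 'first_downs': 1},
#  'B': {'score': 0, 'rushing_yards': 0, 'first_downs': 0}}
```

Dragging a pointer along the timeline then corresponds to calling `state_at` with successive values of `t`.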

This display is like the one discussed above but is designed using the domain

information for football and is hence familiar to football fans. By clicking on the timeline, a

user can select any time instant and see the state of the game at that time. By

moving the pointer on the timeline, users can see how the game evolved. They could

filter events of their choice and set them in a standard football representation: the football

field at the bottom of the screen. By double-clicking on a play, they could get more

information about the play or watch a video of it through the event viewer. As they

watched scoring plays from various angles, they could click on a player to get more

information.

Twenty-five college football teams used this system. Fans who could not watch a

game on national TV, either because the game was not televised or because they were in the

wrong location, could still enjoy their team's games in a compelling way using this

system. They could watch the same play of their favorite player from multiple angles to

gain insight into what really happened.


Figure 5: Experiential environment for football fans to enjoy a multimedia presentation

in a time-machine format.

Insight building via experience and exploration is not limited to entertainment

applications. In a modern enterprise, line managers would like to identify in real time the

potential problem areas, how they arose, and how things can be changed. The focus is

on performance indicators, which measure the discrepancies between planned and actual

performances, and on the relationships among performance indicators and available

infrastructure, environmental factors, promotional efforts, and so on. It is not enough to

discover a problem; companies want to analyze why the problem arose so that they can

improve their processes. In this context, the why includes activities related to the problem

as well as its historical perspective.

[Figure 6 diagram labels. Taxonomy ("What"): Sales (Overall; By Customer: Customer1, Customer2;

By Product Category: Cat1, Cat2; By Product: Product1, Product2), Inventory, Marketing.

Event graph nodes: YearlySalesActivity, QuarterlySalesAmount, QuarterlySalesCalls,

MonthlySalesActivity, DailySalesAmount, DailySalesCalls. YearlySalesActivity and

MonthlySalesActivity each list the attributes TargetSalesAmount, ActualSalesAmount,

SalesAmountDiscrepancy, TargetSalesCalls, ActualSalesCalls, SalesCallsDiscrepancy.]

Figure 6: Event graph and taxonomy of a Demand Activity Monitoring application

Figure 6 shows an event graph for a sales forecast and inventory monitoring system

designed to monitor an automotive parts manufacturer’s key activities. These include

sales (monthly, daily, and hourly forecast target and actual for different sales regions) and


inventory (monthly, daily, and hourly available inventory for different warehouses).

Activities are rolled up temporally (hourly → daily → monthly) and by various “actors”

(customers, parts, parts line, and so on). Figure 7 shows a screen shot of EventViewer for

this application. Performance indicators for each activity are mapped to red, yellow, and

green based on domain-specific criteria. The display in Figure 7 is a close-up version of

the display in Figure 4 shown earlier. One can select different

geographic regions and different parts to understand what was going on in that part of the

world. These displays give an analyst a holistic picture of the situation; the analyst can

then drill deeper into it. The system supports drill-down tools, but we do not discuss

them here due to space limitations.
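The temporal roll-up and the red/yellow/green mapping can be illustrated with a short sketch. The 5% and 15% shortfall thresholds stand in for the domain-specific criteria, which the paper does not specify, and the record layout and function names are likewise assumptions.

```python
from collections import defaultdict


def roll_up(hourly):
    """Sum (day, hour) -> (target, actual) records into per-day totals."""
    daily = defaultdict(lambda: [0.0, 0.0])
    for (day, _hour), (target, actual) in hourly.items():
        daily[day][0] += target
        daily[day][1] += actual
    return {day: tuple(totals) for day, totals in daily.items()}


def status(target, actual, warn=0.05, alert=0.15):
    """Map the relative shortfall against target to a traffic-light color."""
    shortfall = (target - actual) / target if target else 0.0
    if shortfall >= alert:
        return "red"
    if shortfall >= warn:
        return "yellow"
    return "green"


# Hypothetical hourly sales figures, invented for illustration.
hourly = {
    ("2001-03-01", 9): (100.0, 98.0),
    ("2001-03-01", 10): (100.0, 97.0),
    ("2001-03-02", 9): (100.0, 70.0),
    ("2001-03-02", 10): (100.0, 90.0),
}
daily = roll_up(hourly)
print({day: status(t, a) for day, (t, a) in daily.items()})
# {'2001-03-01': 'green', '2001-03-02': 'red'}
```

The same `roll_up` pattern, keyed on month instead of day, would take the daily totals to the monthly level; rolling up by customer or part would key on the actor instead of the time period.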

Figure 7: Another display of the inventory application. Compare this display to the

one in Figure 4 to see how the system can be used in WYSIWYG mode.

Conclusion

Rapid advances in many related areas have brought in some interesting challenges for the

data engineering community. Traditional database techniques need to be reconsidered

and readapted for the new applications. Relational approaches are powerful and will still


be useful as a backend. But the front end of these systems requires data engineering that

is very different from what we have done so far. The challenge is to take a more

solution-oriented perspective or be boxed into back-end repository management.

Some new attributes of data emerge as dominant issues: semantics, multimedia,

live, location sensitivity, and separate streams of sensor and other data. To unify all

sources of information, events appear to offer a powerful approach for modeling,

managing and presenting data. I believe that event-based experiential environments will

be useful in many emerging applications. The thoughts and ideas I have presented are

still in the conceptual stage. We have a long way to go in refining this approach to make

it practical, but it is clear we must take a new path, one that is outside conventional

thinking, if we are to keep pace with and enable these new applications.

