Академический Документы

Профессиональный Документы

Культура Документы

Lovely Professional University Term Paper OF Digita L Communicaton System (Data Compression)

Загружено:

Ajeet PandeyИсходное описание:

Оригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Lovely Professional University Term Paper OF Digita L Communicaton System (Data Compression)

Загружено:

Ajeet PandeyАвторское право:

Доступные форматы

LOVELY PROFESSIONAL UNIVERSITY

TERM PAPER

OF

DIGITA L COMMUNICATON SYSTEM

(DATA COMPRESSION)

BY : Joginder singh

Roll:34

Sec:B6803

About the Author:-

ABSTRACT: This term paper deals with the term data Claude Elwood Shannon (April 30, 1916 – February 24, 2001) was

compression. how it is done ,lossy compression, it also explain an American mathematician, electronic engineer, and

about limitations and future aspects of data compression. cryptographer known as "the father of information theory".

History:- Shannon is famous for having founded information theory with

one landmark paper published in 1948. But he is also credited with

founding both digital computer and digital circuit design theory in

In his 1948 paper, ``A Mathematical Theory of Communication,''

1937, when, as a 21-year-old master's student at MIT, he wrote a

Claude E. Shannon formulated the theory of data compression. thesis demonstrating that electrical application of Boolean algebra

Shannon established that there is a fundamental limit to lossless could construct and resolve any logical, numerical relationship. It

data compression. This limit, called the entropy rate, is denoted by has been claimed that this was the most important master's thesis

H. The exact value of H depends on the information source --- of all time.

more specifically, the statistical nature of the source. It is possible

to compress the source, in a lossless manner, with compression rate

close to H. It is mathematically impossible to do better than H.

Shannon also developed the theory of lossy data compression. This

is better known as rate-distortion theory. In lossy data

compression, the decompressed data does not have to be exactly WHAT IS DATA COPRESSION ?

the same as the original data. Instead, some amount of distortion,

D, is tolerated. Shannon showed that, for a given source (with all

its statistical properties known) and a given distortion measure, IN GENERAL:-

there is a function, R(D), called the rate-distortion function. The

theory says that if D is the tolerable amount of distortion, then

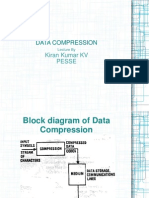

In computer science and information theory, data compression,

R(D) is the best possible compression rate.

source coding or bit-rate reduction is the process of encoding

information using fewer bits than the original representation would

When the compression is lossless (i.e., no distortion or D=0), the use.

best possible compression rate is R(0)=H (for a finite alphabet

source). In other words, the best possible lossless compression rate

is the entropy rate. In this sense, rate-distortion theory is a In terms of Digital:-

generalization of lossless data compression theory, where we went

from no distortion (D=0) to some distortion (D>0). Compression is useful because it helps reduce the consumption of

expensive resources, such as hard disk space or transmission

Lossless data compression theory and rate-distortion theory are bandwidth. On the downside, compressed data must be

known collectively as source coding theory. Source coding theory decompressed to be used, and this extra processing may be

sets fundamental limits on the performance of all data compression detrimental to some applications.

algorithms. The theory, in itself, does not specify exactly how to

design and implement these algorithms. It does, however, provide Example to show compression :-

some hints and guidelines on how to achieve optimal performance.

In the following sections, we will described how Shannon modeled

an information source in terms of a random process, we will For instance, a compression scheme for video may require

present Shannon lossless source coding theorem, and we will expensive hardware for the video to be decompressed fast enough

discuss Shannon rate-distortion theory. A background in to be viewed as it is being decompressed (the option of

probability theory is recommended. decompressing the video in full before watching it may be

inconvenient, and requires storage space for the decompressed

video).

phrase, we just point to the words in the first half and fill in the

spaces and punctuation.

The design of data compression schemes therefore involves trade-

offs among various factors, including the degree of compression,

the amount of distortion introduced (if using a lossy compression

scheme), and the computational resources required to compress

and uncompress the data. Types of data compressions:-

How file compression works:- 1. Lossy data compression

2. Lossless data compression

If you download many programs and files off the Internet, you've

probably encountered ZIP files before. This compression system is

a very handy invention, especially for Web users, because it lets

you reduce the overall number of bits and bytes in a file so it can

be transmitted faster over slower Internet connections, or take up

less space on a disk. Once you download the file, your computer

uses a program such as WinZip or Stuffit to expand the file back to

its original size. If everything works correctly, the expanded file is

identical to the original file before it was compressed.

At first glance, this seems very mysterious. How can you reduce

the number of bits and bytes and then add those exact bits and

bytes back later? As it turns out, the basic idea behind the process

is fairly straightforward. In this article, we'll examine this simple

method as we take a very small file through the basic process of

compression.

Most types of computer files are fairly redundant -- they have the

same information listed over and over again. File-compression

programs simply get rid of the redundancy. Instead of listing a

piece of information over and over again, a file-compression Lossy compression:-

program lists that information once and then refers back to it

whenever it appears in the original program. In information technology, "lossy" compression is a data encoding

method which compresses data by discarding (losing) some of it.

As an example, let's look at a type of information we're all familiar The procedure aims to minimise the amount of data that needs to

with: words. be held, handled, and/or transmitted by a computer. Typically, a

substantial amount of data can be discarded before the result is

sufficiently degraded to be noticed by the user.

In John F. Kennedy's 1961 inaugural address, he delivered this

famous line:

Lossy compression is most commonly used to compress

multimedia data (audio, video, still images), especially in

"Ask not what your country can do for you -- ask what you can do applications such as streaming media and internet telephony. By

for your country." contrast, lossless compression is required for text and data files,

such as bank records, text articles, etc. In many cases it is

The quote has 17 words, made up of 61 letters, 16 spaces, one dash advantageous to make a master lossless file which can then be used

and one period. If each letter, space or punctuation mark takes up to produce compressed files for different purposes; for example a

one unit of memory, we get a total file size of 79 units. To get the multi-megabyte file can be used at full size to produce a full-page

file size down, we need to look for redundancies. advertisement in a glossy magazine, and a 10 kilobyte lossy copy

made for a small image on a web page.

Immediately, we notice that:

Transform coding:-

"ask" appears two times

"what" appears two times More generally, lossy compression can be thought of as an

"your" appears two times application of transform coding – in the case of multimedia data,

"country" appears two times perceptual coding: it transforms the raw data to a domain that more

"can" appears two times accurately reflects the information content. For example, rather

"do" appears two times than expressing a sound file as the amplitude levels over time, one

may express it as the frequency spectrum over time, which

"for" appears two times

corresponds more accurately to human audio perception.

"you" appears two times

While data reduction (compression, be it lossy or lossless) is a

Ignoring the difference between capital and lower-case letters,

main goal of transform coding, it also allows other goals: one may

roughly half of the phrase is redundant. Nine words -- ask, not,

represent data more accurately for the original amount of space [1] –

what, your, country, can, do, for, you -- give us almost everything

for example, in principle, if one starts with an analog or high-

we need for the entire quote. To construct the second half of the

resolution digital master, an MP3 file of a given bitrate (e.g. 320

kbit/s) should provide a better representation than a raw Video can be compressed immensely (e.g. 100:1) with

uncompressed audio in WAV or AIFF file of the same bitrate. little visible quality loss;

(Uncompressed audio can get lower bitrate only by lowering Audio can often be compressed at 10:1 with

sampling frequency and/or sampling resolution.) Further, a imperceptible loss of quality;

transform coding may provide a better domain for manipulating or Still images are often lossily compressed at 10:1, as

otherwise editing the data – for example, equalization of audio is with audio, but the quality loss is more noticeable,

most naturally expressed in the frequency domain (boost the bass, especially on closer inspection.

for instance) rather than in the raw time domain.

The compression rate is 5 to 6 % in case of lossy compression

From this point of view, perceptual encoding is not essentially while in case of lossless compression it is about 50 to 60 % of the

about discarding data, but rather about a better representation of actual file

data.

Lowering resolution:-

Another use is for backward compatibility and graceful

degradation: in color television, encoding color via a luminance-

chrominance transform domain (such as YUV) means that black- A general kind of lossy compression is to lower the resolution of

and-white sets display the luminance, while ignoring the color an image, as in image scaling, particularly decimation. One may

information. also remove less "lower information" parts of an image, such as by

seam carving.

Another example is chroma subsampling: the use of color spaces

such as YIQ, used in NTSC, allow one to reduce the resolution on Many media transforms, such as Gaussian blur, are, like lossy

the components to accord with human perception – humans have compression, irreversible: the original signal cannot be

highest resolution for black-and-white (luma), lower resolution for reconstructed from the transformed signal. However, in general

mid-spectrum colors like yellow and green, and lowest for red and these will have the same size as the original, and are not a form of

blues – thus NTSC displays approximately 350 pixels of luma per compression.

scanline, 150 pixels of yellow vs. green, and 50 pixels of blue vs.

red, which are proportional to human sensitivity to each

Lowering resolution has practical uses, as the NASA New

component.

Horizons craft will transmit thumbnails of its encounter with Pluto-

Charon before it sends the higher resolution images.

Information loss:-

Lossless data compression:-

Lossy compression formats suffer from generation loss: repeatedly

compressing and decompressing the file will cause it to

progressively lose quality. This is in contrast with lossless data In general:-

compression, where data will not be lost via the use of such a

procedure. Refers to data compression techniques in which no data is lost. The

PKZIP compression technology is an example of lossless

Information-theoretical foundations for lossy data compression are compression. For most types of data, lossless compression

provided by rate-distortion theory. Much like the use of probability techniques can reduce the space needed by only about 50%. For

in optimal coding theory, rate-distortion theory heavily draws on greater compression, one must use a lossy compression technique.

Bayesian estimation and decision theory in order to model

Note, however, that only certain types of data -- graphics, audio,

perceptual distortion and even aesthetic judgment.

and video -- can tolerate lossy compression. You must use a

lossless compression technique when compressing data and

Transparency:- programs.

When a user acquires a lossily compressed file, (for example, to

reduce download time) the retrieved file can be quite different

In terms of digital:-

from the original at the bit level while being indistinguishable to

the human ear or eye for most practical purposes. Many Lossless data compression is a class of data compression

compression methods focus on the idiosyncrasies of human algorithms that allows the exact original data to be reconstructed

physiology, taking into account, for instance, that the human eye from the compressed data. The term lossless is in contrast to lossy

can see only certain wavelengths of light. The psychoacoustic data compression, which only allows an approximation of the

model describes how sound can be highly compressed without original data to be reconstructed, in exchange for better

degrading perceived quality. Flaws caused by lossy compression compression rates.

that are noticeable to the hu;man eye or ear are known as

compression artifacts.

Lossless data compression is used in many applications. For

example, it is used in the ZIP file format and in the Unix tool gzip.

Compression ratio:- It is also often used as a component within lossy data compression

technologies (e.g. lossless mid/side joint stereo preprocessing by

the LAME MP3 encoder and other lossy audio encoders).

The compression ratio (that is, the size of the compressed file

compared to that of the uncompressed file) of lossy video codecs is

nearly always far superior to that of the audio and still-image Lossless compression is used in cases where it is important that the

equivalents. original and the decompressed data be identical, or where

deviations from the original data could be deleterious. Typical

examples are executable programs, text documents and source will be compressed into something that is shorter than

code. Some image file formats, like PNG or GIF, use only lossless itself.

compression, while others like TIFF and MNG may use either Let M be the least number such that there is a file F

lossless or lossy methods. Lossless audio formats are most often with length M bits that compresses to something

used for archiving or production purposes, with smaller lossy shorter. Let N be the length (in bits) of the compressed

audio files being typically used on portable players and in other version of F.

cases where storage space is limited and/or exact replication of the Because N < M, every file of length N keeps its size

audio is unnecessary. during compression. There are 2N such files. Together

with F, this makes 2N + 1 files which all compress into

one of the 2N files of length N.

Lossless compression techniques:- But 2N is smaller than 2N + 1, so by the pigeonhole

principle there must be some file of length N which is

Most lossless compression programs do two things in sequence: simultaneously the output of the compression function

the first step generates a statistical model for the input data, and the on two different inputs. That file cannot be

second step uses this model to map input data to bit sequences in decompressed reliably (which of the two originals

such a way that "probable" (e.g. frequently encountered) data will should that yield?), which contradicts the assumption

produce shorter output than "improbable" data. that the algorithm was lossless.

We must therefore conclude that our original

hypothesis (that the compression function makes no file

The primary encoding algorithms used to produce bit sequences longer) is necessarily untrue.

are Huffman coding (also used by DEFLATE) and arithmetic

coding. Arithmetic coding achieves compression rates close to the

best possible for a particular statistical model, which is given by Any lossless compression algorithm that makes some files shorter

the information entropy, whereas Huffman compression is simpler must necessarily make some files longer, but it is not necessary

and faster but produces poor results for models that deal with that those files become very much longer. Most practical

symbol probabilities close to 1. compression algorithms provide an "escape" facility that can turn

off the normal coding for files that would become longer by being

encoded. Then the only increase in size is a few bits to tell the

There are two primary ways of constructing statistical models: in a decoder that the normal coding has been turned off for the entire

static model, the data is analyzed and a model is constructed, then input. For example, DEFLATE compressed files never need to

this model is stored with the compressed data. This approach is grow by more than 5 bytes per 65,535 bytes of input.

simple and modular, but has the disadvantage that the model itself

can be expensive to store, and also that it forces a single model to

be used for all data being compressed, and so performs poorly on In fact, if we consider files of length N, if all files were equally

files containing heterogeneous data. Adaptive models dynamically probable, then for any lossless compression that reduces the size of

update the model as the data is compressed. Both the encoder and some file, the expected length of a compressed file (averaged over

decoder begin with a trivial model, yielding poor compression of all possible files of length N) must necessarily be greater than N.

initial data, but as they learn more about the data performance So if we know nothing about the properties of the data we are

improves. Most popular types of compression used in practice now compressing, we might as well not compress it at all. A lossless

use adaptive coders. compression algorithm is useful only when we are more likely to

compress certain types of files than others; then the algorithm

could be designed to compress those types of data better.

Lossless compression methods may be categorized according to

the type of data they are designed to compress. While, in principle,

any general-purpose lossless compression algorithm (general- Thus, the main lesson from the argument is not that one risks big

purpose meaning that they can compress any bitstring) can be used losses, but merely that one cannot always win. To choose an

on any type of data, many are unable to achieve significant algorithm always means implicitly to select a subset of all files that

compression on data that are not of the form for which they were will become usefully shorter. This is the theoretical reason why we

designed to compress. Many of the lossless compression need to have different compression algorithms for different kinds

techniques used for text also work reasonably well for indexed of files: there cannot be any algorithm that is good for all kinds of

images. data.

The "trick" that allows lossless compression algorithms, used on

the type of data they were designed for, to consistently compress

such files to a shorter form is that the files the algorithms are

Limitations:- designed to act on all have some form of easily-modeled

redundancy that the algorithm is designed to remove, and thus

belong to the subset of files that that algorithm can make shorter,

Lossless data compression algorithms cannot guarantee whereas other files would not get compressed or even get bigger.

compression for all input data sets. In other words, for any Algorithms are generally quite specifically tuned to a particular

(lossless) data compression algorithm, there will be an input data type of file: for example, lossless audio compression programs do

set that does not get smaller when processed by the algorithm. This not work well on text files, and vice versa.

is easily proven with elementary mathematics using a counting

argument, as follows:

In particular, files of random data cannot be consistently

compressed by any conceivable lossless data compression

Assume that each file is represented as a string of bits algorithm: indeed, this result is used to define the concept of

of some arbitrary length. randomness in algorithmic complexity theory.

Suppose that there is a compression algorithm that

transforms every file into a distinct file which is no

longer than the original file, and that at least one file An algorithm that is asserted to be able to losslessly compress any

data stream is provably impossible.[6] While there have been many

claims through the years of companies achieving "perfect Lossless

compression" where an arbitrary number N of random bits can

always be compressed to N-1 bits, these kinds of claims can be

The Lempel–Ziv (LZ) compression methods are among the most

safely discarded without even looking at any further details

popular algorithms for lossless storage. DEFLATE is a variation

regarding the purported compression scheme. Such an algorithm

on LZ which is optimized for decompression speed and

contradicts fundamental laws of mathematics because, if it existed,

compression ratio, therefore compression can be slow. DEFLATE

it could be applied repeatedly to losslessly reduce any file to length

is used in PKZIP, gzip and PNG. LZW (Lempel–Ziv–Welch) is

0. Allegedly "perfect" compression algorithms are usually called

used in GIF images. Also noteworthy are the LZR (LZ–Renau)

derisively "magic" compression algorithms.

methods, which serve as the basis of the Zip method. LZ methods

utilize a table-based compression model where table entries are

On the other hand, it has also been proven that there is no substituted for repeated strings of data. For most LZ methods, this

algorithm to determine whether a file is incompressible in the table is generated dynamically from earlier data in the input. The

sense of Kolmogorov complexity; hence, given any particular file, table itself is often Huffman encoded (e.g. SHRI, LZX). A current

even if it appears random, it's possible that it may be significantly LZ-based coding scheme that performs well is LZX, used in

compressed, even including the size of the decompressor. An Microsoft's CAB format.

example is the digits of the mathematical constant pi, which appear

random but can be generated by a very small program. However,

The very best modern lossless compressors use probabilistic

even though it cannot be determined whether a particular file is

models, such as prediction by partial matching. The Burrows–

incompressible, a simple theorem about incompressible strings

Wheeler transform can also be viewed as an indirect form of

shows that over 99% of files of any given length cannot be

statistical modelling.

compressed by more than one byte (including the size of the

decompressor).

In a further refinement of these techniques, statistical predictions

can be coupled to an algorithm called arithmetic coding.

Arithmetic coding, invented by Jorma Rissanen, and turned into a

practical method by Witten, Neal, and Cleary, achieves superior

Mathematical background:- compression to the better-known Huffman algorithm, and lends

itself especially well to adaptive data compression tasks where the

predictions are strongly context-dependent. Arithmetic coding is

Any compression algorithm can be viewed as a function that maps used in the bilevel image-compression standard JBIG, and the

sequences of units (normally octets) into other sequences of the document-compression standard DjVu. The text entry system,

same units. Compression is successful if the resulting sequence is Dasher, is an inverse-arithmetic-coder.

shorter than the original sequence plus the map needed to

decompress it. In order for a compression algorithm to be

considered lossless, there needs to exist a reverse mapping from Lossless and lossy compression are terms that describe whether or

compressed bit sequences to original bit sequences; that is to say, not, in the compression of a file, all original data can be recovered

the compression method would need to encapsulate a bijection when the file is uncompressed. With lossless compression, every

between "plain" and "compressed" bit sequences. single bit of data that was originally in the file remains after the

file is uncompressed. All of the information is completely restored.

The sequences of length N or less are clearly a strict superset of the This is generally the technique of choice for text or spreadsheet

sequences of length N-1 or less. It follows that there are more files, where losing words or financial data could pose a problem.

sequences of length N or less than there are sequences of length N- The Graphics Interchange File (GIF) is an image format used on

1 or less. It therefore follows from the pigeonhole principle that it the Web that provides lossless compression.

is not possible to map every sequence of length N or less to a

unique sequence of length N-1 or less. Therefore it is not possible

to produce an algorithm that reduces the size of every possible On the other hand, lossy compression reduces a file by

input sequence. permanently eliminating certain information, especially redundant

information. When the file is uncompressed, only a part of the

original information is still there (although the user may not notice

Lossless versus lossy compression:- it). Lossy compression is generally used for video and sound,

where a certain amount of information loss will not be detected by

Lossy:- most users. The JPEG image file, commonly used for photographs

and other complex still images on the Web, is an image that has

lossy compression. Using JPEG compression, the creator can

Lossy image compression is used in digital cameras, to increase decide how much loss to introduce and make a trade-off between

storage capacities with minimal degradation of picture quality. file size and image quality.

Similarly, DVDs use the lossy MPEG-2 Video codec for video

compression.

In lossy audio compression, methods of psychoacoustics are used

to remove non-audible (or less audible) components of the signal. The type of compression we've been discussing here is called

Compression of human speech is often performed with even more lossless compression, because it lets you recreate the original file

specialized techniques, so that "speech compression" or "voice exactly. All lossless compression is based on the idea of breaking a

coding" is sometimes distinguished as a separate discipline from file into a "smaller" form for transmission or storage and then

"audio compression". Different audio and speech compression putting it back together on the other end so it can be used again.

standards are listed under audio codecs. Voice compression is used

in Internet telephony for example, while audio compression is used Lossy compression works very differently. These programs simply

for CD ripping and is decoded by audio players. eliminate "unnecessary" bits of information, tailoring the file so

that it is smaller. This type of compression is used a lot for matter of data compression, one can say precisely and confidently

reducing the file size of bitmap pictures, which tend to be fairly things like "This bit of knowledge explains 3.716% of the given

bulky. To see how this works, let's consider how your computer dataset".

might compress a scanned photograph.

Advantages of data compression:-

A lossless compression program can't do much with this type of

file. While large parts of the picture may look the same -- the

whole sky is blue, for example -- most of the individual pixels are i) It reduces the data storage requirements

a little bit different. To make this picture smaller without ii) The audience can experience rich-quality signals for audio-visual data

compromising the resolution, you have to change the color value representation

for certain pixels. If the picture had a lot of blue sky, the program iii) Data security can also be greatly enhanced by encrypting the decoding

would pick one color of blue that could be used for every pixel. parameters andtransmitting them separately from the compressed database

Then, the program rewrites the file so that the value for every sky files to restrict access of proprietary information

pixel refers back to this information. If the compression scheme iv) The rate of input-output operations in a computing device can be greatly

works well, you won't notice the change, but the file size will be increased due toshorter representation of data

significantly reduced. v) Data Compression obviously reduces the cost of backup and recovery of

data in computersystems by storing the backup of large database files in

compressed form

Of course, with lossy compression, you can't get the original file

back after it has been compressed. You're stuck with the

compression program's reinterpretation of the original. For this

reason, you can't use this sort of compression for anything that Disadvantages of Data Compression:-

needs to be reproduced exactly, including software applications,

databases and presidential inauguration speeches. i) The extra overhead incurred by encoding and decoding process is one of

the most seriousdrawbacks of data compression, which discourages its use in

someareas

Future scope of data compression:- ii)Data compression generally reduces the reliability of the records

iii) Transmission of very sensitive compressed data through a noisy

communication channel isrisky because the burst errors introduced by the

The ultimate form of data compression is when you compile the

noisy channel can destroy the transmitteddata

dataset into a program which, when executed, produces the

iv) Disruption of data properties of a compressed data, will result in

original dataset.

compressed data differentfrom the original data

v) In many hardware and systems implementations, the extra complexity

It is important not because it is an immediately practical way of added by data

compressing datasets for storage or transmission, but because there compression can increase the system‟s cost and reduce the

is a deep connection between understanding a dataset and being system‟s efficiency, especially

able to compress it: Anything you genuinely know about a dataset in the areas of applications that require very low-power VLSI implementation

allows you to compress it better, and conversely anything that

allows you to compress a dataset better represents real knowledge

of some sort about that dataset. (And contrawise, if it doesn't help

you compress the dataset, it isn't knowledge, just fanciful References:

projection of your own thoughts onto the screen of the dataset.)

1. www.data-compression.com

This in turn is important because it puts the notion of 2. datacompression.info

"understanding" on a firm quantitative footing: Shannon's 3. The data compression book by Mark Nelson

information theory gives us a solid theoretical basis for measuring

and comparing information content. In conventional qualitative 4. http://en.wikipedia.org/wiki/Data_compression

artificial intelligence hacking, whether and how much a system 5. http://en.wikipedia.org/wiki/Lossless_data_compressio

learns is very much a matter of subjective handwaving rather than n

precise calculation: "Looks smart to me!" By looking at it as a

Вам также может понравиться

- Data Compression: Unlocking Efficiency in Computer Vision with Data CompressionОт EverandData Compression: Unlocking Efficiency in Computer Vision with Data CompressionОценок пока нет

- Image Compression: Efficient Techniques for Visual Data OptimizationОт EverandImage Compression: Efficient Techniques for Visual Data OptimizationОценок пока нет

- Matter UnrevДокумент77 страницMatter UnrevmohanОценок пока нет

- Dereje Teferi Dereje - Teferi@aau - Edu.etДокумент36 страницDereje Teferi Dereje - Teferi@aau - Edu.etdaveОценок пока нет

- Data Compression: This Article May Require byДокумент25 страницData Compression: This Article May Require bygayathri2012Оценок пока нет

- Data CompressionДокумент18 страницData Compressionsebastian431Оценок пока нет

- Efficiency of Lossless Data Compression: MIPRO 2011, May 23-27, 2011, Opatija, CroatiaДокумент6 страницEfficiency of Lossless Data Compression: MIPRO 2011, May 23-27, 2011, Opatija, CroatiaGaurav SaxenaОценок пока нет

- Data Compression in Multimedia Text Imag PDFДокумент7 страницData Compression in Multimedia Text Imag PDFKam PisesОценок пока нет

- Image Compression Using Discrete Cosine Transform and Adaptive Huffman CodingДокумент5 страницImage Compression Using Discrete Cosine Transform and Adaptive Huffman CodingInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Lecture 1Документ11 страницLecture 1Sam De EvansОценок пока нет

- CompressionДокумент3 страницыCompressionMaria Deborah Vivas BaluranОценок пока нет

- MCE 316 Lecture NoteДокумент56 страницMCE 316 Lecture NoteRaji RidwanОценок пока нет

- A Study On Various Data Compression Types and TechniquesДокумент6 страницA Study On Various Data Compression Types and TechniquesperhackerОценок пока нет

- Lossless CompressionДокумент11 страницLossless CompressionTDelfuegoОценок пока нет

- Data CompressionДокумент20 страницData CompressionHimanshu PandayОценок пока нет

- Termpaper ReportДокумент16 страницTermpaper ReportNamanОценок пока нет

- Data Compression ReportДокумент12 страницData Compression ReportNikhil NandaОценок пока нет

- Encoder & DecoderДокумент7 страницEncoder & DecoderPradeep JaganathanОценок пока нет

- PHD Thesis Image CompressionДокумент4 страницыPHD Thesis Image Compressionfczeohief100% (1)

- 20.1 References For Image CompressionДокумент64 страницы20.1 References For Image CompressionKanhu apsОценок пока нет

- Graphic Compression CAD/CAMДокумент22 страницыGraphic Compression CAD/CAMKler ManpreetОценок пока нет

- Review of Data Compression and Different Techniques of Data Compression IJERTV2IS1106Документ8 страницReview of Data Compression and Different Techniques of Data Compression IJERTV2IS1106Rashmi RaiОценок пока нет

- Data Compression ReportДокумент10 страницData Compression ReportBunty LambaОценок пока нет

- Text Data CompressionДокумент13 страницText Data CompressionJim AchuffОценок пока нет

- DaggyДокумент8 страницDaggysamson oinoОценок пока нет

- Modified Run Length Encoding Scheme For High Data Compression RateДокумент5 страницModified Run Length Encoding Scheme For High Data Compression RateGroleo MuttОценок пока нет

- A Novel Approach of Lossless Image Compression Using Two TechniquesДокумент5 страницA Novel Approach of Lossless Image Compression Using Two Techniquessklove123Оценок пока нет

- A Review of Data Compression Techniques: International Journal of Applied Engineering Research January 2017Документ9 страницA Review of Data Compression Techniques: International Journal of Applied Engineering Research January 2017Stanley SathlerОценок пока нет

- Image Compression: TransmitДокумент16 страницImage Compression: TransmitGaurav LathwalОценок пока нет

- Data Compression ExplainedДокумент110 страницData Compression ExplainedARob109Оценок пока нет

- Data Compression: Jorge SolorzanoДокумент11 страницData Compression: Jorge Solorzanofrancisco KaundaОценок пока нет

- Compressed Data Transmission Among Nodes in Bigdata: American Journal of Engineering Research (Ajer)Документ4 страницыCompressed Data Transmission Among Nodes in Bigdata: American Journal of Engineering Research (Ajer)AJER JOURNALОценок пока нет

- A Review of Data Compression TechniquesДокумент9 страницA Review of Data Compression TechniquesperhackerОценок пока нет

- Data CompressionДокумент23 страницыData Compressionप्रणव प्रसाद सिंहОценок пока нет

- Content-Based Textual Big Data Analysis and Compression: Fei Gao Ananya Dutta Jiangjiang LiuДокумент6 страницContent-Based Textual Big Data Analysis and Compression: Fei Gao Ananya Dutta Jiangjiang LiuYHON YERSON SANCHEZ YUCRAОценок пока нет

- Data CompressionДокумент49 страницData CompressionAakash MahajanОценок пока нет

- Chapter 3 Multimedia Data CompressionДокумент23 страницыChapter 3 Multimedia Data CompressionKalya YbsufkadОценок пока нет

- IMAGE COMPRESSION AND DECOMPRESSION SYSTEM USING RUN LENGTH ENCODING ALGORITHM (Chapter 1-3)Документ18 страницIMAGE COMPRESSION AND DECOMPRESSION SYSTEM USING RUN LENGTH ENCODING ALGORITHM (Chapter 1-3)femiОценок пока нет

- Trans JourДокумент2 страницыTrans JourMarvin NgwenyaОценок пока нет

- RDT OrtegoДокумент28 страницRDT OrtegokarthikbgmОценок пока нет

- Data Compression IntroДокумент107 страницData Compression Intromadanram100% (1)

- STL For Large Data ManagementДокумент51 страницаSTL For Large Data Managementmanoj-kumar-jainОценок пока нет

- Data Compresion 1Документ2 страницыData Compresion 1Murithi G BorisОценок пока нет

- Assignment 1Документ14 страницAssignment 1Harshit WaliaОценок пока нет

- Fast Image Compression Using Dic AlgorithmДокумент21 страницаFast Image Compression Using Dic AlgorithmvenkateshmukharjiОценок пока нет

- Compression Ratio and Peak Signal To Noise Ratio in Grayscale Image Compression Using WaveletДокумент5 страницCompression Ratio and Peak Signal To Noise Ratio in Grayscale Image Compression Using WaveletpakepeОценок пока нет

- Mmultimedia 3Документ45 страницMmultimedia 3iyasu ayenekulu100% (2)

- Radu Lixandroiu A EconomicaДокумент36 страницRadu Lixandroiu A EconomicaCosmin RaducanuОценок пока нет

- Digital Image ProcessingДокумент11 страницDigital Image ProcessingSudeshОценок пока нет

- XyzДокумент69 страницXyzKaviyarasuОценок пока нет

- R16 4-1 Dip Unit 4Документ23 страницыR16 4-1 Dip Unit 4kuchipudi durga pravallikaОценок пока нет

- Introduction To Multimedia Compression: National Chiao Tung University Chun-Jen Tsai 2/21/2012Документ22 страницыIntroduction To Multimedia Compression: National Chiao Tung University Chun-Jen Tsai 2/21/2012Puja AstawaОценок пока нет

- Digital Image Processing U8Документ5 страницDigital Image Processing U8Kpsteja TejaОценок пока нет

- Multimedia Systems Chapter 7Документ21 страницаMultimedia Systems Chapter 7sileshi alelignОценок пока нет

- Data CompressionДокумент7 страницData CompressionM.AllaritОценок пока нет

- Design of Test Data Compressor/Decompressor Using Xmatchpro MethodДокумент10 страницDesign of Test Data Compressor/Decompressor Using Xmatchpro MethodInternational Journal of computational Engineering research (IJCER)Оценок пока нет

- Image Compression Using VerilogДокумент5 страницImage Compression Using VerilogvisuОценок пока нет

- Journal of Visual Communication and Image Representation Volume 59 Issue 2019 (Doi 10.1016 - J.jvcir.2018.12.043) Yuan, Shuyun Hu, Jianbo - Research On Image Compression Technology Based On HuffmanДокумент6 страницJournal of Visual Communication and Image Representation Volume 59 Issue 2019 (Doi 10.1016 - J.jvcir.2018.12.043) Yuan, Shuyun Hu, Jianbo - Research On Image Compression Technology Based On HuffmanpusherОценок пока нет

- Real-Time Compression of Logging DataДокумент10 страницReal-Time Compression of Logging DataBOURECHAK TayebОценок пока нет

- Implementation of Lempel-Ziv Algorithm For Lossless Compression Using VHDLДокумент2 страницыImplementation of Lempel-Ziv Algorithm For Lossless Compression Using VHDLBijoy Kumar UpadhyayaОценок пока нет

- CS3308 Information Retrieval QuizДокумент63 страницыCS3308 Information Retrieval QuizMagarsa Bedasa50% (2)

- Graphics and Digital Photo-EditingДокумент10 страницGraphics and Digital Photo-EditingTamerlan MirzayevОценок пока нет

- Digital Image ProcessingДокумент118 страницDigital Image ProcessingSigra JisawОценок пока нет

- (1996) JOSA 13 Space-Bandwidth Product of Optical Signals and SyДокумент4 страницы(1996) JOSA 13 Space-Bandwidth Product of Optical Signals and Sythanhevt92Оценок пока нет

- KSV - DC - IT 702 - April 2020 - APДокумент2 страницыKSV - DC - IT 702 - April 2020 - APmalavОценок пока нет

- Fundamentals of Compression: Prepared By: Haval AkrawiДокумент21 страницаFundamentals of Compression: Prepared By: Haval AkrawiMuhammad Yusif AbdulrahmanОценок пока нет

- Graphics and Multimedia Question Bank With AnswerДокумент21 страницаGraphics and Multimedia Question Bank With AnswerSaira KhanОценок пока нет

- Difference Between Lossy Compression and Lossless CompressionДокумент4 страницыDifference Between Lossy Compression and Lossless CompressionKyla LotaОценок пока нет

- Computer Science Extended EssayДокумент15 страницComputer Science Extended EssayMonkey D LuffyОценок пока нет

- Image File FormatsДокумент5 страницImage File Formatsapi-529020281Оценок пока нет

- Projectreport (2) - 1 - 111Документ56 страницProjectreport (2) - 1 - 111Melinda BerbОценок пока нет

- Image Steganography Project ReportДокумент13 страницImage Steganography Project ReportAshesh Mishra75% (4)

- Data Compression: Multimedia Authoring 1Документ23 страницыData Compression: Multimedia Authoring 1Jai PratapОценок пока нет

- Chapter 8-Image CompressionДокумент61 страницаChapter 8-Image CompressionAshish GauravОценок пока нет

- CH - 3 - Handling Image - Color Palet & Compression TechniquesДокумент47 страницCH - 3 - Handling Image - Color Palet & Compression TechniquesSumaira NoorОценок пока нет

- Matlab Code For Image Compression Using SPIHT AlgorithmДокумент19 страницMatlab Code For Image Compression Using SPIHT AlgorithmArsenalBestlyОценок пока нет

- Algorithms For Data Science: CSOR W4246Документ58 страницAlgorithms For Data Science: CSOR W4246EarthaОценок пока нет

- 32 BitДокумент21 страница32 Bitammayi9845_930467904Оценок пока нет

- Sitsyll PDFДокумент57 страницSitsyll PDFpreranaОценок пока нет

- Scanning Guide - How To Produce Optimal 2D Gel ImagesДокумент24 страницыScanning Guide - How To Produce Optimal 2D Gel ImagesClebing100% (1)

- Presentation MultimediaДокумент15 страницPresentation MultimediaPriyanka MeenaОценок пока нет

- Improving LZW Image CompressionДокумент8 страницImproving LZW Image CompressionRizki AnugrahОценок пока нет

- Module 3Документ23 страницыModule 3Gayatri JoshiОценок пока нет

- Dokument - Pub Cardiff University Examination Paper Academic Year Flipbook PDFДокумент13 страницDokument - Pub Cardiff University Examination Paper Academic Year Flipbook PDFالامتحانات السياحةОценок пока нет

- Dip Unit 5Документ33 страницыDip Unit 5sheikdavoodОценок пока нет

- Design & Implementation of Compression Algorithm For Nucleotide Sequence Using Direct Coding and LZ77Документ5 страницDesign & Implementation of Compression Algorithm For Nucleotide Sequence Using Direct Coding and LZ77erpublicationОценок пока нет

- Meridian MLPДокумент11 страницMeridian MLPmflemi4Оценок пока нет

- D2: The Impact That File Format, Compression Techniques, Image Resolution and Colour Depth Have On File Size and Image QualityДокумент5 страницD2: The Impact That File Format, Compression Techniques, Image Resolution and Colour Depth Have On File Size and Image Qualityapi-300831642Оценок пока нет

- Basics of Image Processing and AnalysisДокумент175 страницBasics of Image Processing and AnalysisVikrant ViksОценок пока нет

- Elective: Data Compression and Encryption V Extc ECCDLO 5014Документ60 страницElective: Data Compression and Encryption V Extc ECCDLO 5014anitasjadhavОценок пока нет