Академический Документы

Профессиональный Документы

Культура Документы

Math 373 Lecture Notes 71

Загружено:

hikolИсходное описание:

Оригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Math 373 Lecture Notes 71

Загружено:

hikolАвторское право:

Доступные форматы

MATH 373 LECTURE NOTES

71

13.4. Predictor-corrector methods. We consider the Adams methods, obtained from the formula

xn+1 xn+1

y(xn+1 y(xn ) =

xn

y (x) dx =

xn

f (x, y(x)) dx

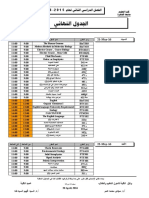

by replacing f by an interpolating polynomial. If we use the points xn , xn1 , . . . , xnp , we get the Adams-Bashforth methods. If we use the points xn+1 , xn , . . . , xnp , we get the AdamsMoulton formulas. In a p + 1-step method, implicit methods have one additional parameter (b1 ) to specify. Hence, one can show that the highest attainable order of a zero-stable p + 1-step method is less in the case of an explicit method than for an implicit method. If we compare methods of the same order, then explicit methods require an additional starting value, but we might still prefer an explicit method with a higher stepnumber to an implicit method of the same order, (but lower stepnumber). To see the advantages of implicit methods, consider the following table comparing explicit Adams-Bashforth methods to implicit Adams-Moulton methods. Table 5. Adams-Bashforth explicit methods stepnumber k 1 2 3 4 order r 1 2 3 4 error constant Cr+1 1/2 5/12 3/8 251/720 abs. stab. interval (, 0) -2 -1 -6/11 -3/10 Table 6. Adams-Moulton implicit methods stepnumber k 1 2 3 4 order r 2 3 4 5 error constant Cr+1 -1/12 -1/24 -19/720 -3/160 abs. stab. interval (, 0) -6 -3 -90/49 If we want a 4th order Adams method, then the choices are a 4-step explicity method or a 3-step implicit method. Note that the implicit method has an error constant smaller by a factor of 1/13 and an absolute stability interval 10 times that of an explicit method. Hence for stabilty and accuracy, we would like to use the implicit method. To do so, we must solve at each step the nonlinear equation:

p p

yn+1 =

i=0

ai yni +

i=1

bi f (xni , yni )

(note yn+1 also appears on the right hand side of the equation). A simple iteration procedure for this is:

p j+1 yn+1 = i=0 j [ai yni + bi f (xni , yni )] + hb1 fn+1 ,

72

MATH 373 LECTURE NOTES

j j j+1 where fn+1 = f (xn+1 , yn+1 ). Hence, the error between yn+1 and the approximation yn+1 satises: j+1 j yn+1 yn+1 = hb1 [fn+1 fn+1 ].

Assuming f satises a Lipschitz condition with Lipschitz constant L, we get

j+1 j |yn+1 yn+1 | h|b1 |L|yn+1 yn+1 |.

It easily follows that

j 0 |yn+1 yn+1 | [h|b1 |L]j |yn+1 yn+1 |.

Hence, if h|b1 |L < 1, the iteration converges. Since each iteration involves an evaluation of the function f , we would like a good starting guess to minimize the number of iterations needed to meet a given error tolerance. We do 0 this by using an explicit method (called a predictor) to produce the initial guess yn+1 . The iteration then consists of the following steps: PECECE ..., where P denotes the application j of the predictor method, E denotes the evaluation of f at this value of yn+1 , and C denotes the application of the corrector method (the implicit method), using the evaluation of f just produced on the right hand side. There are two basic possibilities for stopping this iteration. j j+1 The rst is to iterate to convergence. In practice this would mean until |yn+1 yn+1 | < , where is a preassigned tolerance. The advantage of this is that the nal result is independent of the starting guess and the local trunction error and stability properties are those of the corrector alone. The disadvantage is that we dont know how many function evaluations this may take and hence it may be time consuming. The second possibility is to specify in advance the number of times the corrector is applied. In this case, the number of function evaluations is known, but the local truncation error and stability properties now depend on the combination of the predictor and corrector. These methods are denoted by P(EC)m or P(EC)m E, depending on whether a nal evaluation is done. The common practice is to use m = 1 or m = 2. The Adams-Bashforth, Adams-Moulton p + 1-step methods provide good combinations for predictor-corrector pairs. In that case, the order of the corrector will be one higher than the order of the predictor. A variable order, variable step-size code, written by Shampine and Gordon, uses these methods and the iteration PECE. Changes in step-size and order are based on estimating the local error by comparing the dierence between a kth and k + 1 order corrector, both using a kth order predictor. In using multistep methods, one needs a way of generating the additional starting values and also additional values when the step-size is changed. Starting values can be obtained by using Runge-Kutta or Taylor series methods. When the step-size is changed, additional values can be obtained by Runge-Kutta methods or by interpolation. In the ShampineGordon code, the codes are started with a 1-step Adams method, then a 2-step method, and so on until all the starting values are generated. The step-size is changed using the divided dierence form of the interpolating polynomial, which allows the method to be generalized to unequally spaced points.

MATH 373 LECTURE NOTES

73

13.5. Generalization to rst order systems. We consider the rst order system Y = F (x, Y ), Y (a) = ,

where Y = (y 1 , . . . , y m )T and F (x, Y ) = (f 1 (x, y 1 , . . . , y m ), . . . , f m (x, y 1 , . . . , y m ))T . We showed previously that Runge-Kutta methods are applicable to such systems. Multistep methods are also applicable, although we need to extend some of the theory. The stability theory previously developed was for the model problem y = y, where was considered a local approximation of f /y. For systems, the analogous model problem is: Y = AY , where A is a matrix with constant coecients, considered a local approximation to the Jacobian matrix, i.e., Aij (f i /y j ). Applying the multistep method, we obtain

p

[I hb1 A]Yn+1 =

i=0

[ai I + hbi A]Yni .

If A is diagonalizable, i.e., there exists a nonsingular matrix H and a diagonal matrix such that H 1 AH = , (where the eigenvalues of A lie on the diagonal of ), then multiplying the above on the left by H 1 , we get

p

[H

hb1 H

A]Yn+1 =

i=0

[ai H 1 + hbi H 1 A]Yni .

Setting HZn = Yn , we get

p

[I hb1 ]Zn+1 =

i=0

[ai I + hbi ]Zni .

Since I and are diagonal matrices, the system decouples and we get for each component j,

p

[1

j hb1 j ]zn+1

=

i=0

j [ai + hbi j ]zni .

The denition of absolute stability depended on the interval of h for which all solutions of the multistep method applied to the model problem y = y remain bounded as n . Since H is constant, the components of Yn will remain bounded if the components of Zn do. Now the dierence equation obtained above is the same one obtained previously. Hence, the solutions remain bounded if all roots r of the characteristic polynomial (z) h(z) satisfy |r| 1 (and roots of magnitude one are simple). To be absolutely stable, we require these conditions to hold when is any eigenvalue of the matrix A. Although A is real, its eigenvalues might be complex. Hence, for rst order systems, we modify our denition to read: Denition: A linear multistep method is absolutely stable in the region R of the complex plane if for all h R, all roots of the characteristic polynomial (z) h(z) associated with the method satisfy |r| 1 (and roots of magnitude one are simple). A similar modication is made for the denition of relative stability.

74

MATH 373 LECTURE NOTES

Example: Eulers method: Yn+1 = Yn + hFn . We apply the method to y = y to get the dierence equation yn+1 = (1 + h)yn . The characteristic polynomial has one root r = 1 + h, so we want |1 + h| 1. Now = 1 + i2 may be complex, so |1 + h| = (1 + h1 )2 + (h2 )2 1, i.e., (1 + h1 )2 + (h2 )2 1. Thus R is a circle in the complex plane (with real axis h1 and imaginary axis h2 ) centered at (1, 0) and radius 1. The interval of absolute stability (1, 0) is the intersection of R with the h1 axis. Example: Trapezoidal rule: Yn+1 = Yn + (h/2)(Fn+1 + Fn ). There is only one root of the characteristic polynomial given by r = (1 + h/2)/(1 h/2). Hence, we require |1 + h/2| |1 h/2|, i.e., (1 + h1 /2)2 + (h2 /2)2 (1 h1 /2)2 + (h2 /2)2 . Clearly, this will hold for h1 0, so the region of absolute stability is the entire left half-plane (the largest possible region of absolute stability).

Вам также может понравиться

- A-level Maths Revision: Cheeky Revision ShortcutsОт EverandA-level Maths Revision: Cheeky Revision ShortcutsРейтинг: 3.5 из 5 звезд3.5/5 (8)

- Polynomial Evaluation Over Finite Fields: New Algorithms and Complexity BoundsДокумент12 страницPolynomial Evaluation Over Finite Fields: New Algorithms and Complexity BoundsJaime SalguerroОценок пока нет

- Lim 05429427Документ10 страницLim 05429427thisisme987681649816Оценок пока нет

- Forward and Backwadrd Euler MethodsДокумент5 страницForward and Backwadrd Euler Methodsnellai kumarОценок пока нет

- FORTRAN 77 Lesson 7: 1 Numerical IntegrationДокумент7 страницFORTRAN 77 Lesson 7: 1 Numerical IntegrationManjita BudhirajaОценок пока нет

- Euler and SimpsonДокумент14 страницEuler and SimpsonEdward SmithОценок пока нет

- 4 Stability Analysis of Finite-Difference Methods For OdesДокумент12 страниц4 Stability Analysis of Finite-Difference Methods For OdesFadly NurullahОценок пока нет

- Roots of EquationsДокумент8 страницRoots of EquationsrawadОценок пока нет

- Solution of Nonlinear Algebraic Equations: Method 1. The Bisection MethodДокумент5 страницSolution of Nonlinear Algebraic Equations: Method 1. The Bisection MethodVerevol DeathrowОценок пока нет

- Reu Project: 1 PrefaceДокумент10 страницReu Project: 1 PrefaceEpic WinОценок пока нет

- Review of Numerical MethodsДокумент3 страницыReview of Numerical Methodssrv srvОценок пока нет

- Very-High-Precision Normalized Eigenfunctions For A Class of SCHR Odinger Type EquationsДокумент6 страницVery-High-Precision Normalized Eigenfunctions For A Class of SCHR Odinger Type EquationshiryanizamОценок пока нет

- 1 Week 02: 2 and 4 September 2008Документ11 страниц1 Week 02: 2 and 4 September 2008Roney WuОценок пока нет

- Numerical Methods For Solving Nonlinear Equations1Документ7 страницNumerical Methods For Solving Nonlinear Equations1Reza MohammadianОценок пока нет

- MTH603 4th Quiz 2023Документ5 страницMTH603 4th Quiz 2023CyberSite StatusОценок пока нет

- Rayhan Deandra K - 5008211165 - Practicum Report - Module (2) - KNTK (I)Документ11 страницRayhan Deandra K - 5008211165 - Practicum Report - Module (2) - KNTK (I)dhani7Оценок пока нет

- NM Unit5Документ6 страницNM Unit5Rohit GadekarОценок пока нет

- Arena Stanfordlecturenotes11Документ9 страницArena Stanfordlecturenotes11Victoria MooreОценок пока нет

- Dif Cal - Module 2 - Limits and ContinuityДокумент17 страницDif Cal - Module 2 - Limits and ContinuityRainОценок пока нет

- CUHK MATH 3060 Lecture Notes by KS ChouДокумент98 страницCUHK MATH 3060 Lecture Notes by KS ChouAlice YuenОценок пока нет

- EM AlgoДокумент8 страницEM AlgocoolaclОценок пока нет

- First Order Exist UniqueДокумент14 страницFirst Order Exist Uniquedave lunaОценок пока нет

- The Application of Homotopy Analysis Method To Nonlinear Equations Arising in Heat TransferДокумент5 страницThe Application of Homotopy Analysis Method To Nonlinear Equations Arising in Heat Transfergorot1Оценок пока нет

- A New Iterative Refinement of The Solution of Ill-Conditioned Linear System of EquationsДокумент10 страницA New Iterative Refinement of The Solution of Ill-Conditioned Linear System of Equationsscribd8885708Оценок пока нет

- Numerical Solutions of Nonlinear Ordinary DifferenДокумент8 страницNumerical Solutions of Nonlinear Ordinary DifferenDaniel LópezОценок пока нет

- Chapter 8Документ3 страницыChapter 8Dhynelle MuycoОценок пока нет

- Nonlinear Systems: Rooting-Finding ProblemДокумент28 страницNonlinear Systems: Rooting-Finding ProblemLam WongОценок пока нет

- Chap2 603Документ29 страницChap2 603naefmubarak100% (1)

- Unit Multistep and Predictor Corrector Methods For Solving Initial Value ProblemsДокумент27 страницUnit Multistep and Predictor Corrector Methods For Solving Initial Value ProblemsJAGANNATH PRASADОценок пока нет

- Statistics 580 Nonlinear Least Squares: I I I I I I I 2 N I I 2Документ14 страницStatistics 580 Nonlinear Least Squares: I I I I I I I 2 N I I 2zwobeliskОценок пока нет

- Roots of Equations: 1.0.1 Newton's MethodДокумент20 страницRoots of Equations: 1.0.1 Newton's MethodMahmoud El-MahdyОценок пока нет

- Scientific Computation (COMS 3210) Bigass Study Guide: Spring 2012Документ30 страницScientific Computation (COMS 3210) Bigass Study Guide: Spring 2012David CohenОценок пока нет

- Asmt 4Документ6 страницAsmt 4Garth BaughmanОценок пока нет

- Lect Notes 9Документ43 страницыLect Notes 9Safis HajjouzОценок пока нет

- Introduction To Stochastic Approximation AlgorithmsДокумент14 страницIntroduction To Stochastic Approximation AlgorithmsMassimo TormenОценок пока нет

- Lect 04Документ6 страницLect 04AparaОценок пока нет

- Linear Multistep MethodДокумент31 страницаLinear Multistep MethodShankar Prakash GОценок пока нет

- Evaluation of Definite IntegralДокумент4 страницыEvaluation of Definite Integralapi-127466285Оценок пока нет

- Ch3 Nume Integration 2022Документ43 страницыCh3 Nume Integration 2022SK. BayzeedОценок пока нет

- An Automated Method For Sensitivity Analysis Using Complex VariablesДокумент12 страницAn Automated Method For Sensitivity Analysis Using Complex VariablesJ Luis MlsОценок пока нет

- Solving Equations (Finding Zeros)Документ20 страницSolving Equations (Finding Zeros)nguyendaibkaОценок пока нет

- Numerical Methods DynamicsДокумент16 страницNumerical Methods DynamicsZaeem KhanОценок пока нет

- Hoeffding BoundsДокумент9 страницHoeffding Boundsphanminh91Оценок пока нет

- Numerical SДокумент58 страницNumerical SSrinyanavel ஸ்ரீஞானவேல்Оценок пока нет

- Math 130 Numerical Solution To Ce ProblemsДокумент10 страницMath 130 Numerical Solution To Ce ProblemsMia ManguiobОценок пока нет

- Adjoint Tutorial PDFДокумент6 страницAdjoint Tutorial PDFPeter AngeloОценок пока нет

- Lion 5 PaperДокумент15 страницLion 5 Papermilp2Оценок пока нет

- Numerical Method 1 PDFДокумент2 страницыNumerical Method 1 PDFGagan DeepОценок пока нет

- Num 1Документ15 страницNum 1Bhumika KavaiyaОценок пока нет

- 1 The Probably Approximately Correct (PAC) Model: COS 511: Theoretical Machine LearningДокумент6 страниц1 The Probably Approximately Correct (PAC) Model: COS 511: Theoretical Machine LearningBrijesh KunduОценок пока нет

- Roots of Equation PDFДокумент10 страницRoots of Equation PDFMia ManguiobОценок пока нет

- Factor IzationДокумент13 страницFactor Izationoitc85Оценок пока нет

- Variational Problems in Machine Learning and Their Solution With Finite ElementsДокумент11 страницVariational Problems in Machine Learning and Their Solution With Finite ElementsJohn David ReaverОценок пока нет

- Solving Nonlinear Algebraic Systems Using Artificial Neural NetworksДокумент13 страницSolving Nonlinear Algebraic Systems Using Artificial Neural NetworksAthanasios MargarisОценок пока нет

- The Automatic Control of Numerical IntegrationДокумент20 страницThe Automatic Control of Numerical IntegrationSargis von DonnerОценок пока нет

- Linear System: 2011 Intro. To Computation Mathematics LAB SessionДокумент7 страницLinear System: 2011 Intro. To Computation Mathematics LAB Session陳仁豪Оценок пока нет

- Module 1 (1,2,3)Документ45 страницModule 1 (1,2,3)vikram sethupathiОценок пока нет

- Partial Differential EquationsДокумент18 страницPartial Differential EquationsJuanParedesCasasОценок пока нет

- Introductory Differential Equations: with Boundary Value Problems, Student Solutions Manual (e-only)От EverandIntroductory Differential Equations: with Boundary Value Problems, Student Solutions Manual (e-only)Оценок пока нет

- Thesis PDFДокумент85 страницThesis PDFhikolОценок пока нет

- 10 15004 PDFДокумент4 страницы10 15004 PDFhikolОценок пока нет

- Adders SubstractorsДокумент4 страницыAdders SubstractorsAkshat AtrayОценок пока нет

- Assignment3 Solutions 265Документ6 страницAssignment3 Solutions 265pratik_93Оценок пока нет

- بHj او Cwvf ا نBlƒ Cwr ا Xchو E D يEf .د.أ Cwr ا EcДокумент12 страницبHj او Cwvf ا نBlƒ Cwr ا Xchو E D يEf .د.أ Cwr ا EchikolОценок пока нет

- Assignment3 Solutions 265Документ6 страницAssignment3 Solutions 265pratik_93Оценок пока нет

- Pra 83 063829 PDFДокумент14 страницPra 83 063829 PDFhikolОценок пока нет

- Journal of Lightwave Technology Volume 20 Issue 7 2002 (Doi 10.1109 - JLT.2002.800376) Vannucci, A. Serena, P. Bononi, A. - The RP Method - A New Tool For The Iterative Solution PDFДокумент11 страницJournal of Lightwave Technology Volume 20 Issue 7 2002 (Doi 10.1109 - JLT.2002.800376) Vannucci, A. Serena, P. Bononi, A. - The RP Method - A New Tool For The Iterative Solution PDFhikolОценок пока нет

- MartinFlechl Susy07 PDFДокумент4 страницыMartinFlechl Susy07 PDFhikolОценок пока нет

- PDF PDFДокумент7 страницPDF PDFhikolОценок пока нет

- MLE4208 Lecture 7A PDFДокумент31 страницаMLE4208 Lecture 7A PDFhikolОценок пока нет

- 6 732-pt2Документ198 страниц6 732-pt2Lalatendu BiswalОценок пока нет

- Monocrystalline Solar Cells: ReferencesДокумент32 страницыMonocrystalline Solar Cells: ReferencesalexanderritschelОценок пока нет

- MLE4208 Lecture 2 PDFДокумент36 страницMLE4208 Lecture 2 PDFhikolОценок пока нет

- 0712 0287v1 PDFДокумент27 страниц0712 0287v1 PDFhikolОценок пока нет

- 0906 1923v3 PDFДокумент24 страницы0906 1923v3 PDFhikolОценок пока нет

- Paper PDFДокумент21 страницаPaper PDFhikolОценок пока нет

- 0710 2472v1 PDFДокумент4 страницы0710 2472v1 PDFhikolОценок пока нет

- MLE4208 Lecture 1 PDFДокумент31 страницаMLE4208 Lecture 1 PDFhikolОценок пока нет

- 0707 3952v4 PDFДокумент69 страниц0707 3952v4 PDFhikolОценок пока нет

- Ch3 Section2 Light and MatterДокумент23 страницыCh3 Section2 Light and MatterhikolОценок пока нет

- 1 s2.0 S0010465504002115 Main PDFДокумент11 страниц1 s2.0 S0010465504002115 Main PDFhikolОценок пока нет

- This Version of Total CAD Converter Is UnregisteredДокумент1 страницаThis Version of Total CAD Converter Is UnregisteredhikolОценок пока нет

- Notch Filter IДокумент5 страницNotch Filter IhikolОценок пока нет

- Zuckerberg Statement To CongressДокумент7 страницZuckerberg Statement To CongressJordan Crook100% (1)

- Lorentz Transformation For Frames in Standard ConfigurationДокумент19 страницLorentz Transformation For Frames in Standard ConfigurationhikolОценок пока нет

- Function CДокумент1 страницаFunction ChikolОценок пока нет

- Particle Physics Phenomenology 8. QCD Jets and Jet AlgorithmsДокумент6 страницParticle Physics Phenomenology 8. QCD Jets and Jet AlgorithmshikolОценок пока нет

- Function: 'Xdata' 'Ydata' 'Zdata'Документ2 страницыFunction: 'Xdata' 'Ydata' 'Zdata'hikolОценок пока нет

- MenuCommands EДокумент1 страницаMenuCommands EhikolОценок пока нет

- Image Representation: External Characteristics: Its Boundary Internal Characteristics: The Pixels Comprising TheДокумент13 страницImage Representation: External Characteristics: Its Boundary Internal Characteristics: The Pixels Comprising TheVijay AnandhОценок пока нет

- Math 9Документ4 страницыMath 9RevanVilladozОценок пока нет

- Tutorial 1.2Документ5 страницTutorial 1.2nurin afiqahОценок пока нет

- Algebra, Relations, Functions and Graphs IIДокумент27 страницAlgebra, Relations, Functions and Graphs IIAnthony Benson100% (2)

- Math Project 1Документ3 страницыMath Project 1Tyler WilliamsОценок пока нет

- Lotus Academy: Gate Tutorial: General AptitudeДокумент32 страницыLotus Academy: Gate Tutorial: General AptitudeTanishq AwasthiОценок пока нет

- MSC SyllabusДокумент62 страницыMSC Syllabusmahfooz zin noorineОценок пока нет

- CON4308 Advanced Civil Engineering Mathematics Pastpaper 2016ENGTY107Документ7 страницCON4308 Advanced Civil Engineering Mathematics Pastpaper 2016ENGTY107Trevor LingОценок пока нет

- Study GuideДокумент161 страницаStudy GuideViet Quoc Hoang100% (1)

- Complex Numbers Housekeeping, Elementary Geometry, Arguments, Polar RepresentationДокумент78 страницComplex Numbers Housekeeping, Elementary Geometry, Arguments, Polar RepresentationSunday JohnОценок пока нет

- g9 3q Simplifying RadicalsДокумент10 страницg9 3q Simplifying RadicalsDorothy Julia Dela CruzОценок пока нет

- Harmonic Sequences AnswersДокумент3 страницыHarmonic Sequences AnswersJandreive Mendoza100% (1)

- Chapter - 8: Paths, Path Products and Regular ExpressionsДокумент61 страницаChapter - 8: Paths, Path Products and Regular ExpressionsGeeta DesaiОценок пока нет

- DPP 3Документ5 страницDPP 3Rupesh Kumar SinghОценок пока нет

- Basic Sens Analysis Review PDFДокумент26 страницBasic Sens Analysis Review PDFPratik D UpadhyayОценок пока нет

- Vol1 DifferentialCalculus PDFДокумент279 страницVol1 DifferentialCalculus PDFStalin Kumar SahooОценок пока нет

- Idst 2016 SA 05 HashingДокумент68 страницIdst 2016 SA 05 HashingA Sai BhargavОценок пока нет

- RD Sharma Class 11 SolutionsДокумент3 страницыRD Sharma Class 11 SolutionsRD Sharma Solutions33% (30)

- Maths - Syllabus - Grades 10 PDFДокумент28 страницMaths - Syllabus - Grades 10 PDFAmanuel AkliluОценок пока нет

- Lesson 3.5 Derivatives of Trigonometric FunctionsДокумент13 страницLesson 3.5 Derivatives of Trigonometric FunctionsMario Caredo ManjarrezОценок пока нет

- Differential Calculus (Mcjiats 2017)Документ48 страницDifferential Calculus (Mcjiats 2017)Arn Stefhan JimenezОценок пока нет

- Solution To Extra Problem Set 7: Alternative Solution: Since Is Symmetric About The Plane 0, We HaveДокумент11 страницSolution To Extra Problem Set 7: Alternative Solution: Since Is Symmetric About The Plane 0, We Have物理系小薯Оценок пока нет

- UACE MATHEMATICS PAPER 1 2014 Marking GuideДокумент14 страницUACE MATHEMATICS PAPER 1 2014 Marking GuideSamuel HarvОценок пока нет

- History of Modern MathematicsДокумент75 страницHistory of Modern MathematicsgugizzleОценок пока нет

- c8 Linear EquationДокумент5 страницc8 Linear Equationshweta sainiОценок пока нет

- Elementary Classical Analysis 2nd Edition (Jerrold E. Marsden, Michael J. Hoffman)Документ733 страницыElementary Classical Analysis 2nd Edition (Jerrold E. Marsden, Michael J. Hoffman)傳宇100% (1)

- Year 10 - Equation & Inequalities Test With ANSДокумент12 страницYear 10 - Equation & Inequalities Test With ANSJoseph ChengОценок пока нет

- Shape of Space VandyДокумент54 страницыShape of Space VandyIts My PlaceОценок пока нет

- Tpde QP Model 2018 BДокумент3 страницыTpde QP Model 2018 BInfi Coaching CenterОценок пока нет