Spin Locks

Uploaded by: gettopoonam

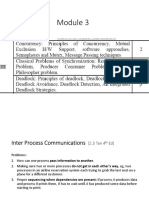

Spin locks are a low-level synchronization mechanism suitable primarily for use on shared-memory multiprocessors.

When the calling thread requests a spin lock that is already held by another thread, the calling thread spins in a loop, testing whether the lock has become available. Once the lock is obtained, it should be held only for a short time, because the spinning wastes processor cycles. Callers should unlock spin locks before calling sleep operations so that other threads can obtain the lock.

Spin locks can be implemented using mutexes and condition variables, but the pthread_spin_* functions are a standardized way to practice spin locking. The pthread_spin_* functions require much lower overhead for locks of short duration.

With any lock, there is a trade-off between the processor resources consumed while setting up to block the thread and the processor resources consumed by the thread while it is blocked. Spin locks require few resources to set up the blocking of a thread; they then run a simple loop, repeating the atomic locking operation until the lock is available. The thread continues to consume processor resources while it waits.

Compared to spin locks, mutexes consume more processor resources to block the thread. When a mutex lock is not available, the thread changes its scheduling state and adds itself to the queue of waiting threads. When the lock becomes available, these steps must be reversed before the thread obtains the lock. While the thread is blocked, it consumes no processor resources.

Spin locks and mutexes are therefore useful for different purposes. Spin locks might have lower overall overhead for very short-term blocking, and mutexes might have lower overall overhead when a thread will be blocked for longer periods of time.

What about using a lock variable, which each process must test before it enters its critical section? If another process is already in its critical section, the lock is set to 1, and the process currently using the processor is not permitted to enter its critical section. If the value of the lock variable is 0, the process sets the lock to 1 and enters its critical section.

The problem with this potential solution is that reading the value of the lock variable, comparing that value to 0, and setting the lock are three separate operations rather than one atomic action. It is therefore possible that one process tests the lock variable and sees that it is open, but before it can set the lock, another process is scheduled, runs, sets the lock, and enters its critical section. When the original process resumes, it too enters its critical section, violating the policy of mutual exclusion.

The only problem with the lock variable solution is that the action of testing the variable and the action of setting the variable are executed as separate instructions. If these operations could be combined into one indivisible step, this could be a workable solution. These steps can be combined, with a little help from hardware, into what is known as a TSL (Test and Set Lock) instruction. A call to the TSL instruction copies the value of the lock variable into a register and sets the variable to a nonzero (locked) value, all in one step. While the value of the lock variable is being tested, no other process can enter its critical section, because the lock is set. Let us look at an example of the TSL in use with two operations, enter_region and leave_region:
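The original listing is not included in this copy. As a stand-in, here is a minimal sketch in C that assumes the C11 atomic_flag type can play the role of the TSL instruction: atomic_flag_test_and_set_explicit returns the previous value and sets the flag in one indivisible step. The names shared_counter, worker, and run_tsl_demo are hypothetical and exist only to exercise the two operations.

```c
#include <pthread.h>
#include <stdatomic.h>

/* The lock variable: clear means open, set means locked. */
static atomic_flag lock = ATOMIC_FLAG_INIT;
static long shared_counter = 0;   /* hypothetical data protected by the lock */

/* Spin until test-and-set reports that the flag was previously clear. */
static void enter_region(void) {
    while (atomic_flag_test_and_set_explicit(&lock, memory_order_acquire)) {
        /* The lock was already set by someone else: keep spinning. */
    }
}

/* Clear the flag so that the next caller's test-and-set succeeds. */
static void leave_region(void) {
    atomic_flag_clear_explicit(&lock, memory_order_release);
}

static void *worker(void *arg) {
    (void)arg;
    for (int i = 0; i < 100000; i++) {
        enter_region();
        shared_counter++;          /* critical section */
        leave_region();
    }
    return NULL;
}

/* Hypothetical demo helper: runs four workers and returns the final count. */
long run_tsl_demo(void) {
    pthread_t threads[4];
    shared_counter = 0;
    for (int i = 0; i < 4; i++)
        pthread_create(&threads[i], NULL, worker, NULL);
    for (int i = 0; i < 4; i++)
        pthread_join(threads[i], NULL);
    return shared_counter;
}
```

Calling run_tsl_demo() from main should return 400000 on every run, because each increment happens inside the region guarded by enter_region and leave_region. Replacing the test-and-set with a plain read followed by a plain write reintroduces the race described above.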

Semaphores

A semaphore is a hardware or software flag. In multitasking systems, a semaphore is a variable whose value indicates the status of a common resource. It is used to lock the resource while it is in use. A process needing the resource checks the semaphore to determine the resource's status and then decides how to proceed.

In programming, especially on UNIX systems, semaphores are a technique for coordinating or synchronizing activities in which multiple processes compete for the same operating system resources. A semaphore is a value in a designated place in operating system (or kernel) storage that each process can check and then change. Depending on the value found, the process either uses the resource or finds that it is already in use and must wait for some period before trying again. Semaphores can be binary (0 or 1) or can have additional values. Typically, a process using semaphores checks the value and then, if it is using the resource, changes the value to reflect this so that subsequent semaphore users know to wait.

Semaphores are commonly used for two purposes: to share a common memory space and to share access to files. They are one of the techniques for interprocess communication (IPC). UNIX systems provide a set of C interfaces, or functions, for managing semaphores.
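The text does not say which interfaces it has in mind. As one possibility, the sketch below assumes the POSIX unnamed-semaphore functions from <semaphore.h> (sem_init, sem_wait, sem_post, sem_destroy) and uses a binary semaphore to guard a shared value. The names resource_sem, balance, depositor, and run_semaphore_demo are hypothetical.

```c
#include <pthread.h>
#include <semaphore.h>

static sem_t resource_sem;    /* binary semaphore: 1 = free, 0 = in use */
static long balance = 0;      /* hypothetical shared resource */

static void *depositor(void *arg) {
    (void)arg;
    for (int i = 0; i < 50000; i++) {
        sem_wait(&resource_sem);   /* wait until the value is positive, then decrement it */
        balance++;                 /* use the resource */
        sem_post(&resource_sem);   /* increment the value so one waiter may proceed */
    }
    return NULL;
}

/* Hypothetical demo helper: two threads share the resource; returns the
 * final balance, or -1 if the semaphore could not be initialized. */
long run_semaphore_demo(void) {
    pthread_t threads[2];
    balance = 0;
    /* Second argument 0: shared between threads of this process only.
     * Third argument 1: initial value, so the resource starts out free. */
    if (sem_init(&resource_sem, 0, 1) != 0)
        return -1;
    for (int i = 0; i < 2; i++)
        pthread_create(&threads[i], NULL, depositor, NULL);
    for (int i = 0; i < 2; i++)
        pthread_join(threads[i], NULL);
    sem_destroy(&resource_sem);
    return balance;
}
```

With an initial value greater than 1, the same calls would admit that many users at once, which is the non-binary case mentioned above. Unnamed semaphores are not available on every platform, so this sketch assumes a system such as Linux that supports them.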

Semaphores are used to protect critical regions of code or data structures. Remember that each access to a critical piece of data, such as a VFS inode describing a directory, is made by kernel code running on behalf of a process. It would be very dangerous to allow one process to alter a critical data structure that is being used by another process. One way to prevent this would be to put a buzz lock (that is, a spin lock) around the critical piece of data being accessed, but this is a simplistic approach that would degrade system performance. Instead, Linux uses semaphores to allow just one process at a time to access critical regions of code and data; all other processes wishing to access the resource are made to wait until it becomes free. The waiting processes are suspended, while other processes in the system continue to run as normal.

Suppose the initial count for a semaphore is 1. The first process to come along sees that the count is positive and decrements it by 1, making it 0. The process now "owns" the critical piece of code or resource protected by the semaphore. When the process leaves the critical region, it increments the semaphore's count. The best case is the one where no other processes contend for ownership of the critical region, and Linux has implemented semaphores to work efficiently for this, the most common case.

If another process wishes to enter the critical region while it is owned by a process, it too decrements the count. As the count is now negative (-1), the process cannot enter the critical region and must wait until the owning process exits it. Linux makes the waiting process sleep until the owning process wakes it on exiting the critical region. The waiting process adds itself to the semaphore's wait queue and sits in a loop, checking the value of the waking field and calling the scheduler until waking is nonzero.

On exit, the owner of the critical region increments the semaphore's count. In the best case, the count returns to its initial value of 1 and no further work is necessary. If the result is less than or equal to zero, there are processes sleeping, waiting for this resource; the owning process then increments the waking counter and wakes up the process sleeping on the semaphore's wait queue. When the waiting process wakes up, the waking counter is 1, so it knows that it may now enter the critical region. It decrements the waking counter, returning it to zero, and continues. All accesses to the waking field of the semaphore are protected by a buzz lock using the semaphore's lock.
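The count and waking bookkeeping described above can be imitated in user space. The sketch below is an analogy only, not the kernel's implementation: it assumes a pthread mutex in place of the semaphore's internal lock and a condition variable in place of the wait queue and scheduler calls. All names (sketch_sem, sketch_down, sketch_up, contender, run_count_demo) are hypothetical.

```c
#include <pthread.h>

typedef struct {
    pthread_mutex_t lock;        /* stands in for the semaphore's own lock */
    pthread_cond_t  wait_queue;  /* stands in for the kernel wait queue */
    int count;    /* 1 = free, 0 = owned, negative = owned with sleepers */
    int waking;   /* wake-ups granted by owners but not yet consumed */
} sketch_sem;

static sketch_sem region_sem = {
    PTHREAD_MUTEX_INITIALIZER, PTHREAD_COND_INITIALIZER, 1, 0
};
static long protected_total = 0;   /* data guarded by region_sem */

/* Enter the critical region: decrement count and sleep if it went negative. */
static void sketch_down(sketch_sem *s) {
    pthread_mutex_lock(&s->lock);
    s->count--;
    if (s->count < 0) {
        while (s->waking == 0)                       /* loop until an owner grants a wake-up */
            pthread_cond_wait(&s->wait_queue, &s->lock);
        s->waking--;                                 /* consume the wake-up and continue */
    }
    pthread_mutex_unlock(&s->lock);
}

/* Leave the critical region: increment count and wake a sleeper if any. */
static void sketch_up(sketch_sem *s) {
    pthread_mutex_lock(&s->lock);
    s->count++;
    if (s->count <= 0) {                             /* someone is still waiting */
        s->waking++;
        pthread_cond_signal(&s->wait_queue);
    }
    pthread_mutex_unlock(&s->lock);
}

static void *contender(void *arg) {
    (void)arg;
    for (int i = 0; i < 20000; i++) {
        sketch_down(&region_sem);
        protected_total++;                           /* critical region */
        sketch_up(&region_sem);
    }
    return NULL;
}

/* Hypothetical demo helper: four contenders; returns the guarded total. */
long run_count_demo(void) {
    pthread_t threads[4];
    protected_total = 0;
    for (int i = 0; i < 4; i++)
        pthread_create(&threads[i], NULL, contender, NULL);
    for (int i = 0; i < 4; i++)
        pthread_join(threads[i], NULL);
    return protected_total;
}
```

After run_count_demo() returns, region_sem.count is back at its initial value of 1 and region_sem.waking is 0, matching the description: every wake-up granted on exit is consumed by exactly one waiter.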
