Академический Документы

Профессиональный Документы

Культура Документы

SVD

Загружено:

m_here03Исходное описание:

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

SVD

Загружено:

m_here03Авторское право:

Доступные форматы

Dimensionality Reduction One common way to represent datasets is as vectors in a feature space.

For example, if we let each dimension be a movie, then we can represent users as points. Though we cannot visualize this in more than three dimensions, the idea works for any number of dimensions.

One natural question to ask in this setting is whether or not it is possible to reduce the number of dimensions we need to represent the data. For example, if every user who likes The Matrix also likes Star Wars, then we can group them together to form an agglomerative movie or feature. We can then compare two users by looking at their ratings for different features rather than for individual movies. There are several reasons we might want to do this. The first is scalability. If we have a dataset with 17,000 movies, than each user is a vector of 17,000 coordinates, and this makes storing and comparing users relatively slow and memory-intensive. It turns out, however, that using a smaller number of dimensions can actually improve prediction accuracy. For example, suppose we have two users who both like science fiction movies. If one user has rated Star Wars highly and the other has rated Empire Strikes Back highly, then it makes sense to say the users are similar. If we compare the users based on individual movies, however, only those movies that both users have rated will affect their similarity. This is an extreme example, but one can certainly imagine that there are various classes of movies that should be compared. The Singular Value Decomposition In an important paper, Deerwester et al. examined the dimensionality reduction problem in the context of information retrieval [2]. They were trying to compare documents using the words they contained, and they proposed the idea of creating features representing multiple words and then comparing those. To accomplish this, they made use of a mathematical technique known as Singular Value Decomposition. More recently, Sarwar et al. made use of this technique for recommender systems [3]. The Singular Value Decomposition (SVD) is a well known matrix factorization technique that factors anm by n matrix X into three matrices as follows:

The matrix S is a diagonal matrix containing the singular values of the matrix X. There are exactly rsingular values, where r is the rank of X. The rank of a matrix is the number of linearly independent rows or columns in the matrix. Recall that two vectors are linearly independent if they can not be written as the sum or scalar multiple of any other vectors in the space. Observe that linear independence somehow captures the notion of a feature or agglomerative item that we are trying to get at. To return to our previous example, if every user who liked Star Wars also liked The Matrix, the two movie vectors would be linearly dependent and would only contribute one to the rank. We can do more, however. We would really like to compare movies if most users who like one also like the other. To accomplish we can simply keep the first k singular values in S, where k<r. This will give us the best rank-k approximation to X, and thus has effectively reduced the dimensionality of our original space. Thus we have

Вам также может понравиться

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (895)

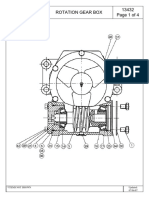

- Caja de Engranes para Pluma DunbarRotationGearBoxДокумент4 страницыCaja de Engranes para Pluma DunbarRotationGearBoxMartin CalderonОценок пока нет

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- Construction Cost Estimation SystemДокумент5 страницConstruction Cost Estimation SystemURBANHIJAUОценок пока нет

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- Oracle Ow811 Certification Matrix 161594Документ8 страницOracle Ow811 Certification Matrix 161594ps.calado5870Оценок пока нет

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- Pelina PositionPaperДокумент4 страницыPelina PositionPaperJohn Tristan HilaОценок пока нет

- IGCSE COMMERCE Chapter 10.1Документ5 страницIGCSE COMMERCE Chapter 10.1Tahmid Raihan100% (1)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (399)

- JKIT - Dialog SAP Revamp Phase II As-Is Proposal V2.0Документ10 страницJKIT - Dialog SAP Revamp Phase II As-Is Proposal V2.0Hathim MalickОценок пока нет

- T100-V Service ManualДокумент22 страницыT100-V Service ManualSergey KutsОценок пока нет

- Temperature Control Systems: Product Reference Guide For Truck and Trailer ApplicationsДокумент40 страницTemperature Control Systems: Product Reference Guide For Truck and Trailer ApplicationsSaMos AdRiianОценок пока нет

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- U19EC416 DSP Lab SyllabusДокумент2 страницыU19EC416 DSP Lab SyllabusRamesh MallaiОценок пока нет

- Schengen Visa Application 2021-11-26Документ5 страницSchengen Visa Application 2021-11-26Edivaldo NehoneОценок пока нет

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- DRC-4720 Bridge SensorДокумент3 страницыDRC-4720 Bridge SensortedysuОценок пока нет

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (266)

- EM0505 3.0v1 Introduction To Sophos EmailДокумент19 страницEM0505 3.0v1 Introduction To Sophos EmailemendozaОценок пока нет

- NOT 012 ROTAX Fuel PumpДокумент9 страницNOT 012 ROTAX Fuel PumpGaberОценок пока нет

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (344)

- Kampung Admiralty - A Mix of Public Facilities - UrbanNextДокумент7 страницKampung Admiralty - A Mix of Public Facilities - UrbanNextkohvictorОценок пока нет

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- MSMQ Service Failing To StartДокумент2 страницыMSMQ Service Failing To Startann_scribdОценок пока нет

- Reliability and Its Application in TQMДокумент15 страницReliability and Its Application in TQMVijay Anand50% (2)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- Ab 521 Requirements For Engineered Pressure EnclosuresДокумент39 страницAb 521 Requirements For Engineered Pressure EnclosuresCarlos Maldonado SalazarОценок пока нет

- Gen Set Controller GC 1f 2Документ13 страницGen Set Controller GC 1f 2Luis Campagnoli100% (2)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- Maintenance Instructions For Voltage Detecting SystemДокумент1 страницаMaintenance Instructions For Voltage Detecting Systemيوسف خضر النسورОценок пока нет

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- SHS SEPNAS Paper-Formatting-guidelines Pr2 Quantitative ReserachДокумент24 страницыSHS SEPNAS Paper-Formatting-guidelines Pr2 Quantitative ReserachAnalyn RosarioОценок пока нет

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2259)

- Module 6: Flow Control Valves: Basic PneumaticsДокумент18 страницModule 6: Flow Control Valves: Basic PneumaticsJohn Fernand RacelisОценок пока нет

- TPS65150 Low Input Voltage, Compact LCD Bias IC With VCOM BufferДокумент48 страницTPS65150 Low Input Voltage, Compact LCD Bias IC With VCOM BufferNurudeen KaikaiОценок пока нет

- Construction Planning and SchedulingДокумент70 страницConstruction Planning and SchedulingDessalegn AyenewОценок пока нет

- Chap 4 R SCM CoyleДокумент28 страницChap 4 R SCM CoyleZeeshan QaraОценок пока нет

- Coursera XQVQSBQSPQA5 - 2 PDFДокумент1 страницаCoursera XQVQSBQSPQA5 - 2 PDFAlexar89Оценок пока нет

- Terminal Parking Spaces + 30% Open Space As Required by National Building CodeДокумент3 страницыTerminal Parking Spaces + 30% Open Space As Required by National Building CodeDyra Angelique CamposanoОценок пока нет

- F612/F627/F626B: Semi-Lugged Gearbox Operated Butterfly Valves PN16Документ1 страницаF612/F627/F626B: Semi-Lugged Gearbox Operated Butterfly Valves PN16RonaldОценок пока нет

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (120)

- Facial RecognitionДокумент5 страницFacial RecognitionPeterОценок пока нет

- NAA Standard For The Storage of Archival RecordsДокумент17 страницNAA Standard For The Storage of Archival RecordsickoОценок пока нет

- Questions and AnswersДокумент14 страницQuestions and AnswersRishit KunwarОценок пока нет