Академический Документы

Профессиональный Документы

Культура Документы

M8L4 LN

Загружено:

Engr Bilal KhurshidИсходное описание:

Оригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

M8L4 LN

Загружено:

Engr Bilal KhurshidАвторское право:

Доступные форматы

Optimization Methods: Advanced Topics in Optimization - Direct and Indirect Search Methods Module 8 Lecture Notes 4 Direct and

nd Indirect Search Methods Introduction

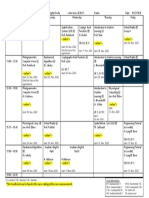

Most of the real world system models involve nonlinear optimization with complicated objective functions or constraints for which analytical solutions (solutions using quadratic programming, geometric programming, etc.) are not available. In such cases one of the possible solutions is the search algorithm in which, the objective function is first computed with a trial solution and then the solution is sequentially improved based on the corresponding objective function value till convergence. A generalized flowchart of the search algorithm in solving a nonlinear optimization with decision variable Xi, is presented in Fig. 1.

Start with Trial Solution Xi, Set i=1

Compute Objective function f(Xi)

Generate new solution Xi+1

Compute Objective function f(Xi+1) Set i=i+1

Convergence Check Yes Optimal Solution Xopt=Xi

No

Fig. 1 Flowchart of Search Algorithm

D Nagesh Kumar, IISc, Bangalore

M8L4

Optimization Methods: Advanced Topics in Optimization - Direct and Indirect Search Methods

The search algorithms can be broadly classified into two types: (1) direct search algorithm and (2) indirect search algorithm. A direct search algorithm for numerical search optimization depends on the objective function only through ranking a countable set of function values. It does not involve the partial derivatives of the function and hence it is also called nongradient or zeroth order method. Indirect search algorithm, also called the descent method, depends on the first (first-order methods) and often second derivatives (second-order methods) of the objective function. A brief overview of the direct search algorithm is presented. Direct Search Algorithm Some of the direct search algorithms for solving nonlinear optimization, which requires objective functions, are described below: A) Random Search Method: This method generates trial solutions for the optimization model using random number generators for the decision variables. Random search method includes random jump method, random walk method and random walk method with direction exploitation. Random jump method generates huge number of data points for the decision variable assuming a uniform distribution for them and finds out the best solution by comparing the corresponding objective function values. Random walk method generates trial solution with sequential improvements which is governed by a scalar step length and a unit random vector. The random walk method with direct exploitation is an improved version of random walk method, in which, first the successful direction of generating trial solutions is found out and then maximum possible steps are taken along this successful direction. B) Grid Search Method: This methodology involves setting up of grids in the decision space and evaluating the values of the objective function at each grid point. The point which corresponds to the best value of the objective function is considered to be the optimum solution. A major drawback of this methodology is that the number of grid points increases exponentially with the number of decision variables, which makes the method computationally costlier. C) Univariate Method: This procedure involves generation of trial solutions for one decision variable at a time, keeping all the others fixed. Thus the best solution for a decision variable

D Nagesh Kumar, IISc, Bangalore

M8L4

Optimization Methods: Advanced Topics in Optimization - Direct and Indirect Search Methods

keeping others constant can be obtained. After completion of the process with all the decision variables, the algorithm is repeated till convergence. D) Pattern Directions: In univariate method the search direction is along the direction of coordinate axis which makes the rate of convergence very slow. To overcome this drawback, the method of pattern direction is used, in which, the search is performed not along the direction of the co-ordinate axes but along the direction towards the best solution. This can be achieved with Hooke and Jeeves method or Powells method. In the Hooke and Jeeves method, a sequential technique is used consisting of two moves: exploratory move and the pattern move. Exploratory move is used to explore the local behavior of the objective function, and the pattern move is used to take advantage of the pattern direction. Powells method is a direct search method with conjugate gradient, which minimizes the quadratic function in a finite number of steps. Since a general nonlinear function can be approximated reasonably well by a quadratic function, conjugate gradient minimizes the computational time to convergence. E)Rosen Brocks Method of Rotating Coordinates: This is a modified version of Hooke and Jeeves method, in which, the coordinate system is rotated in such a way that the first axis always orients to the locally estimated direction of the best solution and all the axes are made mutually orthogonal and normal to the first one. F) Simplex Method: Simplex method is a conventional direct search algorithm where the best solution lies on the vertices of a geometric figure in N-dimensional space made of a set of N+1 points. The method compares the objective function values at the N+1 vertices and moves towards the optimum point iteratively. The movement of the simplex algorithm is achieved by reflection, contraction and expansion. Indirect Search Algorithm The indirect search algorithms are based on the derivatives or gradients of the objective function. The gradient of a function in N-dimensional space is given by:

D Nagesh Kumar, IISc, Bangalore

M8L4

Optimization Methods: Advanced Topics in Optimization - Direct and Indirect Search Methods f x1 f x 2 f = . . . f x N

(1)

Indirect search algorithms include: A) Steepest Descent (Cauchy) Method: In this method, the search starts from an initial trial point X1, and iteratively moves along the steepest descent directions until the optimum point is found. Although, the method is straightforward, it is not applicable to the problems having multiple local optima. In such cases the solution may get stuck at local optimum points. B) Conjugate Gradient (Fletcher-Reeves) Method: The convergence technique of the steepest descent method can be greatly improved by using the concept of conjugate gradient with the use of the property of quadratic convergence. C) Newtons Method: Newtons method is a very popular method which is based on Taylors series expansion. The Taylors series expansion of a function f(X) at X=Xi is given by:

f ( X ) = f ( X i ) + f i T ( X X i ) + 1 ( X X i )T [J i ]( X X i ) 2

(2)

where, [Ji]=[J]|xi , is the Hessian matrix of f evaluated at the point Xi. Setting the partial derivatives of Eq. (2), to zero, the minimum value of f(X) can be obtained.

f ( X ) = 0, x j

j = 1,2,..., N

(3)

D Nagesh Kumar, IISc, Bangalore

M8L4

Optimization Methods: Advanced Topics in Optimization - Direct and Indirect Search Methods From Eq. (2) and (3) f = f i + [J i ]( X X i ) = 0 Eq. (4) can be solved to obtain an improved solution Xi+1

(4)

X i +1 = X i [J i ] f i

1

(5)

The procedure is repeated till convergence for finding out the optimal solution. D) Marquardt Method: Marquardt method is a combination method of both the steepest descent algorithm and Newtons method, which has the advantages of both the methods, movement of function value towards optimum point and fast convergence rate. By modifying the diagonal elements of the Hessian matrix iteratively, the optimum solution is obtained in this method. E) Quasi-Newton Method: Quasi-Newton methods are well-known algorithms for finding maxima and minima of nonlinear functions. They are based on Newton's method, but they approximate the Hessian matrix, or its inverse, in order to reduce the amount of computation per iteration. The Hessian matrix is updated using the secant equation, a generalization of the secant method for multidimensional problems. It should be noted that the above mentioned algorithms can be used for solving only unconstrained optimization. For solving constrained optimization, a common procedure is the use of a penalty function to convert the constrained optimization problem into an unconstrained optimization problem. Let us assume that for a point Xi, the amount of violation of a constraint is . In such cases the objective function is given by:

f (X i ) = f (X i ) + M 2

(6)

where, =1( for minimization problem) and -1 ( for maximization problem), M=dummy variable with a very high value. The penalty function automatically makes the solution inferior where there is a violation of constraint.

D Nagesh Kumar, IISc, Bangalore

M8L4

Optimization Methods: Advanced Topics in Optimization - Direct and Indirect Search Methods Summary

Various methods for direct and indirect search algorithms are discussed briefly in the present class. The models are useful when no analytical solution is available for an optimization problem. It should be noted that when there is availability of an analytical solution, the search algorithms should not be used, because analytical solution gives a global optima whereas, there is always a possibility that the numerical solution may get stuck at local optima.

D Nagesh Kumar, IISc, Bangalore

M8L4

Вам также может понравиться

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (121)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (266)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (400)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (345)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (895)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- Decision Maths: Networks Prim's AlgorithmДокумент20 страницDecision Maths: Networks Prim's AlgorithmNix Zal100% (1)

- EPAM Curriculum For Batch 2023Документ9 страницEPAM Curriculum For Batch 2023sravan kumarОценок пока нет

- Daa Unit-1: Introduction To AlgorithmsДокумент16 страницDaa Unit-1: Introduction To AlgorithmsmhghtgxdfhhjkjlОценок пока нет

- DS Algo - Questions - Imp - QДокумент75 страницDS Algo - Questions - Imp - QItisha SharmaОценок пока нет

- Knapsack AlgorithmДокумент9 страницKnapsack AlgorithmWalker DemelОценок пока нет

- Numerical Methods QBДокумент4 страницыNumerical Methods QBNarmatha ParthasarathyОценок пока нет

- Travelling Sales Man ProblemДокумент3 страницыTravelling Sales Man ProblemYaseen ShОценок пока нет

- Squares and Square RootsДокумент3 страницыSquares and Square RootsJP VijaykumarОценок пока нет

- Data Structures Using C May 2016 (2009 Scheme)Документ2 страницыData Structures Using C May 2016 (2009 Scheme)chethan 9731Оценок пока нет

- Numerical Methods For Engineers 7th Edition Chapra Solutions ManualДокумент18 страницNumerical Methods For Engineers 7th Edition Chapra Solutions Manualtiffanynelsonqejopgnixy100% (31)

- Choclate Wrapper Problem (Shivam Pandey)Документ8 страницChoclate Wrapper Problem (Shivam Pandey)Education for allОценок пока нет

- String Matching ProblemДокумент16 страницString Matching ProblemSiva Agora KarthikeyanОценок пока нет

- 15ECSC701 - 576 - KLE47-15Ecsc701-set1 Cse PaperДокумент5 страниц15ECSC701 - 576 - KLE47-15Ecsc701-set1 Cse PaperAniket AmbekarОценок пока нет

- Assignment MtechДокумент5 страницAssignment MtechyounisОценок пока нет

- Scheduling 7 and SequencingДокумент25 страницScheduling 7 and SequencingBharadhwaj BalasubramaniamОценок пока нет

- Ada 3Документ2 страницыAda 3Tarushi GandhiОценок пока нет

- Harris Hawks Optimization (HHO) Algorithm and ApplicationsДокумент63 страницыHarris Hawks Optimization (HHO) Algorithm and ApplicationsalihОценок пока нет

- Numerical MCQS2Документ7 страницNumerical MCQS2Mian Hasham Azhar AZHARОценок пока нет

- Genetic Algorithms - 0-1 KnapsackДокумент14 страницGenetic Algorithms - 0-1 KnapsackswatsurmyОценок пока нет

- Neural Networks and Fuzzy Systems: NeurolabДокумент17 страницNeural Networks and Fuzzy Systems: NeurolabmesferОценок пока нет

- Merge Sort: Merge Function Is Used For Merging Two Halves. The Merge (Arr, L, M, R) Is KeyДокумент8 страницMerge Sort: Merge Function Is Used For Merging Two Halves. The Merge (Arr, L, M, R) Is KeyPedro FigueiredoОценок пока нет

- Gauss Elimination Method PDFДокумент23 страницыGauss Elimination Method PDFJoeren JarinОценок пока нет

- Ai Assignment 1Документ5 страницAi Assignment 1issaalhassan turayОценок пока нет

- SimplexДокумент4 страницыSimplexJustine Rubillete Mendoza MarianoОценок пока нет

- lAB MANUAL Data Structures Using C (Final)Документ73 страницыlAB MANUAL Data Structures Using C (Final)SATYAM JHAОценок пока нет

- WochenstundenplΣne CS4DM WS20-21 Stand 27Okt20Документ1 страницаWochenstundenplΣne CS4DM WS20-21 Stand 27Okt20Anas AsifОценок пока нет

- Ant Colony OptimizationДокумент18 страницAnt Colony OptimizationAster AlemuОценок пока нет

- Cs 2208 Data Structures Lab 0 0 3 2 (Repaired)Документ33 страницыCs 2208 Data Structures Lab 0 0 3 2 (Repaired)sumiyamini08Оценок пока нет

- Design and Analysis of A New Hash Algorithm With Key IntegrationДокумент6 страницDesign and Analysis of A New Hash Algorithm With Key IntegrationD BanОценок пока нет