Академический Документы

Профессиональный Документы

Культура Документы

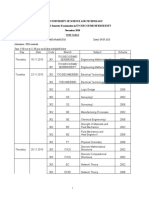

Seminar Topics

Uploaded by: rgkripa

Plastic Memory

A conducting plastic has been used to create a new memory technology with the potential to store a megabit of data in a millimeter-square device, 10 times denser than current magnetic memories. The device should also be cheap and fast, but it cannot be rewritten, so it would only be suitable for permanent storage. Imagine a scenario in which the memory in your digital camera or personal digital assistant is partially based on one of the most flexible materials made by man: plastic. Scientists at HP Labs and Princeton University are excited about a new memory technology that could store more data and cost less than traditional silicon-based chips for mobile devices such as handheld computers, cell phones and MP3 players. But this chip is different from silicon technologies such as the popular flash memory, the researchers said, because it is made partly of plastic, in addition to a foil substrate and some silicon. And while flash memory can be rewritten, the new technology can be written to only once. It can, however, be read repeatedly, it retains data without power, and it does not need a laser or motor to read or write. HP scientist Warren Jackson said that simplifying the production of such memory chips is a key factor, because it has the potential to lower the cost per megabyte for customers. He added that the technology could potentially store more data than flash, and might even become fast enough to store video. "This has the ability to work for a slightly different market than flash because we would now have the ability to not be able to write it a bunch of applications, but just read it so it becomes a permanent record," Jackson told internetnews.com.

Moreover, this could appeal to companies concerned with compliance regulations such as HIPAA and Sarbanes-Oxley, because it ensures that the integrity of data in documents is preserved over long periods of time, the scientists said. According to research analysts, finding alternative forms of memory has become a popular research topic because flash memory is expected to run into serious limitations as device dimensions keep shrinking to fit a variety of form factors. As memory cells get smaller, transistors leak more current and consume more power. But Gartner research analyst Richard Gordon said that the engineering obstacles facing memory technologies stretch back more than 30 years, and noted that just last week Intel announced a new transistor to deal with the leakage problem. "Flash technology is currently at a process node of the .11 micron level," Gordon said. "There is a roadmap to accommodate it for the next 10 years so it still has a long time to go before it runs out of steam. I don't see that changing unless there is a technology in terms of cost-per-bit and performance that blows flash out of the water." Although the concept of plastic or polymer-based memory is unique, it is not entirely new. Rival chipmakers are also looking into polymer-based memory. Intel has a program to develop ferroelectric polymer memory. AMD recently bought Coatue, one of several companies working on polymer memory; another is the Swedish company Thin Film Electronics, in which Intel has a stake.
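The write-once, read-many behavior described above, which is what makes the device attractive for permanent compliance records, can be modeled in a few lines. The following is a minimal Python sketch under simple assumptions: memory is an array of cells that start blank, and the class name `WriteOnceMemory` is hypothetical. It illustrates only the access rules and says nothing about how the HP/Princeton polymer device works physically.

```python
from __future__ import annotations


class WriteOnceMemory:
    """Toy model of write-once, read-many (WORM) storage (hypothetical class).

    Every cell starts blank (None). A cell can be written exactly once
    and then read any number of times; overwriting is refused.
    """

    def __init__(self, size: int) -> None:
        self._cells: list[int | None] = [None] * size

    def write(self, address: int, value: int) -> None:
        if self._cells[address] is not None:
            raise PermissionError(f"cell {address} has already been written")
        self._cells[address] = value

    def read(self, address: int) -> int | None:
        # Reads never change the cell, so they can be repeated indefinitely.
        return self._cells[address]


mem = WriteOnceMemory(size=8)
mem.write(0, 1)
print(mem.read(0), mem.read(0))  # 1 1
try:
    mem.write(0, 0)  # a second write to the same cell is refused
except PermissionError as err:
    print("refused:", err)
```

In a real device the rule would be enforced by the storage medium itself; the exception here merely stands in for that.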

Diamond chip

INTRODUCTION
Electronics without silicon seems unbelievable, but it may come true with the evolution of the diamond, or carbon, chip. Today we use silicon to manufacture electronic chips, but silicon has many disadvantages in power electronic applications, such as bulky size and slow operating speed. Carbon, silicon and germanium belong to the same group of the periodic table (group 14): each has four valence electrons in its outer shell. Pure silicon and germanium are semiconductors at room temperature, so in the early days both were widely used to manufacture electronic components. Later it was found that germanium has several disadvantages compared with silicon, such as a large reverse current and poor temperature stability, so the industry focused on developing electronic components on silicon wafers. Researchers have now found that carbon has advantages over silicon: using carbon as the manufacturing material, we can achieve smaller, faster and stronger chips. They have succeeded in making small prototypes of carbon chips. A key component built from carbon is the carbon nanotube (CNT), which is being investigated for future microprocessors and is expected to be a major component of the diamond chip.

WHAT IS IT?
In a single definition, a diamond chip (or carbon chip) is an electronic chip manufactured on a carbon wafer with a diamond crystal structure. It can also be defined as an electronic component manufactured using carbon as the wafer material. The major carbon-based component is the carbon nanotube (CNT), a nanometer-scale tube made of carbon that has many unique properties.

HOW IS IT POSSIBLE?
Pure diamond-structured carbon does not conduct. To make it conducting, we have to dope it, using boron as the p-type dopant and nitrogen as the n-type dopant. The doping process is similar to that used in silicon chip manufacturing, but it takes longer, because it is very difficult for dopants to diffuse through the strongly bonded diamond structure. Carbon nanotubes, depending on their structure, can already be semiconducting.

ADVANTAGES OF DIAMOND CHIP
1. SMALLER COMPONENTS ARE POSSIBLE
Because the carbon atom is smaller than the silicon atom, much finer lines can be etched into diamond-structured carbon. A transistor could be made one-hundredth the size of a silicon transistor.
2. IT WORKS AT HIGHER TEMPERATURES
Diamond is a very strongly bonded material and can withstand higher temperatures than silicon. At very high temperatures the crystal structure of silicon breaks down, but a diamond chip can still function well. Diamond is also a very good conductor of heat, so any heat dissipated inside the chip is transferred very quickly to the heat sink or other cooling mechanism.
3. FASTER THAN A SILICON CHIP
A carbon chip works faster than a silicon chip. The mobility of electrons in doped diamond-structured carbon is higher than in silicon. Because silicon atoms are larger than carbon atoms, electrons are more likely to collide with them; the smaller carbon atoms reduce the chance of collision, so charge carriers are more mobile in doped diamond-structured carbon than in silicon.
4. LARGER POWER-HANDLING CAPACITY
Silicon is used in power electronics applications, but it has many disadvantages there, such as bulky size, slow operating speed, lower efficiency and a lower band gap, and at very high voltages the silicon structure breaks down. Diamond has a strongly bonded crystal structure, so a carbon chip can work in a high-power environment. It is projected that a carbon transistor could deliver one watt of power at 100 GHz. Today, power electronic circuits use intermediate circuits such as relays or MOSFET interconnection circuits (inverter circuits) to connect a low-power control circuit to a high-power circuit. With a carbon chip this interface would not be needed: the high-power circuit could be connected directly to the diamond chip.

Dynamic TCP Connection Elapsing

INTRODUCTION
When designing systems for load balancing, process migration or fail-over, there is eventually the point where one would like to be able to "move" a socket from one machine to another without losing the connection on that socket, similar to file descriptor passing on a single host. Such a move operation usually involves at least three elements:
1. Moving any application-space state related to the connection to the new owner. For example, for a Web server serving large static files, the application state could simply be the file name and the current position in the file.
2. Making sure that packets belonging to the connection are sent to the new owner of the socket. Normally this also means that the previous owner should no longer receive them.
3. Last but not least, creating compatible network state in the kernel of the new owner, so that it can resume the communication where the previous owner left off.

We shall call the host from which ownership of the connection endpoint is taken the origin, the host to which it is transferred the destination, and the host on the other end of the connection (which does not change) the peer. Details of moving the application state are beyond the scope of this paper, and we will only sketch relatively simple examples. Similarly, we will mention a few ways in which the redirection in the network can be accomplished, but without going into too much detail. The complexity of the kernel state of a network connection, and the difficulty of moving this state from one host to another, vary greatly with the transport protocol being used.
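Element 1 above can be illustrated with the Web-server example from the text, where the application state is just a file name and a position in the file. This is a minimal Python sketch, assuming JSON as the transfer format and a hypothetical `StaticFileTransferState` record; it covers only the application state, not the packet redirection (element 2) or the kernel state (element 3) that tcpcp itself handles.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class StaticFileTransferState:
    """Application state of a hypothetical static-file server connection."""
    file_name: str
    offset: int  # number of bytes of the file already sent to the peer


def export_state(state: StaticFileTransferState) -> bytes:
    """On the origin: serialize the state to hand to the destination."""
    return json.dumps(asdict(state)).encode("utf-8")


def import_state(blob: bytes) -> StaticFileTransferState:
    """On the destination: rebuild the state received from the origin."""
    return StaticFileTransferState(**json.loads(blob.decode("utf-8")))


def next_chunk(state: StaticFileTransferState, contents: bytes, size: int) -> bytes:
    """Return the next `size` bytes to send, resuming where the origin stopped."""
    chunk = contents[state.offset:state.offset + size]
    state.offset += len(chunk)
    return chunk


origin_state = StaticFileTransferState(file_name="big.iso", offset=6)
blob = export_state(origin_state)  # transferred origin -> destination out of band
dest_state = import_state(blob)
print(next_chunk(dest_state, b"Hello, world", 4))  # b' wor'
```

Because the state is this small, the destination only needs the blob and access to the same file to carry on sending from the right place.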

Of the two major transport protocols of the Internet, UDP [1] and TCP [2], the latter clearly presents more of a challenge in this regard. Nevertheless, some issues also apply to UDP. tcpcp (TCP Connection Passing) is a proof-of-concept implementation of a mechanism that allows applications to transport the kernel state of a TCP endpoint from one host to another while the connection is established, without requiring the peer to cooperate in any way. tcpcp is not a complete process migration or load-balancing solution, but rather a building block that can be integrated into such systems. tcpcp consists of a kernel patch (at the time of writing, for version 2.6.4 of the Linux kernel) that implements the operations for dumping and restoring the TCP connection endpoint, a library with wrapper functions (see Section 3), and a few applications for debugging and demonstration. The remainder of this paper is organized as follows: this section continues with a description of the context in which connection passing exists. Section 2 explains the connection passing operation in detail. Section 3 introduces the APIs tcpcp provides. The information that defines a TCP connection and its state is described in Section 4. Sections 5 and 6 discuss congestion control and the limitations TCP imposes on checkpointing. Security implications of the availability and use of tcpcp are examined in Section 7. We conclude with an outlook on the future direction of the work on tcpcp in Section 8, and the conclusions in Section 9. The excellent "TCP/IP Illustrated" [3] is recommended for readers who wish to refresh their memory of TCP/IP concepts and terminology.

The key feature of tcpcp is that the peer can be left completely unaware that the connection is passed from one host to another. In detail, this means:
• The peer's networking stack can be used "as is," without modification and without requiring nonstandard functionality
• The connection is not interrupted
• The peer does not have to stop sending
• No contradictory information is sent to the peer
• These properties apply to all protocol layers visible to the peer
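The requirement that no contradictory information reaches the peer can be made concrete: whatever the destination sends next must carry the same sequence number, acknowledgment number and window that the origin would have sent. The Python sketch below dumps and restores a deliberately tiny endpoint record. Its fields borrow the RFC 793 variable names SND.NXT and RCV.NXT, but the `TcpEndpointState` class, its layout and the `!HHIIH` wire format are hypothetical and are not tcpcp's actual format; a real endpoint also carries window scaling, timestamps, unacknowledged and out-of-order data, and more.

```python
from __future__ import annotations

import struct
from dataclasses import astuple, dataclass

# Hypothetical wire format for this sketch (network byte order, no padding):
# local port, peer port, SND.NXT, RCV.NXT, advertised receive window.
_FORMAT = "!HHIIH"


@dataclass(frozen=True)
class TcpEndpointState:
    local_port: int
    peer_port: int
    snd_nxt: int  # sequence number of the next byte we will send
    rcv_nxt: int  # sequence number of the next byte we expect from the peer
    rcv_wnd: int  # receive window last advertised to the peer

    def dump(self) -> bytes:
        """Origin side: serialize the endpoint state."""
        return struct.pack(_FORMAT, *astuple(self))

    @classmethod
    def restore(cls, blob: bytes) -> TcpEndpointState:
        """Destination side: recreate the endpoint from the dumped bytes."""
        return cls(*struct.unpack(_FORMAT, blob))


def next_ack_fields(state: TcpEndpointState) -> tuple[int, int, int]:
    """(seq, ack, window) that the endpoint would put in its next pure ACK."""
    return state.snd_nxt, state.rcv_nxt, state.rcv_wnd


origin = TcpEndpointState(8080, 51515, snd_nxt=1_000_000, rcv_nxt=42_000, rcv_wnd=65535)
destination = TcpEndpointState.restore(origin.dump())
# The peer sees the same values from either host, so nothing contradicts what it saw.
print(next_ack_fields(destination) == next_ack_fields(origin))  # True
```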

Distributed Interactive Virtual Environment

INTRODUCTION
The Distributed Interactive Virtual Environment (DIVE) is an experimental platform for the development of virtual environments, user interfaces and applications based on shared 3D synthetic environments. DIVE is especially tuned to multi-user applications, in which several participants interact over a network. DIVE is based on a peer-to-peer approach with no centralized server, in which peers communicate by reliable and unreliable multicast based on IP multicast. Conceptually, the shared state can be seen as memory shared over a network, where a set of processes interact by making concurrent accesses to the memory. Consistency and concurrency control of common data (objects) are achieved by active replication and reliable multicast protocols. That is, objects are replicated at several nodes, and each replica is kept consistent by being continuously updated. Update messages are sent by multicast so that all nodes perform the same sequence of updates.
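The idea that replicas stay consistent because every node applies the same sequence of updates can be sketched in Python. The sketch assumes that each update already carries a global sequence number, and it simulates multicast delivery, including out-of-order arrival, with ordinary function calls; how DIVE's reliable multicast actually orders and repairs messages is not modeled, and the `Replica` class is hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field

Update = tuple[str, str, float]  # (object id, attribute, new value)


@dataclass
class Replica:
    """One node's full copy of the shared objects (hypothetical)."""
    objects: dict[str, dict[str, float]] = field(default_factory=dict)
    next_seq: int = 0
    pending: dict[int, Update] = field(default_factory=dict)

    def deliver(self, seq: int, update: Update) -> None:
        """Accept a multicast update; apply updates strictly in sequence order."""
        self.pending[seq] = update
        while self.next_seq in self.pending:
            obj, attr, value = self.pending.pop(self.next_seq)
            self.objects.setdefault(obj, {})[attr] = value
            self.next_seq += 1


updates = [(0, ("chair", "x", 1.0)), (1, ("chair", "x", 2.5)), (2, ("lamp", "on", 1.0))]
a, b = Replica(), Replica()
for seq, update in updates:            # node a receives messages in order
    a.deliver(seq, update)
for seq, update in reversed(updates):  # node b receives them reordered
    b.deliver(seq, update)
print(a.objects == b.objects)  # True
```

Buffering early arrivals until the gap is filled is what lets both nodes end in the same state even though they received the messages in different orders.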

The peer-to-peer approach without a centralized server means that as long as any peer is active within a world, the world and its objects remain "alive". Since objects are fully replicated (not approximated) at other nodes, they are independent of any one process and can exist independently of their creator. Users navigate in 3D space and see, meet and collaborate with other users and applications in the environment. A participant in a DIVE world is called an actor, and is either a human user or an automated application process. An actor is represented by a "body-icon" (or avatar) to facilitate the recognition and awareness of ongoing activities. The body-icon may be used as a template on which the actor's input devices are graphically modeled in 3D space. A user 'sees' a world through a rendering application called a visualizer (the default is currently called Vishnu). The visualizer renders a scene from the viewpoint of the actor's eye. Changing the position of the eye, or making another object the "eye", changes the viewpoint. A visualizer can be set up to accommodate a wide range of I/O devices such as HMDs, wands and data gloves. It also reads the user's input devices and maps the physical actions taken by the user to logical actions in the DIVE system, such as navigating in 3D space, clicking on objects and grabbing objects. In a typical DIVE world, a number of actors leave and enter worlds dynamically. Application actors typically build their user interfaces by creating and introducing the necessary graphical objects. Thereafter, they "listen" to events in the world, so that when an event occurs, the application reacts according to some control logic.
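The pattern in which an application actor sets up its objects and then "listens" for events in the world is essentially publish/subscribe. Here is a minimal Python sketch of it; the `World` and `DoorApp` classes, the event name "click" and its fields are all hypothetical and are not DIVE's actual programming interface.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[str, dict[str, Any]], None]


class World:
    """Delivers each event to every listener registered for its type."""

    def __init__(self) -> None:
        self._listeners: defaultdict[str, list[Handler]] = defaultdict(list)

    def listen(self, event_type: str, handler: Handler) -> None:
        self._listeners[event_type].append(handler)

    def emit(self, event_type: str, **details: Any) -> None:
        for handler in list(self._listeners[event_type]):
            handler(event_type, details)


class DoorApp:
    """An application actor that reacts to clicks on its door object."""

    def __init__(self, world: World) -> None:
        self.door_open = False
        world.listen("click", self.on_click)

    def on_click(self, event_type: str, details: dict[str, Any]) -> None:
        # Control logic: toggle the door only when the door itself is clicked.
        if details.get("target") == "door":
            self.door_open = not self.door_open


world = World()
app = DoorApp(world)
world.emit("click", target="door", actor="alice")
world.emit("click", target="table", actor="bob")  # ignored by DoorApp
print(app.door_open)  # True
```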

DIVE CHARACTERISTICS
The characteristics of Distributed Interactive Virtual Environments are those that apply to Distributed Interactive Simulation (DIS) applications. The DIS standards provide application protocol and communication service standards to support DIS interoperability. The DIS characteristics that apply to DIVE are:
• Interaction delay: any action issued by any participant in the virtual environment must reach the other participants within 100 ms. If the network delay is more than 100 ms, the received action (mainly encoded in an application data unit, or ADU) is considered late and is not used by the application.
• Large number of participants: a DIVE application can be played by many users connected via a network such as the Internet. The number of participants should be unlimited, to allow everybody to enter the virtual world.
• Interactive data are short (a few tens of bytes) and frequent. This differs from other multimedia application data such as audio and video. Each simulation object can transmit its local state, which even in the worst case is a small amount of information (less than a hundred bytes).
• High level of dynamicity in group structure and topology: participants join and leave the session dynamically. In this context, the IP multicast model is particularly convenient.
• Information is continuous: in most cases, the behavior of an avatar at time T+1 is an evolution of its behavior at time T (for example, the displacement of an avatar).
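The 100 ms interaction-delay rule amounts to a simple filter on incoming ADUs. The Python sketch below assumes that each ADU carries the sender's timestamp and that participants' clocks are synchronized, neither of which the text specifies; the `ADU` record and `usable_adus` function are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

MAX_INTERACTION_DELAY_MS = 100  # bound quoted in the text


@dataclass(frozen=True)
class ADU:
    sender: str
    sent_at_ms: int  # sender timestamp (assumes synchronized clocks)
    payload: bytes   # typically a few tens of bytes


def usable_adus(adus: list[ADU], now_ms: int) -> list[ADU]:
    """Keep ADUs delayed by at most 100 ms; later ones count as late and are dropped."""
    return [adu for adu in adus if now_ms - adu.sent_at_ms <= MAX_INTERACTION_DELAY_MS]


received = [
    ADU("alice", sent_at_ms=1_000, payload=b"move +0.5,0,0"),
    ADU("bob", sent_at_ms=880, payload=b"wave"),
]
print([adu.sender for adu in usable_adus(received, now_ms=1_050)])  # ['alice']
```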

Intelligent RAM

INTRODUCTION
Given the growing processor-memory performance gap and the awkwardness of high-capacity DRAM chips, we believe that it is time to consider unifying logic and DRAM. We call such a chip an "IRAM", standing for Intelligent RAM, since most of the transistors on this merged chip will be devoted to memory. The reason to put the processor in DRAM rather than increasing the on-processor SRAM is that DRAM is in practice approximately 20 times denser than SRAM. (The ratio is much larger than the transistor ratio because DRAMs use 3D structures to shrink cell size.) Thus, IRAM enables a much larger amount of on-chip memory than is possible in a conventional architecture. Although others have examined this issue in the past, IRAM is attractive today for several reasons. First, the gap between the performance of processors and DRAMs has been widening at 50% per year for 10 years, so that despite heroic efforts by architects, compiler writers and application developers, many more applications are limited by memory speed today than in the past. Second, since the actual processor occupies only about one-third of the die, the upcoming gigabit DRAM has enough capacity that whole programs and data sets can fit on a single chip. In the past, so little memory could fit on-chip with the CPU that IRAMs were mainly considered as building blocks for multiprocessors. Third, DRAM dies have grown about 50% each generation, and DRAMs are being made with more metal layers to accelerate the longer lines of these larger chips. Also, the high-speed interface of synchronous DRAM will require fast transistors on the DRAM chip. These two DRAM trends should bring the speed of logic on DRAM closer to the speed of logic on logic fabs than in the past.

POTENTIAL ADVANTAGES OF IRAM
1) Higher Bandwidth.
A DRAM naturally has extraordinary internal bandwidth, essentially fetching the square root of its capacity each DRAM clock cycle; an on-chip processor can tap that bandwidth. The potential bandwidth of the gigabit DRAM is even greater than indicated by its logical organization. Since it is important to keep the storage cell small, the normal solution is to limit the length of the bit lines, typically with 256 to 512 bits per sense amp. This quadruples the number of sense amplifiers. To save die area, each block has a small number of I/O lines, which reduces the internal bandwidth by a factor of about 5 to 10 but still meets the external demand. One IRAM goal is to capture a larger fraction of the potential on-chip bandwidth.
2) Lower Latency.
To reduce latency, the wire length should be kept as short as possible. This suggests that the fewer bits per block, the better. In addition, the DRAM cells furthest from the processor will be slower than the closest ones. Rather than restricting the access timing to accommodate the worst case, the processor could be designed to be aware of when it is accessing "slow" or "fast" memory. Some additional reduction in latency can be obtained simply by not multiplexing the address, as there is no reason to do so on an IRAM. Also, being on the same chip as the DRAM, the processor avoids driving the off-chip wires, potentially turning around the data bus, and accessing an external memory controller. In summary, the access latency of an IRAM processor need not be limited by the same constraints as a standard DRAM part. Much lower latency may be obtained by intelligent floor planning, faster circuit topologies, and redesigned address/data bussing schemes. A memory latency for random addresses of less than 30 ns is possible for a latency-oriented DRAM design on the same chip as the processor; this is as fast as second-level caches. Recall that the memory latency on the AlphaServer 8400 is 253 ns.

These first two points suggest that IRAM offers performance opportunities for two types of applications:
1. Applications with predictable memory accesses, such as matrix manipulations, may take advantage of the potential 50X to 100X increase in IRAM bandwidth; and
2. Applications with unpredictable memory accesses and very large memory "footprints", such as databases, may take advantage of the potential 5X to 10X decrease in IRAM latency.
3) Energy Efficiency.
Integrating a microprocessor and DRAM memory on the same die offers the potential to reduce the energy consumption of the memory system. DRAM is much denser than SRAM, which is traditionally used for on-chip memory. Therefore, an IRAM will make far fewer external memory accesses, which consume a great deal of energy to drive high-capacitance off-chip buses. Even on-chip accesses will be more energy efficient, since DRAM consumes less energy than SRAM. Finally, an IRAM has the potential for higher performance than a conventional approach. Since higher performance at a fixed energy consumption can be traded for equal performance at lower energy, the performance advantages of IRAM can be translated into lower energy consumption.
4) Memory Size and Width.
Another advantage of IRAM over conventional designs is the ability to adjust both the size and the width of the on-chip DRAM. Rather than being limited to powers of 2 in length or width, as conventional DRAM is, IRAM designers can specify exactly the number of words and their width. This flexibility can improve the cost of IRAM solutions compared with memories made from conventional DRAMs.
5) Board Space.
Finally, IRAM may be attractive in applications where board area is precious, such as cellular phones or portable computers, since it integrates several chips into one.
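Several figures in this section can be checked with back-of-the-envelope arithmetic. The Python sketch below reads "widening at 50% per year" as compound growth, applies the square-root rule to a gigabit (2^30-bit) DRAM, compares the 253 ns AlphaServer 8400 latency with the sub-30 ns IRAM target, and estimates how much of a power-of-two-sized memory a hypothetical requirement of 3000 words of 24 bits would leave unused. The compounding interpretation and the example requirement are assumptions, not figures from the text.

```python
import math

# Figures from the text; reading "50% per year" as compound growth is an assumption.
GAP_GROWTH_PER_YEAR = 0.50
YEARS = 10
GIGABIT = 2 ** 30             # bits in a 1-Gbit DRAM
IRAM_LATENCY_NS = 30          # latency-oriented on-chip DRAM target
ALPHASERVER_LATENCY_NS = 253  # AlphaServer 8400 memory latency


def compounded_gap(rate: float, years: int) -> float:
    """Factor by which a gap grows if it widens by `rate` every year."""
    return (1 + rate) ** years


def bits_per_dram_cycle(capacity_bits: int) -> int:
    """Square-root rule from the text: about sqrt(capacity) bits per DRAM cycle."""
    return math.isqrt(capacity_bits)


def power_of_two_at_least(n: int) -> int:
    """Smallest power of two >= n, the way conventional DRAM sizes are rounded."""
    if n < 1:
        raise ValueError("n must be positive")
    return 1 << (n - 1).bit_length()


def unused_fraction(words: int, width_bits: int) -> float:
    """Share of a power-of-two-sized memory that an exact-size need leaves unused."""
    exact = words * width_bits
    conventional = power_of_two_at_least(words) * power_of_two_at_least(width_bits)
    return 1 - exact / conventional


print(f"gap after {YEARS} years: {compounded_gap(GAP_GROWTH_PER_YEAR, YEARS):.1f}x")
print(f"gigabit DRAM: {bits_per_dram_cycle(GIGABIT)} bits per cycle")
print(f"latency ratio: {ALPHASERVER_LATENCY_NS / IRAM_LATENCY_NS:.1f}x")
print(f"unused for 3000 x 24-bit words: {unused_fraction(3000, 24):.0%}")
# Output:
# gap after 10 years: 57.7x
# gigabit DRAM: 32768 bits per cycle
# latency ratio: 8.4x
# unused for 3000 x 24-bit words: 45%
```

The 8.4x latency ratio falls inside the 5X to 10X range quoted in the text. The 45% figure shows why the ability to size memory exactly, as IRAM allows, can matter for cost.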

iSCSI

INTRODUCTION
With the release of Fibre Channel (FC) and the SANs based on it, the storage world bet on network access to storage devices. Almost everyone announced that the future belonged to storage area networks. For several years the FC interface was the only standard for such networks, but today many realize that this need not be so. FC-based SANs have some disadvantages, chiefly their price and the difficulty of accessing remote devices. Several initiatives that aim to solve or reduce these problems are now being standardized; the most interesting of them is iSCSI.

THE NEED FOR IP STORAGE
Several factors are rapidly expanding worldwide storage requirements:
• E-mail
• E-commerce
• A pervasive global economy
Over the past decade, many enterprises have seen a significant increase in the volume of data produced. The amount of data continues to increase, particularly in Web-based and e-commerce environments. E-mail affects worldwide storage by producing more data than is generated by new Web pages. These types of traffic are typically multimedia intensive. Together, e-mail and Internet-related business and commercial transactions have caused a dramatic increase in storable data moving across Internet Protocol (IP) networks. This traffic can potentially overwhelm existing backup methods. A new method is needed to bring improved storage capabilities to IP networks and reduce the limitations associated with Fibre Channel SANs. A rapidly emerging technology solution is Internet SCSI (iSCSI), or SCSI over IP.

WHAT IS GIGABIT iSCSI?
iSCSI Defined
Internet SCSI (iSCSI) is a draft standard protocol for encapsulating SCSI commands into TCP/IP packets and enabling block data I/O transport over IP networks. iSCSI can be used to build IP-based SANs. This simple yet powerful technology can help provide a high-speed, low-cost, long-distance storage solution for Web sites, service providers, enterprises and other organizations. An iSCSI HBA, or storage NIC, connects storage resources over Ethernet. As a result, the core transport layers can be managed using existing network management applications. High-level management activities of the iSCSI protocol, such as permissions, device information and configuration, can easily be layered over or built into these applications. For this reason, the deployment of interoperable, robust enterprise management solutions for iSCSI devices is expected to occur quickly. First-generation iSCSI HBA performance is expected to be well suited to the workgroup or departmental storage requirements of medium- and large-sized businesses. The expected availability of TCP/IP Offload Engines in 2002 will significantly improve the performance of iSCSI products. Performance comparable to Fibre Channel is expected when vendors begin shipping 10 Gigabit Ethernet iSCSI products in 2003.

The Convergence of Storage and Networking
The emerging iSCSI protocol specifies a method for encapsulating SCSI commands in the TCP/IP protocol, the messaging protocol of the Internet.
This encapsulation means that the Internet, or any TCP/IP network, can be used to carry storage traffic.
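To make the encapsulation concrete, the Python sketch below builds the 48-byte Basic Header Segment of an iSCSI SCSI Command PDU carrying a SCSI READ(10) command. The field layout follows the iSCSI specification as later published in RFC 3720 (and RFC 7143), as best we understand it; login, header and data digests, and proper LUN encoding are left out, and the helper names are hypothetical, so check field details against the RFC before relying on them. Sending the bytes over a TCP connection to a target (conventionally port 3260) after a login phase is not shown.

```python
import struct

ISCSI_SCSI_COMMAND = 0x01  # opcode of a SCSI Command PDU
SCSI_READ_10 = 0x28        # SCSI READ(10) operation code
FLAG_FINAL = 0x80          # F bit: no more PDUs for this command
FLAG_READ = 0x40           # R bit: data flows from target to initiator


def read10_cdb(lba: int, blocks: int) -> bytes:
    """10-byte READ(10) CDB, zero-padded to the 16-byte CDB field."""
    cdb = struct.pack(">BBIBHB", SCSI_READ_10, 0, lba, 0, blocks, 0)
    return cdb.ljust(16, b"\x00")


def scsi_command_bhs(task_tag: int, expected_len: int, cmd_sn: int,
                     exp_stat_sn: int, cdb: bytes) -> bytes:
    """48-byte Basic Header Segment for a read command to LUN 0 (simplified)."""
    return struct.pack(
        ">BBHB3s8sIIII16s",
        ISCSI_SCSI_COMMAND,
        FLAG_FINAL | FLAG_READ,
        0,             # reserved
        0,             # TotalAHSLength: no additional header segments
        bytes(3),      # DataSegmentLength: no immediate data
        bytes(8),      # LUN field, all zeros for LUN 0
        task_tag,      # Initiator Task Tag
        expected_len,  # Expected Data Transfer Length in bytes
        cmd_sn,        # CmdSN
        exp_stat_sn,   # ExpStatSN
        cdb,
    )


pdu = scsi_command_bhs(task_tag=1, expected_len=8 * 512, cmd_sn=1, exp_stat_sn=0,
                       cdb=read10_cdb(lba=2048, blocks=8))
print(len(pdu), pdu[0], hex(pdu[32]))  # 48 1 0x28
```

The SCSI command itself travels unchanged inside the last 16 bytes of the header, which is the sense in which iSCSI "encapsulates" SCSI in TCP/IP.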

3D Searching

Definition

From computer-aided design (CAD) drawings of complex engineering parts to digital representations of proteins and complex molecules, an increasing amount of 3D information is making its way onto the Web and into corporate databases. Because of this, users need ways to store, index and search this information. Typical Web-searching approaches, such as Google's, can't do this. Even for 2D images, they generally search only the textual parts of a file, noted Greg Notess, editor of the online Search Engine Showdown newsletter. However, researchers at universities such as Purdue and Princeton have begun developing search engines that can mine catalogs of 3D objects, such as airplane parts, by looking for physical, not textual, attributes. Users formulate a query by using a drawing application to sketch what they are looking for or by selecting a similar object from a catalog of images. The search engine then finds the items they want. If a company's engineers cannot find a part that already exists, the company must make it again, wasting valuable time and money.

3D SEARCHING
Advances in computing power, combined with interactive modeling software that lets users create images as search queries, have made 3D search technology possible. The methodology involves the following steps:
• Query formulation
• Search process
• Search result

QUERY FORMULATION
True 3D search systems offer two principal ways to formulate a query: users can select objects from a catalog of images based on product groupings, such as gears or sofas, or they can use a drawing program to create a picture of the object they are looking for. For example, Princeton's 3D search engine uses an application that lets users draw a 2D or 3D representation of the object they want to find. The above picture shows the query interface of a 3D search system.

SEARCH PROCESS
The 3D search system uses algorithms to convert the selected or drawn image-based query into a mathematical model that describes the features of the object being sought. This converts drawings and objects into a form that computers can work with. The search system then compares the mathematical description of the drawn or selected object with those of 3D objects stored in a database, looking for similarities in the described features. The key to the way computer programs look for 3D objects is the voxel (volume pixel). A voxel is a set of graphical data, such as position, color and density, that defines the smallest cube-shaped building block of a 3D image. Computers can display 3D images only in two dimensions. To do this, 3D rendering software takes an object and slices it into 2D cross sections. The cross sections consist of pixels (picture elements), which are single points in a 2D image. To render the 3D image on a 2D screen, the computer determines how to display the 2D cross sections stacked on top of each other, using the applicable interpixel and interslice distances to position them properly. The computer interpolates data to fill in interslice gaps and create a solid image.
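The search process (turn a shape into a feature description, then rank stored objects by similarity) can be sketched using the voxel idea from the text. The Python sketch below uses a deliberately simple descriptor of our own choosing: the set of occupied voxels, compared with Jaccard similarity (shared voxels divided by all occupied voxels). It is not the method of the Princeton or Purdue engines, it assumes shapes are already aligned and scaled alike, and the catalog and function names are hypothetical.

```python
from itertools import product

Point = tuple[float, float, float]
Voxels = frozenset[tuple[int, int, int]]


def voxelize(points: list[Point], voxel_size: float) -> Voxels:
    """Map sample points to the set of cube-shaped cells they fall in."""
    return frozenset(
        (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        for x, y, z in points
    )


def jaccard(a: Voxels, b: Voxels) -> float:
    """Shared occupied voxels divided by all occupied voxels (1.0 = identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def search(query: list[Point], catalog: dict[str, list[Point]],
           voxel_size: float = 1.0) -> list[tuple[str, float]]:
    """Rank catalog objects by voxel-overlap similarity to the query shape."""
    q = voxelize(query, voxel_size)
    scores = {name: jaccard(q, voxelize(pts, voxel_size)) for name, pts in catalog.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


def block(nx: int, ny: int, nz: int) -> list[Point]:
    """Centers of an nx-by-ny-by-nz block of unit cells (a stand-in 3D model)."""
    return [(x + 0.5, y + 0.5, z + 0.5)
            for x, y, z in product(range(nx), range(ny), range(nz))]


catalog = {"plate": block(4, 4, 1), "bar": block(4, 1, 1), "cube": block(2, 2, 2)}
print(search(block(4, 3, 1), catalog))
# [('plate', 0.75), ('bar', 0.3333333333333333), ('cube', 0.25)]
```

Practical systems use descriptors that tolerate rotation, translation and scaling; plain voxel overlap is used here only because it makes the compare-and-rank step easy to see.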

Real Time Application Interface

INTRODUCTION
A real-time system can be defined as a "system capable of guaranteeing timing requirements of the processes under its control". The RTAI plug-in should help Linux fulfill some real-time constraints (deadlines of a few milliseconds, no event loss). It is based on an RTHAL: Real Time Hardware Abstraction Layer. A similar concept exists in Windows NT.

The HAL exports some Linux data and functions closely related to the hardware. RTAI modifies them to get control over the hardware platform. This allows RTAI real-time tasks to run concurrently with Linux processes. The HAL defines a clear interface between RTAI and Linux.

1.1 OS requirements summary
Generally speaking, we would like software components to rely on a platform offering both real-time support and a "standard" general-purpose API. The platform needs a real-time executive (small footprint, deadline fulfillment, ...) and a comprehensive OS for applications (NT, UNIX, Linux, ...). The marketplace already offers products for:
• NT OS: real-time extensions such as RadiSys' INtime or VenturCom's RTX
• UNIX: micro-kernel-based technologies natively supporting real time and rich UNIX-like APIs

The Linux OS offers the same application service level but suffers from a lack of real-time support. Fortunately, some options are foreseen:
• Native real-time support in Linux: does not seem to be the mainstream direction of Linux.
• Linux modifications for real-time constraints (KURT): no significant track record.
• Linux and real time sharing resources (L4Linux): an experimental project.
• Linux as a task of a real-time executive: RTLinux, RTAI, eCos, Nucleus, ...
"Linux as a task of a real-time executive" is maturing today. RTLinux is the better-known solution; RTAI now deserves a closer look, which justifies this presentation.

1.2 A little bit of ... Linux kernel core
Linux, like other OSes, offers applications at least the following services:
• A hardware management layer dealing with event polling or processor/peripheral interrupts
• Scheduler classes dealing with process activation, priorities, time slices and soft real time
• Communication means among applications (at least FIFOs)
Hardware interrupts are served by handlers. On Intel machines the handler addresses are stored in the Interrupt Descriptor Table (IDT), but it is still possible to poll peripherals instead. Current Linux releases offer some soft real-time capabilities, but they neither guarantee hard deadlines nor prevent event loss.

1.3 A little bit of ... RTAI
RTAI is such a small proprietary (see note 1) executive, still under development at DIAPM (Dipartimento di Ingegneria Aerospaziale del Politecnico di Milano) by Paolo Mantegazza's team. It offers services related to:
• A hardware management layer dealing with peripherals
• Scheduler classes dealing with tasks, priorities and hard real time
• Communication means among tasks and processes (at least FIFOs)
RTAI real-time entities are simple single-threaded tasks, whereas Linux applications are full-featured (single- or multi-threaded) processes. RTAI is basically an interrupt dispatcher. The Intel processor interrupts (0..31) are still managed by Linux. RTAI mainly traps the peripheral (ISA) interrupts and, if necessary, re-routes them to Linux (e.g., disk interrupts). It is also able to manage other kinds of interrupts.

Like Linux, it supports both SMP (Symmetric Multi-Processor) and UP (Uni-Processor) architectures. From a real-time point of view, it is quite similar to RTLinux. Whereas RTLinux is an intrusive modification of the kernel, RTAI uses the concept of a HAL to get information from Linux and to trap some fundamental functions. This HAL keeps RTAI's dependencies on the Linux kernel few. This leads to:
• Simple adaptation of the Linux kernel
• Easy porting of RTAI from one Linux version to the next
• Easier use of other OSes instead of RTAI
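RTAI's role as an interrupt dispatcher can be illustrated with a toy model. In this Python sketch, interrupts claimed by real-time handlers are served immediately and all others are re-routed to Linux; while Linux has "disabled" interrupts, its interrupts are only held back, not lost, and are delivered when Linux re-enables them. That deferral mechanism (a software interrupt flag) is an assumption about how such dispatchers typically work rather than something stated in the text, and the class and method names are hypothetical, not RTAI's API.

```python
from __future__ import annotations

from collections import deque
from typing import Callable

Handler = Callable[[int], None]


class InterruptDispatcher:
    """Toy model of a real-time layer between the hardware and Linux."""

    def __init__(self, linux_handler: Handler) -> None:
        self._rt_handlers: dict[int, Handler] = {}
        self._linux_handler = linux_handler
        self._pending: deque[int] = deque()
        self.linux_irqs_enabled = True  # software flag, not the CPU's real one

    def register_rt_handler(self, irq: int, handler: Handler) -> None:
        self._rt_handlers[irq] = handler

    def linux_disable_irqs(self) -> None:
        # Linux's "disable interrupts" only clears the flag; RT interrupts still get through.
        self.linux_irqs_enabled = False

    def linux_enable_irqs(self) -> None:
        self.linux_irqs_enabled = True
        self._flush_to_linux()

    def on_hardware_irq(self, irq: int) -> None:
        handler = self._rt_handlers.get(irq)
        if handler is not None:
            handler(irq)  # real-time interrupt: served at once
            return
        self._pending.append(irq)  # everything else is re-routed to Linux
        if self.linux_irqs_enabled:
            self._flush_to_linux()

    def _flush_to_linux(self) -> None:
        while self._pending:
            self._linux_handler(self._pending.popleft())


log: list[tuple[str, int]] = []
dispatcher = InterruptDispatcher(linux_handler=lambda irq: log.append(("linux", irq)))
dispatcher.register_rt_handler(7, lambda irq: log.append(("rt", irq)))  # hypothetical RT device
dispatcher.linux_disable_irqs()
dispatcher.on_hardware_irq(14)  # e.g. a disk interrupt: held for Linux
dispatcher.on_hardware_irq(7)   # real-time interrupt: served immediately
dispatcher.linux_enable_irqs()
print(log)  # [('rt', 7), ('linux', 14)]
```

The point of the model is the ordering in the log: the real-time interrupt is handled even though it arrived second and Linux had interrupts "disabled".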

Rover Technology

INTRODUCTION
Rover Technology adds a user's location to other dimensions of system awareness, such as time, user preferences and client device capabilities. The software architecture of Rover systems is designed to scale to large user populations. Consider a group touring the museums in Washington, D.C. The group arrives at a registration point, where each person receives a handheld device with audio, video and wireless communication capabilities: an off-the-shelf PDA available on the market today. A wireless-based system tracks the location of these devices and presents relevant information about displayed objects as the user moves through the museum. Users can query their devices for maps and optimal routes to objects of interest. They can also use the devices to reserve and purchase tickets to museum events later in the day. The group leader can send messages to coordinate group activities. The part of this system that automatically tailors information and services to a mobile user's location is the basis for location-aware computing. This computing paradigm augments the more traditional dimensions of system awareness, such as time, user and device awareness. All the technology components needed to realize location-aware computing are available in the marketplace today. What has hindered the widespread deployment of location-based systems is the lack of an integration architecture that scales with user populations.

ROVER ARCHITECTURE
Rover technology tracks the location of system users and dynamically configures application-level information to different link-layer technologies and client-device capabilities. A Rover system represents a single domain of administrative control, managed and moderated by a Rover controller.
Figure 1 shows a large application domain partitioned into multiple administrative domains, each with its own Rover system, much like the Internet's Domain Name System.² End users interact with the system through Rover client devices: typically wireless handheld units with varying capabilities for processing, memory and storage, graphics and display, and network interfaces. Rover maintains a profile for each device, identifying its capabilities and configuring content accordingly. Rover also maintains end-user profiles, defining specific user interests and serving content tailored to them. A wireless access infrastructure provides connectivity to the Rover clients. In the current implementation, we have defined a technique to determine location based on certain properties of the wireless access infrastructure. Although Rover can leverage such properties of specific air interfaces,¹ its location management technique is not tied to a particular wireless technology. Moreover, different wireless interfaces can coexist in a single Rover system or in different domains of a multi-Rover system. Software radio technology³ offers a way to integrate the different interfaces into a single device. This would allow the device to roam easily between various Rover systems, each with different wireless access technologies.

A server system implements and manages Rover's end-user services. The server system consists of five components:
- The Rover controller is the system's "brain." It manages the different services that Rover clients request, scheduling and filtering the content according to the current location and the user and device profiles.
- The location server is a dedicated unit that manages the client device location services within the Rover system. Alternatively, applications can use an externally available location service, such as the Global Positioning System (GPS).
- The streaming-media unit manages audio and video content streamed to clients. Many of today's off-the-shelf streaming-media units can be integrated with the Rover system.
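The controller's job of filtering content by location, user profile, and device profile can be sketched as a single function. This is an illustrative sketch only. The profile fields (`has_video`, `max_image_width`, `interests`), the `tailor` function, and the catalog entries are hypothetical stand-ins, not Rover's actual data model.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class DeviceProfile:
    # Hypothetical capability fields standing in for Rover's per-device profile.
    has_video: bool
    max_image_width: int


@dataclass
class UserProfile:
    # Empty set = no stated preference, so every topic is acceptable.
    interests: Set[str] = field(default_factory=set)


@dataclass
class ContentItem:
    title: str
    location: str   # zone or gallery the item belongs to
    topic: str
    kind: str       # "text", "image" or "video"
    width: int = 0  # pixel width for images and video; 0 for text


def tailor(items: List[ContentItem], location: str,
           user: UserProfile, device: DeviceProfile) -> List[str]:
    """Return the titles to push to one client, filtered by location, user and device."""
    selected: List[str] = []
    for item in items:
        if item.location != location:
            continue                                   # location awareness
        if user.interests and item.topic not in user.interests:
            continue                                   # user awareness
        if item.kind == "video" and not device.has_video:
            continue                                   # device awareness
        if item.width > device.max_image_width:
            continue                                   # too large for this display
        selected.append(item.title)
    return selected


catalog = [
    ContentItem("Wright Flyer description", "gallery-1", "aviation", "text"),
    ContentItem("Wright Flyer film", "gallery-1", "aviation", "video", 640),
    ContentItem("Apollo 11 photo", "gallery-2", "space", "image", 1024),
]
pda = DeviceProfile(has_video=False, max_image_width=320)
visitor = UserProfile(interests={"aviation"})
print(tailor(catalog, "gallery-1", visitor, pda))   # ['Wright Flyer description']
```

When the same visitor moves to another gallery, or switches to a video-capable device, the same call returns a different selection. That is the "location as another dimension of awareness" idea in miniature.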

Self Defending Networks

INTRODUCTION

As the nature of threats to organizations continues to evolve, so must their defense posture. In the past, threats from both internal and external sources were relatively slow-moving and easy to defend against. In today's environment, where Internet worms spread across the world in a matter of minutes, security systems, and the network itself, must react instantaneously. The foundation for a self-defending network is integrated security: security that is native to all aspects of an organization. Every device in the network, from desktops through the LAN and across the WAN, plays a part in securing the networked environment through a globally distributed defense. Such systems help to ensure the privacy of transmitted information and to protect against internal and external threats, while giving corporate administrators control over access to corporate resources. The Self-Defending Network (SDN) shows how the approach to security has evolved from point products to this integrated approach.

These self-defending networks will identify threats, react appropriately to their severity level, isolate infected servers and desktops, and reconfigure network resources in response to an attack. The vision of the Self-Defending Network brings together Secure Connectivity, Threat Defense, and the Trust and Identity Management System, along with infection containment and rogue-device isolation, in a single solution.

SELF DEFENDING NETWORKS

To defend their networks, IT professionals need to be aware of the new nature of security threats, which includes the following:

- Shift from internal to external attacks. Before 1999, when key applications ran on minicomputers and mainframes, threats were typically perpetrated by internal users with privileges. Between 1999 and 2002, reports of external events rose 250 percent, according to CERT.
- Shorter windows to react. When attacks homed in on individual computers or networks, companies had more time to understand the threat. Now that viruses can propagate worldwide in 10 minutes, that "luxury" is largely gone. Antivirus solutions are still essential but are not enough: by the time the signature has been identified, it is too late. With self-propagating threats, companies need network technology that can take action against them autonomously.
- More difficult threat detection. Attackers are getting smarter. They used to attack the network; now they attack the application or embed the attack in the data itself, which makes detection more difficult. An attack at the network layer, for example, can be detected by looking at the header information. But an attack embedded in a text file or attachment can only be detected by looking at the actual payload of the packet, something a typical firewall doesn't do. The burden of threat detection is shifting from the firewall to the access control server and the intrusion detection system. Rather than single-point solutions, companies need holistic solutions.
- A lowered bar for hackers. Finally, a proliferation of easy-to-use hacking tools and scripts has made hacking available to the less technically literate. The advent of "point-and-click" hacking means the attacker doesn't have to know what's going on under the hood in order to do damage.

These trends in security are what have led to the advent of Self-Defending Networks (SDNs) as the latest version of security control.
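The difference between header-level filtering and payload inspection can be shown with a toy packet. This is a minimal sketch under stated assumptions. The `Packet` type, the blocked-port set, and the byte signatures are made up for illustration and do not reflect any real product's rule set. Real intrusion detection systems also reassemble streams, whereas this checks one packet at a time.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Packet:
    src_ip: str
    dst_port: int
    payload: bytes


# Hypothetical rule sets, for illustration only.
BLOCKED_PORTS = {23, 135}                        # header rule: e.g. telnet, RPC
PAYLOAD_SIGNATURES = [b"<script>", b"cmd.exe"]   # content signatures


def header_filter_allows(pkt: Packet) -> bool:
    """What a simple port-based firewall sees: header fields only."""
    return pkt.dst_port not in BLOCKED_PORTS


def payload_inspection_flags(pkt: Packet) -> List[bytes]:
    """Deep packet inspection: search the payload itself for known signatures."""
    return [sig for sig in PAYLOAD_SIGNATURES if sig in pkt.payload]


web_attack = Packet("203.0.113.7", 80, b"GET /?q=<script>alert(1)</script>")
print(header_filter_allows(web_attack))       # True: port 80 looks harmless
print(payload_inspection_flags(web_attack))   # [b'<script>']
```

The attack passes the header check because it travels on an ordinary web port. Only the payload scan notices it, which is why detection shifts toward systems that look inside the data.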

Sky X Technology

INTRODUCTION

Satellites are ideal for providing Internet and private network access over long distances and to remote locations. However, Internet protocols are not optimized for satellite conditions, so throughput over satellite networks is restricted to only a fraction of the available bandwidth. The Sky X protocol is designed to overcome these restrictions. The Sky X Gateway and Sky X Client/Server systems replace TCP over the satellite link with a protocol optimized for the long latency, high loss, and asymmetric bandwidth typical of satellite communication. Adding the Sky X system to a satellite network allows users to take full advantage of the available bandwidth. The Sky X Gateway transparently enhances the performance of all users on a satellite network without any modifications to the end clients and servers. The Sky X Client and Sky X Server enhance the performance of data transmissions over satellites directly to end-user PCs, increasing Web performance by a factor of three or more and file transfer speeds by a factor of 10 to 100. The Sky X solution is entirely transparent to end users and works with all TCP applications. Sky X products are the leading implementation of a class of products known variously as protocol gateways, TCP Performance Enhancing Proxies (TCP/PEP), or satellite spoofers. The Sky X gateways are available as ready-to-install hardware solutions that can be added to any satellite network.

The Sky X family consists of the Sky X Gateway, the Sky X Client/Server, and the Sky X OEM products. The Sky X Gateway is a hardware solution designed for easy installation into any satellite network and provides performance enhancement for all devices on the network. The Sky X Client/Server provides performance enhancement to individual PCs.

PERFORMANCE OF TCP OVER SATELLITE

Satellites are an attractive option for carrying Internet and other IP traffic to many locations across the globe where terrestrial options are limited or prohibitively expensive. However, data networking over satellites must overcome the latency and high bit error rates typical of satellite communications, as well as the asymmetric bandwidth of most satellite networks. Communication over geosynchronous satellites, orbiting at an altitude of 22,300 miles, has round-trip times of approximately 540 ms, an order of magnitude larger than on terrestrial networks. The journey through the atmosphere can also introduce bit errors into the data stream. These factors, combined with a back channel whose bandwidth is typically much smaller than the forward channel's, reduce the effectiveness of TCP, which is optimized for short hops over low-loss cables or fiber. TCP is very effective in local networks built on cables or optical fiber, where TCP/IP stacks offer features such as IPv6, IPsec, and other leading-edge functionality, and can also run on real-time operating systems. TCP is designed for efficiency and high performance, and is optimized for maximum throughput and the highest transaction speeds in local networks. But satellite conditions interact adversely with several elements of the TCP architecture, including its window sizing, congestion avoidance algorithms, and data acknowledgment mechanisms, which together severely constrict the throughput achievable over satellite links. The advantages TCP achieves in LANs therefore no longer hold on the satellite link, so it is desirable to use a separate protocol over the satellite link that eliminates the disadvantages of running TCP there.
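The effect of window sizing and slow start on a 540 ms path can be made concrete with the standard bandwidth-delay arithmetic. These are idealized textbook bounds, not Sky X measurements. The 10 Mbit/s link rate and the 1460-byte segment size are assumptions chosen for illustration, and the slow-start model ignores loss and delayed ACKs.

```python
import math

MSS_BYTES = 1460        # assumed segment size (typical Ethernet-derived MSS)
RTT_GEO_S = 0.540       # ~540 ms geosynchronous round trip, from the text
MAX_WINDOW = 65_535     # largest TCP window without the window-scale option


def window_limited_throughput_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound: at most one window of unacknowledged data per round trip."""
    return window_bytes * 8 / rtt_s


def bandwidth_delay_product_bytes(link_bps: float, rtt_s: float) -> float:
    """Bytes that must be in flight to keep a link of this rate and RTT busy."""
    return link_bps * rtt_s / 8


def slow_start_rtts(target_segments: int, initial_segments: int = 1) -> int:
    """RTTs for idealized slow start (window doubles each RTT, no loss) to reach a target."""
    return math.ceil(math.log2(target_segments / initial_segments))


ceiling = window_limited_throughput_bps(MAX_WINDOW, RTT_GEO_S)
print(f"{ceiling / 1e6:.2f} Mbit/s")           # 0.97 Mbit/s, whatever the link speed

bdp = bandwidth_delay_product_bytes(10e6, RTT_GEO_S)   # hypothetical 10 Mbit/s link
print(round(bdp))                                # 675000 bytes needed in flight

segments = math.ceil(bdp / MSS_BYTES)            # 463 segments
rtts = slow_start_rtts(segments)
print(rtts, f"{rtts * RTT_GEO_S:.2f} s")        # 9 RTTs, about 4.86 s of ramp-up
```

With a classic 64 KB window, a single connection cannot exceed about 0.97 Mbit/s over a geosynchronous hop, so a 10 Mbit/s link stays mostly idle. Even with window scaling (RFC 7323), slow start still needs several seconds of round trips to fill the pipe, and every loss shrinks the window again.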

Spawning Networks

INTRODUCTION

The ability to rapidly create, deploy, and manage new network services in response to user demands presents a significant challenge to the research community and is a key factor driving the development of programmable networks. Existing network architectures such as the Internet, mobile, telephone, and asynchronous transfer mode (ATM) networks exhibit two key limitations that prevent us from meeting this challenge:
- lack of intrinsic architectural flexibility in adapting to new user needs and requirements;
- lack of automation in the process of realizing and deploying new and distinct network architectures.

In what follows we make a number of observations about the limitations encountered when designing and deploying network architectures. First, current network architectures are deployed on top of a multitude of networking technologies, such as land-based, wireless, mobile, and satellite, for a bewildering array of voice, video, and data applications. Since these architectures offer only a very limited capability to match the many environments and applications, their deployment has predictably met with varying degrees of success. Tremendous difficulties arise, for example, because of the inability of TCP to cope with the high loss rates encountered in wireless networks, or of mobile IP to provide fast handoff with low loss rates to mobile devices. Protocols other than mobile IP and TCP operating in wireless access networks might help, but they are difficult to implement. Second, the interface between the network and the service architecture responsible for basic communication services (e.g., connection setup procedures in ATM and telephone networks) is rigidly defined and cannot be replaced, modified, or supplemented. In other cases, such as the Internet, end-user connectivity abstractions provide little support for quality of service (QoS) guarantees and for accounting for usage of network resources (billing). Third, the creation and deployment of a network architecture is a manual, time-consuming, and costly process. In response to these limitations, we argue that there is a need to propose, investigate, and evaluate alternatives to the existing network architectures (e.g., IP, ATM, mobile). This challenge goes beyond proposing yet another experimental network architecture. Rather, it calls for new approaches to the way we design, develop, deploy, observe, and analyze new network architectures in response to future needs and requirements. We believe that the design, deployment, architecting, and management of new network architectures should be automated and built on a foundation of spawning networks, a new class of open programmable networks. We describe the process of automating the creation and deployment of new network architectures as spawning.
The term spawning finds a parallel with an operating system spawning a child process. By spawning a process, the operating system creates a copy of the calling process. The calling process is known as the parent process and the new process as the child process. Notably, the child process inherits its parent's attributes, typically executing on the same hardware (i.e., the same processor). We envision spawning networks as having the capability to spawn not processes but complex network architectures. Spawning networks support the deployment of programmable virtual networks. We call a virtual network installed on top of a set of network resources a parent virtual network. We propose the realization of parent virtual networks with the capability of creating child virtual networks operating on a subset of the network resources and topology, as illustrated in Fig. 1. For example, part of an access network to a wired network might be redeployed as a picocellular virtual network that supports fast handoff (e.g., by spawning a Cellular IP virtual network), as illustrated in Fig. 1. In this case the access network is the parent and the Cellular IP network the child. We describe a framework for spawning networks based on the design of the Genesis Kernel, a virtual network operating system capable of automating a virtual network life-cycle process; that is, profiling, spawning, architecting, and managing programmable network architectures on demand.
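The parent/child relationship described above, in which a child runs on a subset of the parent's topology and resources and inherits its attributes like a spawned process, can be modeled in a few lines. This is a toy model under stated assumptions. `VirtualNetwork`, `spawn`, and the bandwidth budget are hypothetical and are not the Genesis Kernel's interface. The node names and attribute values are made up.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class VirtualNetwork:
    """Toy parent/child virtual network; not the Genesis Kernel's real interface."""
    name: str
    nodes: Set[str]
    bandwidth_mbps: float
    attributes: Dict[str, str] = field(default_factory=dict)
    parent: Optional["VirtualNetwork"] = field(default=None, repr=False, compare=False)
    children: List["VirtualNetwork"] = field(default_factory=list, repr=False, compare=False)

    def available_bandwidth(self) -> float:
        return self.bandwidth_mbps - sum(c.bandwidth_mbps for c in self.children)

    def spawn(self, name: str, nodes: Set[str], bandwidth_mbps: float,
              overrides: Optional[Dict[str, str]] = None) -> "VirtualNetwork":
        """Create a child network on a subset of this network's nodes and capacity."""
        if not nodes <= self.nodes:
            raise ValueError("child topology must be a subset of the parent's nodes")
        if bandwidth_mbps > self.available_bandwidth():
            raise ValueError("parent has too little spare capacity")
        attrs = dict(self.attributes)   # like a child process, inherit the parent's attributes...
        attrs.update(overrides or {})   # ...then apply the child's own architectural choices
        child = VirtualNetwork(name, set(nodes), bandwidth_mbps, attrs, parent=self)
        self.children.append(child)
        return child


access = VirtualNetwork("access-net", {"ap1", "ap2", "ap3", "gw"}, 100.0,
                        {"routing": "IP", "mobility": "none"})
cellular = access.spawn("cellular-ip", {"ap1", "ap2", "gw"}, 40.0,
                        {"mobility": "Cellular IP fast handoff"})
print(cellular.attributes)           # {'routing': 'IP', 'mobility': 'Cellular IP fast handoff'}
print(access.available_bandwidth())  # 60.0
```

The child keeps the parent's IP routing but replaces its mobility support, echoing the access-network/Cellular IP example in the text. The subset and capacity checks stand in for the resource partitioning a real virtual network kernel would enforce.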

Speed protocol processors

INTRODUCTION

As Internet use grows, the gap between transmission speed and processing speed widens, so specialized processors are used to speed up processing. This seminar mainly explains the register structure of the TRIPOD architecture, which is used to increase that speed. Such processors implement functions such as protocol processing, memory management, and scheduling. The TRIPOD architecture has three register files that establish a pipeline to improve protocol processing performance: the first register file is used for processing the IP header, while the other two respectively load and store packet headers. Special units handle demanding packet-processing operations. Independently of such special operations, multiple processing elements (PEs) enable parallel execution of several instructions per packet, and multithreading supports the assignment of one thread per packet to achieve fast context switching.

PROTOCOLS

A protocol is an agreement between communicating parties on how data is exchanged. It provides the basic mechanism for transmitting information and for the receiver to detect the presence of any transmission errors. When a transmission error is detected, even if it is only a single bit, the complete data block must be discarded. This type of scheme is therefore known as best-effort transmission or connectionless transmission.

CLOSING THE GAP

Network systems have employed embedded processors to offload protocol processing and computationally expensive operations for more than a decade. In the past few years, however, the computer industry has been developing specialized network processors to close the transmission-processing gap in network systems. Today, network processors are an important class of embedded processors, used across the whole network systems space, from personal to local and wide area networks. They accommodate both the Internet's explosive growth and the proliferation of network-centric system architectures in environments ranging from embedded networks for cars and surveillance systems to mobile enterprise and consumer networks.

PROTOCOL PROCESSING

Protocol processing is oblivious to register file management because the register file structure is transparent to the protocol code. This transparency comes from a simple mechanism that switches the working register file.

MEMORY MANAGEMENT

The memory system includes all parts of the computer that store information. It consists of primary and secondary memory.
The primary memory can be referenced one byte at a time, has a relatively fast access time, and is usually volatile. The secondary memory refers to a collection of storage devices. Modern memory managers automatically transfer information back and forth between primary and secondary memory using virtual memory.

SCHEDULING

CPU scheduling refers to the task of managing CPU sharing among a community of processes. The scheduling policy should be selected by each system administrator so that it reflects the way that particular computer will be used. The two types of scheduling are preemptive and non-preemptive. Preemptive scheduling uses the interval timer and the scheduler to interrupt a running process in order to reallocate the CPU to a higher-priority ready process. Non-preemptive scheduling algorithms allow a process to run to completion once it obtains the processor.
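The difference between the two policies shows up clearly in a small unit-time simulation of priority scheduling. This is a sketch under stated assumptions. Each time slice stands in for an interval-timer tick, a lower number means higher priority (conventions vary), and the two jobs are made-up examples with bursts of at least one slice.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Proc:
    name: str
    arrival: int
    burst: int      # CPU time needed, in slices (assumed >= 1)
    priority: int   # assumption: a lower number means a higher priority


def priority_schedule(procs: List[Proc], preemptive: bool) -> Dict[str, int]:
    """Simulate one CPU in unit time slices and return each process's finish time.

    Each slice boundary plays the role of an interval-timer tick: in preemptive
    mode the scheduler may hand the CPU to a higher-priority ready process there;
    in non-preemptive mode the running process keeps the CPU until it finishes.
    """
    remaining = {p.name: p.burst for p in procs}
    finish: Dict[str, int] = {}
    running: Optional[Proc] = None
    t = 0
    while len(finish) < len(procs):
        ready = [p for p in procs if p.arrival <= t and p.name not in finish]
        if not ready:
            t += 1                      # CPU idle until the next arrival
            continue
        if running is None or preemptive:
            running = min(ready, key=lambda p: (p.priority, p.arrival))
        remaining[running.name] -= 1
        t += 1
        if remaining[running.name] == 0:
            finish[running.name] = t
            running = None
    return finish


jobs = [Proc("P1", arrival=0, burst=5, priority=3),
        Proc("P2", arrival=1, burst=2, priority=1)]
print(priority_schedule(jobs, preemptive=True))    # {'P2': 3, 'P1': 7}
print(priority_schedule(jobs, preemptive=False))   # {'P1': 5, 'P2': 7}
```

Under preemption, the high-priority P2 takes the CPU as soon as it arrives and finishes at time 3. Without preemption it must wait for P1 to run to completion and finishes at time 7.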

StrataFlash Memory

INTRODUCTION

The L18 flash memory device provides read-while-write and read-while-erase capability with density upgrades through 256 Mbit. This family of devices provides high performance at low voltage on a 16-bit data bus. Individually erasable memory blocks are sized for optimum code and data storage. Each device density contains one parameter partition and several main partitions. The flash memory array is grouped into multiple 8-Mbit partitions. By dividing the flash memory into partitions, program or erase operations can take place at the same time as read operations. Although each partition has write, erase, and burst read capabilities, simultaneous operation is limited to write or erase in one partition while other partitions are in read mode. The L18 flash memory device allows burst reads that cross partition boundaries; user application code is responsible for ensuring that burst reads don't cross into a partition that is programming or erasing. Upon initial power-up or return from reset, the device defaults to asynchronous page-mode read. Configuring the Read Configuration Register enables synchronous burst-mode reads. In synchronous burst mode, output data is synchronized with a user-supplied clock signal. A WAIT signal provides easy CPU-to-flash memory synchronization. In addition to the enhanced architecture and interface, the L18 flash memory device incorporates technology that enables fast factory program and erase operations. Designed for low-voltage systems, the L18 flash memory device supports read operations with VCC at 1.8 V, and erase and program operations with VPP at 1.8 V or 9.0 V.
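The application's duty to keep burst reads away from a busy partition comes down to simple address arithmetic. This is a sketch under stated assumptions. It takes the 8-Mbit partitions and 16-bit bus from the text, but assumes the partitions sit back to back from word address 0 and ignores the parameter partition's block layout. The helper names are hypothetical, not part of any Intel driver API.

```python
from typing import Optional

PARTITION_BITS = 8 * 1024 * 1024                           # 8-Mbit partitions (from the text)
BUS_WIDTH_BITS = 16                                        # 16-bit data bus (from the text)
WORDS_PER_PARTITION = PARTITION_BITS // BUS_WIDTH_BITS     # 524,288 16-bit words


def partition_of(word_addr: int) -> int:
    """Partition index of a word address, assuming partitions are laid out back to back from 0."""
    return word_addr // WORDS_PER_PARTITION


def partitions_touched(start_word: int, length_words: int) -> range:
    """Every partition a burst of `length_words` (>= 1) words starting at `start_word` reads from."""
    return range(partition_of(start_word), partition_of(start_word + length_words - 1) + 1)


def burst_read_is_safe(start_word: int, length_words: int,
                       busy_partition: Optional[int]) -> bool:
    """True if the burst stays out of the partition that is currently programming/erasing."""
    if busy_partition is None:
        return True
    return busy_partition not in partitions_touched(start_word, length_words)


print(256 // 8)                                            # 32 partitions in a 256-Mbit device
print(list(partitions_touched(0x7FFF0, 32)))               # [0, 1]: this burst crosses a boundary
print(burst_read_is_safe(0x7FFF0, 32, busy_partition=1))   # False
print(burst_read_is_safe(0x7FFF0, 32, busy_partition=3))   # True: read-while-write is fine
```

A driver could run a check like this before starting a burst and either shorten the burst at the partition boundary or wait for the program/erase operation to finish.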

MEMORY

To enable computers to work faster, several types of memory are available today, and a single computer contains more than one type.

TYPES OF RAM

The RAM family includes two important memory devices: static RAM (SRAM) and dynamic RAM (DRAM). The primary difference between them is the lifetime of the data they store. SRAM retains its contents as long as electrical power is applied to the chip; if the power is turned off or lost temporarily, its contents are lost forever. DRAM, on the other hand, has an extremely short data lifetime, typically about four milliseconds, even when power is applied constantly. In short, SRAM has all the properties of the memory you think of when you hear the word RAM. Compared to that, DRAM seems useless. However, a simple piece of hardware called a DRAM controller can make DRAM behave more like SRAM. The job of the DRAM controller is to periodically refresh the data stored in the DRAM. By refreshing the data before it expires, the contents of memory can be kept alive for as long as they are needed, so DRAM can be just as useful as SRAM. When deciding which type of RAM to use, a system designer must consider access time and cost. SRAM devices offer extremely fast access times (approximately four times faster than DRAM) but are much more expensive to produce. Generally, SRAM is used only where access speed is extremely important. A lower cost per byte makes DRAM attractive whenever large amounts of RAM are required. Many embedded systems include both types: a small block of SRAM (a few kilobytes) along a critical data path and a much larger block of DRAM (perhaps megabytes) for everything else.
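What the DRAM controller has to guarantee can be checked with a small simulation of distributed refresh, in which one row is refreshed at a time, round-robin. This is a sketch under stated assumptions. The 4 ms lifetime comes from the text, the 1024-row count is hypothetical, and real controllers also schedule refreshes around normal memory accesses. Integer nanoseconds are used to avoid floating-point drift.

```python
def refresh_interval_ns(retention_ns: int, rows: int) -> int:
    """Gap between single-row refreshes so every row is revisited within its retention time.

    Rounded down, so the controller refreshes slightly early rather than late.
    """
    return retention_ns // rows


def max_row_age_ns(rows: int, interval_ns: int, duration_ns: int) -> int:
    """Refresh one row every `interval_ns`, round-robin; return the oldest any row's data gets."""
    last_refresh = [0] * rows          # every row written at t = 0
    worst = 0
    row = 0
    for t in range(interval_ns, duration_ns + 1, interval_ns):
        worst = max(worst, t - last_refresh[row])   # age just before this refresh
        last_refresh[row] = t
        row = (row + 1) % rows
    # rows still waiting for their next refresh when the simulation stops
    worst = max(worst, max(duration_ns - t0 for t0 in last_refresh))
    return worst


RETENTION_NS = 4_000_000   # ~4 ms data lifetime, from the text
ROWS = 1024                # hypothetical number of rows

interval = refresh_interval_ns(RETENTION_NS, ROWS)
print(interval)                                           # 3906 ns between row refreshes
worst = max_row_age_ns(ROWS, interval, 20_000_000)        # simulate 20 ms
print(worst, worst <= RETENTION_NS)                       # 3999744 True
```

Each row's data reaches an age of at most `ROWS * interval` = 3,999,744 ns, just inside the 4 ms lifetime. If the controller refreshed any more slowly, some row would expire and its contents would be lost.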
