Uploaded by: Palokaran Joseph Aju

MC0085 Advanced Operating Systems (Distributed Systems)

(Book ID: B0967)

Set-1

1. Describe the following: Distributed Computing Systems; Distributed Computing System Models

Ans:1

Distributed Computing Systems : Over the past two decades, advancements in microelectronic technology have resulted in the availability of fast, inexpensive processors, and advancements in communication technology have resulted in the availability of cost-effective and highly efficient computer networks. The advancements in these two technologies favour the use of interconnected, multiple processors in place of a single, high-speed processor. Computer architectures consisting of interconnected, multiple processors are basically of two types: tightly coupled systems and loosely coupled systems.

In tightly coupled systems, there is a single system-wide primary memory (address space) that is shared by all the processors (Fig. 1.1). If any processor writes, for example, the value 100 to the memory location x, any other processor subsequently reading from location x will get the value 100. Therefore, in these systems, any communication between the processors usually takes place through the shared memory.

In loosely coupled systems, the processors do not share memory, and each processor has its own local memory (Fig. 1.2). If a processor writes the value 100 to the memory location x, this write operation will only change the contents of its local memory and will not affect the contents of the memory of any other processor. Hence, if another processor reads the memory location x, it will get whatever value was there before in that location of its own local memory. In these systems, all physical communication between the processors is done by passing messages across the network that interconnects the processors.

Usually, tightly coupled systems are referred to as parallel processing systems, and loosely coupled systems are referred to as distributed computing systems, or simply distributed systems. In contrast to tightly coupled systems, the processors of distributed computing systems can be located far from each other to cover a wider geographical area. Furthermore, in tightly coupled systems, the number of processors that can be usefully deployed is usually small and limited by the bandwidth of the shared memory. This is not the case with distributed computing systems, which are more freely expandable and can have an almost unlimited number of processors.

Hence, a distributed computing system is basically a collection of processors interconnected by a communication network in which each processor has its own local memory and other peripherals, and the communication between any two processors of the system takes place by message passing over the communication network. For a particular processor, its own resources are local, whereas the other processors and their resources are remote. Together, a processor and its resources are usually referred to as a node or site or machine of the distributed computing system.
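The difference between the two architectures can be sketched in a few lines of Python. This is only an illustrative simulation inside one process: the names `shared_memory`, `Node`, `send`, and `receive_all` are hypothetical, and the "network" is simply a per-node inbox queue.

```python
from collections import deque

# Tightly coupled: every processor reads and writes one shared memory.
shared_memory = {}

def write_shared(location, value):
    shared_memory[location] = value

def read_shared(location):
    return shared_memory.get(location)


class Node:
    """Hypothetical loosely coupled node: private memory plus a message inbox."""

    def __init__(self, name):
        self.name = name
        self.local_memory = {}   # visible to this node only
        self.inbox = deque()     # stand-in for the communication network

    def write(self, location, value):
        # A write changes only this node's local memory.
        self.local_memory[location] = value

    def read(self, location):
        return self.local_memory.get(location)

    def send(self, other, location, value):
        # Communication happens only by passing a message to the other node.
        other.inbox.append((self.name, location, value))

    def receive_all(self):
        # The receiver decides when to apply incoming messages.
        while self.inbox:
            _sender, location, value = self.inbox.popleft()
            self.local_memory[location] = value
```

In this sketch a write on node A leaves node B's copy of `x` untouched until A explicitly sends a message and B processes it, which mirrors the behaviour described for loosely coupled systems.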

Distributed Computing System Models : Distributed computing system models can be broadly classified into five categories: the minicomputer model, the workstation model, the workstation-server model, the processor-pool model, and the hybrid model.

Minicomputer Model :- The minicomputer model (Fig. 1.3) is a simple extension of the centralized time-sharing system. A distributed computing system based on this model consists of a few minicomputers (they may be large supercomputers as well) interconnected by a communication network. Each minicomputer usually has multiple users simultaneously logged on to it. For this, several interactive terminals are connected to each minicomputer. Each user is logged on to one specific minicomputer, with remote access to other minicomputers. The network allows a user to access remote resources that are available on some machine other than the one on to which the user is currently logged. The minicomputer model may be used when resource sharing (such as sharing of information databases of different types, with each type of database located on a different machine) with remote users is desired. The early ARPAnet is an example of a distributed computing system based on the minicomputer model.

Workstation Model:- A distributed computing system based on the workstation model (Fig. 1.4) consists of several workstations interconnected by a communication network. An organization may have several workstations located throughout a building or campus, each workstation equipped with its own disk and serving as a single-user computer. It has been often found that in such an environment, at any one time a significant proportion of the workstations are idle (not being used), resulting in the waste of large amounts of CPU time. Therefore, the idea of the workstation model is to interconnect all these workstations by a high-speed LAN so that idle workstations may be used to process jobs of users who are logged onto other workstations and do not have sufficient processing power at their own workstations to get their jobs processed efficiently.

Workstation Server Model:- The workstation model is a network of personal workstations, each with its own disk and a local file system. A workstation with its own local disk is usually called a diskful workstation and a workstation without a local disk is called a diskless workstation. With the proliferation of high-speed networks, diskless workstations have become more popular in network environments than diskful workstations, making the workstation-server model more popular than the workstation model for building distributed computing systems.

A distributed computing system based on the workstation-server model (Fig. 1.5) consists of a few minicomputers and several workstations (most of which are diskless, but a few of which may be diskful) interconnected by a communication network.

In the plain workstation model (Fig. 1.4), a user logs onto one of the workstations, called his or her "home" workstation, and submits jobs for execution. When the system finds that the user's workstation does not have sufficient processing power to execute the processes of the submitted jobs efficiently, it transfers one or more of the processes from the user's workstation to some other workstation that is currently idle and gets them executed there. Finally, the result of execution is returned to the user's workstation.

In the workstation-server model, a user logs onto a workstation called his or her home workstation. Normal computation activities required by the user's processes are performed at the user's home workstation, but requests for services provided by special servers (such as a file server or a database server) are sent to a server providing that type of service, which performs the user's requested activity and returns the result of request processing to the user's workstation. For better overall system performance, the local disk of a diskful workstation is normally used for such purposes as storage of temporary files, storage of unshared files, storage of shared files that are rarely changed, paging activity in virtual-memory management, and caching of remotely accessed data.

Processor Pool Model:- The processor-pool model is based on the observation that most of the time a user does not need any computing power, but once in a while the user may need a very large amount of computing power for a short time (e.g., when recompiling a program consisting of a large number of files after changing a basic shared declaration). Therefore, unlike the workstation-server model in which a processor is allocated to each user, in the processor-pool model the processors are pooled together to be shared by the users as needed. The pool of processors consists of a large number of microcomputers and minicomputers attached to the network. Each processor in the pool has its own memory to load and run a system program or an application program of the distributed computing system.

In the pure processor-pool model (Fig. 1.6), the processors in the pool have no terminals attached directly to them, and users access the system from terminals that are attached to the network via special devices. These terminals are either small diskless workstations or graphic terminals, such as X terminals. A special server (called a run server) manages and allocates the processors in the pool to different users on a demand basis. When a user submits a job for computation, an appropriate number of processors are temporarily assigned to his or her job by the run server. For example, if the user's computation job is the compilation of a program having n segments, in which each of the segments can be compiled independently to produce separate relocatable object files, n processors from the pool can be allocated to this job to compile all the n segments in parallel. When the computation is completed, the processors are returned to the pool for use by other users.

In the processor-pool model there is no concept of a home machine. That is, a user does not log onto a particular machine but to the system as a whole. This is in contrast to other models, in which each user has a home machine (e.g., a workstation or minicomputer) onto which he or she logs and on which most of his or her programs run by default.
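The run server's allocate-and-return cycle can be sketched as follows. This is a minimal illustration, not a description of any real system: the class `RunServer`, its methods, and the round-robin assignment in `compile_segments` are all assumptions made for the example, and no actual compilation takes place.

```python
class RunServer:
    """Hypothetical run server that lends pooled processors to jobs on demand."""

    def __init__(self, processor_ids):
        self.free = list(processor_ids)   # processors currently in the pool
        self.assigned = {}                # job name -> processors lent to it

    def allocate(self, job, wanted):
        # Grant up to `wanted` processors; fewer if the pool is nearly empty.
        if wanted <= 0:
            raise ValueError("a job must ask for at least one processor")
        if not self.free:
            raise RuntimeError("no processors are free in the pool")
        granted = self.free[:wanted]
        self.free = self.free[wanted:]
        self.assigned.setdefault(job, []).extend(granted)
        return granted

    def release(self, job):
        # When the computation completes, the processors return to the pool.
        returned = self.assigned.pop(job, [])
        self.free.extend(returned)
        return len(returned)


def compile_segments(server, job, segments):
    """Plan the compilation of independent segments on pooled processors."""
    processors = server.allocate(job, len(segments))
    plan = {p: [] for p in processors}
    # With n free processors each segment gets its own processor;
    # otherwise segments are spread round-robin over what was granted.
    for index, segment in enumerate(segments):
        plan[processors[index % len(processors)]].append(segment)
    server.release(job)
    return plan
```

For instance, with a pool of four processors and three segments, each segment is planned on its own processor and all four processors are back in the pool afterwards.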

Amoeba, proposed by Mullender et al. in 1990, is an example of a distributed computing system based on the processor-pool model.

Hybrid Model:- Out of the four models described above, the workstation-server model is the most widely used model for building distributed computing systems. This is because a large number of computer users only perform simple interactive tasks such as editing jobs, sending electronic mail, and executing small programs. The workstation-server model is ideal for such simple usage. However, in a working environment that has groups of users who often perform jobs needing massive computation, the processor-pool model is more attractive and suitable. To combine the advantages of both the workstation-server and processor-pool models, a hybrid model may be used to build a distributed computing system. The hybrid model is based on the workstation-server model but with the addition of a pool of processors. The processors in the pool can be allocated dynamically for computations that are too large for workstations or that require several computers concurrently for efficient execution. In addition to efficient execution of computation-intensive jobs, the hybrid model gives guaranteed response to interactive jobs by allowing them to be processed on the local workstations of the users. However, the hybrid model is more expensive to implement than the workstation-server model or the processor-pool model.

2. Discuss the implementation of RPC Mechanism in detail.

Ans:2

In computer science, a remote procedure call (RPC) is an inter-process communication mechanism that allows a computer program to cause a subroutine or procedure to execute in another address space (commonly on another computer on a shared network) without the programmer explicitly coding the details of this remote interaction. That is, the programmer writes essentially the same code whether the subroutine is local to the executing program or remote. When the software in question uses object-oriented principles, RPC is called remote invocation or remote method invocation. Note that there are many different (often incompatible) technologies commonly used to accomplish this.

Message passing : An RPC is initiated by the client, which sends a request message to a known remote server to execute a specified procedure with supplied parameters. The remote server sends a response to the client, and the application continues its process. There are many variations and subtleties in various implementations, resulting in a variety of different (incompatible) RPC protocols. While the server is processing the call, the client is blocked (it waits until the server has finished processing before resuming execution).

An important difference between remote procedure calls and local calls is that remote calls can fail because of unpredictable network problems. Also, callers generally must deal with such failures without knowing whether the remote procedure was actually invoked. Idempotent procedures (those that have no additional effects if called more than once) are easily handled, but enough difficulties remain that code to call remote procedures is often confined to carefully written low-level subsystems.

Sequence of events during an RPC:
The client calls the client stub. The call is a local procedure call, with parameters pushed onto the stack in the normal way.
The client stub packs the parameters into a message and makes a system call to send the message. Packing the parameters is called marshalling.
The kernel sends the message from the client machine to the server machine.
The kernel on the server machine passes the incoming packets to the server stub.
Finally, the server stub unpacks the parameters from the message (unmarshalling) and calls the server procedure.
The reply traces the same steps in the reverse direction.
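The sequence of events above can be traced in a small single-process sketch. Everything here is an assumption made for illustration: JSON stands in for the marshalling format, the function `transport` stands in for the two kernels and the network, and the names `client_stub`, `server_stub`, `PROCEDURES`, and `remote_add` are hypothetical. Failure handling, which real RPC code must address, is left out.

```python
import json

def add(a, b):
    """The server procedure that the client wants to run remotely."""
    return a + b

# Procedures the (hypothetical) server is willing to execute, by name.
PROCEDURES = {"add": add}

def server_stub(request_bytes):
    # Unmarshal the request, call the server procedure, marshal the reply.
    request = json.loads(request_bytes.decode("utf-8"))
    procedure = PROCEDURES[request["procedure"]]
    result = procedure(*request["params"])
    return json.dumps({"result": result}).encode("utf-8")

def transport(message_bytes):
    # Stand-in for the kernels and the network: deliver the bytes to the
    # server stub and carry its reply back to the caller.
    return server_stub(message_bytes)

def client_stub(procedure, *params):
    # Marshal the parameters into a message, send it, and block for the reply.
    request = json.dumps({"procedure": procedure, "params": list(params)})
    reply_bytes = transport(request.encode("utf-8"))
    return json.loads(reply_bytes.decode("utf-8"))["result"]

def remote_add(a, b):
    # To the caller this looks like an ordinary local procedure call.
    return client_stub("add", a, b)
```

Calling `remote_add(2, 3)` follows the same path as the listed steps: local call into the client stub, marshalling, transport, unmarshalling in the server stub, the procedure call itself, and the reply travelling back in reverse.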

3. Explain the following: Distributed Shared Memory Systems; Memory Consistency models

Ans:3

Distributed Shared Memory Systems : Distributed Shared Memory (DSM), also known as a distributed global address space (DGAS), is a concept in computer science that refers to a wide class of software and hardware implementations, in which each node of a cluster has access to shared memory in addition to each node's non-shared private memory.

Software DSM systems can be implemented in an operating system, or as a programming library. Software DSM systems implemented in the operating system can be thought of as extensions of the underlying virtual memory architecture. Such systems are transparent to the developer, which means that the underlying distributed memory is completely hidden from the users. In contrast, software DSM systems implemented at the library or language level are not transparent, and developers usually have to program differently. However, these systems offer a more portable approach to DSM system implementation.

Software DSM systems also have the flexibility to organize the shared memory region in different ways. The page-based approach organizes shared memory into pages of fixed size. In contrast, the object-based approach organizes the shared memory region as an abstract space for storing shareable objects of variable sizes. Another commonly seen implementation uses a tuple space, in which the unit of sharing is a tuple.

Shared memory architecture may involve separating memory into shared parts distributed amongst nodes and main memory, or distributing all memory between nodes. A coherence protocol, chosen in accordance with a consistency model, maintains memory coherence.

Examples of such systems include Kerrighed, OpenSSI, MOSIX, Terracotta, TreadMarks, and DIPC.
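As a rough illustration of the page-based approach, the sketch below keeps exactly one copy of each fixed-size page and migrates the page to whichever node touches it. This single-copy migration scheme is only one very simple way to keep memory coherent and is chosen here for brevity; it is not claimed to be how any of the systems named above work. `PageDSM`, `PAGE_SIZE`, and the `transfers` counter are hypothetical names.

```python
PAGE_SIZE = 4  # words per page; deliberately tiny for the example


class PageDSM:
    """Hypothetical page-based DSM with one migrating copy per page."""

    def __init__(self, node_names, num_pages):
        # Each node holds the pages it currently owns.
        self.pages = {name: {} for name in node_names}
        self.owner = {}
        first = node_names[0]
        for page in range(num_pages):
            self.pages[first][page] = [0] * PAGE_SIZE
            self.owner[page] = first
        self.transfers = 0  # how many pages crossed the "network"

    def _fetch(self, node, page):
        # If another node owns the page, move it here before the access.
        holder = self.owner[page]
        if holder != node:
            self.pages[node][page] = self.pages[holder].pop(page)
            self.owner[page] = node
            self.transfers += 1
        return self.pages[node][page]

    def read(self, node, address):
        # A shared address splits into a page number and an offset.
        page, offset = divmod(address, PAGE_SIZE)
        return self._fetch(node, page)[offset]

    def write(self, node, address, value):
        page, offset = divmod(address, PAGE_SIZE)
        self._fetch(node, page)[offset] = value
```

Because a whole page moves on every remote access, two nodes that alternate accesses to addresses on the same page cause repeated transfers, which is one reason the choice of sharing granularity matters.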

Memory Consistency models : A memory consistency model specifies the order in which memory operations will appear to execute, that is, what value a read can return. It affects both ease of programming and performance.

An implementation of a memory consistency model is often stricter than the model would allow. For example, sequential consistency (SC) allows the possibility of a read returning a value that hasn't been written yet (see example discussed under 3.2 Sequential Consistency). Clearly, no implementation will ever exhibit an execution with such a history. In general, it is often simpler to implement a slightly stricter model than its definition would require. This is especially true for hardware realizations of shared memories [AHJ91, GLL+90].

The memory consistency model of a shared-memory multiprocessor provides a formal specification of how the memory system will appear to the programmer, eliminating the gap between the behavior expected by the programmer and the actual behavior supported by a system. Effectively, the consistency model places restrictions on the values that can be returned by a read in a shared-memory program execution. Intuitively, a read should return the value of the last write to the same memory location. In uniprocessors, "last" is precisely defined by program order, i.e., the order in which memory operations appear in the program. This is not the case in multiprocessors. For example, in Figure 1, the write and read of the Data field within a record are not related by program order because they reside on two different processors. Nevertheless, an intuitive extension of the uniprocessor model can be applied to the multiprocessor case. This model is called sequential consistency. Informally, sequential consistency requires that all memory operations appear to execute one at a time, and that the operations of a single processor appear to execute in the order described by that processor's program. Referring back to the program in Figure 1, this model ensures that the reads of the data field within a dequeued record will return the new values written by processor P1.

Sequential consistency provides a simple and intuitive programming model. However, it disallows many hardware and compiler optimizations that are possible in uniprocessors by enforcing a strict order among shared memory operations. For this reason, a number of more relaxed memory consistency models have been proposed, including some that are supported by commercially available architectures such as Digital Alpha, SPARC V8 and V9, and IBM PowerPC. Unfortunately, there has been a vast variety of relaxed consistency models proposed in the literature that differ from one another in subtle but important ways. Furthermore, the complex and non-uniform terminology that is used to describe these models makes it difficult to understand and compare them. This variety and complexity also often leads to misconceptions about relaxed memory consistency models.
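The informal definition of sequential consistency can be checked by brute force on a small program. The two-processor program below is a common textbook illustration chosen for this sketch; it is not the program of Figure 1, and the names `P1`, `P2`, `interleavings`, `run`, and `sc_outcomes` are hypothetical. The code enumerates every interleaving that keeps each processor's operations in program order and collects the values the two reads can return.

```python
from itertools import combinations

# Each processor writes one location and then reads the other.
# Both locations start at 0.
P1 = [("write", "x", 1), ("read", "y", "r1")]
P2 = [("write", "y", 1), ("read", "x", "r2")]

def interleavings(a, b):
    """Yield every merge of a and b that preserves each program's order."""
    total = len(a) + len(b)
    for positions in combinations(range(total), len(a)):
        chosen = set(positions)
        next_a, next_b = iter(a), iter(b)
        yield [next(next_a) if i in chosen else next(next_b)
               for i in range(total)]

def run(schedule):
    """Execute the operations one at a time against a single memory."""
    memory = {"x": 0, "y": 0}
    registers = {}
    for kind, location, argument in schedule:
        if kind == "write":
            memory[location] = argument
        else:
            registers[argument] = memory[location]
    return registers["r1"], registers["r2"]

def sc_outcomes():
    """All (r1, r2) results that sequential consistency permits."""
    return {run(schedule) for schedule in interleavings(P1, P2)}
```

Under these assumptions `sc_outcomes()` contains `(0, 1)`, `(1, 0)`, and `(1, 1)` but never `(0, 0)`: in any one-at-a-time ordering, at least one write precedes the other processor's read. Relaxed models that let a read bypass an earlier write on the same processor can produce `(0, 0)`, which is the kind of subtle difference the text refers to.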

4. Discuss the following with respect to File Systems: Stateful Vs Stateless Servers; Caching

Ans:4

Stateful Vs Stateless Servers : The file servers that implement a distributed file service can be stateless or stateful. Stateless file servers do not store any session state. This means that every client request is treated independently, and not as a part of a new or existing session. Stateful servers, on the other hand, do store session state. They may, therefore, keep track of which clients have opened which files, current read and write pointers for files, which files have been locked by which clients, etc.

The main advantage of stateless servers is that they can easily recover from failure. Because there is no state that must be restored, a failed server can simply restart after a crash and immediately provide services to clients as though nothing happened. Furthermore, if clients crash, the server is not stuck with abandoned opened or locked files. Another benefit is that the server implementation remains simple because it does not have to implement the state accounting associated with opening, closing, and locking of files.

The main advantage of stateful servers, on the other hand, is that they can provide better performance for clients. Because clients do not have to provide full file information every time they perform an operation, the size of messages to and from the server can be significantly decreased. Likewise, the server can make use of knowledge of access patterns to perform read-ahead and other optimizations. Stateful servers can also offer clients extra services such as file locking, and can remember read and write positions.

Caching : Besides replication, caching is often used to improve the performance of a distributed file system (DFS). In a DFS, caching involves storing either a whole file, or the results of file service operations. Caching can be performed at two locations: at the server and at the client. Server-side caching makes use of file caching provided by the host operating system. This is transparent to the server and helps to improve the server's performance by reducing costly disk accesses.

Client-side caching comes in two flavours: on-disk caching and in-memory caching. On-disk caching involves the creation of (temporary) files on the client's disk. These can either be complete files (as in the upload/download model) or they can contain partial file state, attributes, etc. In-memory caching stores the results of requests in the client machine's memory. This can be process-local (in the client process), in the kernel, or in a separate dedicated caching process.

The issue of cache consistency in DFS has obvious parallels to the consistency issue in shared memory systems, but there are other tradeoffs (for example, disk access delays come into play, the granularity of sharing is different, sizes are different, etc.). Furthermore, because write-through caches are too expensive to be useful, the consistency of caches will be weakened. This makes implementing Unix semantics impossible. Approaches used in DFS caches include delayed writes, where writes are not propagated to the server immediately but in the background later on, and write-on-close, where the server receives updates only after the file is closed. Adding a delay to write-on-close has the benefit of avoiding superfluous writes if a file is deleted shortly after it has been closed.
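The benefit of delayed write-on-close can be seen in a small client-cache sketch. It is a simplified model under stated assumptions: time is passed in explicitly as a number so the behaviour is deterministic, whole files are cached, and the names `FileServer`, `WriteOnCloseCache`, `tick`, and `writes_received` are hypothetical rather than taken from any real DFS.

```python
class FileServer:
    """Hypothetical server that simply counts the writes it receives."""

    def __init__(self):
        self.files = {}
        self.writes_received = 0

    def store(self, name, data):
        self.files[name] = data
        self.writes_received += 1

    def remove(self, name):
        self.files.pop(name, None)


class WriteOnCloseCache:
    """Hypothetical client cache using write-on-close with a delay."""

    def __init__(self, server, delay):
        self.server = server
        self.delay = delay
        self.open_files = {}  # name -> data being modified locally
        self.pending = {}     # name -> (data, time at which to flush)

    def write(self, name, data):
        # Writes to an open file stay in the client cache.
        self.open_files[name] = data

    def close(self, name, now):
        # Closing schedules the update instead of sending it at once.
        self.pending[name] = (self.open_files.pop(name), now + self.delay)

    def delete(self, name):
        # A file deleted before its flush time never reaches the server.
        self.open_files.pop(name, None)
        self.pending.pop(name, None)
        self.server.remove(name)

    def tick(self, now):
        # Background step: push every update whose delay has expired.
        due = [name for name, (_data, when) in self.pending.items()
               if when <= now]
        for name in due:
            data, _when = self.pending.pop(name)
            self.server.store(name, data)
```

If a temporary file is written, closed, and deleted within the delay, the server in this sketch never sees it, while a file that outlives the delay is written exactly once. The price is the weakened consistency noted above: other clients cannot see the update until the flush happens.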

MC0085 Advanced Operating Systems (Distributed Systems)

(Book ID: B0967)

Вам также может понравиться

- HE Drilling JarsДокумент32 страницыHE Drilling Jarsmr_heeraОценок пока нет

- Advanced Computer Architecture SlidesДокумент105 страницAdvanced Computer Architecture SlidesShivansh JoshiОценок пока нет

- Operating Systems Interview Questions You'll Most Likely Be AskedОт EverandOperating Systems Interview Questions You'll Most Likely Be AskedОценок пока нет

- Distributed Systems Concepts ExplainedДокумент49 страницDistributed Systems Concepts ExplainedDhrumil DancerОценок пока нет

- Distributed Computing System ModelsДокумент14 страницDistributed Computing System ModelsMahamud elmogeОценок пока нет

- Ds/Unit 1 Truba College of Science & Tech., BhopalДокумент9 страницDs/Unit 1 Truba College of Science & Tech., BhopalKuldeep PalОценок пока нет

- Chapter1 DosДокумент51 страницаChapter1 DosnimmapreetuОценок пока нет

- Z-Distributed Computing)Документ16 страницZ-Distributed Computing)Surangma ParasharОценок пока нет

- A) (I) A Distributed Computer System Consists of Multiple Software Components That Are OnДокумент14 страницA) (I) A Distributed Computer System Consists of Multiple Software Components That Are OnRoebarОценок пока нет

- ChaptersДокумент39 страницChaptersAli Athar CHОценок пока нет

- Dis MaterialДокумент49 страницDis MaterialArun BОценок пока нет

- Unit IДокумент8 страницUnit Ivenkata rama krishna rao junnuОценок пока нет

- A Cluster Computer and Its ArchitectureДокумент3 страницыA Cluster Computer and Its ArchitecturePratik SharmaОценок пока нет

- Architecture of Distributed SystemsДокумент22 страницыArchitecture of Distributed SystemsAnjna SharmaОценок пока нет

- Models in DsДокумент4 страницыModels in DsDuncan OpiyoОценок пока нет

- Microcomputer: MainframeДокумент5 страницMicrocomputer: MainframejoelchaleОценок пока нет

- DOS LecturesДокумент116 страницDOS Lecturesankit.singh.47Оценок пока нет

- Lectures on distributed systems introductionДокумент8 страницLectures on distributed systems introductionaj54321Оценок пока нет

- CS8603 Unit IДокумент35 страницCS8603 Unit IPooja MОценок пока нет

- 8458expt 1Документ3 страницы8458expt 1sarveshpatil2833Оценок пока нет

- Unit 6 - Computer Organization and Architecture - WWW - Rgpvnotes.inДокумент14 страницUnit 6 - Computer Organization and Architecture - WWW - Rgpvnotes.inNandini SharmaОценок пока нет

- Relation To Computer System Components: M.D.Boomija, Ap/CseДокумент39 страницRelation To Computer System Components: M.D.Boomija, Ap/Cseboomija100% (1)

- CS02DOS Unit 1UNit - 1 IntroductionДокумент6 страницCS02DOS Unit 1UNit - 1 IntroductionSalar AhmedОценок пока нет

- Operating SystemДокумент7 страницOperating SystemVivian BaluranОценок пока нет

- IntroductionДокумент25 страницIntroductionJonah K. MensonОценок пока нет

- Distributed Systems OverviewДокумент25 страницDistributed Systems OverviewVaibhav AhujaОценок пока нет

- Hahhaha 3333Документ7 страницHahhaha 3333翁銘禧Оценок пока нет

- Taxonomy of Parallel Computing ParadigmsДокумент9 страницTaxonomy of Parallel Computing ParadigmssushmaОценок пока нет

- CH 2 Dist-ComputingДокумент20 страницCH 2 Dist-ComputingSnehal PendreОценок пока нет

- Multiprocessor Architecture and ProgrammingДокумент20 страницMultiprocessor Architecture and Programmingவெ. விஷ்வாОценок пока нет

- Unit-1 OsДокумент9 страницUnit-1 Osbharathijawahar583Оценок пока нет

- A Taxonomy of Distributed SystemsДокумент11 страницA Taxonomy of Distributed SystemssdfjsdsdvОценок пока нет

- Cloud Computing Unit - 1Документ42 страницыCloud Computing Unit - 1Sravani BaswarajОценок пока нет

- BE Comps DC Week1Документ67 страницBE Comps DC Week1Tejas BhanushaliОценок пока нет

- Distributed SystemДокумент53 страницыDistributed SystemAdarsh Srivastava100% (1)

- Distributed Systems: - CSE 380 - Lecture Note 13 - Insup LeeДокумент24 страницыDistributed Systems: - CSE 380 - Lecture Note 13 - Insup LeeApoorv JoshiОценок пока нет

- COA AssignmentДокумент21 страницаCOA Assignment3d nat natiОценок пока нет

- Cs8603 Unit 1 IntroductionДокумент51 страницаCs8603 Unit 1 IntroductionSasi BalajiОценок пока нет

- Computer cluster computing explainedДокумент5 страницComputer cluster computing explainedshaheen sayyad 463Оценок пока нет

- Symmetric Multiprocessing and MicrokernelДокумент6 страницSymmetric Multiprocessing and MicrokernelManoraj PannerselumОценок пока нет

- Unit 1.4 - Database System ArchitectureДокумент4 страницыUnit 1.4 - Database System ArchitectureRashmiRavi NaikОценок пока нет

- VIRTUALIZATIONДокумент14 страницVIRTUALIZATIONronin150101Оценок пока нет

- Advance Computing Technology (170704)Документ106 страницAdvance Computing Technology (170704)Satryo PramahardiОценок пока нет

- Tutorial 1 SolutionsДокумент5 страницTutorial 1 SolutionsByronОценок пока нет

- Distributed Systems: COMP9243 - Week 1 (12s1)Документ11 страницDistributed Systems: COMP9243 - Week 1 (12s1)Afiq AmeeraОценок пока нет

- Cloud Programming Models and Distributed Computing ParadigmsДокумент9 страницCloud Programming Models and Distributed Computing Paradigmsvishal roshanОценок пока нет

- Data Storage DatabasesДокумент6 страницData Storage DatabasesSrikanth JannuОценок пока нет

- Unit1 Operating SystemДокумент25 страницUnit1 Operating SystemPoornima.BОценок пока нет

- ParticipantsДокумент8 страницParticipantsSoban MarufОценок пока нет

- Alternative Client-Server Ations (A) - (E) : System Architecture TypesДокумент12 страницAlternative Client-Server Ations (A) - (E) : System Architecture TypesAmudha ArulОценок пока нет

- Distributed Operating Syst EM: 15SE327E Unit 1Документ49 страницDistributed Operating Syst EM: 15SE327E Unit 1Arun ChinnathambiОценок пока нет

- Two criteria and examples of advanced operating systemsДокумент27 страницTwo criteria and examples of advanced operating systemsdevil angelОценок пока нет

- What Is Parallel ComputingДокумент9 страницWhat Is Parallel ComputingLaura DavisОценок пока нет

- Notes Distributed System Unit 1Документ38 страницNotes Distributed System Unit 1rahul koravОценок пока нет

- Distributed and Real Operating SystemДокумент3 страницыDistributed and Real Operating SystemI'm hereОценок пока нет

- Cluster Computing: A Paper Presentation OnДокумент16 страницCluster Computing: A Paper Presentation OnVishal JaiswalОценок пока нет

- Lecture 1 Thay TuДокумент87 страницLecture 1 Thay Tuapi-3801193100% (1)

- Introduction To Distributed Operating Systems Communication in Distributed SystemsДокумент150 страницIntroduction To Distributed Operating Systems Communication in Distributed SystemsSweta KamatОценок пока нет

- 1-1 Into To Distributed SystemsДокумент10 страниц1-1 Into To Distributed SystemsHarrison NchoeОценок пока нет

- Distributed Sysytem Software ConceptsДокумент21 страницаDistributed Sysytem Software ConceptsbalajiОценок пока нет

- 02 - Lecture - Part1-Flynns TaxonomyДокумент21 страница02 - Lecture - Part1-Flynns TaxonomyAhmedОценок пока нет

- MC0084Документ7 страницMC0084Palokaran Joseph AjuОценок пока нет

- MC0082Документ6 страницMC0082Palokaran Joseph AjuОценок пока нет

- MC0084Документ7 страницMC0084Palokaran Joseph AjuОценок пока нет

- MC0083Документ8 страницMC0083Palokaran Joseph AjuОценок пока нет

- New Wordpad DocumentДокумент4 страницыNew Wordpad DocumentPalokaran Joseph AjuОценок пока нет

- MC0081Документ5 страницMC0081Palokaran Joseph AjuОценок пока нет

- Notes Knouckout and BanyanДокумент13 страницNotes Knouckout and BanyanmpacОценок пока нет

- Basic Chemistry Review (Students)Документ16 страницBasic Chemistry Review (Students)AnilovRozovaОценок пока нет

- Animal and Plant CellДокумент3 страницыAnimal and Plant CellElmer Tunggolh, Jr.Оценок пока нет

- 4 UIUm 8 JHNDQ 8 Suj H4 NsoДокумент8 страниц4 UIUm 8 JHNDQ 8 Suj H4 NsoAkash SadoriyaОценок пока нет

- Laura Hasley Statistics-Chi-Squared Goodness of Fit Test Lesson PlanДокумент11 страницLaura Hasley Statistics-Chi-Squared Goodness of Fit Test Lesson Planapi-242213383Оценок пока нет

- Basic Chromatography Notes 1Документ27 страницBasic Chromatography Notes 1Aufa InsyirahОценок пока нет

- MSCS) (V4.12.10) MSC Server Hardware DescriptionДокумент148 страницMSCS) (V4.12.10) MSC Server Hardware DescriptionDeepak JoshiОценок пока нет

- J Gen Physiol-1952-Hershey-39-56Документ18 страницJ Gen Physiol-1952-Hershey-39-56api-277839406Оценок пока нет

- Is.10919.1984 ESP StandardДокумент6 страницIs.10919.1984 ESP StandardhbookОценок пока нет

- Technology: ControlsДокумент32 страницыTechnology: ControlsAli Hossain AdnanОценок пока нет

- Operating Instruction Precision Balance: Kern EwДокумент15 страницOperating Instruction Precision Balance: Kern EwjohnОценок пока нет

- Queries With TableДокумент14 страницQueries With TableAkhileshОценок пока нет

- Wind Load Sheet by Abid SirДокумент4 страницыWind Load Sheet by Abid SirMohammad KasimОценок пока нет

- Shape and angle detective game for kidsДокумент21 страницаShape and angle detective game for kidsbemusaОценок пока нет

- Mathematical Investigation of Trigonometric FunctionsДокумент12 страницMathematical Investigation of Trigonometric FunctionsFirasco100% (13)

- PLC Introduction: Programmable Logic Controller BasicsДокумент3 страницыPLC Introduction: Programmable Logic Controller Basicssreekanthtg007Оценок пока нет

- Ug1085 Zynq Ultrascale TRMДокумент1 158 страницUg1085 Zynq Ultrascale TRMLeandros TzanakisОценок пока нет

- AAL1 and Segmentation and Reassembly LayerДокумент18 страницAAL1 and Segmentation and Reassembly Layeroureducation.inОценок пока нет

- openPDC DM-Tools Usage Examples GuideДокумент5 страницopenPDC DM-Tools Usage Examples GuidealantmurrayОценок пока нет

- Monico Gen. 2 Gateway Datasheet PDFДокумент2 страницыMonico Gen. 2 Gateway Datasheet PDFRicardo OyarzunОценок пока нет

- Wilo Fire Fighting BrochureДокумент20 страницWilo Fire Fighting BrochureAkhmad Darmaji DjamhuriОценок пока нет

- Atlas Copco Compressed Air Manual: 8 EditionДокумент25 страницAtlas Copco Compressed Air Manual: 8 EditionRajОценок пока нет

- 5367227Документ2 страницы5367227aliha100% (1)

- Temperarura4 PDFДокумент371 страницаTemperarura4 PDFmario yanezОценок пока нет

- Recurrent Neural Network-Based Robust NonsingularДокумент13 страницRecurrent Neural Network-Based Robust NonsingularDong HoangОценок пока нет

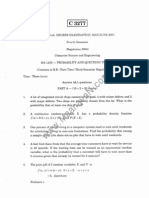

- PQT MJ07Документ6 страницPQT MJ07Raguraman BalajiОценок пока нет

- Ldp-105m150 Moso Test ReportДокумент17 страницLdp-105m150 Moso Test ReportzecyberОценок пока нет

- MBA (Travel & Tourism) 1st Year Sylabus 2020-21 - 28th SeptДокумент34 страницыMBA (Travel & Tourism) 1st Year Sylabus 2020-21 - 28th SeptHimanshuОценок пока нет

- HM130 5Документ1 страницаHM130 5AntonelloОценок пока нет