
DATA CENTER LAN MIGRATION GUIDE

Data Center LAN Migration Guide

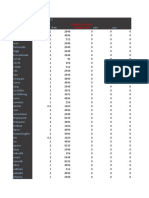

Table of Contents

Chapter 1: Why Migrate to Juniper
- Introduction to the Migration Guide
- Audience
- Data Center Architecture and Guide Overview
- Why Migrate?
- Scaling Is Too Complex with Current Data Center Architectures
- The Case for a High Performing, Simplified Architecture
- Why Juniper?
- Other Considerations

Chapter 2: Pre-Migration Information Requirements
- Pre-Migration Information Requirements
- Technical Knowledge and Education

Chapter 3: Data Center Migration - Trigger Events and Deployment Processes
- How Migrations Begin
- Trigger Events for Change and Their Associated Insertion Points
- Considerations for Introducing an Alternative Network Infrastructure Provider
- Trigger Events, Insertion Points, and Design Considerations
- IOS to Junos OS Conversion Tools
- Data Center Migration Insertion Points: Best Practices and Installation Tasks
- New Application/Technology Refresh/Server Virtualization Trigger Events
- Design Options and Best Practices: New Application/Technology Refresh/Server Virtualization Trigger Events
- Network Challenge and Solutions for Virtual Servers
- Network Automation and Orchestration
- Data Center Consolidation Trigger Event
- Best Practices: Designing the Upgraded Aggregation/Core Layer
- Best Practices: Upgraded Security Services in the Core
- Aggregation/Core Insertion Point Installation Tasks
- Consolidating and Virtualizing Security Services in the Data Center: Installation Tasks
- Business Continuity and Workload Mobility Trigger Events
- Best Practices Design for Business Continuity and HADR Systems
- Best Practices Design to Support Workload Mobility Within and Between Data Centers
- Best Practices for Incorporating MPLS/VPLS in the Data Center Network Design
- Six Process Steps for Migrating to MPLS
- Completed Migration to a Simplified, High-Performance, Two-Tier Network
- Juniper Professional Services

Chapter 4: Troubleshooting
- Troubleshooting
- Introduction
- Troubleshooting Overview
- OSI Layer 1: Physical Troubleshooting
- OSI Layer 2: Data Link Troubleshooting
- Virtual Chassis Troubleshooting
- OSI Layer 3: Network Troubleshooting
- OSPF
- VPLS Troubleshooting
- Multicast
- Quality of Service/Class of Service (CoS)
- OSI Layer 4-7: Transport to Application Troubleshooting
- Tools
- Troubleshooting Summary

Chapter 5: Summary and Additional Resources
- Summary
- Additional Resources
- Data Center Design Resources
- Training Resources
- Juniper Networks Professional Services
- About Juniper Networks

Copyright 2010, Juniper Networks, Inc.


Table of Figures

- Figure 1: Multitier legacy data center LAN
- Figure 2: Simpler two-tier data center LAN design
- Figure 3: Data center traffic flows
- Figure 4: Collapsed network design delivers increased density, performance, and reliability
- Figure 5: Junos OS - The power of one
- Figure 6: The modular Junos OS architecture
- Figure 7: Junos OS lowers operations costs across the data center
- Figure 8: Troubleshooting with Service Now
- Figure 9: Converting IOS to Junos OS using I2J
- Figure 10: The I2J input page for converting IOS to Junos OS
- Figure 11: Inverted U design using two physical servers
- Figure 12: Inverted U design with NIC teaming
- Figure 13: EX4200 top-of-rack access layer deployment
- Figure 14: Aggregation/core layer insertion point
- Figure 15: SRX Series platform for security consolidation
- Figure 16: Workload mobility alternatives
- Figure 17: Switching across data centers using VPLS
- Figure 18: Transitioning to a Juniper two-tier high-performance network


Chapter 1: Why Migrate to Juniper


Introduction to the Migration Guide

IT has become integral to business success in virtually all industries and markets. Today's data center is the centralized repository of computing resources that enables enterprises to meet their business objectives. With the advent of cloud computing and applications based on service-oriented architecture (SOA), data center traffic flows and performance requirements have changed considerably. In addition, increased mobility, unified communications, compliance requirements, virtualization, the sheer number of connecting devices, and shifting network security boundaries present new challenges to today's data center managers. Architecting data centers around old traffic patterns and outdated security models is inefficient and results in lower performance, unnecessary complexity, difficulty in scaling, and higher cost. A simplified, cloud-ready, two-tier data center design is needed to meet these new challenges without any compromise in performance.

In theory, migration to such a data center network can take place at any time. In practice, however, most enterprises will not disrupt a production data center except during limited time windows for scheduled maintenance and business continuity testing. Fortunately, within this constraint, migration to a simpler two-tier design can begin at various insertion points in an existing legacy data center architecture and proceed in a controlled way.

Juniper's Data Center LAN Migration Guide identifies the most common trigger events at which migration to a simplified design can take place, together with design considerations at each network layer for a successful migration. The guide is segmented into two parts, one for each of two audiences. Business decision makers will find Chapter 1, "Why Migrate to Juniper," most relevant. Technical decision makers will find Chapters 2 and 3 most relevant, particularly Chapter 3, which covers the data center trigger events that can stimulate a transition and the corresponding insertion points, designs, and best practices associated with pre-install, install, and post-install tasks.

Audience

While much of the high-level information in this document will be useful to anyone making strategic decisions about a data center LAN, this guide is targeted primarily at:
- Data center network and security architects evaluating the feasibility of new approaches in network design
- Data center network planners, engineers, and operators designing and implementing new data center networks
- Data center managers, IT managers, and network and security managers planning and evaluating data center infrastructure and security requirements

Data Center Architecture and Guide Overview

One of the primary ways to increase data center efficiency is to simplify the infrastructure. Most data center networks in place today are based on a three-tier architecture. A simplified two-tier design, made possible by the enhanced performance and more efficient packaging of today's Ethernet switches, reduces cost and complexity and increases efficiency without compromising performance.

During the 1990s, Ethernet switches became the basic building block of enterprise campus network design. Networks were typically built in a three-tier hierarchical tree structure to compensate for switch performance limitations. Each tier performed a different function and used different form factors, port densities, and throughputs to handle its workload. The same topology was deployed when Ethernet moved into the data center, displacing Systems Network Architecture (SNA), DECnet, and Token Ring designs.


Figure 1: Multitier legacy data center LAN (diagram of a 3-tier legacy network: WAN edge and data center interconnect; Ethernet core; aggregation layer; access layer; servers, NAS, FC storage, and FC SAN)

This multitiered architecture, shown in Figure 1, worked well in a client/server world where traffic flowed primarily north-south, and oversubscription ratios at the tiers closest to the endpoints (including servers and storage) could be high. However, traffic flows and performance requirements have changed considerably with the advent of SOA-based applications, increased mobility, Web 2.0, unified communications, compliance requirements, and the sheer number of devices connecting to the corporate infrastructure. Building networks today to accommodate traffic patterns that are 5 to 10 years old is not optimal and results in lower performance, unnecessary complexity, and higher cost. A new data center network design is needed to maximize IT investment and to scale easily to support the new applications and services a high-performance enterprise requires to stay competitive. According to Gartner, "Established LAN design practices were created for an environment of limited switch performance. Today's high-capacity switches allow new design approaches, thus reducing cost and complexity in campus and data center LANs. The three-tier concept can be discarded, because all switch ports can typically deliver rich functionality without impacting performance."¹
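Oversubscription can be made concrete with a little arithmetic. The sketch below is a minimal illustration, not a Juniper tool, and rests on two assumptions. First, it uses the common definition of a switch's oversubscription ratio: total server-facing (downlink) bandwidth divided by total uplink bandwidth. Second, it treats the worst-case ratio for traffic that must climb several tiers as the product of the per-tier ratios. The port counts and the 4:1 aggregation figure are hypothetical.

```python
import math


def oversubscription_ratio(downlink_ports: int, downlink_gbps: float,
                           uplink_ports: int, uplink_gbps: float) -> float:
    """Server-facing capacity divided by uplink capacity for one switch.

    1.0 means the switch is non-blocking toward the next tier; larger values
    mean more endpoints compete for the same uplink bandwidth.
    """
    uplink = uplink_ports * uplink_gbps
    if uplink <= 0:
        raise ValueError("uplink capacity must be positive")
    return (downlink_ports * downlink_gbps) / uplink


def end_to_end_oversubscription(per_tier_ratios: list[float]) -> float:
    """Worst-case ratio for traffic that must cross every listed tier.

    Assumption: contention compounds multiplicatively, as when every server
    behind every switch transmits upward at line rate at the same time.
    """
    return math.prod(per_tier_ratios)


if __name__ == "__main__":
    # Hypothetical access switch: 48 x 1GbE server ports, 2 x 10GbE uplinks.
    access = oversubscription_ratio(48, 1, 2, 10)  # 48 Gbps / 20 Gbps
    # Hypothetical aggregation switch, assumed to be 4:1 toward the core.
    aggregation = 4.0
    total = end_to_end_oversubscription([access, aggregation])
    print(f"access {access:.1f}:1, access plus aggregation {total:.1f}:1")
```

Run as a script, this prints `access 2.4:1, access plus aggregation 9.6:1`. Under this simplified model, each tier that traffic must cross adds one more factor to the product.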

¹ Neil Rikard, "Minimize LAN Switch Tiers to Reduce Cost and Increase Efficiency," Gartner Research, ID Number G00172149, November 17, 2009


Figure 2: Simpler two-tier data center LAN design (diagram labels: WAN edge and data center interconnect: MX Series; aggregation/core: EX82XX, SRX5800; access layer: EX4200 Virtual Chassis, EX4500, EX82XX; servers, NAS, FC storage, and FC SAN)

Juniper Networks offers a next-generation data center solution, shown in Figure 2, which delivers:
- Simplified design for high performance and ease of management
- Scalable services and infrastructure to meet the needs of a high-performance enterprise
- Virtualized resources to increase efficiency

This two-tier data center LAN architecture provides a more elastic and more efficient network that can also scale easily. This guide covers the key considerations in migrating an existing three-tier data center network to a simplified, cloud-ready, two-tier design.

From a practical perspective, most enterprises won't initiate a complete redesign of an existing, operational data center. However, several events, such as bringing a new application or service online or consolidating data centers, require additions to the existing data center infrastructure. We call these common events, at which migration can begin, trigger events. Trigger events generate changes in design at a given network layer, which we call an insertion point. Chapter 3 of this guide covers the best practices and steps involved in migration at each of the insertion points presented by a specific trigger event. By following these steps and practices, it is possible to extend migration to other legacy network tiers and continue toward a simplified two-tier Juniper infrastructure over time.

In summary, this Data Center LAN Migration Guide describes:
- Pre-migration information requirements
- Migration process overview and design considerations
- Logical migration steps and Juniper best practices for transitioning each network layer insertion point
- Troubleshooting steps
- Additional resources


Why Migrate?

IT continues to become more tightly integrated with business across all industries and markets. Technology is the means by which enterprises can provide better access to information in near real time or real time to satisfy customer needs while simultaneously driving new efficiencies. However, today's enterprise network infrastructures face growing scalability, agility, and security challenges. These stem from factors such as increased collaboration with business partners, greater workforce mobility, and the sheer proliferation of users with smart mobile devices who require constant access to information and services. These infrastructure challenges are seriously compounded when growth is combined with the trend toward data center consolidation. What is needed is a new network infrastructure that is more elastic, more efficient, and able to scale easily.

Scalability is a high priority, because much of the change facing businesses today is likely to arrive as requirements for more storage, more processing power, and more flexibility. Recent studies by firms such as IDC suggest that over the next 5 to 10 years global enterprises will focus their investments and resources on lowering costs while continuing to look for new growth areas. Industry analysts have identified several key data center business initiatives that align with these directions:
- Data center consolidation: Enterprises combine data centers as a result of a merger or acquisition to reduce cost as well as to centralize and consolidate resources.
- Virtualization: Server virtualization, currently the most prevalent example of virtualization, is used to increase utilization of CPU resources, provide flexibility, and deliver on-demand services that scale easily.
- Cloud computing: Pooling resources within a cloud provides a cost-efficient way to reconfigure, reclaim, and reuse resources to deliver responsive services.
- I/O convergence or consolidation: Ethernet and Fibre Channel are consolidated over a single wire on the server side.
- Virtual Desktop Infrastructure (VDI): Applications run on centralized servers to reduce operational costs and provide greater flexibility.

These key initiatives all revolve around creating greater data center efficiencies. While meeting these business requirements, solutions must remain flexible, and scalable systems must remain easy to manage, so that all potential cost savings can be realized. In today's data center, applications are constantly being introduced, updated, and retired. Demand for services is unpredictable and ever changing. Remaining responsive while staying cost efficient is a significant resource management challenge, and adding resources should be a last resort because it increases the cost basis for service production and delivery. The ability to dynamically reconfigure, reclaim, and reuse resources positions the data center to address today's responsiveness and efficiency challenges effectively. Furthermore, existing three-tier architectures are built around a client/server model that is less relevant in today's application environment. Clearly, a new data center LAN design is needed to adapt to changing network dynamics, overcome the complexity of scaling the current multitiered architecture, and capitalize on the benefits of high-performance platforms and a simplified design.


Figure 3: Data center traffic flows (diagram titled "Network topologies should mirror the nature of the traffic they transport"; annotation: up to 70% of traffic flows east-west)

Applications built on SOA and those delivered in the software as a service (SaaS) model require an increasing number of interactions among servers in the data center. These technologies generate a significant amount of server-to-server (east-west) traffic; in fact, up to 70% of data center LAN traffic is between servers. Increased adoption of virtualization, where shared resources such as a server pool are used at greater capacity to improve efficiency, may produce additional server traffic. Today's network topologies need to mirror the nature of the traffic they transport. Existing three-tier architectures were not designed to handle server-to-server traffic without sending it up and back down through multiple tiers. This is inherently inefficient, because each hop adds latency, which in turn degrades performance. The effect is most pronounced for real-time applications such as unified communications and in industries that demand high performance, such as financial trading.
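The extra hops can be counted directly. The following minimal sketch makes several simplifying assumptions. Each topology is modeled as an undirected graph with one link per tier, with no redundant paths and no Virtual Chassis. The two servers attach to access switches in different aggregation blocks. Breadth-first search then finds the shortest path between them. All device names are hypothetical. Hop count is only a proxy for latency, since per-hop delay varies with platform and load.

```python
from collections import deque


def switch_hops(links, src, dst):
    """Return (switch_count, path) for the shortest path from src to dst.

    `links` is a list of undirected (a, b) connections. Every node strictly
    between the two endpoints on the path is counted as a switch hop.
    """
    graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)

    # Breadth-first search records each node's predecessor.
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            break
        for nxt in sorted(graph.get(node, ())):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    if dst not in prev:
        raise ValueError(f"no path from {src} to {dst}")

    # Walk predecessors back from dst to rebuild the path.
    path = []
    node = dst
    while node is not None:
        path.append(node)
        node = prev[node]
    path.reverse()
    return len(path) - 2, path


# Hypothetical single-path topologies: the servers sit under different access
# switches, which in the three-tier case sit under different aggregation switches.
THREE_TIER = [("srv-a", "acc-1"), ("acc-1", "agg-1"), ("agg-1", "core-1"),
              ("core-1", "agg-2"), ("agg-2", "acc-2"), ("acc-2", "srv-b")]
TWO_TIER = [("srv-a", "acc-1"), ("acc-1", "core-1"),
            ("core-1", "acc-2"), ("acc-2", "srv-b")]

if __name__ == "__main__":
    for name, topo in (("three-tier", THREE_TIER), ("two-tier", TWO_TIER)):
        hops, path = switch_hops(topo, "srv-a", "srv-b")
        print(f"{name}: {hops} switches via {' -> '.join(path)}")
```

Under these assumptions, the east-west flow crosses five switches in the three-tier layout and three in the two-tier layout.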

Scaling Is Too Complex with Current Data Center Architectures

Simply deploying ever more servers, storage, and devices in a three-tier architecture to meet demand significantly increases network complexity and cost. In many cases, it isn't possible to add more devices because of space, power, cooling, or throughput constraints. Even when it is possible, the resulting network is often difficult and time-consuming to manage because of its size and scope. It is also inherently inefficient: it has been estimated that as much as 50% of all ports in a typical data center are used to connect switches to each other rather than for the more important task of connecting storage to servers and applications to users. Additionally, large Layer 2 domains using Spanning Tree Protocol (STP) are prone to failure and poor performance. Similarly, commonly deployed data center technologies such as multicast don't perform consistently at scale across tiers and devices.

Legacy security services may not scale easily and are often deployed inefficiently in a data center LAN because security is difficult to incorporate into a legacy, multitiered design. Security blades bolted into switches at the aggregation layer consume excessive power and space, impact performance, and don't protect virtualized resources. Another challenge of legacy security service appliances is their limited performance scalability, which may fall far below the throughput requirements of most high-performance enterprises consolidating applications or data centers. The ability to cluster firewalls together as a single logical entity, increasing scalability without adding management complexity, is another important consideration.

Proprietary systems may also limit further expansion through vendor lock-in to low-performance equipment. Different operating systems at each layer may add to the complexity of operating and scaling the network. This complexity is costly, limits flexibility, increases the time it takes to provision new capacity or services, and restricts the dynamic allocation of resources for services such as virtualization.
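The port-efficiency point follows from a simple accounting rule. Every switch-to-switch link consumes a port at both ends, while a server connection consumes only one switch port. The minimal sketch below applies that rule to a list of links. Its deliberately tiny topology is hypothetical: two core, two aggregation, and two access switches, with four servers per access switch. It is not meant to reproduce the 50% estimate, since real data centers attach far more servers to each switch.

```python
def port_usage(links, switches):
    """Return (interswitch_ports, server_ports, interswitch_share).

    `links` is a list of (end_a, end_b) cables; `switches` is the set of
    device names that are switches. Any other name is treated as an endpoint.
    """
    interswitch = server = 0
    for a, b in links:
        on_switch = sum(end in switches for end in (a, b))
        if on_switch == 2:
            interswitch += 2  # a switch-to-switch link uses a port on each switch
        elif on_switch == 1:
            server += 1       # only the switch end of an endpoint link is counted
    used = interswitch + server
    share = interswitch / used if used else 0.0
    return interswitch, server, share


# Hypothetical redundant three-tier block (names are illustrative only).
SWITCHES = {"core-1", "core-2", "agg-1", "agg-2", "acc-1", "acc-2"}
LINKS = (
    [("core-1", "core-2"), ("agg-1", "agg-2")]
    + [(agg, core) for agg in ("agg-1", "agg-2") for core in ("core-1", "core-2")]
    + [(acc, agg) for acc in ("acc-1", "acc-2") for agg in ("agg-1", "agg-2")]
    + [("acc-1", f"srv-{i}") for i in range(4)]
    + [("acc-2", f"srv-{i}") for i in range(4, 8)]
)

if __name__ == "__main__":
    inter, srv, share = port_usage(LINKS, SWITCHES)
    print(f"{inter} inter-switch ports, {srv} server ports, {share:.0%} inter-switch")
```

In this toy layout, 20 of the 28 used ports (about 71%) connect switches to each other. Attaching more servers per access switch lowers the share, while adding tiers or redundant uplinks raises it.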


The Case for a High Performing, Simplified Architecture

Enhanced, high-performance LAN switch technology can help meet these scaling challenges. According to Network World, "Over the next few years, the old switching equipment needs to be replaced with faster and more flexible switches. This time, speed needs to be coupled with lower latency, abandoning spanning tree and support for the new storage protocols. Networking in the data center must evolve to a unified switching fabric."²

New switching technology, such as that found in the Juniper Networks EX Series Ethernet Switches, has caught up to meet or surpass the demands of even the most high-performance enterprise. Thanks to specially designed application-specific integrated circuits (ASICs) that perform in-device switching functions, enhanced switches now offer throughput capacity of more than one terabit per second (Tbps) with numerous GbE and 10GbE ports, vastly improving performance and reducing the number of uplink connections. Some new switches also provide built-in virtualization that reduces the number of devices that must be managed, yet can scale rapidly with growth. By providing much greater performance, enhanced switches also make it possible to collapse unnecessary network tiers, moving toward a new, simplified network design. Similarly, scalable, enhanced security devices can be added to complement such a design, providing security services throughout the data center LAN.

A simplified, two-tier data center LAN design can lower costs without compromising performance. Built on high-performance platforms, a collapsed design requires fewer devices, thereby reducing both capital outlay and the operational costs of managing the data center LAN. Having fewer network tiers also decreases latency and increases performance, which yields additional cost savings and enables wider support for high-bandwidth applications such as unified communications. Despite having fewer devices, a simplified design still offers high availability (HA), with key devices deployed in redundant pairs and dual-homed to upstream devices. Additional HA comes from features such as redundant switching fabrics, dual power supplies, and the other resilience capabilities of enhanced platforms.
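Aggregate capacity figures such as "more than one terabit per second" are usually the sum of all port speeds, and vendors commonly count both directions of a full-duplex link. A minimal sketch of that arithmetic follows. The port mixes are hypothetical examples, not the specification of any EX Series model. The full-duplex doubling is the common industry convention rather than something this guide states.

```python
def switching_capacity_gbps(ports_by_speed: dict[float, int],
                            full_duplex: bool = True) -> float:
    """Sum of port speeds, doubled when both directions are counted.

    `ports_by_speed` maps a port speed in Gbps to the number of such ports.
    A non-blocking switch needs internal fabric capacity at least this large.
    """
    one_way = sum(speed * count for speed, count in ports_by_speed.items())
    return one_way * 2 if full_duplex else one_way


if __name__ == "__main__":
    # Hypothetical port mixes, for illustration only.
    examples = {
        "48 x GbE + 4 x 10GbE": {1: 48, 10: 4},
        "64 x 10GbE": {10: 64},
    }
    for label, ports in examples.items():
        gbps = switching_capacity_gbps(ports)
        print(f"{label}: {gbps:g} Gbps full duplex ({gbps / 1000:g} Tbps)")
```

Under this convention, 64 hypothetical 10GbE ports reach 1.28 Tbps. Quoting the one-way figure instead (`full_duplex=False`) halves every number, so it is worth checking which convention a datasheet uses before comparing platforms.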

Figure 4: Collapsed network design delivers increased density, performance, and reliability (diagram comparing a multi-tier legacy network with a 2-tier design)

² Robin Layland, Layland Consulting, "10G Ethernet shakes Net Design to the Core / Shift from three- to two-tier architectures accelerating," Network World, September 14, 2009


Two-Tier Design Facilitates Cloud Computing

By simplifying the design, sharing resources, and integrating security, a two-tier design also enables the enterprise to take advantage of the benefits of cloud computing. Cloud computing delivers on-demand services to any point on the network without requiring the acquisition or provisioning of location-specific hardware and software. These cloud services are delivered via a centrally managed, consolidated infrastructure that has been virtualized. Standard data center elements such as servers, appliances, storage, and other networking devices can be arranged in resource pools that are shared securely across multiple applications, users, departments, or any other logical grouping. The resources are dynamically allocated to accommodate the changing capacity requirements of different applications and to improve asset utilization. This type of on-demand service and infrastructure simplifies management, reduces operating and ownership costs, and allows services to be provisioned with unprecedented speed. Reduced application and service delivery times mean that the enterprise can capitalize on opportunities as they occur.

Achieving Power Savings and Operating Efficiencies

Fewer devices require less power, which in turn reduces cooling requirements; together these add up to substantial power savings. For example, a simplified design can offer more than 39% power savings over a three-tier legacy architecture. Ideally, a common operating system should be used on all data center LAN devices to reduce errors, decrease training costs, ensure consistent features, and thus lower the cost of operating the network.
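A power-savings percentage of this kind is a simple ratio of total power before and after the redesign. The Python sketch below shows that arithmetic only; the device counts, per-device wattages, and the 0.5 cooling overhead (0.5 W of cooling per watt of IT load) are hypothetical placeholders, not Juniper or measured figures, and the model assumes cooling load scales proportionally with IT load.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceGroup:
    """A tier of identical devices (hypothetical inventory entry)."""
    count: int
    watts_each: float


def total_power_w(groups, cooling_overhead=0.5):
    """IT load plus cooling; assumes cooling scales linearly with IT load."""
    it_load = sum(g.count * g.watts_each for g in groups)
    return it_load * (1 + cooling_overhead)


def savings_percent(legacy, simplified, cooling_overhead=0.5):
    """Percentage reduction in total power from the legacy to the simplified design."""
    before = total_power_w(legacy, cooling_overhead)
    after = total_power_w(simplified, cooling_overhead)
    return 100 * (before - after) / before


# Hypothetical inventories, not measured or published figures
legacy = [DeviceGroup(40, 300), DeviceGroup(4, 2500), DeviceGroup(2, 4000)]  # access, aggregation, core
simplified = [DeviceGroup(40, 300), DeviceGroup(2, 4500)]                   # access, collapsed core

print(f"{savings_percent(legacy, simplified):.1f}% less power")
```

Because cooling is modeled as proportional to IT load, the cooling factor cancels out of the percentage; it only changes the absolute watt totals used for capacity planning.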

Consolidating Data Centers

Due to expanding services, enterprises often have more than one data center. Virtualization technologies like server migration and application load balancing require multiple data centers to be virtually consolidated into a single, logical data center. Locations need to be transparently interconnected with technologies such as virtual private LAN service (VPLS) so that they interoperate and appear as one. All this is possible with a new, simplified data center LAN design from Juniper Networks.

However, as stated earlier, Juniper recognizes that it is impractical to migrate an existing, operational, three-tier production data center LAN to a simpler two-tier design all at once, regardless of the substantial benefits. Instead, migration can begin as a result of any of the following trigger events:

• Addition of a new application or service
• Refresh cycle
• Server virtualization migration
• Data center consolidation
• Business continuity and workload mobility initiatives
• Data center core network upgrade
• Higher performance and scalability for security services

The design considerations and steps for initiating migration from any of these trigger events are covered in detail in Chapter 3: Data Center Migration - Trigger Events and Deployment Processes.

Why Juniper?

Juniper delivers high-performance networks that are open to and embrace third-party partnerships to lower total cost of ownership (TCO) as well as to create flexibility and choice. Juniper is able to provide this based on its extensive investment in software, silicon, and systems:

• Software: Juniper's investment in software starts with the Juniper Networks Junos operating system. Junos OS offers the advantage of one operating system with one release train and one modular architecture across the enterprise portfolio. This results in feature consistency and simplified management throughout all platforms in the network.
• Silicon: Juniper is one of the few network vendors that invests in ASICs optimized for Junos OS to maximize performance and resiliency.
• Systems: The combination of the investment in ASICs and Junos OS produces high-performance systems that simultaneously scale connectivity, capacity, and the control capability needed to deliver new applications and business processes on a single infrastructure that also reduces application and service delivery time.

Juniper Networks has been delivering a steady stream of network innovations for more than a decade. Juniper brings this innovation to a simplified data center LAN solution built on four core principles: simplify, share, secure, and automate. Creating a simplified infrastructure with shared resources and secure services delivers significant advantages over other designs. It helps lower costs, increase efficiency, and keep the data center agile enough to accommodate any future business changes or technology infrastructure requirements.

• Simplify the architecture: Consolidating legacy siloed systems and collapsing inefficient tiers results in fewer devices, a smaller operational footprint, and simplified management from a single pane of glass.
• Share the resources: Segmenting the network into simple, logical, and scalable partitions with privacy, flexibility, high performance, and quality of service (QoS) enables network agility to rapidly adapt to an increasing number of users, applications, and services.
• Secure the data flows: Integrating scalable, virtualized security services into the network core provides benefits to all users and applications. Comprehensive protection secures data flows into, within, and between data centers. It also provides centralized management and the distributed dynamic enforcement of application and identity-aware policies.
• Automate network operations at each step: An open, extensible software platform reduces operational costs and complexity, enables rapid scaling, minimizes operator errors, and increases reliability through a single network operating system. A powerful network application platform with innovative applications enables network operators to leverage Juniper or third-party applications for simplifying operations and scaling application infrastructure to improve operational efficiency.

Juniper's data center LAN architecture embodies these principles and enables high-performance enterprises to build next-generation, cloud-ready data centers. For information on building the cloud-ready data center, please refer to: www.juniper.net/us/en/solutions/enterprise/data-center.

Other Considerations

It is interesting to note that even as vendors introduce new product lines, the legacy three-tier architecture remains the reference architecture for data centers. This legacy three-tier architecture retains the same limitations in terms of scalability and increased complexity.

Additionally, migrating to a new product line, even with an incumbent vendor, may require adopting a new OS, modifying configurations, and replacing hardware. The potential operational impact of introducing new hardware is a key consideration for insertion into an existing data center infrastructure, regardless of the platform provider. Before implementing at any layer of the network, it is sound practice to test interoperability and feature consistency in terms of availability and implementation. Any enterprise weighing migration from its existing platform to an incumbent vendor's new platform should also evaluate moving toward a simpler, high-performing Juniper-based solution, which can deliver substantial incremental benefits. (See Chapter 3: Data Center Migration - Trigger Events and Deployment Processes for more details about introducing a second switching infrastructure vendor into an existing single-vendor network.)

In summary, migrating to a simpler data center design enables an enterprise to improve the end-user experience and scale without complexity, while also driving down operational costs.

Chapter 2: Pre-Migration Information Requirements

Pre-Migration Information Requirements

Migrating toward a simplified design requires a certain level of familiarity with the following Juniper solutions:

• Juniper Networks Junos operating system
• Juniper Networks EX Series Ethernet Switches and MX Series 3D Universal Edge Routers
• Juniper Networks SRX Series Services Gateways
• Juniper Networks Network and Security Manager, STRM Series Security Threat Response Managers, and Junos Space network management solutions

The Juniper Networks Cloud-Ready Data Center Reference Architecture communicates Juniper's conceptual framework and architectural philosophy in creating data center and cloud computing networks robust enough to serve the range of customer environments that exist today. It can be downloaded from: www.juniper.net/us/en/solutions/enterprise/data-center/simplify/#literature.

Technical Knowledge and Education

This Migration Guide assumes some experience with Junos OS and its rich tool set, which will help simplify not only the data center LAN migration but also ongoing network operations. A brief overview of Junos OS is provided in the following section. Juniper also offers a comprehensive series of Junos OS workshops. Standardization of networking protocols should ease the introduction of Junos OS into the data center, since the basic constructs are similar.

Juniper Networks offers a rich curriculum of introductory and advanced courses on all of its products and solutions. Learn more about Juniper's free and fee-based online and instructor-led hands-on training offerings at: www.juniper.net/us/en/training/technical_education.

Additional education may be required for migrating security services such as firewall and intrusion prevention system (IPS). If needed, Juniper Networks Professional Services can provide access to industry-leading IP experts to help with all phases of the design, planning, testing, and migration process. These experts are also available as training resources, to help with project management, risk assessment, and more. The full suite of Juniper Networks Professional Services offerings can be found at: www.juniper.net/us/en/products-services/consulting-services.

Junos OS Overview

Enterprises deploying legacy-based solutions today are most likely familiar with the number of different operating systems (OS versions) running on switching, security, and routing platforms. This can result in feature inconsistencies, software instability, and time-consuming fixes and upgrades. It's not uncommon for a legacy data center to be running many different versions of a switching OS, which may increase network downtime and require greater time, effort, and cost to manage the network. From its beginning, Juniper set out to create an operating system that addressed these common problems. The result is Junos OS, which offers one consistent operating system across all of Juniper's routing, switching, and security devices.

Figure 5: Junos OS - The power of one (one OS, one release track, and one modular architecture spanning Juniper security, routing, and switching platforms from branch to core, including the SRX Series, J Series, M Series, MX Series, T Series, and EX2200 through EX8216 platforms, managed with NSM and Junos Space)

Junos OS serves as the foundation of a highly reliable network infrastructure and has been at the core of the world's largest service provider networks for over 10 years. Junos OS offers the same carrier-class performance and reliability to enterprise data center LANs of any size. Through open, standards-based protocols and an API, Junos OS can also be customized to meet enterprise-specific requirements. What sets Junos OS apart from other network operating systems is the way it is built: one operating system (OS) delivered in one software release train, with one modular architecture. Feature consistency across platforms and one predictable release of new features ensure compatibility throughout the data center LAN. This reduces network management complexity, increases network availability, and enables faster service deployment, lowering TCO and providing greater flexibility to capitalize on new business opportunities. The consistent user experience and automated tool sets of Junos OS make planning and training easier and day-to-day operations more efficient, allowing for faster changes. Further, integrating new software functionality protects not just hardware investments, but also an organization's investment in internal systems, practices, and knowledge.

Junos OS Architecture

The Junos OS architecture is a modular design conceived for flexible yet stable innovation across many networking functions and platforms. The architecture's modularity and well-defined interfaces streamline new development and enable complete, holistic integration of services.

Figure 6: The modular Junos OS architecture (open management interfaces such as the CLI, scripts, J-Web, NSM/Junos Space, and a toolkit, above a control plane with kernel, routing, interface, and management modules; a services plane with service applications and services interfaces; and a data plane handling packet forwarding over the physical interfaces)

The advantages of modularity reach beyond the stable, evolutionary design of the operating system software. For example, the process modules of the Junos OS architecture run independently in their own protected memory space, so one module cannot disrupt another. The architecture also separates control and forwarding functions to support predictable high performance with powerful scalability. This separation also hardens Junos OS against distributed denial-of-service (DDoS) attacks. The modularity of Junos OS is integral to the high reliability, performance, and scalability delivered by its software design. It enables unified in-service software upgrade (ISSU), graceful Routing Engine switchover (GRES), and nonstop routing.

Automated Scripting with Junoscript Automation

With Junoscript Automation, experienced engineers can create scripts that reflect their own organization's needs and procedures. The scripts can be used to flag potential errors in basic configuration elements such as interfaces and peering. The scripts can also automate network troubleshooting and quickly detect, diagnose, and fix problems as they occur. In this way, new personnel running the scripts benefit from their predecessors' long-term knowledge and expertise. Because operator error is the most common cause of network outages, networks using Junoscript Automation can increase productivity, reduce OpEx, and improve availability. For more detailed information on Junos Script Automation, please see: www.juniper.net/us/en/community/junos.
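The kind of configuration check described above can be illustrated with a small sketch that audits a configuration for missing interface descriptions and incomplete BGP peering. On-box Junos scripts of this era are written in XSLT or SLAX, so this Python code is not a Junos script: the dictionary layout, the `audit_config` function, and the rule that a BGP neighbor must sit on a directly connected subnet are all illustrative assumptions (iBGP sessions between loopbacks, for example, would legitimately fail that rule).

```python
import ipaddress


def audit_config(config: dict) -> list[str]:
    """Return findings for a hypothetical dictionary-style device configuration."""
    findings = []
    connected = []  # subnets of locally configured interface addresses

    for name, intf in config.get("interfaces", {}).items():
        if not intf.get("description"):
            findings.append(f"interface {name}: missing description")
        if "address" in intf:
            connected.append(ipaddress.ip_interface(intf["address"]).network)

    for peer in config.get("bgp_peers", []):
        neighbor = peer["neighbor"]
        if "peer_as" not in peer:
            findings.append(f"bgp neighbor {neighbor}: missing peer-as")
        # Simplifying assumption: single-hop eBGP peers must be directly connected
        nbr = ipaddress.ip_address(neighbor)
        if not any(nbr in net for net in connected):
            findings.append(f"bgp neighbor {neighbor}: not on a directly connected subnet")

    return findings


sample_config = {
    "interfaces": {
        "ge-0/0/0": {"description": "uplink to core", "address": "10.0.0.1/30"},
        "ge-0/0/1": {"address": "10.0.1.1/30"},
    },
    "bgp_peers": [
        {"neighbor": "10.0.0.2", "peer_as": 65001},
        {"neighbor": "10.9.9.9"},
    ],
}

for finding in audit_config(sample_config):
    print(finding)
```

Encoding each rule as a small, named check keeps the script easy to extend as an organization adds its own conventions, which is the knowledge-capture benefit the paragraph describes.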

A key benefit of using Junos OS is lower TCO as a result of reduced operational challenges and improved operational productivity at all levels in the network.

Critical Categories of Enterprise Network Operational Costs

| Cost category | Reduction with Junos OS* | Basis |
|---|---|---|
| Switch and router downtime costs | 27% | Reduction in frequency and duration of unplanned network events |
| Switch and router maintenance and support costs | 54% | A planned events category |
| Switch and router deployment time costs | 25% | The adding infrastructure task |
| Unplanned switch and router events resolution costs | 40% | The time needed to resolve unplanned network events |
| Overall switch and router network operations costs | 41% | The combined total savings associated with planned, unplanned, planning and provisioning, and adding infrastructure tasks |

*Relative to a baseline for all network operating systems. Multiple network operating systems diminish efficiency [3].

Figure 7: Junos OS lowers operations costs across the data center

An independent, commissioned study conducted by Forrester Consulting [3] (www.juniper.net/us/en/reports/junos_tei.pdf) found that the use of Junos OS and Juniper platforms produced a 41% reduction in overall operations costs for network operational tasks, including planning and provisioning, deployment, and planned and unplanned network events.

Juniper Platform Overview

The ability to migrate from a three-tier network design to a simpler two-tier design with increased performance, scalability, and simplicity is predicated on the availability of hardware-based services found in networking platforms such as the EX Series Ethernet Switches, MX Series 3D Universal Edge Routers, and SRX Series Services Gateways. A consistent and unified view of the data center, campus, and branch office networks is provided by Juniper's single pane of glass management platforms, including the recently introduced Junos Space. The following section provides a brief overview of the capabilities of Juniper's platforms. All of the Junos OS-based platforms highlighted provide feature consistency throughout the data center LAN and lower TCO.

EX4200 Switch with Virtual Chassis Technology

Typically deployed at the access layer in a data center, the Juniper Networks EX4200 Ethernet Switch provides chassis-class high availability features and high-performance throughput in a pay-as-you-grow, one rack unit (1 U) switch. Depending on the size of the data center, the EX4200 may also be deployed at the aggregation layer. Offering flexible cabling options, the EX4200 can be located at the top of a rack or end of a row. Several different port configurations are available, providing up to 48 wire-speed, non-blocking 10/100/1000 ports with full or partial Power over Ethernet (PoE). Despite its small size, this high-performance switch also offers multiple GbE or 10GbE uplinks to the core, eliminating the need for an aggregation layer. And because of its small size, it takes less space, requires less power and cooling, and costs less to deploy and to keep spares for.

[3] "The Total Economic Impact of Junos Network Operating Systems," a commissioned study conducted by Forrester Consulting on behalf of Juniper Networks, February 2009.

Up to 10 EX4200 line switches can be connected, configured, and managed as one single logical device through built-in Virtual Chassis technology. The actual number deployed in a single Virtual Chassis instance depends upon the physical layout of your data center and the nature of your traffic. Connected via a 128 Gbps backplane, a Virtual Chassis can be composed of EX4200 switches within a rack or row, or it can use a 10GbE connection anywhere within a data center or across data centers up to 40 km apart.

Juniper's Virtual Chassis technology enables virtualization at the access layer, offering three key benefits:

1. It reduces the number of managed devices by up to a factor of 10.
2. The network topology now closely maps to the traffic flow. Rather than sending inter-server traffic up to an aggregation layer and then back down in order to send it across the rack, it's sent directly east-to-west, reducing the latency for these transactions. This also more easily facilitates workload mobility when server virtualization is deployed.
3. Since the network topology now maps to the traffic flows directly, the number of uplinks required can be reduced.

The Virtual Chassis also delivers best-in-class performance. According to testing done by Network World (see full report at www.networkworld.com/slideshows/2008/071408-juniper-ex4200.html), the EX4200 offers the lowest latency of any Ethernet switch they had tested, making the EX4200 an optimal solution for high-performance, low-latency, real-time applications. EX4200 performance testing done by Network Test in May 2010 also demonstrates the low latency, high performance, and high availability capabilities of the EX4200 line; the results are viewable at http://networktest.com/jnprvc. When multiple EX4200 platforms are connected in a Virtual Chassis configuration, they offer the same software high availability as traditional chassis-based platforms.
Each Virtual Chassis has a pre-elected master and backup Routing Engine, with synchronized routing tables and routing protocol states for rapid failover should the master switch fail. The EX4200 line also offers fully redundant power and cooling. To further lower TCO, Juniper includes core routing features such as OSPF and RIPv2 in the base software license, providing a no-incremental-cost option for deploying Layer 3 at the access layer. In every deployment, the EX4200 reduces network configuration burdens and measurably improves performance for server-to-server communications in SOA, Web services, and other distributed application designs. For more information, refer to the EX4200 Ethernet Switch data sheet for a complete list of features, benefits, and specifications at: www.juniper.net/us/en/products-services/switching/ex-series.
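A Virtual Chassis pre-elects a master and a backup Routing Engine from its members. The Python sketch below models that election for illustration. The ranking order (highest mastership priority, then the member that was master at the previous boot, then the longest time as a Virtual Chassis member, then the lowest MAC address) reflects the mastership-election criteria in Juniper's EX Series documentation as we understand them; treat it as an assumption and confirm it against the documentation for your Junos release. The `Member` class, its fields, and the default priority of 128 are hypothetical modeling choices.

```python
from dataclasses import dataclass
from typing import Optional

MAX_MEMBERS = 10  # EX4200 Virtual Chassis limit cited in this guide


@dataclass(frozen=True)
class Member:
    """Hypothetical model of one Virtual Chassis member switch."""
    member_id: int
    mac: str                  # e.g. "00:1f:12:00:00:01"
    priority: int = 128       # mastership priority (1-255); default value assumed
    was_master: bool = False  # master at the previous boot
    vc_uptime_s: int = 0      # time spent as a Virtual Chassis member


def election_key(m: Member) -> tuple:
    """Sort key: the best mastership candidate sorts first."""
    return (
        -m.priority,                      # higher priority wins
        not m.was_master,                 # previous master wins ties
        -m.vc_uptime_s,                   # longest membership wins ties
        int(m.mac.replace(":", ""), 16),  # lowest MAC breaks final ties
    )


def elect(members: list[Member]) -> tuple[Member, Optional[Member]]:
    """Return (master, backup); the backup is the runner-up by the same criteria."""
    if not 1 <= len(members) <= MAX_MEMBERS:
        raise ValueError(f"a Virtual Chassis holds 1 to {MAX_MEMBERS} members")
    ranked = sorted(members, key=election_key)
    backup = ranked[1] if len(ranked) > 1 else None
    return ranked[0], backup


members = [
    Member(0, "00:1f:12:00:00:05", priority=255),
    Member(1, "00:1f:12:00:00:02", priority=255),
    Member(2, "00:1f:12:00:00:01"),
]
master, backup = elect(members)
print(f"master: member {master.member_id}, backup: member {backup.member_id}")
```

In the example, members 0 and 1 share the highest priority, so the lower MAC address decides which becomes master; member 2, left at the assumed default priority, becomes neither master nor backup.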

EX4500 10GbE Switch

The Juniper Networks EX4500 Ethernet Switch delivers a scalable, compact, high-performance platform for supporting high-density 10 gigabit per second (10 Gbps) data center top-of-rack deployments, as well as data center, campus, and service provider aggregation deployments. The Junos OS-based EX4500 is a 48-port wire-speed switch in a two rack unit (2 U) form factor whose ports can be provisioned as either Gigabit Ethernet (GbE) or 10GbE ports. The 48 ports are allocated as 40 fixed ports in the base unit and 8 ports on optional uplink modules. The EX4500 delivers 960 Gbps throughput (full duplex) for both Layer 2 and Layer 3 protocols.

For enterprises introducing 10GbE into their racks, the EX4500 can be used to add 10GbE-attached servers, iSCSI, and network-attached storage (NAS) with minimal impact to the current switching infrastructure. The EX4500 is also on Juniper's roadmap to support Virtual Chassis fabric technology. For smaller data centers, the EX4500 can be deployed as the core layer switch, aggregating 10GbE uplinks from EX4200 Virtual Chassis configurations in the access layer. Back-to-front and front-to-back cooling options ensure consistency with server designs for hot and cold aisle deployments. Juniper plans to add support to the EX4500 for Converged Enhanced Ethernet (CEE) and Fibre Channel over Ethernet (FCoE) in upcoming product releases. Refer to the EX4500 Ethernet Switch data sheet for more information at: www.juniper.net/us/en/products-services/switching/ex-series/ex4500/#literature.
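The 960 Gbps figure follows from the port count: 48 ports at 10 Gbps, counted in both directions for full duplex. The short Python check below reproduces that arithmetic; the function name is hypothetical, and it assumes every port runs at 10 Gbps, which is the case the throughput figure describes.

```python
def switching_capacity_gbps(ports: int, port_speed_gbps: float, full_duplex: bool = True) -> float:
    """Aggregate capacity: ports x speed, doubled when both directions are counted."""
    directions = 2 if full_duplex else 1
    return ports * port_speed_gbps * directions


base_ports, uplink_ports = 40, 8  # EX4500 port allocation described above
total_ports = base_ports + uplink_ports
print(total_ports, switching_capacity_gbps(total_ports, 10))
```

Counting both directions is the usual data-sheet convention; the one-way figure is half, 480 Gbps.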

EX2500 10GbE Switch

The Juniper Networks EX2500 Ethernet Switch is designed for high-density 10GbE data center top-of-rack applications where high performance and low latency are key requirements. Its latency of approximately 700 nanoseconds makes the EX2500 ideal for delay-sensitive applications such as high-performance server clusters and financial applications. Note that the EX2500 is not Junos OS-based. Refer to the EX2500 Ethernet Switch data sheet for more information at: www.juniper.net/us/en/products-services/switching/ex-series/ex2500/#literature.

EX8200 Line of Ethernet Switches

The Juniper Networks EX8200 line of Ethernet switches is a high-performance chassis platform designed for the high throughput that a collapsed core layer requires. This highly scalable platform supports up to 160,000 media access control (MAC) addresses, 64,000 access control lists (ACLs), and wire-rate multicast replication. The EX8200 line may also be deployed as an end-of-rack switch for those enterprises requiring a dedicated modular chassis platform. As with the EX4200, the advanced architecture and capabilities of the EX8200 line accelerate migration toward a simplified data center design.

The EX8200-40XS line card brings 10GbE to the access layer for end-of-row configurations. This line card will deliver 25 percent greater density per chassis and consume half the power of competing platforms, reducing rack space and management costs. The EX8200 line is expected to add Virtual Chassis support later in 2010, with additional features being added in early 2011. With the new 40-port line card, the EX8200 line with Virtual Chassis technology will enable a common fabric of more than 1200 10GbE ports.

The most fundamental challenge that data center managers face is the limitation of the physical plant. In this environment, taking every possible step to minimize power draw for the required functionality becomes a critical goal. For data center operators searching for the most capable equipment in terms of functionality for the minimum in rack space, power, and cooling, the EX8200 line delivers higher performance and scalability in less rack space with lower power consumption than competing platforms. Designed for carrier-class HA, each EX8200 line model also features fully redundant power and cooling, fully redundant Routing Engines, and N+1 redundant switch fabrics. For more information, refer to the EX8200 line data sheets for a complete list of features and specifications at: www.juniper.net/us/en/products-services/switching/ex-series.
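The figure of more than 1200 10GbE ports can be sanity-checked with simple arithmetic. The Python sketch below assumes two EX8216 chassis (16 line-card slots each) joined in one Virtual Chassis and fully populated with 40-port EX8200-40XS cards; the chassis count and slot population are our assumptions, not a configuration stated in this guide, and the `fabric_ports` function is hypothetical.

```python
LINE_CARD_PORTS = 40  # EX8200-40XS, per the text above


def fabric_ports(chassis_slots: list[int], ports_per_card: int = LINE_CARD_PORTS) -> int:
    """Total 10GbE ports when every slot in every member chassis holds a 40-port card."""
    return sum(slots * ports_per_card for slots in chassis_slots)


# Assumption: two fully populated EX8216 chassis (16 line-card slots each)
print(fabric_ports([16, 16]))
```

Changing the slot list models other mixes, for example `[16, 8]` for one EX8216 and one EX8208.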

MX Series 3D Universal Edge Routers

It's important to have a consistent set of powerful edge services routers to interconnect the data center to other data centers and out to dispersed users. The MX Series with the new Trio chipset delivers cost-effective, powerful scaling that allows enterprises to support application-level replication for disaster recovery, or virtual machine migration between data centers, by extending VLANs across data centers using mature, proven technologies such as VPLS. As observed in the Day 3 Data Center Interconnect session of the 2010 MPLS Ethernet World Conference, "VPLS is the most mature technology today to map DCI requirements." Delivering carrier-class HA, each MX Series model features fully redundant power and cooling, fully redundant Routing Engines, and N+1 redundant switch fabrics. For more information, refer to the MX Series data sheet for a complete list of features, benefits, and specifications at: www.juniper.net/us/en/products-services/routing/mx-series.

Consolidated Security with SRX Series Services Gateways

The SRX Series Services Gateways replace numerous legacy security solutions by providing a suite of services in one platform, including firewall, IPS, and VPN services. Supporting the concept of zones, the SRX Series can provide granular security throughout the data center LAN. The SRX Series can be virtualized and consolidated into a single pool of security services via clustering. The SRX Series can scale up to 10 million concurrent sessions, allowing it to scale massively and rapidly to meet throughput demands without additional devices, multiple cumbersome device configurations, or multiple operating systems.

The highly scalable performance capabilities of the SRX Series platform, as with the EX Series switches, lay the groundwork for a simplified data center infrastructure and enable enterprises to easily scale to meet future growth requirements. This is in contrast to legacy integrated firewall modules and standalone appliances, which have limited performance scalability. Even when multiple firewall modules are used, the aggregate performance may still be far below the throughput required for consolidating applications or data centers, where aggregate firewall throughput of more than 100 Gbps may be required. The lack of clustering capabilities in some legacy firewalls not only limits performance scalability but also increases management and network complexity.

The SRX Series provides HA features such as redundant power supplies and cooling fans, as well as redundant switch fabrics. This robust platform also delivers carrier-class throughput. According to Network World, the SRX5600 is "the industry's fastest firewall and IPS by a large margin." For more information, refer to the SRX Series data sheet for a complete list of features, benefits, and specifications at: www.juniper.net/us/en/products-services/security/srx-series.
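Zone-based enforcement is what lets the SRX Series apply granular policy inside the data center. The Python sketch below models the general idea: interfaces are assigned to zones, policies are keyed by a (from-zone, to-zone) pair and evaluated top-down with the first match winning, and traffic that matches no policy is denied. The zone names, interface names, application labels, and the `evaluate` function are hypothetical, and this is a simplified teaching model, not SRX configuration syntax or the device's actual lookup implementation.

```python
from dataclasses import dataclass

# Hypothetical interface-to-zone assignments
ZONES = {
    "ge-0/0/1.0": "web",
    "ge-0/0/2.0": "app",
    "ge-0/0/3.0": "db",
}


@dataclass(frozen=True)
class Policy:
    name: str
    applications: frozenset  # application labels this policy matches
    action: str              # "permit" or "deny"


# Policies per (from-zone, to-zone) pair, evaluated top-down
POLICIES = {
    ("web", "app"): [Policy("web-to-app-http", frozenset({"http", "https"}), "permit")],
    ("app", "db"): [
        Policy("block-telnet", frozenset({"telnet"}), "deny"),
        Policy("app-to-db-sql", frozenset({"mysql"}), "permit"),
    ],
}


def evaluate(ingress: str, egress: str, application: str) -> tuple[str, str]:
    """Return (action, policy name) for a new session; anything unmatched is denied."""
    key = (ZONES[ingress], ZONES[egress])
    for policy in POLICIES.get(key, []):
        if application in policy.applications:
            return policy.action, policy.name  # first match wins
    return "deny", "default-deny"


for args in [("ge-0/0/1.0", "ge-0/0/2.0", "https"), ("ge-0/0/1.0", "ge-0/0/3.0", "mysql")]:
    print(args, "->", evaluate(*args))
```

Keying policies by zone pair keeps each rule list short, and a path such as web-to-database stays closed unless someone explicitly writes a policy for it.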

Altor Networks Virtual Firewall (VF 4.0)

To address the unique security challenges of virtualized networks and data centers, Juniper has integrated Altor's virtual firewall and cloud protection software into its security portfolio to provide network and application visibility and granular control over virtual machines (VMs). Combining a powerful stateful virtual firewall with virtual intrusion detection (IDS), VM Introspection, and automated compliance assessment, Altor's comprehensive solution for protecting virtualized workloads slipstreams easily into Juniper environments featuring any of the following:

• SRX Series Services Gateways
• STRM Series Security Threat Response Managers
• IDP Series Intrusion Detection and Prevention Appliances

Altor's integrations focus on preserving customers' investment in Juniper security and extending it to the virtualized infrastructure with similar features, functionality, and enterprise-grade capabilities such as high performance, redundancy, and central management. Juniper customers can deploy Altor on the virtualized server and integrate security policies, logs, and related workflow into existing SRX Series, STRM Series, and IDP Series infrastructure. Customers benefit from layered, granular security without the management and OpEx overhead. Altor v4.0 will export firewall logs and inter-VM traffic flow information to STRM Series to deliver a single pane of glass for threat management. Customers who have deployed Juniper Networks IDP Series, along with management processes around threat detection and mitigation, can extend them to the virtualized server infrastructure with no additional CapEx investment.

Altor's upcoming enhancements with SRX Series and Junos Space continue the vision of delivering gapless security with a common management platform. Altor-SRX Series integration will ensure trust zone integrity is guaranteed to the last mile, which is particularly relevant in cloud and shared-infrastructure deployments. Altor's integration with Junos Space will bridge the gap between management of physical resources and virtual resources to provide a comprehensive view of the entire data center. Refer to the "Securing Virtual Server Environments with Juniper Networks and Altor Networks" solution brief for more: www.juniper.net/us/en/local/pdf/solutionbriefs/3510354-en.pdf.

MPLS/VPLS for Data Center Interconnect

The consolidation of network services increases the need for Data Center Interconnect (DCI). Resources in one data center are often accessed from one or more other data centers. Different business units, for example, may share information across multiple data centers via VPNs. Or compliance regulations may require that certain application traffic be kept on separate networks throughout data centers. Or businesses may need a real-time synchronized standby system to provide optimum HA in a service outage.

MPLS is a suite of protocols developed to add transport and virtualization capabilities to large data center networks. MPLS enables enterprises to scale their topologies and services. An MPLS network is managed using familiar protocols such as OSPF or Integrated IS-IS and BGP. MPLS provides complementary capabilities to standard IP routing. Moving to an MPLS network provides business benefits like improved network availability, performance, and policy enforcement. MPLS networks can be employed for a variety of reasons:

• Inter-Data Center Transport: To connect consolidated data centers to support mission-critical applications, for example, real-time mainframe replication or disk, database, or transaction mirroring.
• Virtualizing the Network Core: To logically separate network services, for example, providing different levels of QoS for certain applications or separating application traffic due to compliance requirements.
• Extending L2VPNs for Data Center Interconnect: To extend L2 domains across data centers using VPLS, for example, to support application mobility with virtualization technologies like VMware VMotion, or to provide resilient business continuity for HA by copying transaction information in real time to another set of servers in another data center.

The MX Series provides high-capacity MPLS and VPLS technologies. MPLS networks can also facilitate migration toward a simpler, highly scalable, and flexible data center infrastructure.
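VPLS makes the provider edge (PE) routers behave, from the data centers' point of view, like one distributed Ethernet switch: each VPLS instance learns source MAC addresses on local attachment circuits and on pseudowires to remote sites, floods frames with unknown destinations, and applies split horizon so that a frame received on one pseudowire is never forwarded onto another (the full mesh of pseudowires already reaches every site). The Python sketch below models that forwarding behavior for one VPLS instance on one PE. The class, port, and pseudowire names are hypothetical, MAC aging is omitted, and it is a teaching model rather than MX Series behavior or configuration.

```python
class VplsInstance:
    """Toy model of MAC learning and forwarding in one VPLS instance on one PE router."""

    def __init__(self, local_ports, pseudowires):
        self.local_ports = set(local_ports)  # attachment circuits toward local servers
        self.pseudowires = set(pseudowires)  # full mesh of pseudowires toward remote PEs
        self.mac_table = {}                  # learned MAC address -> interface

    def receive(self, src_mac, dst_mac, in_if):
        """Learn the source MAC, then return the set of interfaces the frame is sent out of."""
        self.mac_table[src_mac] = in_if
        known = self.mac_table.get(dst_mac)
        # Known unicast goes to one interface; unknown unicast and broadcast are flooded
        candidates = {known} if known is not None else self.local_ports | self.pseudowires
        out = candidates - {in_if}           # never send a frame back where it came from
        if in_if in self.pseudowires:
            out -= self.pseudowires          # split horizon: no pseudowire-to-pseudowire forwarding
        return out


vpls = VplsInstance(["ge-1/0/0", "ge-1/0/1"], ["pw-to-dc2", "pw-to-dc3"])
server_a, server_b = "00:00:00:00:00:0a", "00:00:00:00:00:0b"
print(sorted(vpls.receive(server_a, server_b, "ge-1/0/0")))   # flooded: B not yet learned
print(sorted(vpls.receive(server_b, server_a, "pw-to-dc2")))  # A was learned on ge-1/0/0
```

This is why a VM moved between data centers stays reachable: once it sends a frame, every PE relearns its MAC address on the new pseudowire or local port.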

Juniper's Unified Management Solution

Juniper provides three powerful management solutions for the data center LAN via its NSM and STRM Series platforms, as well as Junos Space. For more information on MPLS/VPLS, please refer to the Implementing VPLS for Data Center Interconnectivity Implementation Guide at: www.juniper.net/us/en/solutions/enterprise/data-center/simplify/#literature.

Network and Security Manager

NSM offers a single pane of glass to manage and maintain Juniper platforms as the network grows. It also helps configure and maintain consistent routing and security policies across the entire network, and it helps delegate roles and permissions. Delivered as a software application or a network appliance, NSM provides many benefits:

Centralized activation of routers, switches, and security devices
Granular role-based access and policies
Global policies and objects
Monitoring and investigative tools
Scalable and deployable solutions
Reliability and redundancy
Lower TCO

Copyright 2010, Juniper Networks, Inc.

The comprehensive NSM solution provides full life cycle management for all platforms in the data center LAN:

Deployment: Provides a number of options for adding device configurations to the database: importing a list of devices, discovering and importing deployed network devices, manually adding a device and its configuration in NSM, or having the device contact NSM to add its configuration to the database.

Configuration: Offers central configuration to view and edit all managed devices. Provides offline editing and modeling of device configurations. Facilitates the sharing of common configurations across devices via templates and policies. Provides configuration file management for backup, versioning, configuration comparisons, and more.

Monitoring: Provides centralized event log management with predefined and user-customizable reports. Provides tools for auditing log trends and finding anomalies. Provides automatic network topology creation using standards-based discovery of Juniper and non-Juniper devices based on configured subnets. Offers inventory management for device management interface (DMI)-enabled devices, and a Job Manager to view device operations performed by other team members.

Maintenance: Delivers a centralized Software Manager to track versions of software images for network devices. Other tools transform and validate data between user inputs and device-specific formats via DMI schemas.

Using open standards such as SNMP and system logging, NSM supports third-party network management solutions from IBM, Computer Associates, InfoVista, HP, EMC, and others. Refer to the Network and Security Manager data sheet for a complete list of features, benefits, and specifications: www.juniper.net/us/en/products-services/security/nsmcm.
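
NSM performs configuration comparisons internally; the sketch below only illustrates the underlying idea of comparing two archived versions of a device configuration, using Python's standard difflib module. The device name, version labels, and Junos-style configuration lines are hypothetical examples and do not reflect NSM's storage format or API.

```python
import difflib

def compare_config_versions(old: str, new: str, device: str) -> list[str]:
    """Return a unified diff between two archived versions of one device's configuration."""
    return list(
        difflib.unified_diff(
            old.splitlines(),
            new.splitlines(),
            fromfile=f"{device}/v1",  # hypothetical version labels
            tofile=f"{device}/v2",
            lineterm="",
        )
    )

# Hypothetical configuration snippets, for illustration only.
v1 = """set system host-name access-switch-01
set vlans web vlan-id 100"""
v2 = """set system host-name access-switch-01
set vlans web vlan-id 100
set vlans db vlan-id 200"""

for line in compare_config_versions(v1, v2, "access-switch-01"):
    print(line)
```

Lines prefixed with "+" were added in the newer version and lines prefixed with "-" were removed, which is the kind of change summary a configuration comparison is meant to surface before a change is rolled back or approved.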

STRM Series Security Threat Response Managers

Complementing Juniper's portfolio, the STRM Series offers a single pane of glass to manage security threats. It provides threat detection, event log management, and compliance, and gives IT efficient access to the following capabilities:

Log Management: Provides long-term collection, archival, search, and reporting of event logs, flow logs, and application data.

Security Information and Event Management (SIEM): Centralizes heterogeneous event monitoring, correlation, and management. Unrivaled data management greatly improves IT's ability to meet security control objectives.

Network Behavior Anomaly Detection (NBAD): Discovers aberrant network activities using network and application flow data to detect new threats that others miss.

Refer to the STRM Series data sheet for a complete list of features, benefits, and specifications: www.juniper.net/us/en/products-services/security/strm-series.
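
STRM's NBAD engine is proprietary. As a rough illustration of the general idea of flagging aberrant activity against a learned baseline, the sketch below applies a simple z-score test to per-interval flow counts. The function name, the single shared baseline, the threshold of three standard deviations, and the sample data are all assumptions chosen for illustration; this is not STRM's algorithm.

```python
from statistics import mean, stdev

def flag_anomalies(baseline: list[int], observed: dict[str, int], threshold: float = 3.0) -> list[str]:
    """Return hosts whose observed flow count is more than `threshold` standard deviations from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs at least two baseline points
    if sigma == 0:
        # A perfectly flat baseline: treat any deviation at all as anomalous.
        return [host for host, count in observed.items() if count != mu]
    return [host for host, count in observed.items() if abs(count - mu) / sigma > threshold]

# Hypothetical flows-per-minute history for a typical server, and current readings per host.
history = [120, 115, 130, 125, 118, 122, 127, 121]
current = {"10.1.1.10": 124, "10.1.1.11": 119, "10.1.1.12": 950}
print(flag_anomalies(history, current))
```

A production system would keep a separate baseline per host, application, and time of day rather than one shared history, but the comparison of current behavior against a learned norm is the same.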

Junos Space

Another of IT's challenges has been adding new services and applications to meet ever-growing demand. Historically, this has not been easy, requiring months of planning and allowing changes only in strict maintenance windows. Junos Space is a new, open network application platform designed for building applications that simplify network operations, automate support, and scale services. Organizations can take control of their own networks through self-written programs or third-party applications from the developer community. Because Junos Space is embodied in a number of appliances across Juniper's routing, switching, and security portfolio, an enterprise can seamlessly add new applications, devices, and device updates as they become available from Juniper and the developer community, without ever restarting the system, for full plug and play.

Several applications will be available on Junos Space throughout 2010. Junos Space applications introduced as of the first half of 2010 include:

Junos Space Virtual Control (expected availability in Q3 2010) allows users to monitor, manage, and control the virtual network environments that support virtualized servers deployed in the data center. Virtual Control provides a consolidated solution for network administrators to gain end-to-end visibility into, and control over, both virtual and physical networks from a single management screen. By enabling network-wide topology, configuration, and policy management, Virtual Control minimizes errors and dramatically simplifies data center network orchestration, while lowering total cost of ownership by providing operational consistency across the entire data center network. Virtual Control also improves business agility by accelerating server virtualization deployment. Juniper has also formed a new collaboration with VMware that takes advantage of its open APIs to achieve seamless orchestration across both physical and virtual network elements by leveraging Virtual Control. The combination of Junos Space Virtual Control and VMware vSphere provides automated orchestration between the physical and virtual networks, so that a change in the virtual network is seamlessly carried over to the physical network and vice versa.

Junos Space Ethernet Design (available now) is a Junos Space software application that enables end-to-end campus and data center network automation. Ethernet Design provides full automation, including configuration, provisioning, monitoring, and administration of large switch and router networks. Designed to enable rapid endpoint connectivity and operationalization of the data center, Ethernet Design uses best practice configurations and scalable workflows to scale data center operations with minimal operational overhead. It is a single pane of glass platform for end-to-end network automation that improves productivity via a simplified "create once, use extensively" configuration and provisioning model.

Junos Space Security Design (available now) enables fast, easy, and accurate enforcement of security state across the enterprise network. Security Design enables quick conversion of business intent to device-specific configuration, and it enables auto-configuration and provisioning through workflows and best practices to reduce the cost and complexity of security operations.

Service Now and Junos Space Service Insight (available now) consist of Junos Space applications that enable fast and proactive detection, diagnosis, and resolution of network issues. (See Automated Support with Service Now for more details.)

Junos Space Network Activate (expected availability Q4 2010) facilitates fast and easy setup of VPLS services and allows for full life cycle management of MPLS services.

In addition, the Junos Space Software Development Kit (SDK) will be released to enable development of a wide range of third-party applications covering all aspects of network management. Junos Space is designed to be open and provides northbound, standards-based APIs for integration with third-party data center and service provider solutions. Junos Space also includes DMI based on NETCONF, an IETF standard, which can enable management of DMI-compliant third-party devices. Refer to the following URL for more information on Junos Space applications: www.juniper.net/us/en/products-services/software/junos-platform/junos-space/applications.
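
Because DMI builds on NETCONF, it may help to see what a standard NETCONF request looks like. The sketch below builds a <get-config> RPC for the running configuration, using the base namespace defined in the NETCONF specification (RFC 6241) and Python's xml.etree.ElementTree. It only constructs the XML; opening the NETCONF session (typically over SSH on port 830) is outside the sketch, and the helper name and message-id value are illustrative assumptions, not part of Junos Space.

```python
import xml.etree.ElementTree as ET

# Base namespace for NETCONF protocol operations (RFC 6241).
NETCONF_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"
# Serialize this namespace as the default (xmlns="...") instead of an "ns0:" prefix.
ET.register_namespace("", NETCONF_NS)

def build_get_config_rpc(message_id: str, datastore: str = "running") -> str:
    """Build a NETCONF <get-config> RPC that requests the given datastore."""
    rpc = ET.Element(f"{{{NETCONF_NS}}}rpc", {"message-id": message_id})
    get_config = ET.SubElement(rpc, f"{{{NETCONF_NS}}}get-config")
    source = ET.SubElement(get_config, f"{{{NETCONF_NS}}}source")
    ET.SubElement(source, f"{{{NETCONF_NS}}}{datastore}")
    return ET.tostring(rpc, encoding="unicode")

print(build_get_config_rpc("101"))
```

Building the request with an XML library rather than string concatenation keeps the namespace and nesting correct, which matters because NETCONF servers reject malformed or un-namespaced operations.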

Automated Support with Service Now

Built on the Junos Space platform, Service Now delivers on Juniper's promise of network efficiency, agility, and simplicity by delivering service automation that leverages technology embedded in Junos OS. For devices running Junos OS 9.x and later releases, Service Now aids in troubleshooting for Juniper's J-Care Technical Services. Junos OS contains the scripts that provide device and incident information, which is relayed to the Service Now application where it is logged, stored, and, with the customer's permission, forwarded to Juniper Networks Technical Services for immediate action by the Juniper Networks Technical Assistance Center (JTAC). In addition to automated incident management, Service Now offers automated inventory management for all devices running Junos OS release 9.x and later. These two capabilities provide substantial time savings in the form of more network uptime and less time spent on administrative tasks such as inventory data collection. The result is lower operational expenses and streamlined operations, allowing key personnel to focus on the goals of the network rather than its maintenance, all of which enhances Juniper's ability to simplify the data center.

Figure 8: Troubleshooting with Service Now (diagram labels: AI Scripts Installed; JMB: Hardware, Software, Resources, Calibration; Customer Network; Service Now; Service Insight; Customer or Partner NOC; Internet; Gateway; Juniper Support System)
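
Service Now's incident and inventory automation applies to devices running Junos OS 9.x and later. If you check your own inventory against that cutoff, note that release strings such as "10.1R1.8" and "9.6R2.11" cannot be compared as plain text, because "10.1" sorts before "9.0" lexically. The sketch below compares the numeric major and minor parts instead. The helper names and inventory entries are hypothetical, and the parser only handles the common "major.minor" prefix of Junos OS release strings.

```python
import re

# Captures the leading "major.minor" of release strings such as "9.6R2.11" or "10.1R1.8".
RELEASE_RE = re.compile(r"^(\d+)\.(\d+)")

def parse_release(release: str) -> tuple[int, int]:
    """Return (major, minor) as integers so releases compare numerically."""
    match = RELEASE_RE.match(release)
    if match is None:
        raise ValueError(f"unrecognized Junos OS release string: {release!r}")
    return int(match.group(1)), int(match.group(2))

def meets_minimum_release(release: str, minimum: tuple[int, int] = (9, 0)) -> bool:
    """True if the release is at or above `minimum` (default 9.0, i.e. 9.x and later)."""
    return parse_release(release) >= minimum

# Plain string comparison gets this wrong: prints True even though 10.1 is newer than 9.0.
print("10.1R1.8" < "9.0")

# Hypothetical inventory: device name -> running Junos OS release.
inventory = {
    "core-router-01": "10.1R1.8",
    "access-switch-07": "9.6R2.11",
    "branch-router-03": "8.5R4.3",
}
eligible = sorted(name for name, rel in inventory.items() if meets_minimum_release(rel))
print(eligible)  # ['access-switch-07', 'core-router-01']
```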

The Service Insight application, available in Fall 2010 on the Junos Space platform, takes service automation to the next level by delivering proactive, customized support for networks running Juniper devices. While Service Now automates reactive support components such as incident and inventory management for efficient network management and maintenance, Service Insight brings a level of proactive, actionable network insight that helps manage risk, lower TCO, and improve application reliability. The first release of Service Insight will consist of the following features:

Targeted product bug notification: Proactive notification to the end user of any newly published bug that could impact network performance and availability, with an analysis of which devices could be vulnerable to the defect. This capability can help avoid network incidents caused by known product issues, and it saves the many hours of manual analysis otherwise needed to assess a bug's system-wide impact on a packet-switched network (PSN).

EOL/EOS reports: An on-demand view of the end of life (EOL), end of service (EOS), and end of engineering (EOE) status of devices and field-replaceable units (FRUs) in the network. This capability brings efficiency to network management operations and mitigates the risk of running obsolete network devices and/or software/firmware. With it, taking network inventory and assessing the impact of EOL/EOS announcements is reduced to the touch of a button, instead of a time-consuming analysis of equipment and software revision levels and compatibility matrices.
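
Service Insight performs these analyses as a Junos Space application. Purely to illustrate the two kinds of matching described above, the sketch below checks a device inventory against a bug record's list of affected releases, and classifies each device by per-model EOL and EOS dates. All class names, field names, model names, and dates are hypothetical, and real bug applicability usually depends on more than an exact model-and-release match.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class Device:
    name: str
    model: str
    release: str

@dataclass
class BugRecord:
    bug_id: str
    model: str
    affected_releases: frozenset[str]

def exposed_devices(inventory: list[Device], bug: BugRecord) -> list[str]:
    """Names of devices whose model and running release match the bug record."""
    return [d.name for d in inventory if d.model == bug.model and d.release in bug.affected_releases]

def lifecycle_report(inventory: list[Device], eol: dict[str, date], eos: dict[str, date], today: date) -> dict[str, str]:
    """Classify each device by its model's EOL/EOS dates; models with no announcement are 'supported'."""
    report = {}
    for d in inventory:
        if d.model in eos and eos[d.model] <= today:
            report[d.name] = "end of service"
        elif d.model in eol and eol[d.model] <= today:
            report[d.name] = "end of life"
        else:
            report[d.name] = "supported"
    return report

# Hypothetical inventory, bug record, and lifecycle dates.
inventory = [
    Device("core-router-01", "MODEL-A", "10.1R1.8"),
    Device("access-switch-07", "MODEL-B", "9.6R2.11"),
    Device("access-switch-08", "MODEL-B", "10.0R3.10"),
]
bug = BugRecord("HYPOTHETICAL-0001", "MODEL-B", frozenset({"9.6R2.11"}))
eol = {"MODEL-B": date(2010, 6, 30)}
eos = {"MODEL-B": date(2013, 6, 30)}

print(exposed_devices(inventory, bug))  # ['access-switch-07']
print(lifecycle_report(inventory, eol, eos, today=date(2010, 9, 1)))
```

Keeping the inventory as structured records is what makes both reports a quick lookup rather than a manual audit of revision levels.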

Chapter 3: Data Center Migration - Trigger Events and Deployment Processes

How Migrations Begin

Many enterprises have undertaken server, application, and data center consolidations to reduce costs and to increase the return on their IT investments. To continue their streamlining efforts, many organizations are also considering the use of cloud computing in their pooled, consolidated infrastructures. While migrating to a next-generation, cloud-ready data center design can theoretically take place at any time, most organizations will not disrupt a production facility except during a limited time window to perform scheduled maintenance and continuity testing, or for a compelling reason whose return justifies the investment and the work. In this chapter, we identify a series of such reasons, typically stimulated by trigger events, and describe how these events turn into transitions at various insertion points in the data center network. We also cover the best practices and steps involved in migration at each of the insertion points presented by a specific trigger event. By following these steps and practices, it is possible to extend migration to legacy network tiers and move safely toward a simplified data center infrastructure.

Trigger Events for Change and Their Associated Insertion Points