
VMware ESX 4.0 and PowerVault MD3000i - The Dell TechCenter

http://www.delltechcenter.com/page/VMware+ESX+4.0+and+PowerVau...


VMware ESX 4.0 and PowerVault MD3000i

This sample configuration for using ESX/ESXi 4.0 with Dell PowerVault MD3000i storage describes best practices for multipathing and load balancing of Internet SCSI (iSCSI) storage traffic. Both goals can be achieved by dedicating two NIC ports on the ESX host to iSCSI traffic and by using the Round Robin path-selection policy for iSCSI volumes. Please provide feedback at the bottom of this page if you find any errors or have any suggestions.

Sample Configuration

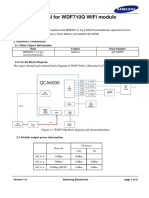

Figure 1 shows a sample configuration to use ESX/ESXi 4.0 host(s) with Dell PowerVault MD3000i storage.

An ESX/ESXi 4.0 host is connected to the PowerVault MD3000i through two NIC ports (vmnic1 and vmnic4) dedicated to iSCSI traffic. The vmnic1 and vmnic4 ports are connected to separate Gigabit Ethernet (GbE) switches, switch 1 and switch 2. Ethernet switch 1 is connected to controller 0, port 0 and controller 1, port 0. Ethernet switch 2 is connected to controller 0, port 1 and controller 1, port 1.
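With this cabling, each LUN has four possible paths, because each host NIC reaches one port on each controller through its switch. The short bash 4 sketch below models that reasoning. It is an illustration, not an ESX tool. It assumes, as described above, that port 0 of both controllers is on switch 1 and port 1 is on switch 2. A path is marked active when it leads to the controller that owns the LUN and standby otherwise, which matches the two-active, two-standby layout described under step 12 of this guide. Whether both active paths carry I/O depends on the path-selection policy.

```bash
#!/usr/bin/env bash
# Model of the Figure 1 cabling (requires bash 4+ for associative arrays).
# Assumption: a path exists only where the host NIC and the array port sit on
# the same switch: port 0 of each controller is on switch 1, port 1 on switch 2.

# Host iSCSI NIC -> Ethernet switch number, as described in the text.
declare -A NIC_SWITCH=([vmnic1]=1 [vmnic4]=2)

# Array port number -> Ethernet switch number (port 0 -> 1, port 1 -> 2).
port_switch() { echo $(( $1 + 1 )); }

# list_paths OWNER: print every NIC-to-controller-port path and mark it
# active if it leads to the controller that owns the LUN, standby otherwise.
list_paths() {
  local owner=$1 nic ctrl port state
  for nic in vmnic1 vmnic4; do
    for ctrl in 0 1; do
      for port in 0 1; do
        # Skip NIC/port combinations that are not on the same switch.
        [ "$(port_switch "$port")" -eq "${NIC_SWITCH[$nic]}" ] || continue
        if [ "$ctrl" -eq "$owner" ]; then state=active; else state=standby; fi
        echo "$nic -> controller $ctrl, port $port: $state"
      done
    done
  done
}

# Example: paths for a LUN owned by controller 0.
list_paths 0
```

For a LUN owned by controller 0, this prints four paths. The two through controller 0 (one per NIC) are active, and the two through controller 1 are standby.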


11/9/2009 3:14 PM


Dell PowerVault MD3000i Network Configuration

The Dell PowerVault MD3000i array comprises two active RAID controllers. However, at any given time only one RAID controller owns a virtual disk or logical unit (LUN). Each RAID controller has two GbE ports for iSCSI data traffic. By configuring controller 0, port 0 and controller 1, port 0 in one IP subnet and controller 0, port 1 and controller 1, port 1 in another IP subnet, both traffic isolation across physical Ethernet segments (using redundant Ethernet switches) and path redundancy can be achieved. For the purpose of this configuration, the PowerVault MD3000i iSCSI host ports are configured with the following IP configuration:

Controller 0, port 0: 192.168.130.101/255.255.255.0

Controller 0, port 1: 192.168.131.101/255.255.255.0

Controller 1, port 0: 192.168.130.102/255.255.255.0

Controller 1, port 1: 192.168.131.102/255.255.255.0
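The subnet layout above only works if each VMkernel iSCSI interface lands in the same subnet as the two array ports it should reach. As a minimal sketch, the bash functions below check this with plain integer arithmetic (address AND netmask). They use the VMkernel addresses 192.168.130.11 and 192.168.131.11 that this guide assigns in the host configuration steps. The function names are hypothetical helpers, not part of any Dell or VMware tool, and the parser assumes dotted-quad addresses without leading zeros.

```bash
#!/usr/bin/env bash
# Sketch: verify that each iSCSI VMkernel address shares a subnet with the
# MD3000i host ports it should reach. Pure arithmetic; runs on any bash.

# ip2int ADDR: convert a dotted-quad IPv4 address (no leading zeros) to an integer.
ip2int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# same_subnet IP1 IP2 NETMASK: exit status 0 if IP1 and IP2 share a subnet.
same_subnet() {
  local m
  m=$(ip2int "$3")
  [ $(( $(ip2int "$1") & m )) -eq $(( $(ip2int "$2") & m )) ]
}

MASK=255.255.255.0

# check VMK_IP PORT_IP...: report OK/FAIL for each array port.
check() {
  local vmk=$1 port
  shift
  for port in "$@"; do
    if same_subnet "$vmk" "$port" "$MASK"; then
      echo "OK   $vmk -> $port"
    else
      echo "FAIL $vmk -> $port"
    fi
  done
}

check 192.168.130.11 192.168.130.101 192.168.130.102   # VMkernel-iSCSI1
check 192.168.131.11 192.168.131.101 192.168.131.102   # VMkernel-iSCSI2
```

With the addresses in this guide, all four checks report OK. If a check reports FAIL, the interface is probably on the wrong subnet and that interface will not get a direct path to that port.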

Note: If you are using jumbo frames, enable them on the iSCSI data ports. In the Modular Disk Storage Manager (MDSM) interface, go to iSCSI > Configure iSCSI Host Ports. For each port, click Advanced Host Port Settings. In the Advanced Host Port Settings window, select the Enable jumbo frames check box, set the MTU size to 9000, and click OK.

iSCSI Switch Configuration

If you are using jumbo frames, enable them on every Ethernet switch port that carries iSCSI traffic, that is, on the ports connected to the ESX host(s) and to the PowerVault MD3000i.

iSCSI Storage Network Configuration on an ESXi Host

Multipathing: Two network adapters (vmnic1 and vmnic4) are used to provide path redundancy and load balancing for iSCSI storage traffic. As shown in Figure 2, vmnic1 is the uplink for vSwitch1 and vmnic4 is the uplink for vSwitch2. The VMkernel interfaces vmk1 and vmk2 are then attached to the software iSCSI initiator with the esxcli command.

The VMkernel-iSCSI1 interface is configured in the same IP subnet as PowerVault MD3000i controller 0, port 0 and controller 1, port 0. The VMkernel-iSCSI2 interface is configured in the same IP subnet as controller 0, port 1 and controller 1, port 1. This configuration provides two active paths to a LUN owned by either PowerVault MD3000i controller: one through VMkernel-iSCSI1 and one through VMkernel-iSCSI2. Setting a LUN's path-selection policy to Round Robin (VMware) load-balances iSCSI traffic across both active paths. The other two path-selection policies, Most Recently Used (MRU) and Fixed, do not offer load balancing.

The following steps are required to configure an ESX host as described in the setup in Figure 1:

1. Using the VI client, enable the ESX/ESXi software (SW) iSCSI initiator on the ESX host and assign an appropriate IQN.

2. In the SW iSCSI initiator properties, add the IP address of any PowerVault MD3000i host data port for dynamic discovery. Do not rescan the SW iSCSI adapter at this time.

3. Manually add the ESX/ESXi host to the PowerVault MD3000i, and create the host-to-virtual-disk mapping.

If you are not using jumbo frames:


4. Using the VI client GUI, create virtual switch vSwitch1 and uplink vmnic1.

5. Using the VI client GUI, create virtual switch vSwitch2 and uplink vmnic4.

6. Create a VMkernel port group on vSwitch1 named VMkernel-iSCSI1 with the IP configuration 192.168.130.11/255.255.255.0. Leave the gateway as the default management network gateway.

7. Create a VMkernel port group on vSwitch2 named VMkernel-iSCSI2 with the IP configuration 192.168.131.11/255.255.255.0. Leave the gateway as the default management network gateway.

8. Using the VI client GUI (Host > Configuration > Networking), note the VMkernel port names (vmkX). Then attach the VMkernel interfaces to the software iSCSI initiator:

a. $ esxcli swiscsi nic add -n vmk1 -d vmhbaXX

b. $ esxcli swiscsi nic add -n vmk2 -d vmhbaXX

where vmhbaXX is the vmhba number of the software iSCSI initiator, and vmk1 and vmk2 are the VMkernel ports you noted.

c. Rescan the SW iSCSI initiator.
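Steps 8a to 8c can also be prepared as a small dry-run script. The sketch below only prints commands, so you can review them before running them in the ESX service console. The adapter name vmhba33 and the vmk1/vmk2 names are example values (assumptions); replace them with the adapter and VMkernel ports you noted. The `esxcli swiscsi nic list -d` line is an optional check, run before the rescan, that both ports are bound.

```bash
#!/usr/bin/env bash
# Dry run: print the step 8 binding commands; nothing is executed.
# Usage: bind_cmds HBA VMK...   (vmhba33 below is a hypothetical example)
bind_cmds() {
  local hba=$1 vmk
  shift
  # Bind each VMkernel port to the software iSCSI adapter.
  for vmk in "$@"; do
    echo "esxcli swiscsi nic add -n $vmk -d $hba"
  done
  # Optional check: list the ports now bound to the adapter.
  echo "esxcli swiscsi nic list -d $hba"
  # Rescan so the adapter discovers paths through the bound ports.
  echo "esxcfg-rescan $hba"
}

bind_cmds vmhba33 vmk1 vmk2
```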

If you want to enable jumbo frames, follow these steps to set up the VMkernel interfaces instead. On the ESX host, or using the RCLI, issue the following commands:

4. Create two virtual switches, vSwitch1 and vSwitch2, and add uplinks vmnic1 and vmnic4 respectively:

a. $ esxcfg-vswitch -a vSwitch1

b. $ esxcfg-vswitch -a vSwitch2

c. $ esxcfg-vswitch -L vmnic1 vSwitch1

d. $ esxcfg-vswitch -L vmnic4 vSwitch2

5. Enable jumbo frames on the vSwitches:

a. $ esxcfg-vswitch -m 9000 vSwitch1

b. $ esxcfg-vswitch -m 9000 vSwitch2

6. Create the VMkernel port groups:

a. $ esxcfg-vswitch -A VMkernel-iSCSI1 vSwitch1

b. $ esxcfg-vswitch -A VMkernel-iSCSI2 vSwitch2

7. Create VMkernel interfaces for iSCSI traffic, and enable jumbo frames on each:

a. $ esxcfg-vmknic -a -i 192.168.130.11 -n 255.255.255.0 -m 9000 VMkernel-iSCSI1

b. $ esxcfg-vmknic -a -i 192.168.131.11 -n 255.255.255.0 -m 9000 VMkernel-iSCSI2

8. List the VMkernel NICs and note the VMkernel ports named vmkX. Make sure that the MTU size of the newly created vmkX ports is set to 9000:

a. $ esxcfg-vmknic -l

9. Attach the VMkernel interfaces to the software iSCSI initiator:

a. $ esxcli swiscsi nic add -n vmk1 -d vmhbaXX

b. $ esxcli swiscsi nic add -n vmk2 -d vmhbaXX

where vmhbaXX is the vmhba number of the software iSCSI initiator.

10. Rescan the SW iSCSI initiator:

a. $ esxcfg-rescan vmhbaXX

where vmhbaXX is the vmhba device of the SW iSCSI initiator.
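The jumbo-frame sequence in steps 4 to 10 is repetitive, so a dry-run generator can help keep names and addresses consistent. This bash sketch prints the same esxcfg-vswitch, esxcfg-vmknic, and esxcli commands for each NIC/subnet pair. It groups them per vSwitch rather than per step; the end state is the same. It assumes the hypothetical adapter name vmhba33 and that ESX assigns vmk1 and vmk2 in order, so confirm both with `esxcfg-vmknic -l` before binding.

The script also prints the largest ICMP payload that fits in an unfragmented 9000-byte frame: 9000 minus the 20-byte IPv4 header and the 8-byte ICMP header, or 8972 bytes. This value is commonly passed to `vmkping -s` when testing jumbo frames end to end. Check the options your vmkping build supports before relying on a particular flag set.

```bash
#!/usr/bin/env bash
# Dry run: print the jumbo-frame setup commands (steps 4-10); nothing is executed.
# Assumptions: HBA name is a hypothetical example, and vmkN is assigned in order.

MTU=9000
HBA=vmhba33              # hypothetical; use your software iSCSI vmhba
NETMASK=255.255.255.0

# index:uplink:VMkernel IP, one entry per iSCSI subnet (values from this guide)
PAIRS=(
  "1:vmnic1:192.168.130.11"
  "2:vmnic4:192.168.131.11"
)

# Largest ICMP payload in one unfragmented frame: MTU - IPv4 (20) - ICMP (8).
icmp_payload() { echo $(( $1 - 20 - 8 )); }

jumbo_cmds() {
  local entry n nic ip vs pg
  for entry in "${PAIRS[@]}"; do
    IFS=: read -r n nic ip <<< "$entry"
    vs="vSwitch$n"
    pg="VMkernel-iSCSI$n"
    echo "esxcfg-vswitch -a $vs"                                # create vSwitch
    echo "esxcfg-vswitch -L $nic $vs"                           # add uplink
    echo "esxcfg-vswitch -m $MTU $vs"                           # vSwitch MTU
    echo "esxcfg-vswitch -A $pg $vs"                            # port group
    echo "esxcfg-vmknic -a -i $ip -n $NETMASK -m $MTU $pg"      # VMkernel NIC
  done
  echo "esxcfg-vmknic -l"                                       # verify vmkX and MTU
  for entry in "${PAIRS[@]}"; do
    n=${entry%%:*}
    echo "esxcli swiscsi nic add -n vmk$n -d $HBA"              # bind to initiator
  done
  echo "esxcfg-rescan $HBA"
}

# Printed as a shell comment so the whole output can be reviewed as a script.
echo "# vmkping payload for MTU $MTU: $(icmp_payload "$MTU") bytes"
jumbo_cmds
```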

Follow these steps regardless of jumbo frame configuration:


11. Format the LUNs exposed to ESX as VMFS.

12. To configure the Round Robin multipathing policy using the VI client GUI, change the default path-selection policy of each LUN exposed to the ESX server to Round Robin (VMware). This enables load balancing over the two active paths to the LUN, which are the two paths through the controller that owns the LUN; the other two paths should be in standby.

a. Right-click the device and choose Manage Paths.

b. In the Path Selection drop-down list, select Round Robin. Before this selection is made, one path has a status of Active, another has a status of Active (I/O), and the other two paths are Stand by.

c. After Round Robin is selected, two paths have a status of Active (I/O) and the other two paths are Stand by.


d. Repeat the process for each iSCSI LUN presented to the ESX 4 server from the Dell PowerVault MD3000i.

Note: For more details on using iSCSI storage with ESX/ESXi 4.0, refer to the vSphere iSCSI configuration guide at http://vmware.com/pdf/vsphere4/r40/vsp_40_iscsi_san_cfg.pdf
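On hosts with many LUNs, step 12 can also be done from the service console. In ESX 4.0, `esxcli nmp device setpolicy` takes a device ID and a path selection plugin, and VMW_PSP_RR is the plugin behind Round Robin (VMware). The dry-run sketch below prints one command per device. The naa.* IDs are hypothetical placeholders; take the real IDs from `esxcli nmp device list`.

```bash
#!/usr/bin/env bash
# Dry run: print commands that set Round Robin on each listed device.
# The device IDs passed below are hypothetical placeholders.
rr_cmds() {
  local dev
  for dev in "$@"; do
    echo "esxcli nmp device setpolicy --device $dev --psp VMW_PSP_RR"
  done
  # Afterwards, list devices to confirm the new path selection policy.
  echo "esxcli nmp device list"
}

rr_cmds naa.hypothetical0001 naa.hypothetical0002
```

ESX 4.0 also provides `esxcli nmp satp setdefaultpsp`, which changes the default policy for every device claimed by a given SATP. Before using it, check in the `esxcli nmp device list` output which SATP claims the MD3000i LUNs, because the change affects all arrays handled by that SATP.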

Latest page update: made by ctompsett, Jun 12 2009, 11:11 AM EDT. Keyword tags: ESX 4.0, vSphere, MD3000i



Copyright 1999-2007 Dell Inc.
