
Proceedings of IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems, Seoul, Korea, August 20 - 22, 2008

TM3-1

Enhancement of Image Degraded by Fog Using Cost Function Based on Human Visual Model

Dongjun Kim, Changwon Jeon, Bonghyup Kang and Hanseok Ko

Abstract: In foggy weather conditions, images become degraded due to the presence of airlight, which is generated by the scattering of light by fog particles. In this paper, we propose an effective method to correct the degraded image by subtracting the estimated airlight map from the degraded image. The airlight map is generated using multiple linear regression, which models the relationship between regional airlight and the coordinates of the image pixels. Airlight is estimated using a cost function based on the human visual model, wherein a human is less sensitive to variations of the luminance in bright regions than in dark regions. For this purpose, the luminance image is employed for airlight estimation. The luminance image is generated by an appropriate fusion of the R, G, and B components. Representative experiments on real foggy images confirm significant enhancement in image quality over the degraded image.

I. INTRODUCTION

Fog is a phenomenon caused by tiny droplets of water in the air. Fog reduces visibility to less than 1 km. In foggy weather, images also become degraded by additive light from the scattering of light by fog particles. This additive light is called airlight.

There have been some notable efforts to restore images degraded by fog. The most common method for enhancing degraded images is histogram equalization. However, even though global histogram equalization is simple and fast, it is not suitable because the fog's effect on an image is a function of the distance between the camera and the object. Subsequently, a partially overlapped sub-block histogram equalization was proposed in [1]. However, the physical model of fog was not adequately reflected in this effort. While Narasimhan and Nayar were able to restore images using a scene-depth map [2], their method required two images taken under different weather conditions. Grewe and Brooks suggested a method to enhance pictures blurred by fog using wavelets [3]. Once again, this approach required several images to accomplish the enhancement. Polarization filtering has been used to reduce the fog's effect on images [4, 5]. It assumes that natural light is not polarized and that scattered light is polarized. However, this method does not guarantee significant improvement in images with dense fog. Oakley and Bu suggested a simple correction of contrast loss in foggy images [6]. In [6], a cost function is applied to the RGB channels in order to estimate the airlight from a color image. However, it assumes that airlight is uniform over the whole image.

In this paper, we improve the Oakley method [6] to make it applicable even when the airlight distribution is not uniform over the image. In order to estimate the airlight, a cost function based on the human visual model is applied to the luminance image. The luminance image is obtained by an appropriate fusion of the R, G, and B components. The airlight map is then estimated using least squares fitting, which models the relationship between regional airlight and the coordinates of the image pixels.

The structure of this paper is as follows. In Section II, we propose a method to estimate the airlight map and restore the fog image. We present experimental results and conclusions in Sections III and IV, respectively. The structure of the algorithm is shown in Fig. 1.

Fig. 1 Structure of the algorithm

II. PROPOSED ALGORITHM

A. Fog Effect on Image and Fog Model

The foggy image is degraded by airlight, which is caused by the scattering of light by fog particles in the air, as depicted in Fig. 2 (right).

Manuscript received May 1, 2008. Dongjun Kim, Changwon Jeon and Hanseok Ko (Corresponding author) are with School of Electrical Engineering, Korea University, Seoul, Korea. (e-mail: djkim@ispl.korea.ac.kr; cwjeon@ispl.korea.ac.kr; hsko@ispl.korea.ac.kr). Bonghyup Kang is with Samsung Techwin CO., LTD. (e-mail : bh47.kang@samsung.com)

Fig. 2 Comparison of the clear image (left) and the fog image (right)

978-1-4244-2144-2/08/$25.00 ©2008 IEEE

Airlight acts as an additional source of light, as modeled in [6] and in Eqn (1):

$$I'_{R,G,B} = I_{R,G,B} + \lambda_{R,G,B} \tag{1}$$

where $I'_{R,G,B}$ is the degraded image, $I_{R,G,B}$ is the original image, and $\lambda_{R,G,B}$ represents the airlight for the red, green, and blue channels. This relationship applies when the airlight is uniform throughout the whole image. However, the contribution of airlight is usually not uniform over the image because it is a function of the visual depth, which is the distance between the camera and the object. Therefore, the model can be modified to reflect the depth dependence as follows:

$$I'_{R,G,B}(d) = I_{R,G,B}(d) + \lambda_{R,G,B}(d) \tag{2}$$

where $d$ represents depth. Unfortunately, it is very difficult to estimate the depth from a single image taken in foggy weather, so we present an airlight map that models the relationship between the coordinates of the image pixels and the airlight. Since large particles such as those in fog and clouds scatter all visible wavelengths almost identically, the luminance component alone is used to estimate the airlight instead of estimating the R, G, and B components separately. The luminance image is obtained by a fusion of the R, G, and B components, that is, by transforming the color space from RGB to YCbCr. Eqn (2) can therefore be re-expressed as

$$Y'(i,j) = Y(i,j) + \lambda_Y(i,j) \tag{3}$$

where $Y'$ and $Y$ are the degraded and clear luminance images, respectively, at position $(i,j)$, and $\lambda_Y$ is the estimated airlight map for the luminance image. The resulting shift of mean(Y) can be confirmed in Fig. 3.

Fig. 3 Comparison of the Y Histogram

In order to restore the image blurred by fog, we need to estimate the airlight map and subtract it from the foggy image as follows:

$$Y(i,j) = Y'(i,j) - \lambda_Y(i,j) \tag{4}$$

In this model, $Y$ represents the restored image and $\lambda_Y$ is the estimated airlight map.

B. Region Segmentation

We estimate the airlight for each region and then model the relationship between the regional airlight and the coordinates within the image to generate the airlight map. In an image with varying depth, the contribution of airlight varies from region to region, so estimating the airlight per region reflects the variation of depth within the image. The image is segmented uniformly into regions to estimate the regional contribution of airlight.

Fig. 4 Region segmentation

C. Estimate Airlight

In order to estimate the airlight, we improved the cost function method in [6] using a compensation based on the human visual model. The airlight in Eqn (3) must be estimated to restore the image degraded by fog, and the human visual model is employed for this estimate. As described by Weber's law, a human is less sensitive to variations of luminance in bright regions than in dark regions:

$$\Delta S = k \frac{\Delta R}{R} \tag{5}$$

where $R$ is an initial stimulus, $\Delta R$ is the variation of the stimulus, $\Delta S$ is the variation of sensation, and $k$ is a constant. In foggy weather, when the luminance is already high, a human is insensitive to variations in the luminance. We can estimate the existing stimulus in the image signal by the mean of the luminance within a region. The variation between this and the foggy stimulus can be estimated by the standard deviation within the region. Thus the human visual model would estimate the variation of sensation as

$$\frac{\mathrm{STD}(Y)}{\mathrm{mean}(Y)} = \frac{\sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \bar{Y}\right)^2}}{\bar{Y}} \tag{6}$$

where $y_i$ is the luminance of the $i$-th of the $n$ pixels in the region and $\bar{Y}$ is the mean value of $Y$. Note that the value of Eqn (6) for a foggy image, STD(Y')/mean(Y'), is relatively small since the numerator is small and the denominator is large.
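The uniform region segmentation of Section II.B and the ratio of Eqn (6) can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the helper names (`luma_bt601`, `split_regions`, `weber_ratio`) are hypothetical, the grid size is a free parameter, and the luminance weights are an assumption, namely the common BT.601 studio-range RGB to YCbCr conversion, since the paper does not state which weights it uses.

```python
from statistics import mean, pstdev


def luma_bt601(r, g, b):
    """8-bit RGB -> luminance Y in the 16..235 range (BT.601 weights, assumed)."""
    return 16.0 + (65.481 * r + 128.553 * g + 24.966 * b) / 255.0


def split_regions(y_img, n_rows, n_cols):
    """Yield (centre_row, centre_col, pixels) for a uniform n_rows x n_cols grid.

    y_img is a list of rows of luminance values; the image is assumed to be
    at least n_rows high and n_cols wide so that no region is empty.
    """
    h, w = len(y_img), len(y_img[0])
    for a in range(n_rows):
        r0, r1 = a * h // n_rows, (a + 1) * h // n_rows
        for b in range(n_cols):
            c0, c1 = b * w // n_cols, (b + 1) * w // n_cols
            pixels = [y_img[i][j] for i in range(r0, r1) for j in range(c0, c1)]
            yield (r0 + r1 - 1) / 2.0, (c0 + c1 - 1) / 2.0, pixels


def weber_ratio(pixels):
    """STD(Y) / mean(Y) of Eqn (6), with the 1/n (population) standard deviation."""
    return pstdev(pixels) / mean(pixels)


if __name__ == "__main__":
    # Tiny hand-made luminance image, split into a 2 x 2 grid of regions.
    y_img = [
        [100, 100, 200, 200],
        [100, 100, 200, 200],
        [50, 150, 180, 180],
        [50, 150, 180, 180],
    ]
    for row, col, pixels in split_regions(y_img, 2, 2):
        print(row, col, weber_ratio(pixels))
```

A flat region gives a ratio of zero, which matches the observation that foggy regions (bright and low in contrast) produce small values of Eqn (6).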

The first cost term applies the ratio of Eqn (6) to the luminance after a candidate airlight $\lambda$ has been subtracted:

$$A(\lambda) = \frac{\mathrm{STD}(Y' - \lambda)}{\mathrm{mean}(Y' - \lambda)} \tag{7}$$

In Eqn (7), increasing $\lambda$ causes an increase in $A(\lambda)$, which means that a human can perceive the variation in the luminance. However, if the absolute value of the luminance is too small, the image is not only too dark, but the human visual sense also becomes insensitive to the variations in the luminance that still exist. To compensate for this, a second function is generated as follows:

$$B'(\lambda) = \mathrm{mean}(Y' - \lambda) \tag{8}$$

Eqn (8) carries information about the mean of the luminance. In a foggy image, the result of Eqn (8) is relatively large, and increasing $\lambda$ causes a decrease in $B'(\lambda)$, which means that the overall brightness of the image decreases. Functions (7) and (8) are on different scales. Function (8) is therefore re-scaled to produce Eqn (9), so that the difference $A(\lambda) - B(\lambda)$ is 0 when the input image is Ideal. Note that Ideal represents the ideal image having a uniform distribution from the minimum to the maximum of the luminance range. In general, the maximum value is 235 while the minimum value is 16.

$$B(\lambda) = \mathrm{mean}(Y' - \lambda)\,\frac{\mathrm{STD}(\mathrm{Ideal})}{\mathrm{mean}(\mathrm{Ideal})^{2}} \tag{9}$$

For a densely fogged image, the magnitude of $A(\lambda) - B(\lambda)$ is relatively large when $\lambda$ is small. Increasing $\lambda$ reduces $|A(\lambda) - B(\lambda)|$. If $\lambda$ is too large, $|A(\lambda) - B(\lambda)|$ increases again, which means the image becomes dark. The value $\hat{\lambda}$ satisfying Eqn (10) is the estimated airlight.

$$\hat{\lambda} = \arg\min_{\lambda}\left\{\,\left|A(\lambda) - B(\lambda)\right|\,\right\} \tag{10}$$

D. Estimate Airlight Map Using Multiple Linear Regression

Objects in the image are usually located at different distances from the camera. Therefore, the contribution of the airlight in the image also differs with depth. In most cases, the depth varies with the row or column coordinates of the image scene. We therefore model the relationship between the coordinates and the airlight values obtained from each region. The airlight map is generated by multiple linear regression using least squares (Fig. 5).

Fig. 5 Generation of airlight map

E. Restoration of Luminance Image

In order to restore the luminance image, the estimated airlight map is subtracted from the degraded image as in Eqn (4). To correct the blurring due to fog, edge enhancement is then performed:

$$Y_{deblurr}(i,j) = Y(i,j) + s \cdot g(i,j) \tag{11}$$

where $g(i,j)$ is the inverse Fourier transform of the high-pass-filtered signal, $s$ is a constant that determines the strength of enhancement, and $Y_{deblurr}(i,j)$ is the de-blurred luminance image.

F. Post-Processing

Fog particles absorb a portion of the light in addition to scattering it. Therefore, after the color space is converted from YCbCr back to RGB to obtain $I_{R,G,B}$, histogram stretching is performed as a post-processing step:

$$\tilde{I}_{R,G,B} = 255 \cdot \frac{I_{R,G,B} - \min(I_{R,G,B})}{\max(I_{R,G,B}) - \min(I_{R,G,B})} \tag{12}$$

where $\tilde{I}_{R,G,B}$ is the result of histogram stretching, $\max(I_{R,G,B})$ is the maximum value of $I_{R,G,B}$, which is the input for post-processing, and $\min(I_{R,G,B})$ is the minimum value of $I_{R,G,B}$.
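The airlight search of Eqns (7) to (10) in Section II.C and the least-squares fit of Section II.D can be sketched as follows. This is a minimal Python sketch under stated assumptions, not the authors' MATLAB code: it assumes an exhaustive search over integer candidates for the airlight, a first-order (planar) model airlight ≈ c0 + c1·row + c2·col fitted to one sample per region, and a guard that rejects candidates leaving a non-positive mean. None of these details is specified in the paper, and all function names are hypothetical.

```python
from statistics import mean, pstdev

# "Ideal" image: uniform distribution over the luminance range 16..235.
IDEAL = list(range(16, 236))
# STD(Ideal) / mean(Ideal)^2, the re-scaling factor of Eqn (9).
IDEAL_SCALE = pstdev(IDEAL) / mean(IDEAL) ** 2


def cost(pixels, lam):
    """|A(lam) - B(lam)| for one region, following Eqns (7), (9) and (10)."""
    shifted = [y - lam for y in pixels]
    m = mean(shifted)
    if m <= 0:
        return float("inf")  # assumption: reject candidates that remove all light
    a = pstdev(shifted) / m  # Eqn (7)
    b = m * IDEAL_SCALE      # Eqn (9)
    return abs(a - b)


def estimate_airlight(pixels):
    """Integer search for the airlight that minimises the cost (Eqn (10))."""
    candidates = range(0, max(1, int(mean(pixels))))
    return min(candidates, key=lambda lam: cost(pixels, lam))


def det3(m):
    """Determinant of a 3 x 3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(samples):
    """Least-squares fit of lam ~ c0 + c1*row + c2*col.

    samples is an iterable of (row, col, lam) with at least three
    non-collinear points. Solves the normal equations by Cramer's rule.
    """
    ata = [[0.0] * 3 for _ in range(3)]
    aty = [0.0] * 3
    for row, col, lam in samples:
        x = (1.0, float(row), float(col))
        for p in range(3):
            aty[p] += x[p] * lam
            for q in range(3):
                ata[p][q] += x[p] * x[q]
    d = det3(ata)
    coeffs = []
    for k in range(3):
        # Replace column k of the normal matrix with the right-hand side.
        mk = [[aty[r] if c == k else ata[r][c] for c in range(3)] for r in range(3)]
        coeffs.append(det3(mk) / d)
    return coeffs


def airlight_at(coeffs, row, col):
    """Evaluate the fitted airlight map at pixel (row, col)."""
    c0, c1, c2 = coeffs
    return c0 + c1 * row + c2 * col
```

In use, `estimate_airlight` would be called once per region, the resulting (centre row, centre column, airlight) triples passed to `fit_plane`, and `airlight_at` evaluated at every pixel before the subtraction of Eqn (4). A coarse-to-fine or continuous search could replace the integer scan if more precision is needed.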

Fig. 6 Histogram stretching
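The histogram stretching of Eqn (12) amounts to a linear rescaling of the channel values to the range 0 to 255. The sketch below is illustrative only: it assumes the stretch is applied to one channel at a time (the paper does not say whether the minimum and maximum are taken per channel or jointly), and the function name `stretch` and the handling of a flat channel are assumptions.

```python
def stretch(channel):
    """Linearly map a channel (list of rows) to 0..255 as in Eqn (12)."""
    lo = min(min(row) for row in channel)
    hi = max(max(row) for row in channel)
    if hi == lo:
        # Assumption: a flat channel has no range to stretch, so map it to 0.
        return [[0.0 for _ in row] for row in channel]
    return [[255.0 * (v - lo) / (hi - lo) for v in row] for row in channel]


if __name__ == "__main__":
    # Values confined to 50..200 are spread over the full output range.
    print(stretch([[50, 100], [150, 200]]))
```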

III. RESULT

The experiment is performed on a 3.0 GHz Pentium 4 using MATLAB. The experimental results for images taken in foggy weather are shown in Fig. 8. In order to evaluate the performance, we calculate contrast, colorfulness, and the sum of the gradient, a measure based on the importance of edges. Contrast and colorfulness are improved by 147% and 430%, respectively, over the foggy image. In addition, the sum of the gradient is improved by 201% compared to the foggy image.

Fig. 7 Result of the evaluation (bar chart of Contrast, Colourfulness, and Sum_gradient for the foggy image and the enhanced image)

Fig. 8 Results of Image Enhancement by Defogging

IV. CONCLUSIONS

In this paper, we proposed estimating the airlight using a cost function based on the human visual model and generating the airlight map by modeling the relationship between the image coordinates and the airlight. The image blurred by fog is restored by subtracting the airlight map from the degraded image. In order to evaluate the performance, we calculated contrast, colorfulness, and the sum of the gradient. The results confirm a significant improvement over the degraded image. In the future, we plan to investigate a methodology to estimate the depth map from a single image. In addition, the enhancement of images degraded by bad weather other than fog will be investigated.

ACKNOWLEDGMENT

This work is supported by Samsung Techwin CO., LTD. Thanks to Professor Colin Fyfe for his valuable advice and comments.

REFERENCES

[1] Y. S. Zhai and X. M. Liu, "An improved fog-degraded image enhancement algorithm," International Conference on Wavelet Analysis and Pattern Recognition (ICWAPR '07), vol. 2, 2007.

[2] S. G. Narasimhan and S. K. Nayar, "Contrast restoration of weather degraded images," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 25, pp. 713-724, 2003.

[3]

[4] E. Namer and Y. Y. Schechner, "Advanced visibility improvement based on polarization filtered images," Proc. SPIE, vol. 5888, pp. 36-45, 2005.

[5] Y. Y. Schechner, S. G. Narasimhan, and S. K. Nayar, "Polarization-based vision through haze," Applied Optics, vol. 42, pp. 511-525, 2003.

[6] J. P. Oakley and H. Bu, "Correction of Simple Contrast Loss in Color Images," IEEE Transactions on Image Processing, vol. 16, pp. 511-522, 2007.

[7] Y. Yitzhaky, I. Dror, and N. S. Kopeika, "Restoration of atmospherically blurred images according to weather-predicted atmospheric modulation transfer functions," Optical Engineering, vol. 36, p. 3064, 1997.

[8] K. K. Tan and J. P. Oakley, "Physics-based approach to color image enhancement in poor visibility conditions," Journal of the Optical Society of America A, vol. 18, pp. 2460-2467, 2001.

[9] R. S. Sirohi, "Effect of fog on the colour of a distant light source," Journal of Physics D: Applied Physics, vol. 3, pp. 96-99, 1970.

[10] S. Shwartz, E. Namer, and Y. Y. Schechner, "Blind haze separation," International Conference on Computer Vision and Pattern Recognition, 2006.

Вам также может понравиться

- 08-Monocular Depth EstimationДокумент15 страниц08-Monocular Depth EstimationRajul RahmadОценок пока нет

- 15arspc Submission 109Документ9 страниц15arspc Submission 109reneebartoloОценок пока нет

- Multispectral Joint Image Restoration Via Optimizing A Scale MapДокумент13 страницMultispectral Joint Image Restoration Via Optimizing A Scale MappattysuarezОценок пока нет

- Zhu Zha PANDA Motion-Artifacts-Correction ISMRM2017#1289 FullДокумент4 страницыZhu Zha PANDA Motion-Artifacts-Correction ISMRM2017#1289 FullLEPING ZHAОценок пока нет

- Image RestorationДокумент21 страницаImage Restorationعبدالوهاب الدومانيОценок пока нет

- Improved Single Image Dehazing by FusionДокумент6 страницImproved Single Image Dehazing by FusionesatjournalsОценок пока нет

- Wavelet Enhancement of Cloud-Related Shadow Areas in Single Landsat Satellite ImageryДокумент6 страницWavelet Enhancement of Cloud-Related Shadow Areas in Single Landsat Satellite ImagerySrikanth PatilОценок пока нет

- Chapter 2 Digital Image FundamentalsДокумент26 страницChapter 2 Digital Image Fundamentalsfuntime_in_life2598Оценок пока нет

- Practical Analytic Single Scattering Model for Real Time RenderingДокумент10 страницPractical Analytic Single Scattering Model for Real Time RenderingkrupkinОценок пока нет

- SSDOДокумент8 страницSSDOjonnys7gОценок пока нет

- An Improved Illumination Model For Shaded Display: Turner Whitted Bell Laboratories Holmdel, New JerseyДокумент7 страницAn Improved Illumination Model For Shaded Display: Turner Whitted Bell Laboratories Holmdel, New JerseyAG SinglaОценок пока нет

- Haitao Wang, Stan Z. Li, Yangsheng Wang, Jianjun ZhangДокумент4 страницыHaitao Wang, Stan Z. Li, Yangsheng Wang, Jianjun ZhangMohsan BilalОценок пока нет

- IOSRJEN (WWW - Iosrjen.org) IOSR Journal of EngineeringДокумент7 страницIOSRJEN (WWW - Iosrjen.org) IOSR Journal of EngineeringIOSRJEN : hard copy, certificates, Call for Papers 2013, publishing of journalОценок пока нет

- Empirical TopocorrДокумент7 страницEmpirical TopocorrIvan BarriaОценок пока нет

- Unit Iii PDFДокумент21 страницаUnit Iii PDFSridhar S NОценок пока нет

- Darkchannelprior DehazeДокумент8 страницDarkchannelprior DehazeNguyễn Tuấn AnhОценок пока нет

- A Single Image Haze Removal Algorithm Using Color Attenuation PriorДокумент7 страницA Single Image Haze Removal Algorithm Using Color Attenuation PriorJASH MATHEWОценок пока нет

- Robot Vision and Image Processing TechniquesДокумент69 страницRobot Vision and Image Processing TechniquesSanjay DolareОценок пока нет

- Sparse Representation of Cast Shadows Via - Regularized Least SquaresДокумент8 страницSparse Representation of Cast Shadows Via - Regularized Least SquaresVijay SagarОценок пока нет

- Single Image Dehazing Using CNNДокумент7 страницSingle Image Dehazing Using CNNhuzaifaОценок пока нет

- Degraded Document Image Enhancing in Spatial Domain Using Adaptive Contrasting and ThresholdingДокумент14 страницDegraded Document Image Enhancing in Spatial Domain Using Adaptive Contrasting and ThresholdingiaetsdiaetsdОценок пока нет

- Polynomial Texture Maps: Tom Malzbender, Dan Gelb, Hans WoltersДокумент10 страницPolynomial Texture Maps: Tom Malzbender, Dan Gelb, Hans WolterssadfacesОценок пока нет

- He Sun TangДокумент8 страницHe Sun TangRaidenLukeОценок пока нет

- Sensors: Design of A Solar Tracking System Using The Brightest Region in The Sky Image SensorДокумент11 страницSensors: Design of A Solar Tracking System Using The Brightest Region in The Sky Image SensorLuis Azabache LoyolaОценок пока нет

- Gamma Correction Enhancement of InfraredДокумент14 страницGamma Correction Enhancement of InfraredAsif FarhanОценок пока нет

- Determining Optical Flow Horn SchunckДокумент19 страницDetermining Optical Flow Horn SchunckTomáš HodaňОценок пока нет

- Improved Weight Map Guided Single Image Dehazing: Manali Dalvi Dhanusha ShettyДокумент7 страницImproved Weight Map Guided Single Image Dehazing: Manali Dalvi Dhanusha ShettyKtkk BogeОценок пока нет

- Applicationof PhotogrammetryforДокумент12 страницApplicationof PhotogrammetryforALLISON FABIOLA LOPEZ VALVERDEОценок пока нет

- Defog ProjectДокумент9 страницDefog ProjectBhaskar Rao PОценок пока нет

- Aerial Image Simulation For Partial Coherent System With Programming Development in MATLABДокумент7 страницAerial Image Simulation For Partial Coherent System With Programming Development in MATLABocb81766Оценок пока нет

- Robust computation of polarisation imageДокумент4 страницыRobust computation of polarisation imageTauseef KhanОценок пока нет

- Image pre-processing tool for MatLabДокумент11 страницImage pre-processing tool for MatLabJason DrakeОценок пока нет

- Precomputed Atmospheric ScatteringДокумент8 страницPrecomputed Atmospheric ScatteringZStepan11Оценок пока нет

- 2007 Shadow PCAДокумент10 страниц2007 Shadow PCAÉric GustavoОценок пока нет

- Image Haze Removal: Status, Challenges and ProspectsДокумент6 страницImage Haze Removal: Status, Challenges and ProspectsDipti GuptaОценок пока нет

- Radiometric, Atmospheric and Geometric Preprocessing Optical Earth Observed ImagesДокумент15 страницRadiometric, Atmospheric and Geometric Preprocessing Optical Earth Observed ImagesZenón Rizo FernándezОценок пока нет

- A Comparison of Various Illumination Normalization TechniquesДокумент5 страницA Comparison of Various Illumination Normalization Techniquesat215Оценок пока нет

- Image Deconvolution with Nonlinear Optical ProcessingДокумент6 страницImage Deconvolution with Nonlinear Optical ProcessingmisaqОценок пока нет

- Kroon Paper BostonДокумент4 страницыKroon Paper BostonsmitapradhanОценок пока нет

- Barron Malik CV PR 2012Документ8 страницBarron Malik CV PR 2012Kintaro OeОценок пока нет

- Enhanced Images Watermarking Based On Amplitude Modulation: T. Amornraksa, K. JanthawongwilaiДокумент9 страницEnhanced Images Watermarking Based On Amplitude Modulation: T. Amornraksa, K. JanthawongwilaiBalde JunaydОценок пока нет

- Lighting DesignДокумент4 страницыLighting DesignulyjohnignacioОценок пока нет

- Rescue AsdДокумент8 страницRescue Asdkoti_naidu69Оценок пока нет

- Image Contrast Enhancement For Deep-Sea Observation SystemsДокумент6 страницImage Contrast Enhancement For Deep-Sea Observation SystemsMohammed NaseerОценок пока нет

- Robust Model-Based 3D Object Recognition by Combining Feature Matching With TrackingДокумент6 страницRobust Model-Based 3D Object Recognition by Combining Feature Matching With TrackingVinoth GunaSekaranОценок пока нет

- (IJCST-V7I2P17) : Harish Gadade, Mansi Chaudhari, Manasi PatilДокумент3 страницы(IJCST-V7I2P17) : Harish Gadade, Mansi Chaudhari, Manasi PatilEighthSenseGroupОценок пока нет

- 7.3 Softcopy-Based Systems 215: 7.3.2 Stereo EnvironmentДокумент6 страниц7.3 Softcopy-Based Systems 215: 7.3.2 Stereo Environmenttirto babbaОценок пока нет

- Scene Generation for Tracking Small Targets Against Cloud BackgroundsДокумент8 страницScene Generation for Tracking Small Targets Against Cloud Backgroundsnpadma_6Оценок пока нет

- PP 34-40 Real Time Implementation ofДокумент7 страницPP 34-40 Real Time Implementation ofEditorijset IjsetОценок пока нет

- High Accuracy Wave Filed Reconstruction Decoupled Inverse Imaging With Sparse Modelig of Phase and AmplitudeДокумент11 страницHigh Accuracy Wave Filed Reconstruction Decoupled Inverse Imaging With Sparse Modelig of Phase and AmplitudeAsim AsrarОценок пока нет

- Multi-Scale Fusion For Underwater Image Enhancement Using Multi-Layer PerceptronДокумент9 страницMulti-Scale Fusion For Underwater Image Enhancement Using Multi-Layer PerceptronIAES IJAIОценок пока нет

- Initial Results in Underwater Single Image Dehazing: Nicholas Carlevaris-Bianco, Anush Mohan, Ryan M. EusticeДокумент8 страницInitial Results in Underwater Single Image Dehazing: Nicholas Carlevaris-Bianco, Anush Mohan, Ryan M. EusticeanaqiaisyahОценок пока нет

- Detecting obstacles in foggy environments using dark channel prior and image enhancementДокумент4 страницыDetecting obstacles in foggy environments using dark channel prior and image enhancementBalagovind BaluОценок пока нет

- Zooming Digital Images Using Interpolation TechniquesДокумент12 страницZooming Digital Images Using Interpolation TechniquesInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Topics On Optical and Digital Image Processing Using Holography and Speckle TechniquesОт EverandTopics On Optical and Digital Image Processing Using Holography and Speckle TechniquesОценок пока нет

- Photovoltaic Modeling HandbookОт EverandPhotovoltaic Modeling HandbookMonika Freunek MüllerОценок пока нет

- Integrated Imaging of the Earth: Theory and ApplicationsОт EverandIntegrated Imaging of the Earth: Theory and ApplicationsMax MoorkampОценок пока нет

- Standard and Super-Resolution Bioimaging Data Analysis: A PrimerОт EverandStandard and Super-Resolution Bioimaging Data Analysis: A PrimerОценок пока нет

- OPtics PPT Begin BSCДокумент29 страницOPtics PPT Begin BSCTufail AbdullahОценок пока нет

- Nanocharacterisation, 2007, p.319 PDFДокумент319 страницNanocharacterisation, 2007, p.319 PDFMaria Ana Vulcu100% (1)

- IB Physics SL - Topic 4.4 - Wave BehaviorДокумент49 страницIB Physics SL - Topic 4.4 - Wave BehaviorMichael BriggsОценок пока нет

- Optical Shop in Thane EastДокумент3 страницыOptical Shop in Thane EastthemusicalstarОценок пока нет

- Lecture Seeding Particles For PIVДокумент30 страницLecture Seeding Particles For PIVKaffelОценок пока нет

- Seminar ReportДокумент34 страницыSeminar ReportSanjay SharmaОценок пока нет

- Variocam® High Definition: Thermographic Solution For Universal UseДокумент2 страницыVariocam® High Definition: Thermographic Solution For Universal Usedanny buiОценок пока нет

- 3d ScanningДокумент9 страниц3d ScanningRohit GuptaОценок пока нет

- Optics 27 YearsДокумент15 страницOptics 27 YearssmrutirekhaОценок пока нет

- 02 CavityModes PDFДокумент112 страниц02 CavityModes PDFUzmaОценок пока нет

- Color 1 Color 2 Color 3 Color 4 Color 5Документ4 страницыColor 1 Color 2 Color 3 Color 4 Color 5Jo MaОценок пока нет

- 14.2mm Red Numeric DisplayДокумент6 страниц14.2mm Red Numeric DisplayJose Enrique Garcia ArteagaОценок пока нет

- Synchrony Ultra HDCДокумент4 страницыSynchrony Ultra HDCMagnus KallingОценок пока нет

- PLC Xe32 SMДокумент96 страницPLC Xe32 SMMr AnonymusОценок пока нет

- Holga ManualДокумент12 страницHolga Manualchicgeek1Оценок пока нет

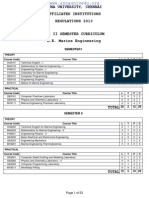

- Anna Univeristy 1st Sem Syllabus For Marine EngineeringДокумент23 страницыAnna Univeristy 1st Sem Syllabus For Marine EngineeringmadhusivaОценок пока нет

- License Plate Recognition For Low-Resolution CCTV Forensics by Integrating Sparse Representation-Based Super-ResolutionДокумент11 страницLicense Plate Recognition For Low-Resolution CCTV Forensics by Integrating Sparse Representation-Based Super-ResolutionWesley De NeveОценок пока нет

- Interesting View PointsДокумент12 страницInteresting View Pointsapi-266626976Оценок пока нет

- BASIC PHOTOGRAPHY PRINCIPLESДокумент33 страницыBASIC PHOTOGRAPHY PRINCIPLESJOEL PATROPEZ100% (1)

- NIKON Recording SettingsДокумент2 страницыNIKON Recording SettingsslavkoОценок пока нет

- Seismic Reflection Module 3: Properties, Resolution, and AmplitudesДокумент53 страницыSeismic Reflection Module 3: Properties, Resolution, and AmplitudesRoy Bryanson SihombingОценок пока нет

- 2016 Leupold Consumer CatalogДокумент42 страницы2016 Leupold Consumer CatalogMark StephensОценок пока нет

- Tips For Shooting Great Nightscapes PDFДокумент6 страницTips For Shooting Great Nightscapes PDFVargas Gómez AlvaroОценок пока нет

- sini sinr n n v v λ λ n v n u n n RДокумент10 страницsini sinr n n v v λ λ n v n u n n RAnwesha Kar, XII B, Roll No:11Оценок пока нет

- F4 PHYSICS - LENSES RevisionДокумент16 страницF4 PHYSICS - LENSES RevisionKalarajanОценок пока нет

- Study Density & Colour Measurement DevicesДокумент3 страницыStudy Density & Colour Measurement DevicesShraddha GhagОценок пока нет

- UltravioletДокумент17 страницUltravioletDhanmeet KaurОценок пока нет

- Optic Fiber CommunicationsДокумент18 страницOptic Fiber CommunicationsFahad IsmailОценок пока нет

- Test and Monitoring Solutions For Fiber To The Home (FTTH)Документ2 страницыTest and Monitoring Solutions For Fiber To The Home (FTTH)Jesus LopezОценок пока нет

- LM一80认证PCT3030 TH LM-80 S-F3-10-THIДокумент19 страницLM一80认证PCT3030 TH LM-80 S-F3-10-THIsoufianeОценок пока нет