
Practice Questions: Validity and Reliability

Uploaded by: Diyana Mahmud

Оригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Soalan Latihan Validity N Reliability

Загружено:

Diyana MahmudАвторское право:

Доступные форматы

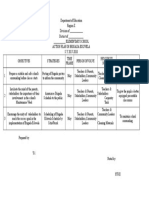

VALIDITY AND RELIABILITY

1. Which of the following is the best definition of reliability?
a. Reliability refers to whether the data collection process measures what it is supposed to measure.
b. Reliability refers to the degree to which the data collection process covers the entire scope of the content it is supposed to cover.
c. Reliability refers to whether or not the data collection process is appropriate for the people to whom it will be administered.
d. Reliability refers to the consistency with which the data collection process measures whatever it measures.

2. Mary took a test on which she received a score of 75. The teacher's house burned down, and the tests were destroyed. Mary took the same test over again the next day and again received a score of 75.
a. There is evidence to suggest that Mary's test was reliable.
b. There is evidence to suggest that Mary's test was unreliable.
c. There is no evidence upon which to base even a tentative judgment about the reliability of the test.

3. Marvin's final exam was scored by his teacher, who gave him a 64. This would have caused him to fail the course. He protested to the school officials, and two other teachers scored the same test. One of them gave him a 75, and the other an 85.
a. There is evidence that Marvin's test was scored reliably.
b. There is evidence that Marvin's test was scored unreliably.
c. There is no evidence upon which to base even a tentative judgment about the reliability of the scoring process.

4. Ella May's teacher rated her behavior as indicating that she was quite popular. The teacher's aide assigned to the same classroom rated Ella May as being uncooperative when given assignments.
a. There is evidence that the rating process was reliable.
b. There is evidence that the rating process was unreliable.
c. There is no evidence upon which to base even a tentative judgment about the reliability of the rating process.

5. Donald was rated by his teacher as being unable to perform the mathematical skills necessary for the next math unit. Because of this low rating, Donald received a special programmed unit to help him review his skills. A day later, after completing the programmed materials, Donald was rated by the same teacher as able to perform the skills necessary for the next unit.
a. There is evidence that the teacher's rating system was reliable.
b. There is evidence that the rating system was unreliable.
c. There is no evidence upon which to base even a tentative judgment about the reliability of the rating process.

Questions 6 through 8 go together.

6. Miss Curtis was planning to teach a unit on English grammar to her ninth graders. She planned to give one test as a pretest, and another as a posttest. Then she planned to compare the two sets of scores to determine whether or not the students had profited from the unit. Examine the following list of statements and indicate which ones suggest that her tests lacked reliability. (Choose more than one answer.)
a. Each form of the test contained 50 items, worth two points apiece.
b. When the tests were scored by two separate persons, the results were exactly the same.
c. Thirty-five of the items on each of the alternate forms of the test were answered correctly by everyone.
d. Rather than giving highly structured instructions, she allowed the students to ask questions as they went along, and provided information as it was requested.
e. The average score on the posttest was substantially higher than the average score on the pretest.

7. After Miss Curtis had administered both forms of her English grammar test, she decided to revise it. By doing this, she hoped to make it a more reliable test the next year. Examine the following list of statements and indicate which ones would be likely to increase the reliability of the test. (Choose more than one.)
a. She wrote out a detailed set of instructions based on the questions which had arisen this time, and she attached these instructions to the tests.
b. She increased the length of the test from 50 to 75 items on each form.
c. She eliminated several of the items which everyone had answered correctly, because she found that these had included irrelevant clues which enabled the students to get them right. She replaced these with items that she felt contained no irrelevant clues.
d. She eliminated each of the items which had been missed by 40-60% of the students on the pretest and by 70-90% of the students on the posttest.
e. She decided to base many of her new items on contemporary music, since nearly all the students seemed to be interested in such music.

8. Miss Curtis decides to compute statistical reliability to help determine the degree of reliability her tests possess. The tests are multiple choice/true-false in format. She considers them to be criterion-referenced rather than norm-referenced tests. Her main concern is that the decisions she would make on the basis of the results would be based on actual abilities of the students rather than on unique aspects of the testing situation. She is also concerned that any differences between the pretest and the posttest should indicate real differences, rather than merely differences between the two tests. Which of the following types of statistical reliability would help Miss Curtis make useful decisions about her tests? (Choose as many as necessary.)
a. Test-retest reliability.
b. Equivalent-forms reliability.
c. Internal consistency reliability.
d. Interscorer reliability.
e. Interobserver agreement.

9. Which of the following types of statistical reliability require that the same test be administered to the same persons two times? (Choose as many as necessary.)
a. Test-retest reliability.
b. Equivalent-forms reliability.
c. Internal consistency reliability.
d. Interscorer reliability.
e. Interobserver agreement.

10. Which of the following is a major weakness of the statistical techniques for estimating reliability? (Choose only one.)
a. When respondents give different answers because of chance factors such as health problems or luck, this lowers the statistical estimate of reliability.
b. When a large number of persons master a skill and therefore get the answer right, this lowers statistical reliability.
c. Changes in the directions as they are given or as they are perceived by the respondents will lower the statistical reliability.
d. Essay tests receive lower estimates of statistical reliability than more objective tests, because there are more likely to be subjective factors influencing the scoring process.

11. If a teacher has access to two tests which attempt to measure the same research variable, she should almost always choose the test which is the more reliable (provided they take about the same amount of time to administer and score).
a. True.
b. False.

12. Which of the following is the best definition of validity?
a. Validity deals with whether the data collection process actually measures what it purports to be measuring.
b. Validity deals with whether the data collection process is designed at the appropriate level of difficulty.
c. Validity deals with whether the data collection process is consistent in measuring whatever it measures.
d. Validity deals with the question of how subjectivity can best be controlled in the scoring process.
e. Validity deals with the standardization of procedures for administering, scoring, and interpreting data collection processes.

13. All but one of the following are factors which directly influence the validity of a data collection process. Choose the exception.
a. The logical appropriateness of the operational definition.
b. The match between the tasks in the data collection process and the operational definition.
c. The difficulty of the data collection process.
d. The reliability of the data collection process.

14. Mr. Gomez wants to help his students become familiar with educational television. He defines "familiarity" with educational television as meaning that the students will be able to name several of the shows on the local educational television station. To measure this research variable, he asks the students one day to write down the names of as many shows as they can think of that were on the local educational channel the night before. He then concludes that the students who can name more shows are more familiar with educational television than those who name few or no shows accurately. What is the most obvious reason why this measurement strategy is likely to be invalid?
a. It is likely to be unreliable.
b. The task doesn't match the operational definition.
c. The operational definition is logically inappropriate.
d. The task requires that the students be familiar with the local educational television station.

15. Miss Chesterton is teaching her students to use English grammar correctly. She operationally defines using English grammar correctly as meaning that they will follow all the rules of normal English grammar in the compositions they write. On the exam, she determines how well the students have met this goal by requiring them to diagram twenty sentences of varying levels of complexity. What is the most obvious reason why this measurement strategy is likely to be invalid?
a. It is likely to be unreliable.
b. The task doesn't match the operational definition.
c. The operational definition is logically inappropriate.
d. The task requires that the students be capable of following the rules of English grammar in their writing.

16. Professor Carter wants her students to develop a genuine appreciation of Shakespeare's plays. She operationally defines this to mean that the students will be able to recall lines of the plays from memory. She measures this by giving the students several important scenes with lines omitted and having them fill in the missing lines. What is the most obvious reason why this measurement strategy is likely to be invalid?
a. It is likely to be unreliable.
b. The task doesn't match the operational definition.
c. The operational definition is logically inappropriate.
d. The students may not be able to recall the lines.

Questions 17 through 20 are based on the following information. Mrs. Green wants to measure Kathy's reading comprehension by having her read a story and then relate it to her own experience. Examine each of the following statements (assuming they are all true), and indicate whether each would or would not weaken the validity of Mrs. Green's testing strategy.

17. Even outside reading situations, Kathy has a great deal of trouble relating any stories at all to her personal life.
a. Weakens the validity of the data collection process.
b. Does not weaken the validity of the data collection process.

18. Kathy has trouble understanding the passage.
a. Weakens the validity of the data collection process.
b. Does not weaken the validity of the data collection process.

19. Kathy becomes anxious because she has to take the test aloud in front of the class, and anxiety makes her perform poorly.
a. Weakens the validity of the data collection process.
b. Does not weaken the validity of the data collection process.

20. The passage is extremely short.
a. Weakens the validity of the data collection process.
b. Does not weaken the validity of the data collection process.

21. Ms. Monroe has developed a questionnaire to measure her students' attitudes toward the practicum in her nursing training program. She is concerned about whether the questions apply proportionately to all the aspects of the program. What tool for estimating aspects of validity would help Ms. Monroe make a sound judgment in this regard?
a. Content validity.
b. Criterion-related validity.
c. Construct validity.
d. None of the above.

22. Mr. Shepard has developed a criterion-referenced test on basic mathematical abilities. He wants to be sure it gives appropriate coverage to all the topics covered during the semester. What tool for estimating aspects of validity would help Mr. Shepard make a sound judgment in this regard?
a. Content validity.
b. Criterion-related validity.
c. Construct validity.
d. None of the above.

23. Professor DuParc has developed an observational strategy to measure a person's "independence from peer pressure." What tool for estimating aspects of validity would help Professor DuParc to demonstrate that his strategy really measures "independence from peer pressure" rather than some other characteristic?
a. Content validity.
b. Criterion-related validity.
c. Construct validity.
d. None of the above.

24. Mrs. Masters has been admitting persons into her Advanced Composition course on the basis of their performance in Introductory English. She decides that she could make better selections if she would have the applicants take a special test, and then successful candidates would be those who scored highest on the test. What tool for estimating aspects of validity would help Mrs. Masters demonstrate that her new procedure is better than the old one?
a. Content validity.
b. Criterion-related validity.
c. Construct validity.
d. None of the above.

Review Quiz: Answers

1. (d). This is a paraphrase of the definition given in the textbook. If you chose (a), you selected the definition of validity.

2. (a). This doesn't conclusively prove that the test is entirely reliable, but it does give some evidence in that direction, since it demonstrates consistency.

3. (b). If the measurement process were reliable, Marvin should be evaluated about the same on each occasion. Instead, he received three different scores on three occasions.

4. (c). The teacher and the aide are rating different characteristics (popularity and cooperativeness), so it is reasonable that the ratings differ. There has been no attempt here to measure the same thing twice, so there is no evidence on which to base a judgment regarding consistency.

5. (c). Donald's rating changed, but since he received training between the two rating occasions, there was no reason to expect the ratings to remain stable. Although there was an attempt to measure the same thing twice, there is not enough evidence on which to base a judgment regarding consistency.

6. (c) and (d). Statement (c) indicates that several of the items were excessively easy. Statement (d) indicates that the instructions might change on different testing occasions (because students might ask different questions). Statement (a) indicates a strength (50 items is a reasonably large number of questions). Statement (b) suggests consistency among the people scoring the tests (interscorer reliability). Statement (e) gives no real evidence: the scores changed, but we would expect them to change after instruction. Since we don't know whether the instruction was effective (except from the test results themselves, which would involve circular reasoning), we don't know whether the test was reliable.

7. (a), (b), and (c). Statement (a) describes a way to standardize the measurement process, thereby eliminating some extraneous influences. Statement (b) would increase reliability by expanding the sample of items, assuming that the additional 25 items measure the same outcomes as the original 50. Statement (c) would increase reliability by increasing the number of effective items, since excessively easy items do not add to the reliability of a test. Statement (d) describes a bad strategy: eliminating items of medium difficulty on the pretest would actually reduce the reliability of the test. Statement (e) may be a good idea, but it is irrelevant to the concept of reliability.
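The reasoning in answer 7 about statements (b) and (c) can be checked numerically. The Python sketch below works under stated assumptions. It uses the Spearman-Brown prophecy formula, a standard projection that the quiz itself does not name, to estimate how reliability changes when the 50-item form is lengthened to 75 comparable items. Because the quiz gives no figure, it starts from a hypothetical reliability of 0.70. It also shows that a right/wrong item answered correctly by everyone has zero variance, p(1 - p) = 0, so it cannot help distinguish students. The function names are illustrative only.

```python
from typing import Sequence


def spearman_brown(reliability: float, length_factor: float) -> float:
    """Projected reliability after lengthening a test by `length_factor`.

    Assumes the added items are parallel to the existing ones, i.e. they
    measure the same outcomes (the caveat stated in answer 7(b)).
    """
    n, r = length_factor, reliability
    return n * r / (1 + (n - 1) * r)


def item_variance(responses: Sequence[int]) -> float:
    """Variance p(1 - p) of a right/wrong (1/0) item across students."""
    p = sum(responses) / len(responses)
    return p * (1 - p)


# Hypothetical starting point: suppose the 50-item form had reliability 0.70.
projected = spearman_brown(0.70, 75 / 50)  # lengthen from 50 to 75 items
print(f"50 -> 75 items: 0.70 -> {projected:.2f}")

# An item everyone answered correctly vs. an item half the class missed.
print(item_variance([1] * 30))              # 0.0: adds nothing to score differences
print(item_variance([1] * 15 + [0] * 15))   # 0.25: the maximum for a 0/1 item
```

The projection only holds if the new items really are comparable to the old ones. Adding unrelated items would not raise reliability in this way.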

8. (a) and (b). Since she is concerned about unique aspects of the testing situation, she needs test-retest reliability. Since she wants to compare posttest results to pretest results, she needs parallel tests, and so she also needs equivalent-forms reliability.

9. (a). Test-retest is the only one of those listed that fits this description. Equivalent-forms requires giving two forms of the same test to one group of people. Internal consistency requires giving the test just once and then analyzing the results with coefficient alpha. Interscorer reliability requires giving the test just once and then having two persons score it. Interobserver agreement requires two persons to observe the same set of behaviors and compare their results to see whether they agreed on what they observed.

10. (b). Restriction in the range of scores lowers statistical reliability. Since restriction sometimes occurs for good reasons (e.g., student mastery of the information), this is a possible weakness of these techniques. Statements (a), (c), and (d) describe strengths of reliability coefficients, since a reliability coefficient is supposed to detect these extraneous factors.

11. (b). Validity (not reliability) is the most important factor in test design and selection. It is easy to develop tests with high reliability that lack validity.

12. (a). This is a paraphrase of the textbook's definition of validity. If you chose (c), you chose the definition of reliability.

13. (c). The difficulty of the data collection process may be an important consideration, but it does not directly influence validity. The other three are the factors listed by the textbook as influencing validity.

14. (a). The measurement process is likely to be unreliable (and hence invalid), because Mr. Gomez has used a single question focusing on a single night. He should sample several nights, ask more questions, or use multiple operational definitions. The operational definition seems appropriate (naming shows sounds close to familiarity), and the task matches the operational definition (he asked students to name shows). To the extent that statement (d) is true, Mr. Gomez would have evidence of validity, not invalidity, since it states exactly what he is trying to measure.

15. (b). Diagramming sentences is not even remotely synonymous with following the rules of normal English grammar in the compositions students write.
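Answers 9 and 10 describe how the statistical reliability estimates are obtained. The Python sketch below illustrates them under the following assumptions:

- Test-retest and equivalent-forms reliability are computed as a Pearson correlation between two sets of scores from the same students.
- Internal consistency is computed as coefficient (Cronbach's) alpha from a single administration.
- Interobserver agreement is computed as simple percent agreement.

All score data are invented for illustration, and the helper names are hypothetical. The range-restriction lines mimic answer 10. When only the high scorers remain, as happens when most students have mastered the material, the same amount of measurement error yields a lower coefficient.

```python
from statistics import mean, pvariance
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Correlation between two score lists for the same students
    (used here for test-retest or equivalent-forms reliability)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Coefficient alpha from ONE administration; items[s][i] is
    student s's score on item i."""
    k = len(items[0])
    item_vars = [pvariance([row[i] for row in items]) for i in range(k)]
    total_var = pvariance([sum(row) for row in items])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def percent_agreement(obs_a: Sequence[str], obs_b: Sequence[str]) -> float:
    """Share of intervals on which two observers recorded the same code."""
    return sum(a == b for a, b in zip(obs_a, obs_b)) / len(obs_a)


# Invented first and second administrations of the same test.
first = [10, 20, 30, 40, 50, 60, 70, 80]
second = [18, 12, 38, 32, 58, 52, 78, 72]  # same students, +/-8 points of "noise"
print(f"test-retest r, full range: {pearson_r(first, second):.2f}")

# Answer 10: keep only the top half of the class (a restricted range of scores).
print(f"test-retest r, top half:   {pearson_r(first[4:], second[4:]):.2f}")

# Two-item toy matrices: identical items vs. unrelated items.
print(cronbach_alpha([[0, 0], [1, 1], [1, 1], [0, 0]]))  # 1.0
print(cronbach_alpha([[0, 0], [0, 1], [1, 0], [1, 1]]))  # 0.0

print(percent_agreement(["on", "on", "off", "on"], ["on", "off", "off", "on"]))  # 0.75
```

Both correlations use the same plus-or-minus 8 points of error. Only the spread of true scores differs, which is why the top-half coefficient comes out lower.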

16. (c). Few people would seriously argue that recalling lines from a play is synonymous with appreciating that play. The tasks do match the operational definition, and there is no reason to believe that the test is unreliable; but the faulty operational definition ruins the validity of this data collection process.

17. (a). If Kathy has problems relating stories in general to her own life, then making her perform this task to indicate reading comprehension is invalid. She would be doing two tasks: (1) comprehending (at which she might succeed) and (2) relating the story to her own life (at which she might fail). By failing at the second task, she would look as though she had failed at the first. Other students, who had no trouble relating stories to their personal lives, would be performing only one real task (comprehending), and so failure at that task would indicate a lack of comprehension.

18. (b). This does not weaken the validity of the test. Quite the contrary: it is evidence that the test is measuring what it is supposed to measure.

19. (a). The test is supposed to require her to comprehend (presumably under normal circumstances). The task she is actually required to perform is to comprehend under conditions of extreme anxiety.

20. (a). This is likely to weaken the reliability of the test, because the passage is an inadequate sample of behavior. Weakening reliability is one way to weaken validity.

21. (a). She is concerned that the data collection process covers the entire range of what it should cover. This is a good paraphrase of the definition of content validity.

22. (a). He is concerned that the data collection process covers the entire range of what it should cover. This is a good paraphrase of the definition of content validity.

23. (c). "Independence from peer pressure" is an internalized concept (construct) that Professor DuParc wants to measure. Construct validity would help him demonstrate that he has done so correctly.

24. (b). She is trying to predict performance in the Advanced Composition course. Predictive validity (a form of criterion-related validity) would help her determine whether the special test is useful for this purpose.
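Answer 24 depends on criterion-related (predictive) validity. The evidence is how strongly each selection procedure's scores correlate with later performance on the criterion, which here is the Advanced Composition course. The Python sketch below compares two such validity coefficients on invented data. The scores, the variable names, and the use of a Pearson correlation as the validity coefficient are all assumptions made for illustration. None of them are figures from the source.

```python
from statistics import mean
from typing import Sequence


def validity_coefficient(predictor: Sequence[float], criterion: Sequence[float]) -> float:
    """Pearson correlation between selection scores and later criterion scores."""
    mp, mc = mean(predictor), mean(criterion)
    spc = sum((p - mp) * (c - mc) for p, c in zip(predictor, criterion))
    spp = sum((p - mp) ** 2 for p in predictor)
    scc = sum((c - mc) ** 2 for c in criterion)
    return spc / (spp * scc) ** 0.5


# Invented data for eight students who later took Advanced Composition.
advanced_comp = [60, 65, 70, 75, 80, 85, 90, 95]  # criterion: course grades
special_test = [58, 66, 69, 77, 79, 86, 88, 96]   # new selection test
intro_english = [70, 90, 60, 85, 75, 65, 95, 80]  # old basis for selection

r_new = validity_coefficient(special_test, advanced_comp)
r_old = validity_coefficient(intro_english, advanced_comp)
print(f"Special test: r = {r_new:.2f}")
print(f"Introductory English: r = {r_old:.2f}")
if r_new > r_old:
    print("The special test predicts Advanced Composition performance better.")
```

With real applicants, a higher coefficient for the special test would be the kind of evidence answer 24 calls for. Such a result would still need to be checked on a fresh group before the old procedure is replaced.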
