Solution to Problems 2, Markov Chains

Problem 1 Find the state transition matrix P for the Markov chain shown in the figure.

[Figure: state transition diagram with branch probabilities 1/2 and 1/4]

Solution 1 The state transition matrix is

$$P = \begin{pmatrix} 1/2 & 1/2 & 0 \\ 1/2 & 1/2 & 0 \\ 1/4 & 1/4 & 1/2 \end{pmatrix}.$$
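A matrix read off a diagram can be sanity-checked by confirming that every row is non-negative and sums to 1. The following is a minimal sketch of that check for the Problem 1 matrix; the helper name `is_stochastic` is illustrative and does not come from the original solution.

```python
from fractions import Fraction as F

def is_stochastic(P):
    """Return True if every row of P is non-negative and sums to exactly 1."""
    return all(all(x >= 0 for x in row) and sum(row) == 1 for row in P)

# Transition matrix of Problem 1 (states 0, 1, 2), entered as exact fractions.
P1 = [
    [F(1, 2), F(1, 2), F(0)],
    [F(1, 2), F(1, 2), F(0)],
    [F(1, 4), F(1, 4), F(1, 2)],
]

print(is_stochastic(P1))
```

Exact fractions are used so that the row sums compare equal to 1 without floating-point tolerance.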

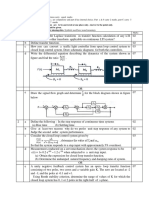

Problem 2 Each second, a laptop computer's wireless LAN card reports the state of the radio channel to an access point. The channel may be (0) poor, (1) fair, (2) good, or (3) excellent. In the poor state, the next state is equally likely to be poor or fair. In states 1, 2, and 3, there is a probability 0.9 that the next system state will be unchanged from the previous state and a probability 0.04 that the next system state will be poor. In states 1 and 2, there is a probability 0.06 that the next state is one step up in quality. When the channel is excellent, the next state is either good with probability 0.04 or fair with probability 0.02. Sketch the Markov chain and find the state transition matrix P.

Solution 2 The chain is shown below

[Figure: four-state chain on the channel states 0 to 3]

and the transition matrix is

$$P = \begin{pmatrix} 0.5 & 0.5 & 0 & 0 \\ 0.04 & 0.9 & 0.06 & 0 \\ 0.04 & 0 & 0.9 & 0.06 \\ 0.04 & 0.02 & 0.04 & 0.9 \end{pmatrix}$$

Telecommunication Networks 2005
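The verbal rules of Problem 2 translate row by row into the transition matrix. Below is a minimal sketch of that translation, assuming the state numbering 0 = poor through 3 = excellent from the problem statement; the function name `channel_matrix` is an illustrative choice.

```python
from fractions import Fraction as F

def channel_matrix():
    """Build the 4x4 matrix for states 0=poor, 1=fair, 2=good, 3=excellent."""
    stay, to_poor, up = F("0.9"), F("0.04"), F("0.06")
    P = [[F(0)] * 4 for _ in range(4)]
    # Poor: the next state is poor or fair with equal probability.
    P[0][0] = P[0][1] = F(1, 2)
    # Fair and good: stay, drop to poor, or move one step up.
    for s in (1, 2):
        P[s][s] = stay
        P[s][0] = to_poor
        P[s][s + 1] = up
    # Excellent: stay, drop to poor, or fall to good or fair.
    P[3][3] = stay
    P[3][0] = to_poor
    P[3][2] = F("0.04")
    P[3][1] = F("0.02")
    return P

P2 = channel_matrix()
for row in P2:
    assert sum(row) == 1  # every row must be a probability distribution
    print([float(x) for x in row])
```

The row-sum assertion is what forces the one-step-up probability to be 0.06: with 0.9 for staying and 0.04 for dropping to poor, no other value lets rows 1 and 2 sum to 1.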


Problem 3 The random variables $\xi_1, \xi_2, \ldots$ are independent and with the common probability mass function

$$\begin{array}{c|cccc} k & 0 & 1 & 2 & 3 \\ \hline \Pr(\xi = k) & 0.1 & 0.3 & 0.2 & 0.4 \end{array}$$

Set $X_0 = 0$, and let $X_n = \max\{\xi_1, \ldots, \xi_n\}$ be the largest $\xi$ observed to date. Sketch the Markov chain, and determine the transition probability matrix.

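Solution 3 The chain is shown

[Figure: four-state chain on states 0, 1, 2, 3 with branch probabilities 0.1, 0.2, 0.3, 0.4 and 0.6]

with transition probability matrix

$$P = \begin{pmatrix} 0.1 & 0.3 & 0.2 & 0.4 \\ 0 & 0.4 & 0.2 & 0.4 \\ 0 & 0 & 0.6 & 0.4 \\ 0 & 0 & 0 & 1 \end{pmatrix}$$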
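The matrix of Problem 3 follows from one observation: the running maximum stays at i when the new draw is at most i, and jumps to j > i when the new draw equals j. A minimal sketch of that construction follows; the name `running_max_matrix` is illustrative, and the probability mass function is assumed to live on the values 0, 1, ..., m-1.

```python
from fractions import Fraction as F

def running_max_matrix(pmf):
    """Transition matrix of X_n = max(xi_1, ..., xi_n) for a pmf on 0..m-1."""
    m = len(pmf)
    P = [[F(0)] * m for _ in range(m)]
    for i in range(m):
        # The maximum stays at i when the new draw is at most i ...
        P[i][i] = sum(pmf[: i + 1])
        # ... and jumps to j > i when the new draw equals j.
        for j in range(i + 1, m):
            P[i][j] = pmf[j]
    return P

pmf = [F("0.1"), F("0.3"), F("0.2"), F("0.4")]
P3 = running_max_matrix(pmf)
for row in P3:
    print([float(x) for x in row])
```

The diagonal entries are the cumulative probabilities 0.1, 0.4, 0.6 and 1, which is why state 3 is absorbing.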


Problem 4 A 3-state Markov chain $\{X_n;\ n = 0, 1, \ldots\}$ has the transition probability matrix

$$P = \begin{pmatrix} 0.1 & 0.2 & 0.7 \\ 0.2 & 0.2 & 0.6 \\ 0.6 & 0.1 & 0.3 \end{pmatrix}$$

1. Compute the two-step transition matrix $P^2$.
2. What is $\Pr\{X_3 = 1 \mid X_1 = 0\}$?
3. What is $\Pr\{X_3 = 1 \mid X_0 = 0\}$?

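Solution 4

1. The two-step transition matrix is

$$P^{(2)} = \begin{pmatrix} 0.1 & 0.2 & 0.7 \\ 0.2 & 0.2 & 0.6 \\ 0.6 & 0.1 & 0.3 \end{pmatrix}^{2} = \begin{pmatrix} 0.47 & 0.13 & 0.40 \\ 0.42 & 0.14 & 0.44 \\ 0.26 & 0.17 & 0.57 \end{pmatrix}$$

2. We have $\Pr\{X_3 = 1 \mid X_1 = 0\} = P^{(2)}_{01}$, which can be obtained directly from the two-step transition matrix:

$$\Pr\{X_3 = 1 \mid X_1 = 0\} = P^{(2)}_{01} = 0.13$$

3. We have $\Pr\{X_3 = 1 \mid X_0 = 0\} = P^{(3)}_{01}$. Using the Chapman-Kolmogorov equation, we obtain

$$P^{(3)}_{01} = \sum_{k=0}^{2} P^{(2)}_{0k} P_{k1} = P^{(2)}_{00} P_{01} + P^{(2)}_{01} P_{11} + P^{(2)}_{02} P_{21} = 0.47 \cdot 0.2 + 0.13 \cdot 0.2 + 0.4 \cdot 0.1 = 0.16$$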
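The arithmetic of Solution 4 can be reproduced with plain matrix multiplication. The sketch below uses exact fractions and a small hand-written `matmul` helper (an illustrative name, not part of the original) to form the two-step and three-step matrices.

```python
from fractions import Fraction as F

def matmul(A, B):
    """Multiply two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

P = [[F("0.1"), F("0.2"), F("0.7")],
     [F("0.2"), F("0.2"), F("0.6")],
     [F("0.6"), F("0.1"), F("0.3")]]

P_2 = matmul(P, P)      # two-step transition matrix
P_3 = matmul(P_2, P)    # three-step matrix via Chapman-Kolmogorov

print(float(P_2[0][1]))  # Pr{X3 = 1 | X1 = 0}
print(float(P_3[0][1]))  # Pr{X3 = 1 | X0 = 0}
```

Only the entry in row 0, column 1 of each product is needed for parts 2 and 3, but computing the full product keeps the check of part 1 in the same place.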


Problem 5 Each morning an individual leaves his house and goes for a run. He is equally likely to leave either from his front or back door. Upon leaving the house, he chooses a pair of running shoes (or goes running barefoot if there are no shoes at the door from which he departed). On his return he is equally likely to enter, and leave his running shoes, either by the front or back door. If he owns a total of k pairs of running shoes, what proportion of the time does he run barefooted?

Solution 5 Let $X_n$ be the number of pairs of shoes at the front door. Then $X_n \in \{0, 1, 2, \ldots, k\}$ is a Markov chain.

[Figure: state transition diagram on states 0, 1, 2, ..., k-1, k; branches labeled 0.5]

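Writing the balance equations

$$\begin{aligned}
\tfrac{1}{2}\pi_0 &= \tfrac{1}{2}\pi_1 \quad\Rightarrow\quad \pi_0 = \pi_1 \\
\tfrac{1}{2}\pi_1 &= \tfrac{1}{2}\pi_2 \quad\Rightarrow\quad \pi_1 = \pi_2 \\
&\;\;\vdots \\
\tfrac{1}{2}\pi_{k-1} &= \tfrac{1}{2}\pi_k \quad\Rightarrow\quad \pi_{k-1} = \pi_k
\end{aligned}$$

Therefore, we have

$$\pi_0 = \pi_1 = \cdots = \pi_{k-1} = \pi_k$$

and we obtain, after using the normalization equation

$$\pi_0 + \pi_1 + \cdots + \pi_{k-1} + \pi_k = 1,$$

$$\pi_0 = \pi_1 = \cdots = \pi_k = \frac{1}{k+1}$$

He runs barefoot when he leaves by the front door in state 0 or by the back door in state k, each door being chosen with probability 1/2. Therefore the proportion of the time he runs barefooted is

$$\tfrac{1}{2}\pi_0 + \tfrac{1}{2}\pi_k = \pi_0 = \frac{1}{k+1}$$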
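The result 1/(k+1) can be checked by building the chain straight from the problem statement and confirming that the uniform distribution is stationary. This is a sketch under one stated assumption: the transition probabilities below are my reading of the verbal rules (a quarter for each combination of exit and return door, with a barefoot run leaving the state unchanged), and they may be labeled differently from the diagram in the original solution. The names `shoe_chain` and `barefoot_fraction` are illustrative.

```python
from fractions import Fraction as F

def shoe_chain(k):
    """Transition matrix for the number of pairs at the front door (0..k)."""
    P = [[F(0)] * (k + 1) for _ in range(k + 1)]
    for i in range(k + 1):
        # Leaves by the front door (probability 1/2).
        if i > 0:
            P[i][i] += F(1, 4)      # returns by the front door
            P[i][i - 1] += F(1, 4)  # returns by the back door
        else:
            P[i][i] += F(1, 2)      # barefoot, nothing moves
        # Leaves by the back door (probability 1/2).
        if i < k:
            P[i][i + 1] += F(1, 4)  # returns by the front door
            P[i][i] += F(1, 4)      # returns by the back door
        else:
            P[i][i] += F(1, 2)      # barefoot, nothing moves
    return P

def barefoot_fraction(k):
    """Long-run barefoot fraction under the uniform stationary distribution."""
    P = shoe_chain(k)
    pi = [F(1, k + 1)] * (k + 1)
    # Confirm that the uniform vector really satisfies pi = pi P.
    assert all(sum(pi[i] * P[i][j] for i in range(k + 1)) == pi[j]
               for j in range(k + 1))
    # Barefoot: no shoes at the front (state 0) or none at the back (state k).
    return F(1, 2) * pi[0] + F(1, 2) * pi[k]

print(barefoot_fraction(3))
```

Under this reading the matrix is symmetric, so its columns also sum to 1, which is the reason the uniform distribution is stationary for every k.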


Problem 6 An organization has N employees where N is a large number. Each employee has one of three possible job classifications and changes classifications (independently) according to a Markov chain with transition probabilities

$$P = \begin{pmatrix} 0.7 & 0.2 & 0.1 \\ 0.2 & 0.6 & 0.2 \\ 0.1 & 0.4 & 0.5 \end{pmatrix}$$

What percentage of employees are in each classification?

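Solution 6 The limiting probabilities can be obtained by solving any two equations from the set

$$\begin{aligned}
\pi_0 &= 0.7\pi_0 + 0.2\pi_1 + 0.1\pi_2 \\
\pi_1 &= 0.2\pi_0 + 0.6\pi_1 + 0.4\pi_2 \\
\pi_2 &= 0.1\pi_0 + 0.2\pi_1 + 0.5\pi_2
\end{aligned}$$

together with the normalization equation

$$\pi_0 + \pi_1 + \pi_2 = 1$$

We obtain

$$\pi_0 = \frac{6}{17}, \qquad \pi_1 = \frac{7}{17}, \qquad \pi_2 = \frac{4}{17}$$

that is, approximately 35.3%, 41.2% and 23.5% of the employees are in classifications 0, 1 and 2 respectively.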
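The linear system of Solution 6 can be solved mechanically. The sketch below is one way to do it, not the method of the original solution: it drops one balance equation, appends the normalization equation, and runs Gauss-Jordan elimination over exact fractions. The function name `stationary` is illustrative, and the code assumes the chain has a unique stationary distribution (otherwise the pivot search fails).

```python
from fractions import Fraction as F

def stationary(P):
    """Solve pi = pi P with sum(pi) = 1 by Gauss-Jordan elimination."""
    n = len(P)
    # Rows 0..n-2: balance equations sum_i pi_i (P[i][j] - delta_ij) = 0.
    A = [[F(P[i][j]) - (1 if i == j else 0) for i in range(n)] + [F(0)]
         for j in range(n - 1)]
    # Last row: normalization sum_i pi_i = 1.
    A.append([F(1)] * n + [F(1)])
    for col in range(n):
        # Find a row with a non-zero entry in this column and move it up.
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        A[col] = [x / A[col][col] for x in A[col]]
        # Eliminate the column from every other row.
        for r in range(n):
            if r != col and A[r][col] != 0:
                factor = A[r][col]
                A[r] = [x - factor * y for x, y in zip(A[r], A[col])]
    return [A[r][n] for r in range(n)]

P6 = [[F("0.7"), F("0.2"), F("0.1")],
      [F("0.2"), F("0.6"), F("0.2")],
      [F("0.1"), F("0.4"), F("0.5")]]

pi6 = stationary(P6)
print(pi6)
print([round(100 * float(x), 1) for x in pi6])  # percentages
```

Dropping one balance equation mirrors the remark in the solution that any two of the three equations suffice, because the three are linearly dependent.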


Problem 7 Consider a Markov chain with states $\{0, 1, 2, 3, 4\}$. Suppose $P_{0,4} = 1$, and suppose that when the chain is in state $i$, $i > 0$, the next state is equally likely to be any of the states $0, 1, 2, \ldots, i-1$. Find the limiting probabilities of this Markov chain.

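Solution 7 The state transition diagram is

[Figure: five-state chain on states 0 to 4 with branch probabilities 1, 1/2, 1/3 and 1/4]

and the transition matrix is

$$P = \begin{pmatrix} 0 & 0 & 0 & 0 & 1 \\ 1 & 0 & 0 & 0 & 0 \\ 1/2 & 1/2 & 0 & 0 & 0 \\ 1/3 & 1/3 & 1/3 & 0 & 0 \\ 1/4 & 1/4 & 1/4 & 1/4 & 0 \end{pmatrix}$$

The limiting probabilities are obtained by solving the equations

$$\begin{aligned}
\pi_0 &= \pi_1 + \tfrac{1}{2}\pi_2 + \tfrac{1}{3}\pi_3 + \tfrac{1}{4}\pi_4 \\
\pi_1 &= \tfrac{1}{2}\pi_2 + \tfrac{1}{3}\pi_3 + \tfrac{1}{4}\pi_4 \\
\pi_2 &= \tfrac{1}{3}\pi_3 + \tfrac{1}{4}\pi_4 \\
\pi_3 &= \tfrac{1}{4}\pi_4 \\
\pi_4 &= \pi_0
\end{aligned}$$

together with the normalization equation

$$\pi_0 + \pi_1 + \pi_2 + \pi_3 + \pi_4 = 1$$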


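We obtain

$$\begin{aligned}
\pi_4 &= \pi_0 \\
\pi_3 &= \tfrac{1}{4}\pi_4 = \tfrac{1}{4}\pi_0 \\
\pi_2 &= \tfrac{1}{3}\pi_3 + \tfrac{1}{4}\pi_4 = \left(\tfrac{1}{3}\cdot\tfrac{1}{4} + \tfrac{1}{4}\right)\pi_0 = \tfrac{1}{3}\pi_0 \\
\pi_1 &= \tfrac{1}{2}\pi_2 + \tfrac{1}{3}\pi_3 + \tfrac{1}{4}\pi_4 = \left(\tfrac{1}{2}\cdot\tfrac{1}{3} + \tfrac{1}{3}\cdot\tfrac{1}{4} + \tfrac{1}{4}\right)\pi_0 = \tfrac{1}{2}\pi_0
\end{aligned}$$

therefore

$$\pi_0\left(1 + \tfrac{1}{2} + \tfrac{1}{3} + \tfrac{1}{4} + 1\right) = 1 \quad\Longrightarrow\quad \pi_0 = \frac{12}{37}$$

and we get

$$\pi_0 = \frac{12}{37}, \quad \pi_1 = \frac{6}{37}, \quad \pi_2 = \frac{4}{37}, \quad \pi_3 = \frac{3}{37}, \quad \pi_4 = \frac{12}{37}$$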
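The back-substitution used in Solution 7 works for any number of states, because each state i between 1 and n-2 is entered only from higher-numbered states. A minimal sketch of that procedure follows; the function name `limiting_probabilities` and the generalization to n states are illustrative additions, and the original problem corresponds to n = 5.

```python
from fractions import Fraction as F

def limiting_probabilities(n=5):
    """Limiting distribution for states 0..n-1 with P[0][n-1] = 1 and
    P[i][j] = 1/i for j < i when i > 0."""
    # Work in units of pi_0: w[i] = pi_i / pi_0.
    w = [F(0)] * n
    w[0] = F(1)
    w[n - 1] = F(1)  # pi_{n-1} = pi_0, since state n-1 is entered only from 0
    for i in range(n - 2, 0, -1):
        # State i is entered only from states j > i, each with probability 1/j.
        w[i] = sum(w[j] / j for j in range(i + 1, n))
    total = sum(w)   # normalization: the probabilities must sum to 1
    return [x / total for x in w]

pi7 = limiting_probabilities()
print(pi7)
```

Working in units of pi_0 and normalizing at the end avoids solving a full linear system.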


Problem 8 Two teams, A and B, are to play a best-of-seven series of games. Suppose that the outcomes of successive games are independent, and each is won by A with probability $p$ and won by B with probability $1 - p$. Let the state of the system be represented by the pair $(a, b)$, where $a$ is the number of games won by A, and $b$ is the number of games won by B. Specify the transition probability matrix, and draw the state-transition diagram. Note that $a + b \le 7$ and that the series ends whenever $a = 4$ or $b = 4$.

Solution 8 The state transition diagram is

[Figure: grid of states (a, b) with 0 ≤ a ≤ 4 and 0 ≤ b ≤ 4, excluding (4, 4); from each state with a < 4 and b < 4, an arrow labeled p leads to (a+1, b) and an arrow labeled 1 − p leads to (a, b+1)]
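The transition probabilities of Problem 8 can also be written down as a sparse table, one row per state. The sketch below does so under one labeled assumption: states in which the series has ended are treated as absorbing (a self-loop with probability 1), which the problem statement does not spell out. The name `series_transitions` is illustrative.

```python
from fractions import Fraction as F

def series_transitions(p, wins_needed=4):
    """Map each state (a, b) to a dict of next states and probabilities."""
    T = {}
    for a in range(wins_needed + 1):
        for b in range(wins_needed + 1):
            if a == wins_needed and b == wins_needed:
                continue  # both teams cannot reach four wins
            if a == wins_needed or b == wins_needed:
                # Assumption: a finished series stays where it is.
                T[(a, b)] = {(a, b): F(1)}
            else:
                # A wins the next game with probability p, B with 1 - p.
                T[(a, b)] = {(a + 1, b): p, (a, b + 1): 1 - p}
    return T

T8 = series_transitions(F(3, 5))
print(len(T8))       # number of states
print(T8[(3, 3)])    # row of the matrix for the state (3, 3)
```

Each key of `T8` is one row of the transition probability matrix, and entries that are not listed are zero.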
