Академический Документы

Профессиональный Документы

Культура Документы

DWH Concepts&Informatica FAQs1

Загружено:

Raghavender Reddy PotulaИсходное описание:

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

DWH Concepts&Informatica FAQs1

Загружено:

Raghavender Reddy PotulaАвторское право:

Доступные форматы

Data warehousing concepts (FAQs)

1) What is Data warehouse? Data warehouse is relational database used for query analysis and reporting. By definition data warehouse is Subject-oriented, Integrated, Non-volatile, Time variant. Subject oriented Integrated : Data warehouse is maintained particular subject. : Data collected from multiple sources integrated into a user readable unique format. Non volatile Time variant 2) What is Data mart? A subset of data warehouse is called Data mart. 3) Difference between Data warehouse and Data mart? Data warehouse is maintaining the total organization of data. Multiple data marts used in data warehouse. where as data mart is maintained only particular subject. 4) Difference between OLTP and OLAP? OLTP is Online Transaction Processing. This is maintained current transactional data. That means insert, update and delete must be fast. 5) Explain ODS? Operational data store is a part of data warehouse. This is maintained only current transactional data. ODS is subject oriented, integrated, volatile, current data. 6) Difference between Power Center and Power Mart? Power center receive all product functionality including ability to multiple register servers and metadata across the repository and partition data. One repository multiple informatica servers. Power mart received all features except multiple register servers and partition data. 7) What is a staging area? Staging area is a temporary storage area used for transaction, integrated and rather than transaction process When ever your data put in data warehouse you need to clean and process your data. 8) Explain Additive, Semi-additive, Non-additive facts? Additive fact: Additive Fact can be aggregated by simple arithmetical additions. Semi-Additive fact: semi additive fact can be aggregated simple arithmetical : Maintain Historical date. : data display the weekly, monthly, yearly.

additions along with some other dimensions. Non-additive fact: Non-additive fact cant be added at all. 9) What is a Fact less Fact and example? Fact table which has no measures. 10) Explain Surrogate Key? Surrogate Key is a series of sequential numbers assigned to be a primary key for the table. 11) How many types of approaches in DHW? Two approaches: Top-down(Inmol approach), Bottom-up(Ralph Kimball) 12) Explain Star Schema? Star Schema consists of one or more fact table and one or more dimension tables that are related to foreign keys.Dimension tables are De-normalized, Fact table-normalized Advantages: Less database space & Simplify queries. 13) Explain Snowflake schema? Snow flake schema is a normalize dimensions to eliminate the redundancy. The dimension data has been grouped into one large table. Both dimension and fact tables normalized. 14) What is confirm dimension? If both data marts use same type of dimension that is called confirm dimension.If you have same type of dimension can be used in multiple fact that is called confirm dimension. 15) 16) Explain the DWH architecture? What is a slowly growing dimension? Slowly growing dimensions are dimensional data, these dimensions increasing dimension data with out update existing dimensions. That means appending new data to existing dimensions. 17) What is a slowly changing dimension? Slowly changing dimension are dimension data, these dimensions increasing dimensions data with update existing dimensions. Type1: Rows containing changes to existing dimensional are update in the target by overwriting the existing dimension. In the Type1 Dimension mapping, all rows contain current dimension data. Use the type1 dimension mapping to update a slowly changing dimension table when you do not need to keep any previous versions of dimensions in the table. Type2: The Type2 Dimension data mapping inserts both new and changed dimensions into the target. Changes are tracked in the target table by versioning the primary key and creating a version number for each dimension in the table.

Use the Type2 Dimension/version data mapping to update a slowly changing dimension when you want to keep a full history of dimension data in the table. Version numbers and versioned primary keys track the order of changes to each dimension. Type3: The type 3 dimension mapping filters source rows based on user-defined comparisons and inserts only those found to be new dimensions to the target. Rows containing changes to existing dimensions are updated in the target. When updating an existing dimension the informatica server saves existing data in different columns of the same row and replaces the existing data with the updates. 18) When you use for dynamic cache. Your target table is also look up table then you go for dynamic cache .In dynamic cache multiple matches return an error. use only = operator. 19) what is lookup override? Override the default SQL statement. You can join multiple sources use lookup override. By default informatica server add the order by clause. 20) we can pass the null value in lookup transformation? Lookup transformation returns the null value or equal to null value. 21) what is the target load order? You specify the target load order based on source qualifiers in a mapping.if u have the multiple source qualifiers connected to the multiple targets you can designate the order in which informatica server loads data into the targets. 22) what is default join that source qualifier provides? Inner equi join. 23) what are the difference between joiner transformation and source qualifier transformation? You can join heterogeneous data sources in joiner transformation, which we cannot achieve in source qualifier transformation. You need matching keys to join two relational sources in source qualifier transformation. Where you doesnt need matching keys to join two sources. Two relational sources should come from same data source in source qualifier.You can join relational sources, which are coming from different sources in source qualifier.You can join relational sources which are coming from different sources also. 24) what is update strategy transformation? Whenever you create the target table whether you are store the historical data or current transaction data in to target table. 25) 26) Describe two levels in which update strategy transformation sets? what is default source option for update strategy transformation?

Data driven. 27) What is data driven? The information server follows instructions coded into update strategy transformations with in the session mapping determine how to flag records for insert,update,delete or reject if u do not choose data driven option setting , the informatica server ignores all update strategy transformations in the mapping. 28) what are the options in the target session of update strategy transformation? Insert Delete Update Update as update Update as insert Update else insert Truncate table. 29) Difference between the source filter and filter? Source filter is filtering the data only relational sources. Where as filter transformation filter the data any type of source.

30)

what is a tracing level? Amount of information sent to log file. -- What are the types of tracing levels? Normal,Terse,verbose data,verbose initialization. --Explain sequence generator transformation?

-- can you connect multiple ports from one group to multiple transformations? Yes 31) can you connect more than one group to the same target or transformation? NO

32)

what is a reusable transformation? Reusable transformation can be a single transformation. This transformation can be used in multiple mappings. When you need to incorporate this transformation into mapping you add an instance of it to

mapping. Later if you change the definition of the transformation, all instances of it inherit the changes. Since the instance of reusable transformation is a pointer to that transformation can change the transformation in the transformation developer, its instance automatically reflect these changes. This feature can save U great deal of work. -- what are the methods for creating reusable transformation? Two methods 1) Design it in the transformation developer. 2) Promote a standard transformation from the mapping designer. After you add a transformation to the mapping, you can promote it to status of reusable transformation. Once you promote a standard transformation to reusable status, you can demote it to a standard transformation at any time. If u change the properties of a reusable transformation in mapping , you can revert it to the original reusable transformation properties by clicking the revert. 33) what are mapping parameters and mapping variables? Mapping parameter represents a constant value that you can define before running a session. A mapping parameter retains the same value throughout the entire session. When you use the mapping parameter , you declare and use the parameter in a mapping or mapplet.Then define the value of parameter in a parameter file for the session. Unlike a mapping parameter, a mapping variable represents a value that can change through out the session. The informatica server save the value of mapping variable to the repository at the end of session run and uses that value next time you run the session. 34) can you use the mapping parameters or variables created in one mapping into another mapping? NO, we can use mapping parameters or variables in any transformation of the same mapping or mapplet in which have crated mapping parameters or variables. 35) Can you are the mapping parameters or variables created in one mapping into any other result transformation. Yes because the reusable transformation is not contained with any mapplet or mapping. 36) How the informatica server sorts the string values in rank transformation? When the informatica server runs in the ASCII data movement mode it sorts session data using binary sort order. If you configures the session to use a binary sort order, the informatica server calculates the binary value of each string and returns the specified number of rows with the highest binary values for the string.

37) What is the rank index in rank transformation?

The designer automatically creates a RANKINDEX port for each Rank transformation. The informatica server uses the Rank Index port to store the ranking position for each record in a group.For example, if you create a Rank transformation that ranks the top 5 sales persons for each quarter, the rank index number the salespeople from 1 to 5. 38) what is the mapplet? Mapplet is a set of transformation that you build in the mapplet designer and you can use in multiple mappings. 39) Difference between mapplet and reusable transformation? Reusable transformation can be a single transformation.Where as mapplet use multiple transformations. 40) what is a parameter a file? Paramater file defines the values for parameter and variables. WORKFLOW MANAGER 41) what is a server? The power center server moves data from source to targets based on a workflow and mapping metadata stored in a repository. 42) what is a work flow? A workflow is a set of instructions that describe how and when to run tasks related to extracting,transformation and loading data. -- what is session? A session is a set of instructions that describes how to move data from source to target using a mapping. -- what is workflow monitor? Use the work flow monitor work flows and stop the power center server. 43) explain a work flow process? The power center server uses both process memory and system shared memory to perform these tasks. Load manager process: stores and locks the workflow tasks and start the DTM run the sessions. Data Transformation Process DTM: Perform session validations,create threads to initialize the session,read,write and transform data, and handle pre and post session operations. The default memory allocation is 12,000,000 bytes. 44) What are types of threads in DTM? The main dtm thread is called the master thread.

Mapping thread. Transformation thread. Reader thread. Writer thread. Pre-and-post session thread. 45) Explain work flow manager tools? 1) Task developer. 2) Work flow designer. 3) Worklet designer. 46) Explain work flow schedule. You can sehedule a work flow to run continuously, repeat at given time or interval or you manually start a work flow.By default the workflow runs on demand. 47) Explain stopping or aborting a session task? If the power center is executing a session task when you issue the stop the command the power center stop reading data. If continuous processing and writing data and committing data to targets. If the power center cant finish processing and committing data you issue the abort command. You can also abort a session by using the Abort() function in the mapping logic. 48) What is a worklet? A worklet is an object that represents a set of taske.It can contain any task available in the work flow manager. You can run worklets inside a workflow. You can also nest a worklet in another worklet.The worklet manager does not provide a parameter file for worklets. The power center server writes information about worklet execution in the workflow log. 49) what is a commit interval and explain the types? A commit interval is the interval at which power center server commits data to targets during a session. The commit interval the number of rows you want to use as a basis for the commit point. Target Based commit: The power center server commits data based on the number of target rows and the key constraints on the target table. The commit point also depends on the buffer block size and the commit interval. Source-based commit:--------------------------------------------User-defined commit:----------------------------------------------

50) Explain bulk loading? You can use bulk loading to improve performance of a session that inserts a large amount of data to a db2,sysbase,oracle or MS SQL server database. When bulk loading the power center server by passes the database log,which speeds performance. With out writing to the database log, however the target database cant perform rollback.As a result you may not be perform recovery. 51) What is a constraint based loading? When you select this option the power center server orders the target load on a row-by-row basis only. Edit tasks->properties->select treat source rows as insert. Edit tasks->config object tab->select constraint based If session is configured constraint absed loading when target table receive rows from different sources.The power center server revert the normal loading for those tables but loads all other targets in the session using constraint based loading when possible loading the primary key table first then the foreign key table. Use the constraint based loading only when the session option treat rows as set to insert. Constraint based load ordering functionality which allows developers to read the source once and populate parent and child tables in a single process. 52) Explain incremental aggregation? When using incremental aggregation you apply captured changes in the source to aggregate calculations in a session.If the source changes only incrementally and you can capture changes you can configure the session to process only those changes. This allows the power center server to update your target incrementally rather than forcing it to process the entire source and recalculate the same data each time you run the session. You can capture new source data.use incremental aggregation when you can capture new source data much time you run the session.Use a stored procedure on filter transformation only new data. Incremental changes do not significantly change the target.Use incremental aggregation when the changes do not significantly change the target.If processing the incrementally changed source alters more than half the existing target, the session may not benefit from using incremental aggregation. In this case drop the table and recreate the target with complete source data. 53) Processing of incremental aggregation The first time u run an incremental aggregation session the power center server process the entire source.At the end of the session the power center server stores aggregate data from the session runs in two files, the index file and the data file .The power center server creates the files in a local directory.

Transformations. --- what is transformation? Transformation is repository object that generates modifies or passes data. 54) what are the type of transformations? 2 types: 1) active 2) passive. -- explain active and passive transformation? Active transformation can change the number of rows that pass through it.No of output rows less than or equal to no of input rows. Passive transformation does not change the number of rows.Always no of output rows equal to no of input rows. 55) Difference filter and router transformation. Filter transformation to filter the data only one condition and drop the rows dont meet the condition. Drop rows does not store any ware like session log file.. Router transformation to filter the data based on multiple conditions and give yiou the option to route rows that dont match to a default group. 56) what r the types of groups in router transformation? Router transformation 2 groups 1. Input group 2. output groups. Output groups in 2 types. 1. user defined group 2. default group. 57) difference between expression and aggregator transformation? Expression transformation calculate the single row values before writes the target.Expression transformation executed by row-by-row basis only. Aggregator transformation allows you to perform aggregate calculations like max, min,avg Aggregate transformation perform calculation on groups. 58) How can u improve the session performance in aggregate transformation? Use stored input. 59) what is aggregate cache in aggregate transformation? The aggregate stores data in the aggregate cache until it completes aggregate calculations.When u run a session that uses an aggregate transformation , the informatica server creates index and

data caches in memory is process the transformation. If the informatica server requires more space it seores overview values in cache files. 60) explain joiner transformation? Joiner transformation joins two related heterogeneous sources residing in different locations or files. --What are the types of joins in joiner in the joiner transformation? Normal Master outer Detail outer Full outer 61) Difference between connected and unconnected transformations. Connected transformation is connected to another transformation with in a mapping. Unconnected transformation is not connected to any transformation with in a mapping.

62) In which conditions we cannot use joiner transformation(limitations of joiner transformation)? Both pipelines begin with the same original data source. Both input pipelines originate from the same source qualifier transformation. Both input pipelines originate from the same normalizer transformation Both input pipelines originate from the same joiner transformation. Either input pipelines contains an update strategy transformation Either input pipelines contains sequence generator transformation. 63) what are the settings that u use to configure the joiner transformation? Master and detail source. Type of join Condition of the join 64) what is look up transformation look up transformation can be used in a table view based on condition by default lookup is left outer join 65) why use the lookup transformation?

To perform the following tasks. Get a related value.For example if your table includes employee ID,but you want to include such as gross sales per invoice or sales tax but not the calculated value(such as net sales) Update slowly changing dimension tables. You can use a lookup transformation to determine whether records already exist in the target. 66) what are the types of lookup? Connected and unconnected 67) difference between connected and unconnected lookup? Connected lookup Unconnected lookup

Receives input values directly Receives input values from the result of a clkp from the pipe line. expression in a another transformation. U can use a dynamic or static Cache Cache includes all lokkup columns Cache includes all lookup/output ports in the used in the mapping(that is lookup lookup condition and the lookup/return port. table columns included in the lookup condition and lookup table columns linked as output ports to other transformations) Can return multiple columns from Designate one return port(R).Returns one the same row or insert into the column from each row. dynamic lookup cache. If there is no match for the lookup If there is no matching for the lookup condition condition, the informatica server the informatica server returns NULL returns the default value for all output ports.If u configure dynamic caching the informatica server inserts rows into the cache. Pass multiple output values to another transformatnion.Link lookup/output ports to another transformation Supports values. user-defined default Pass one output value to another transformation.The lookup/output/return port passes the same value to the --------------------------------------------------------Does not support user-defined default values. U can use a static cache

68) explain index cache and data cache? The informatica server stores conditions values in the index cache and output values in the data cache.

69) What are the types of lookup cache? Persistent cache: U can save the look up cache files and reuse them the next time the informatica server processes a lookup transformation to use the cache. Static cache: U can configure a static or read-only lookup table.By default informatica server creates a static cache.It caches the lookup table and lookup values in the cache for each row that comes into the transformation.When the lookup condition is true the inforamtica server does not update the cache while it processes the lookup transformation. Dynamic cache: If you want to cache the target table and insert new rows into cache and the target you can create a look up transformation to use dynamic cache.The informatica server dynamically inserts data into the target table. Shared cache: You can share the lookup cache between multiple transformations.You can share unnamed cache between transformation in the same mapping.

70) Difference between static cache and dynamic cache? Static cache Dynamic cache

You cannot insert or update You can insert rows into the cache as you the cache pass rows to the target The informatica server returns a value from the lookup table or cache when the condition is true,.When the condition is true the informatica server returns the default value for connected transformation ORACLE: 71) Difference between primary key and unique key? Primary key is Not null unique Unique accept the null values. 72) 73) 74) 75) Difference between inserting and sub string? What is referential integrity? Difference between view and materialized view? What is Redolog file? The set of redo log files for a database is collectively know as the databases redo log. 76) What is RollBack statement? The informatica server inserts rows into the cache when the condition is false.This indicates that the row in the cache or target table.You can pass these rows to the target table.

A database contains one or more rollback segments to temporarily store undo information.Roll back segment are used to generate read consistant data base information during database recovery to rooback uncommitted transactions for users. -- what is table space? A data base is divided into logical storage unit called table space.A table space is used to grouped related logical structures together. -- How to delete the duplicate records. -- What are the difference types of joins in Oracle? Self-join,equi-join,outer join. 77) What is outer join? One of which rows that dont match those in the commen column of another table. 78) write query Max 5 salaries? Select * from emp e where 5>(select count(*) from emp where sal>e.sal) 79) what is synonym?

80) 81) 82) 83) 84)

--------------------------------

What is bit map index and example? What is stored procedure and advantages? Explain cursor and how many types of triggers in oracle? Trigger is stored procedure.Trigger is automatically executed.

85)

Difference between function and stored procedure? Function returns a value.Procedure does not return a value(but returns a value tru IN OUT parameters!!!!!!)

86) 87)

Difference between replace and translate? Write the query nth max sal Select distinct (a.sal) from emp a where &n=(select count(distinct(b.sal) from emp b where a.sal<=b.sal);

88)

Write the query odd and even numbers?

Select * from emp where (rowid,1) in (select rowid,mod(rownum,2) from emp)

Вам также может понравиться

- VIVANTA Central Court - Luxury Homes in Mokila's Emerging HubДокумент17 страницVIVANTA Central Court - Luxury Homes in Mokila's Emerging HubRaghavender Reddy PotulaОценок пока нет

- SAP Process IntegrationДокумент18 страницSAP Process IntegrationRaghavender Reddy PotulaОценок пока нет

- A Step-By-Step Guide On IDoc-ALE Between Two SAP ServersДокумент22 страницыA Step-By-Step Guide On IDoc-ALE Between Two SAP ServersRavindra Chowdary Gokina100% (1)

- Aggregation With Out A AggregatorДокумент4 страницыAggregation With Out A AggregatorRaghavender Reddy PotulaОценок пока нет

- Test Paper 18: APTITUDE TEST Questions on Environmental IssuesДокумент17 страницTest Paper 18: APTITUDE TEST Questions on Environmental IssuesRaghavender Reddy Potula100% (1)

- Interview SessionДокумент8 страницInterview SessionRaghavender Reddy PotulaОценок пока нет

- Unix Job Setup Template ExplainedДокумент4 страницыUnix Job Setup Template ExplainedRaghavender Reddy PotulaОценок пока нет

- C++ Interview Questions and AnswersДокумент30 страницC++ Interview Questions and Answersapi-26368914Оценок пока нет

- Oracle Interview Questions and Answers: Components, Structures, and Key ConceptsДокумент117 страницOracle Interview Questions and Answers: Components, Structures, and Key ConceptsRaghavender Reddy PotulaОценок пока нет

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5782)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (890)

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (399)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (265)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (344)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2219)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (119)

- Oval DPR Overall For NGN ProjectДокумент4 страницыOval DPR Overall For NGN ProjectRashmi Ranjan MohantyОценок пока нет

- Products Guide Book: - Crawler CranesДокумент16 страницProducts Guide Book: - Crawler CranesSarifa Rangunwala Sen100% (1)

- Yodlee CrossSell Case StudyДокумент4 страницыYodlee CrossSell Case StudylocalonОценок пока нет

- Microsoft Outlook QuestionДокумент1 страницаMicrosoft Outlook QuestionRipunjay MishraОценок пока нет

- Part 1 Piping SystemsДокумент22 страницыPart 1 Piping SystemsfacebookshopОценок пока нет

- Configure Dynamic NAT and PATДокумент20 страницConfigure Dynamic NAT and PATMary Amirtha Sagayee. GОценок пока нет

- Glory gfs220 Manual (01 90) (31 40)Документ10 страницGlory gfs220 Manual (01 90) (31 40)alexander quintero romeroОценок пока нет

- Engineer'S Stamp: Contractor'S Stamp:: Al Qunfudhah New City Feeder Water Transmission SystemДокумент7 страницEngineer'S Stamp: Contractor'S Stamp:: Al Qunfudhah New City Feeder Water Transmission SystemLouis ClarkОценок пока нет

- Army C-sUAS Systems AssessmentДокумент4 страницыArmy C-sUAS Systems AssessmentArthur WongОценок пока нет

- Emebbedd Question BankДокумент25 страницEmebbedd Question Banksujith100% (3)

- Autel Maxisys Citroen v4.10 Coverage TableДокумент58 страницAutel Maxisys Citroen v4.10 Coverage TableBeto HoffmannОценок пока нет

- SPE 00 301120 40mah en 1.0verДокумент10 страницSPE 00 301120 40mah en 1.0verAndreea FilipОценок пока нет

- John Deere Parts CatalogueДокумент47 страницJohn Deere Parts CatalogueAdam Ioan100% (2)

- LOW POWER SOLUTIONSДокумент48 страницLOW POWER SOLUTIONSDavidОценок пока нет

- QCL Certification Pvt. LTDДокумент3 страницыQCL Certification Pvt. LTDRamaKantDixitОценок пока нет

- Performance of Fiber ReinforcedДокумент16 страницPerformance of Fiber ReinforcedBang BangОценок пока нет

- The 4 - 95 Stirling Engine For Underwater ApplicationДокумент4 страницыThe 4 - 95 Stirling Engine For Underwater ApplicationVladimirDvortsovОценок пока нет

- An Introduction To SAP Business One CloudДокумент14 страницAn Introduction To SAP Business One CloudBharathkumar PalaniveluОценок пока нет

- Formal vs Informal Communication TypesДокумент13 страницFormal vs Informal Communication TypesOmar GalalОценок пока нет

- Water Overflow Rage and Bubble Surface Area Flux in FlotationДокумент105 страницWater Overflow Rage and Bubble Surface Area Flux in FlotationRolando QuispeОценок пока нет

- Bornemann Twin Screw Pumps Series W V U and TДокумент16 страницBornemann Twin Screw Pumps Series W V U and TFelipeОценок пока нет

- Blue HyundaiДокумент7 страницBlue HyundaiDANIELОценок пока нет

- GITAM University Industrial Training ReportДокумент6 страницGITAM University Industrial Training ReportAkash RanjanОценок пока нет

- Zroute Training Lab v1 0Документ23 страницыZroute Training Lab v1 0ChetanОценок пока нет

- AMCRPS - AKILA - InfoSheet - GBДокумент2 страницыAMCRPS - AKILA - InfoSheet - GBmigusagoОценок пока нет

- Shandong Lingong Construction Machinery Co., Ltd. Null Product Parts CatalogДокумент353 страницыShandong Lingong Construction Machinery Co., Ltd. Null Product Parts Catalogadelmomoura100% (1)

- SiemensДокумент9 страницSiemensGhassen Khalil100% (2)

- Panduit Electrical CatalogДокумент1 040 страницPanduit Electrical CatalognumnummoОценок пока нет

- Ingersoll Rand Rotary Screw Air Compressors 15 To 50 HP Brochure JECДокумент11 страницIngersoll Rand Rotary Screw Air Compressors 15 To 50 HP Brochure JECMardeni OliveiraОценок пока нет

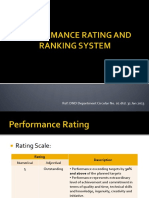

- DND DC No 01 31JAN2013 - Performance Rating and Ranking SystemДокумент6 страницDND DC No 01 31JAN2013 - Performance Rating and Ranking SystemAbdushaheed Ibn IbrahimОценок пока нет