Sparse Implementation of Revised Simplex Algorithms on Parallel Computers

Wei Shu and Min-You Wu

Abstract

Parallelizing sparse simplex algorithms is a challenging problem. Because the matrices are very sparse and communication is heavy, the ratio of computation to communication is extremely low. It is therefore necessary to select parallel algorithms, partitioning patterns, and communication optimizations carefully to achieve a speedup. Two implementations on Intel hypercubes are presented in this paper. The experimental results show that a nearly linear speedup can be obtained with the basic revised simplex algorithm. However, the basic revised simplex algorithm has many fill-ins. We also implement a revised simplex algorithm with LU decomposition. It is a very sparse algorithm, and it is very difficult to achieve a speedup with it.

This work appeared in the Proceedings of the Sixth SIAM Conference on Parallel Processing for Scientific Computing, March 22-24, 1993, Norfolk. This research was partially supported by NSF grants CCR-9109114 and CCR-8809165.

1 Introduction

Linear programming is a fundamental problem in the field of operations research. Many methods are available for solving linear programming problems, of which the simplex method is the most widely used because of its simplicity and speed. Although it is well known that the worst-case computational complexity of the simplex method is not polynomial in the number of equations, in practice it solves many linear programming problems quickly. Interior-point algorithms are polynomial and potentially easy to parallelize [7, 1]. However, since the interior-point algorithm has not been well developed yet, the simplex algorithm is still applied in most real applications.

Given a linear programming problem, the simplex method starts from an initial feasible solution and moves toward the optimal solution. The execution is carried out iteratively. At each iteration, it improves the value of the objective function. This procedure terminates after a finite number of iterations. The simplex method was first designed in 1947 by Dantzig [3]. The revised simplex method is a modification of the original one that significantly reduces the total number of calculations to be performed at each iteration [4]. In this paper, we use the revised simplex algorithm to solve the linear programming problem.

Parallel implementations of linear programming algorithms have been studied on different machine architectures, including both distributed and shared memory parallel machines. Sheu et al. presented a technique for mapping linear programming problems onto the BBN Butterfly parallel computer [10]. The performance of parallel simplex algorithms was studied on the Sequent Balance shared memory machine and a 16-processor Transputer system from INMOS Corporation [12]. Parallel simplex algorithms for loosely coupled message-passing parallel systems were presented in [5]. An implementation of the simplex algorithm on fixed-size hypercubes was proposed by Ho et al. [6]. The performance of the simplex and revised simplex methods on the Intel iPSC/2 hypercube was examined in [11]. All existing works are for dense algorithms and there is no sparse implementation.

Parallel computers are used to solve large application problems. Large-scale linear programming problems are very sparse. Therefore, dense algorithms are not of practical value. We implemented two sparse algorithms. The first one, the revised simplex algorithm, can be a good parallel algorithm but has many fill-ins. It is easy to parallelize and can yield good speedup. The second one, the revised simplex algorithm with LU decomposition [2], is one of the best sequential simplex algorithms. It is a sophisticated algorithm with few fill-ins; however, it is extremely difficult to parallelize and to obtain a speedup with, especially on distributed memory computers. It is in fact one of the most difficult algorithms we have ever implemented.

2 Revised Simplex Algorithm

The linear programming problem can be formulated as follows: solve $Ax = b$ while minimizing $z = c^T x$, where $A$ is an $m \times n$ matrix representing $m$ constraints, $b$ is the right-hand-side vector, $c$ is the coefficient vector that defines the objective function $z$, and $x$ is a column of $n$ variables. A fundamental theorem of linear programming states that an optimal solution, if it exists, occurs when $(n - m)$ components of $x$ are set to zero. In most practical problems, some or all constraints may be specified by linear inequalities, which can easily be converted to linear equality constraints by adding nonnegative slack variables. Similarly, artificial variables may be added to keep $x$ nonnegative [8]. We use the two-phase revised simplex method, in which the artificial variables are driven to zero in the first phase and an optimal solution is obtained in the second phase.

A basis consists of $m$ linearly independent columns of matrix $A$, represented as $B = [A_{j_1}, A_{j_2}, \ldots, A_{j_m}]$. A basic solution can be obtained by setting $(n - m)$ components of $x$ to zero and solving the resulting $m \times m$ system of linear equations. The $m$ remaining components are called the basic variables; the other $(n - m)$ components are called the nonbasic variables. Each set of basic variables represents a solution, but not necessarily the optimal one. To approach the optimum, we vary the basis by exchanging a basic variable with a nonbasic variable each time, such that the new basis represents a better solution than the previous one. The variable that enters the basis is called the entering variable, and the variable that leaves the basis is called the leaving variable. This process forms the kernel of the revised simplex algorithm, as shown in Fig. 1. It is repeated iteratively in both phases. The slack and artificial variables are commonly chosen as the initial basis, which always results in a basic feasible solution.

As shown in Fig. 1, the principal cost of determining the entering variable and of determining the leaving variable is the same: a vector-matrix multiplication followed by a search for the minimum value. These two steps account for the major part of the computation, more than 98% of the total computation for large test data. The third step updates the basic variables and the basis inverse. The parallelization of the revised simplex algorithm therefore reduces to applying a parallel vector-matrix multiplication and a minimum reduction repeatedly in each iteration.

3 Parallelization of Revised Simplex Algorithm


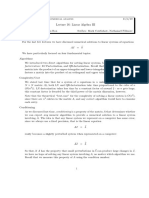

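1. Determine the entering variable by using the optimality condition,

   $c'_s = \min\{c'_j ;\ j = 1, 2, \ldots, n \text{ and } x_j \text{ is nonbasic}\}$, where $c'_j = c_j + \sum_{i=1}^{m} \pi_i a_{ij}$.

   If $c'_s \ge 0$, then the current value is optimal and the algorithm stops.

2. Determine the leaving variable by using the feasibility condition,

   $\dfrac{b_r}{y_{rs}} = \min\left\{\dfrac{b_i}{y_{is}} ;\ i = 1, 2, \ldots, m \text{ and } y_{is} > 0\right\}$, where $Y_s = [y_{is} ;\ i = 1, 2, \ldots, m]$ and $Y_s = B^{-1} A_s$.

   If $y_{is} \le 0$ for $i = 1, 2, \ldots, m$, then the problem is unbounded and the algorithm stops.

3. The basic variable in row $r$ is replaced by variable $x_s$, and the new basis is $B = [A_{j_1}, \ldots, A_{j_{r-1}}, A_s, A_{j_{r+1}}, \ldots, A_{j_m}]$. Update the basis inverse $B^{-1}$, the vector of simplex multipliers $\pi$, and the right-hand-side vector $b$ by

   $B^{-1} = B^{-1} - Y_s B^{-1}(r,:) / y_{rs}$

   $\pi = \pi - c'_s B^{-1}(r,:)$

   $b = b - Y_s \dfrac{b_r}{y_{rs}}$

   The updates of $B^{-1}$ and $b$ apply to rows $i \ne r$; row $r$ itself is divided by $y_{rs}$, and the update of $\pi$ uses the new row $r$ of $B^{-1}$.

Fig. 1. The Revised Simplex Algorithm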
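The three steps of Fig. 1 can be sketched in a few lines of dense, sequential Python. This is only an illustration under stated assumptions, not the authors' sparse hypercube code: the function name `revised_simplex`, the tolerance `eps`, and the requirement that the initial basis columns form an identity matrix (for example, slack variables) are all assumptions made for the sketch.

```python
def revised_simplex(A, b, c, basis, eps=1e-9, max_iter=1000):
    """Dense sketch of the Fig. 1 kernel (illustrative, not the paper's code).

    A is a list of m rows, c has n entries, and `basis` lists m column
    indices. Assumption: the initial basis columns form the identity
    matrix, so B^{-1} starts as I and the basic solution starts as b.
    """
    m, n = len(A), len(c)
    basis = list(basis)
    Binv = [[1.0 if i == j else 0.0 for j in range(m)] for i in range(m)]
    xb = [float(v) for v in b]                      # current right-hand side
    pi = [-float(c[basis[i]]) for i in range(m)]    # pi = -c_B^T B^{-1}, B = I
    for _ in range(max_iter):
        # Step 1: entering variable = nonbasic column with minimum c'_j.
        in_basis = set(basis)
        s, cs = None, -eps
        for j in range(n):
            if j in in_basis:
                continue
            cj = c[j] + sum(pi[i] * A[i][j] for i in range(m))
            if cj < cs:
                s, cs = j, cj
        if s is None:
            break                                   # c'_s >= 0: optimal
        # Step 2: Y_s = B^{-1} A_s, then the minimum-ratio test.
        Y = [sum(Binv[i][k] * A[k][s] for k in range(m)) for i in range(m)]
        r, best = None, None
        for i in range(m):
            if Y[i] > eps and (best is None or xb[i] / Y[i] < best):
                r, best = i, xb[i] / Y[i]
        if r is None:
            raise ValueError("problem is unbounded")
        # Step 3: update B^{-1}, the right-hand side, and pi.
        row_r = [v / Y[r] for v in Binv[r]]         # new row r of B^{-1}
        for i in range(m):
            if i != r:
                Binv[i] = [Binv[i][k] - Y[i] * row_r[k] for k in range(m)]
                xb[i] -= Y[i] * best
        Binv[r] = row_r
        xb[r] = best
        pi = [pi[k] - cs * row_r[k] for k in range(m)]
        basis[r] = s
    else:
        raise RuntimeError("iteration limit reached")
    x = [0.0] * n
    for i, j in enumerate(basis):
        x[j] = xb[i]
    return x, sum(c[j] * x[j] for j in range(n))
```

For a small test problem (minimize $-3x_1 - 5x_2$ subject to $x_1 \le 4$, $2x_2 \le 12$, $3x_1 + 2x_2 \le 18$, with three slack columns as the initial basis), calling `revised_simplex([[1, 0, 1, 0, 0], [0, 2, 0, 1, 0], [3, 2, 0, 0, 1]], [4, 12, 18], [-3, -5, 0, 0, 0], [2, 3, 4])` reaches $x_1 = 2$, $x_2 = 6$ after two basis exchanges.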

$A$ is a sparse matrix with two properties: (1) it is accessed exclusively by column, so a column-by-column storage scheme is used; and (2) it is read-only during the entire computation. The nonzero elements of matrix $A$ are packed into a one-dimensional array aA, where aA(k) is the kth nonzero element of $A$ in column-major order. Another array iA, of the same size as aA, contains the row indices that correspond to the nonzero elements of $A$. In addition, a one-dimensional array jA gives the index in aA at which each column of $A$ begins.

Matrix $A$ is partitioned column-wise because of the nature of the vector-matrix multiplication. If row partitioning were chosen, a row-wise reduction would introduce heavy communication. Notice that the sparsity of matrix $A$ is not evenly distributed. For example, the right portion of matrix $A$, consisting of slack and artificial variables with only one nonzero element in each column, is usually sparser than the left portion. To distribute the computation density evenly, a scatter column partitioning scheme is used [9]. Vector $\pi$ is duplicated on all nodes to eliminate communication during the multiplication. In addition, $\pi$ is stored as a full-length vector. The multiplication of vector $\pi$ and matrix $A$ can thus be performed efficiently because (1) searching for $\pi_i$ is not needed, and (2) the amount of work depends only on the number of nonzero elements in matrix $A$. The entire multiplication is then performed in parallel without any communication, leaving the result vector $c'$ distributed. A reduction is performed to find the minimum value in $c'$. This completes the first multiplication and reduction.

Compared to the first multiplication, the second one is a multiplication of a matrix by a vector, instead of a vector by a matrix. Matrix $B^{-1}$ is therefore partitioned by row to avoid communication. Vector $A_s$ is also duplicated, by broadcasting column $s$ of $A$ once the entering variable is selected. This broadcast is the only communication interface between the two steps; the rest is the same as in the first one.

In the third step, the basic variable in row $r$ is replaced by variable $x_s$ and the new basis $B$ is given by $B = [A_{j_1}, \ldots, A_{j_{r-1}}, A_s, A_{j_{r+1}}, \ldots, A_{j_m}]$. The basis $B$ is not physically represented. Instead, the basis inverse $B^{-1}$ is manipulated and needs to be updated. This update may generate many fill-ins; therefore, a linked-list scheme is used to store $B^{-1}$. With the scatter partitioning technique, the load can be well balanced. The performance in Table 1 shows that a nearly linear speedup can be obtained with this algorithm.
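The column storage and the first multiplication and reduction described above can be sketched as follows. Several details are assumptions made for illustration: the helper names (`to_csc`, `scatter_columns`, `local_min_reduced_cost`, `entering_variable`) are hypothetical, indices are 0-based, `jA` carries one extra entry marking the end of the last column, "scatter" is taken to mean a cyclic assignment of columns, and the processors are simulated by an ordinary loop rather than by message passing.

```python
def to_csc(dense):
    """Pack the nonzeros of a dense m x n matrix, column by column, into
    (aA, iA, jA): values, row indices, and column start offsets."""
    m, n = len(dense), len(dense[0])
    aA, iA, jA = [], [], [0]
    for j in range(n):
        for i in range(m):
            if dense[i][j] != 0:
                aA.append(dense[i][j])
                iA.append(i)
        jA.append(len(aA))          # assumption: jA has n + 1 entries
    return aA, iA, jA


def scatter_columns(n, num_procs, rank):
    """Columns owned by processor `rank` under cyclic (scatter) partitioning."""
    return range(rank, n, num_procs)


def local_min_reduced_cost(aA, iA, jA, c, pi, columns, nonbasic):
    """Return (c'_j, j) for the smallest reduced cost among the locally owned
    nonbasic columns, or None if this processor owns no nonbasic column.
    pi is a full-length vector, so pi[iA[k]] is a direct lookup."""
    best = None
    for j in columns:
        if j not in nonbasic:
            continue
        cj = c[j] + sum(aA[k] * pi[iA[k]] for k in range(jA[j], jA[j + 1]))
        if best is None or (cj, j) < best:
            best = (cj, j)
    return best


def entering_variable(aA, iA, jA, c, pi, nonbasic, num_procs):
    """Local multiplications followed by a global minimum reduction
    (simulated sequentially here)."""
    n = len(jA) - 1
    local = [
        local_min_reduced_cost(aA, iA, jA, c, pi,
                               scatter_columns(n, num_procs, rank), nonbasic)
        for rank in range(num_procs)
    ]
    candidates = [v for v in local if v is not None]
    return min(candidates) if candidates else None
```

The work in the inner sum is proportional to the number of stored nonzeros in the owned columns, which is the property the text relies on; only the final `min` over the per-processor results would require communication on a real machine.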

Table 1. Execution Time (in seconds) of the Revised Simplex Algorithm

| Matrix | Size | 1 processor | 2 | 4 | 8 | 16 | 32 |
|---|---|---|---|---|---|---|---|
| SHARE2B | 97 × 79 | 9.02 | 4.96 | 3.66 | 3.44 | 3.32 | 2.58 |
| SC105 | 106 × 103 | 15.6 | 8.58 | 5.73 | 4.46 | 3.01 | 2.90 |
| SC205 | 206 × 203 | 158 | 88.7 | 43.5 | 26.5 | 15.6 | 11.5 |
| BRANDY | 221 × 249 | 278 | 162 | 90.7 | 59.9 | 38.0 | 27.5 |
| BANDM | 306 × 472 | 1330 | 718 | 378 | 209 | 127 | 80.2 |
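The speedups implied by Table 1 can be computed directly from the execution times. The short script below is only a convenience for reading the table; the names `TABLE1`, `PROCS`, `speedups`, and `efficiencies` are introduced here for illustration, and the numbers are copied from Table 1.

```python
PROCS = [1, 2, 4, 8, 16, 32]

# Execution times in seconds, copied from Table 1.
TABLE1 = {
    "SHARE2B": [9.02, 4.96, 3.66, 3.44, 3.32, 2.58],
    "SC105":   [15.6, 8.58, 5.73, 4.46, 3.01, 2.90],
    "SC205":   [158, 88.7, 43.5, 26.5, 15.6, 11.5],
    "BRANDY":  [278, 162, 90.7, 59.9, 38.0, 27.5],
    "BANDM":   [1330, 718, 378, 209, 127, 80.2],
}


def speedups(times):
    """Speedup on p processors = T(1) / T(p)."""
    return [times[0] / t for t in times]


def efficiencies(times):
    """Parallel efficiency = speedup / number of processors."""
    return [s / p for s, p in zip(speedups(times), PROCS)]


if __name__ == "__main__":
    for name, times in TABLE1.items():
        print(f"{name:8s}", " ".join(f"{s:6.2f}" for s in speedups(times)))
```

On these figures the largest problem, BANDM, reaches a speedup of about 16.6 on 32 processors, while the smallest, SHARE2B, reaches about 3.5, so the speedup improves with problem size.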

4 Revised Simplex Algorithm with LU Decomposition

The standard revised simplex algorithm described above is based on constructing the basis inverse matrix $B^{-1}$ and updating the inverse after every iteration. While this standard algorithm has the advantages of simplicity and ease of parallelization, it is not the best one in terms of sparsity and round-off error behavior. This algorithm produces many fill-ins in $B^{-1}$. A large number of fill-ins not only increases the execution time but also occupies too much memory. Consequently, large test data cannot fit in memory. An alternative is the method of Bartels and Golub, which is based on the LU decomposition of the basis matrix $B$ with row exchanges [2]. Compared to the standard algorithm, it differs in the way the following two linear systems are solved:

$\pi^T B = -c_B^T$ and $B Y_s = A_s$

With the original Bartels-Golub algorithm, the LU decomposition must be applied to the newly constructed basis matrix $B$ in each iteration. This operation is computationally more expensive than updating the basis inverse $B^{-1}$ in the standard case. As a compromise, a better implementation takes advantage of the fact that, after $k$ iterations, the newly constructed $B_k$ differs from the preceding $B_{k-1}$ in only one column. We can construct an eta matrix $E$, which differs from the identity matrix in only one column, referred to as its eta column, to satisfy

$B_k = B_{k-1} E_k = B_{k-2} E_{k-1} E_k = \cdots = B_0 E_1 E_2 \cdots E_k$

This eta factorization therefore suggests another way of solving the two linear systems at iteration $k$:

$((((\pi^T B_0) E_1) E_2) \cdots) E_k = -c_B^T$ and $B_0 (E_1 (E_2 (\cdots (E_k Y_s)))) = A_s$


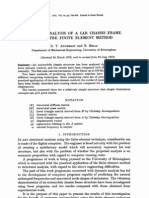

where $B_0$ will be decomposed into $L$ and $U$. The E solver is a special case of a linear system solver, which consists of a sequence of $k$ eta column modifications to the solution vector. Assume we use an array $p$ to record the position of each eta column in its original eta matrix. To solve the original eta matrix system, the $l$th modification can be described as follows:

$E_l (E_{l+1} (\cdots (E_k X))) = Z_{l-1}$ can be modified to $E_{l+1} (\cdots (E_k X)) = Z_l$,

where $z_{l,p(l)} = z_{l-1,p(l)} / e_{l,p(l)}$ and $z_{l,i} = z_{l-1,i} - e_{l,i}\, z_{l,p(l)}$ for $i \ne p(l)$.

After $k$ modifications, $Z_k$ is the resulting vector. However, the number of nonzero elements of $E$ grows with the number of iterations. As $k$ increases, the storage for all the eta matrices becomes large and the E solver becomes slow. Therefore, after a certain number of iterations, say $r$, the basis $B_0$ needs to be refactorized [2]. That is, all the eta matrices are discarded and the current $B_k$ is treated as a new $B_0$ to be decomposed. Such an LU decomposition needs to be conducted every $r$ iterations to keep the time and storage of the E solver within an acceptable range. This improved algorithm is summarized in Fig. 2. In our implementation, $r$ is set to 40.

1. Determine the entering variable by using the optimality condition,

   $c'_s = \min\{c'_j ;\ j = 1, 2, \ldots, n \text{ and } x_j \text{ is nonbasic}\}$, where $c'_j = c_j + \sum_{i=1}^{m} \pi_i a_{ij}$.

   If $c'_s \ge 0$, then the current value is optimal and the algorithm stops.

2. Solve $B_0 (E_1 (E_2 (\cdots (E_k Y_s)))) = A_s$.

   If $y_{is} \le 0$ for $i = 1, 2, \ldots, m$, then the problem is unbounded and the algorithm stops.

3. Determine the leaving variable by using the feasibility condition,

   $\dfrac{b_r}{y_{rs}} = \min\left\{\dfrac{b_i}{y_{is}} ;\ y_{is} > 0 \text{ and } i = 1, 2, \ldots, m\right\}$

4. The basic variable in row $r$ is replaced by variable $x_s$, and the new basis is $B = [A_{j_1}, \ldots, A_{j_{r-1}}, A_s, A_{j_{r+1}}, \ldots, A_{j_m}]$.

5. If $k \ge r$, then treat $B_k$ as the new $B_0$, decompose $B_0$ to get the new matrices $L$ and $U$, and reset $k$ to 1; else store $E_{k+1} = Y_s$ and increment $k$.

6. Solve $((((\pi^T B_0) E_1) E_2) \cdots) E_k = -c_B^T$.

Fig. 2. The Revised Simplex Algorithm with LU Decomposition
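The eta column modifications used by the E solver can be sketched as below. This is a sequential illustration under assumptions: each eta matrix is represented as a hypothetical pair `(p, column)`, where `p` is the position of the eta column and `column` is a dict from row index to nonzero value, and the function names `solve_eta_file` and `apply_eta_file` are introduced only for this example. The solve with $B_0$ itself (the triangular solves with $L$ and $U$) is not shown.

```python
def solve_eta_file(etas, z):
    """Solve E_1 E_2 ... E_k x = z by k eta column modifications.

    Each E_l is the identity except for column p, which holds `column`.
    Modification l sets x[p] = x[p] / e[p], then x[i] -= e[i] * x[p]
    for the other nonzero rows i of the eta column.
    """
    x = list(z)
    for p, column in etas:
        x[p] = x[p] / column[p]
        if x[p] != 0.0:
            for i, e in column.items():
                if i != p:
                    x[i] -= e * x[p]
    return x


def apply_eta_file(etas, x):
    """Compute E_1 E_2 ... E_k x (E_k is applied first); useful as a check."""
    y = list(x)
    for p, column in reversed(etas):
        yp = y[p]
        for i, e in column.items():
            if i != p:
                y[i] += e * yp
        y[p] = column[p] * yp
    return y
```

Each modification depends on the vector produced by the previous one, so the loop over `etas` is inherently sequential; only the few nonzero rows of one eta column are touched per step.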

5 Parallelization of Revised Simplex Algorithm with LU Decomposition

Parallelization of the simplex algorithm with LU decomposition is more difficult. As experiments indicated, the major computation cost is distributed among the matrix multiplication in step 1 of Fig. 2; the linear system solvers and E solvers for $Y_s$ in step 2 and for $\pi$ in step 6, respectively; and the LU decomposition in step 5, if applied. The parallelization of the matrix multiplication in step 1 is the same as in the standard algorithm, as are the storage scheme and the data partitioning strategy.

For the two linear system solvers, the basis matrix $B_0$ serves as the coefficient matrix for $r$ iterations before the next refactorization takes place. We do not expect a good speedup from the triangular solver, because the matrices $L$ and $U$ are very sparse and the computation time spent on the triangular solvers is small compared to the other steps. In consideration of scalability, we distribute the factorized triangular matrices $L$ and $U$ to minimize the overhead of the $2r$ triangular solves. For either $L$ or $U$, partitioning by row is a simple way to reduce overhead. Furthermore, block partitioning is used to increase the grain size between two consecutive communications. Although a scatter partitioning scheme can improve load balance in theory, the large number of communications, proportional to the number of rows $m$, outweighs this potential, especially for sparse matrices. With a block-row partitioning scheme, the total number of communications for each triangular solve is equal to the number of physical processors used, independent of $m$.

It is not practical to parallelize the E solver, because (i) the $l$th eta column modification depends on the results of the $(l-1)$th one; (ii) the modification of each element depends on the value of the diagonal element in its eta column; and (iii) only a small amount of computation is involved in each modification. Because of these heavy data dependences and small grain sizes, executing the E solver in parallel would not be beneficial. It is therefore currently executed sequentially, and all the eta columns are stored on a single physical processor, with two assumptions: (1) if the time spent on this sequential part is too long, we can adjust the frequency of refactorization to keep it under control; and (2) in consideration of scalability, if needed, we can always distribute the $k$ eta columns and have the column modifications executed in turn by each processor.

The basis refactorization is performed every $r$ iterations. A new algorithm was used to factorize the basis matrix efficiently [13]. The idea is to take advantage of the fact that the basis matrix $B$ is extremely sparse and has many column singletons and row singletons. As part of the matrix is phased out by rearranging singletons, the remaining matrix to be factorized may have new singletons. Therefore, the column or row exchange of singletons should be reapplied until no more singletons exist. At that point, the rest of the matrix can be factorized in the regular manner. By the column and row exchanges of singletons, we can reduce the size of the submatrix that is actually factorized and sometimes eliminate the factorization completely. During the exchange of singletons, first, no fill-ins are introduced, since nothing is updated; and second, the search for singletons can easily be conducted in parallel, since without updating there is no dependency.

In general, a simple application algorithm has only a single computation task on a single data domain. For this class of problems, the major data domain should be distributed such that the computation task can be executed most effectively in parallel. LU decomposition, matrix multiplication, triangular solvers, and so on belong to this class. However, a complex application problem, such as the revised simplex method with LU decomposition, consists of many computation steps and many underlying data domains. Usually, these computation steps depend on each other. If we simply distribute the underlying data domains with only the parallelization of individual computation steps in mind, we may end up with a mismatch between two underlying data domains. A global optimum may not be reached simply by using locally optimal data distributions for each step. Sometimes a sub-optimal local data distribution must be used to reduce interface overhead.

There are two major interfaces in this algorithm. The first one is the interface between matrix $A$ and basis $B$. The current new basis is constructed by collecting $m$ columns from matrix $A$. Recall that matrix $A$ has been distributed with scatter-column partitioning.
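To make this first interface concrete, the sketch below counts how many basis columns each processor already holds when the columns of $A$ are scattered. It assumes that "scatter" means a cyclic assignment (column $j$ lives on processor $j \bmod P$) and uses 0-based indices; the function names `owner` and `basis_load` and the sample basis are hypothetical.

```python
from collections import Counter


def owner(column, num_procs):
    """Processor that owns a column of A under cyclic (scatter) partitioning."""
    return column % num_procs


def basis_load(basis_columns, num_procs):
    """Number of basis columns residing on each processor when the basis
    inherits its distribution from A (no redistribution)."""
    counts = Counter(owner(j, num_procs) for j in basis_columns)
    return [counts.get(p, 0) for p in range(num_procs)]


# Hypothetical basis of six columns on four processors.
print(basis_load([0, 4, 8, 5, 12, 3], 4))   # prints [4, 1, 0, 1]
```

Even though $A$ as a whole is evenly scattered, the particular columns that happen to be basic need not be.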


One way to construct matrix $B$ is to extract the columns of the basic variables from $A$ and redistribute them to obtain a locally optimal data distribution. However, data redistribution between different computation steps is beneficial only when it is crucial for the performance of the next step. Here, the refactorization is not expected to achieve a perfect speedup, and the benefit of redistribution is less than its cost. Therefore, we leave the extracted columns on the physical processors where they reside. With the distribution inherited from matrix $A$, the basis matrix $B$ is not necessarily evenly distributed among processors, leading to a sub-optimal data distribution.

The second interface is between basis $B$ and matrices $L$ and $U$ after refactorization. Since $L$ and $U$ are used in the following triangular solvers $2r$ times, it is worthwhile to carry out the redistribution. The unbalanced scatter-column basis $B$ is redistributed to a block-row scheme for $L$ and $U$, which is an optimal scheme for the triangular solver. Without redistribution, matrices $L$ and $U$ would be distributed in a column-scatter scheme and the communication cost in the following triangular solvers would be even worse.

This algorithm is very sparse and therefore difficult to parallelize because of its small granularity. It is hard to obtain a speedup from the triangular solvers and E solvers, and redistribution involves a large communication overhead. The basic strategy used in this implementation is to apply the best possible parallelization techniques to the most computationally dense part, the matrix multiplication, to obtain the maximum speedup. This part takes more than 70% of the total execution time and has a speedup of around 7.5 on 8 processors. The LU decomposition part takes less than 10% of the total time and rarely has a speedup. The triangular solvers and the E solvers take about 10% of the total time, and their parallel execution time increases with the number of processors, dominating the overall performance.

The performance is shown in Table 2. Compared with Table 1, BRANDY and BANDM are executed much faster on a single processor but have almost no speedup. When the number of processors is larger than 8, these two data sets run slower. For large data, such as SHIP08L, SCSD8, and SHIP12L, up to a two-fold speedup can be obtained. More importantly, these data cannot be run with the basic revised simplex algorithm, since they cannot fit in memory.
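A rough estimate shows why the overall speedup stays near two even though the matrix multiplication parallelizes well. Assume, purely for illustration, that the matrix multiplication accounts for exactly 70% of the single-processor time and runs 7.5 times faster on 8 processors, and that the remaining 30% does not speed up at all (neither the exact split nor the "no speedup" simplification is a measurement from this work). The overall speedup $S_8$ on 8 processors is then bounded by

$$
S_8 \approx \frac{1}{(1 - 0.7) + \dfrac{0.7}{7.5}} = \frac{1}{0.3 + 0.0933} \approx 2.5
$$

This is of the same order as the roughly two-fold speedup reported for the largest problems; solver and redistribution overheads that grow with the number of processors would push the measured value below this bound.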

Table 2. Execution Time (in seconds) of the Revised Simplex Algorithm with LU Decomposition

| Matrix | Size | 1 processor | 2 | 4 | 8 |
|---|---|---|---|---|---|
| BRANDY | 221 × 249 | 72.5 | 84.2 | - | - |
| BANDM | 306 × 472 | 371 | 344 | 331 | 342 |
| SHIP08L | 779 × 4283 | 523 | 407 | 329 | 334 |
| SCSD8 | 398 × 2750 | 674 | 590 | 560 | 682 |
| SHIP12L | 1152 × 5427 | 2104 | 1344 | 1076 | 1020 |

6 Conclusions

Two revised simplex algorithms, with and without LU decomposition, have been implemented on Intel iPSC/2 hypercube computers. The test data sets are from netlib. These data sets represent realistic problems in industrial applications, ranging from small-scale to large-scale. The execution time ranges from a few seconds to thousands of seconds. Although the basic revised simplex algorithm is relatively easy to implement, both algorithms need to be carefully tuned to get the best possible performance. We emphasized sophisticated parallelization techniques, such as partitioning patterns, data distribution, communication reduction, and the interfaces between computation steps. Our experience shows that, for a complicated problem, each step of the computation requires a different data distribution. The interfaces between steps affect the choice of data distribution and, consequently, the communication.

In summary, parallelizing sparse simplex algorithms is not easy. The experimental results show that a nearly linear speedup can be obtained with the basic revised simplex algorithm. On the other hand, because of very sparse matrices and very heavy communication, it is hard to achieve a good speedup with the revised simplex algorithm with LU decomposition, even with carefully selected partitioning patterns and communication optimization.

Acknowledgments

The authors wish to thank Yong Li for his contributions to the linear programming algorithms and sequential codes.

References

[1] G. Astfalk, I. Lustig, R. Marsten and D. Shanno, The Interior-Point Method for Linear Programming, IEEE Software, July 1992, pages 61-67.

[2] R. H. Bartels and G. H. Golub, The Simplex Method of Linear Programming Using LU Decomposition, Comm. ACM, 12, pages 266-268, 1969.

[3] G. B. Dantzig, Linear Programming and Extensions, Princeton University Press, New Jersey, 1963.

[4] G. B. Dantzig and W. Orchard-Hays, The Product Form for the Inverse in the Simplex Method, Mathematical Tables and Other Aids to Computation, 8, pages 64-67, 1954.

[5] R. A. Finkel, Large-grain Parallelism: Three Case Studies, in The Characteristics of Parallel Algorithms, L. H. Jamieson, ed., The MIT Press, 1987.

[6] H. F. Ho, G. H. Chen, S. H. Lin, and J. P. Sheu, Solving Linear Programming on Fixed-Size Hypercubes, ICPP'88, pages 112-116.

[7] N. Karmarkar, A New Polynomial-Time Algorithm for Linear Programming, Combinatorica, Vol. 4, No. 4, 1984, pages 373-395.

[8] Y. Li, M. Wu, W. Shu and G. Fox, Linear Programming Algorithms and Parallel Implementations, SCCS Report 288, Syracuse University, May 1992.

[9] D. M. Nicol and J. H. Saltz, An Analysis of Scatter Decomposition, IEEE Trans. Computers, C-39(11):1337-1345, November 1990.

[10] T. Sheu and W. Lin, Mapping Linear Programming Algorithms onto the Butterfly Parallel Processor, 1988.

[11] C. B. Stunkel and D. C. Reed, Hypercube Implementation of the Simplex Algorithm, Proc. 4th Conf. on Hypercube Concurrent Computer and Applications, March 1989.

[12] Y. Wu and T. G. Lewis, Performance of Parallel Simplex Algorithms, Department of Computer Science, Oregon State University, 1988.

[13] M. Wu and Y. Li, Fast LU Decomposition for Sparse Simplex Method, SIAM Conference on Parallel Processing for Scientific Computing, March 1993.


- WLRДокумент4 страницыWLREstira Woro Astrini MartodihardjoОценок пока нет

- Efficient Logarithmic Function ApproximationДокумент5 страницEfficient Logarithmic Function ApproximationInnovative Research PublicationsОценок пока нет

- Content PDFДокумент14 страницContent PDFrobbyyuОценок пока нет

- Comparison of Plate Count Agar and R2A Medium For Enumeration of Heterotrophic Bacteria in Natural Mineral WaterДокумент4 страницыComparison of Plate Count Agar and R2A Medium For Enumeration of Heterotrophic Bacteria in Natural Mineral WaterSurendar KesavanОценок пока нет

- Addis Ababa University Lecture NoteДокумент65 страницAddis Ababa University Lecture NoteTADY TUBE OWNER100% (9)

- Waste Heat BoilerДокумент7 страницWaste Heat Boilerabdul karimОценок пока нет

- Simulation of Inventory System PDFДокумент18 страницSimulation of Inventory System PDFhmsohagОценок пока нет

- Mbeya University of Science and TecnologyДокумент8 страницMbeya University of Science and TecnologyVuluwa GeorgeОценок пока нет

- Tank Top Return Line Filter Pi 5000 Nominal Size 160 1000 According To Din 24550Документ8 страницTank Top Return Line Filter Pi 5000 Nominal Size 160 1000 According To Din 24550Mauricio Ariel H. OrellanaОценок пока нет

- Description: Super Thoroseal Is A Blend of PortlandДокумент2 страницыDescription: Super Thoroseal Is A Blend of Portlandqwerty_conan100% (1)

- The Logistics of Harmonious Co-LivingДокумент73 страницыThe Logistics of Harmonious Co-LivingKripa SriramОценок пока нет

- SThe Electric Double LayerДокумент1 страницаSThe Electric Double LayerDrishty YadavОценок пока нет

- Ens Air To Water Operation ManualДокумент8 страницEns Air To Water Operation ManualcomborОценок пока нет

- Bachelors - Project Report 1Документ43 страницыBachelors - Project Report 1divyaОценок пока нет

- Getting Started in Steady StateДокумент24 страницыGetting Started in Steady StateamitОценок пока нет

- (Checked) 12 Anh 1-8Документ9 страниц(Checked) 12 Anh 1-8Nguyễn Khánh LinhОценок пока нет

- Embargoed: Embargoed Until April 24, 2019 at 12:01 A.M. (Eastern Time)Документ167 страницEmbargoed: Embargoed Until April 24, 2019 at 12:01 A.M. (Eastern Time)Las Vegas Review-JournalОценок пока нет

- Differential Association Theory - Criminology Wiki - FANDOM Powered by WikiaДокумент1 страницаDifferential Association Theory - Criminology Wiki - FANDOM Powered by WikiaMorningstarAsifОценок пока нет

- How To Get Jobs in Neom Saudi Arabia 1703510678Документ6 страницHow To Get Jobs in Neom Saudi Arabia 1703510678Ajith PayyanurОценок пока нет

- Week 14 Report2Документ27 страницWeek 14 Report2Melaku DesalegneОценок пока нет

- Ficha Tecnica Castrol Hyspin AWS RangeДокумент2 страницыFicha Tecnica Castrol Hyspin AWS Rangeel pro jajaja GonzalezОценок пока нет

- Chuck Eesley - Recommended ReadingДокумент7 страницChuck Eesley - Recommended ReadinghaanimasoodОценок пока нет

- A Presentation On Organizational Change ModelДокумент4 страницыA Presentation On Organizational Change ModelSandeepHacksОценок пока нет

- PDF - Gate Valve OS and YДокумент10 страницPDF - Gate Valve OS and YLENINROMEROH4168Оценок пока нет

- Project Scheduling: Marinella A. LosaДокумент12 страницProject Scheduling: Marinella A. LosaMarinella LosaОценок пока нет

- Unit 8 - A Closer Look 2Документ3 страницыUnit 8 - A Closer Look 2Trần Linh TâmОценок пока нет

- McKinsey On Marketing Organizing For CRMДокумент7 страницMcKinsey On Marketing Organizing For CRML'HassaniОценок пока нет