Академический Документы

Профессиональный Документы

Культура Документы

6826 Proposal

Загружено:

Mohamed RizkИсходное описание:

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

6826 Proposal

Загружено:

Mohamed RizkАвторское право:

Доступные форматы

ISP Server Farm

Better Surng Through Caching Jason Goggin, Erik Nordlander, Daniel Loreto, and Adam Oliner April 1, 2004

Introduction

The Internet places heavy demands on servers to provide a continuous, reliable, and ecient service to clients. Servers should be resilient to failures, able to handle very large numbers of requests, and able to answer those requests quickly. A common way to provide these features is by having multiple networked servers share their resources for the benet of all. These servers are usually all housed in a single location and are commonly called a server farm. Of particular interest to us are ISPs: client requests come through ISP routers and must be handled appropriately. The service provider is responsible for maintaining the connection to the Internet, and managing the infrastructure that guarantees fast, reliable access. The ISP can achieve this, in part, through caching. The aim of this project is to provide the following deliverables for an ISP system: 1. A specication for the system. 2. High-level code implementation of the system, emphasizing the tricky and novel parts of the system. 3. The abstraction function and key invariants for the correctness of the code. 4. Proofs of correctness for the code whenever appropriate. Among the dierent aspects we will consider in the design of our system are: Scaling: How easy is it for our system to grow? Can we add new servers easily? How many servers can we support? Initialization: How is the system brought to its starting state? If a server needs to be restarted, how do we initialize it so that it is in agreement with the rest of the system? Partitioning of Data: How will the data in our farm be divided? Is data to be distributed among several servers, or will each server be used for a specic type of data? Which servers will hold what information? Replication for Availability: If there is very important data for which we must guarantee availability, should we replicate it? How and where should we do that? Persistent Caching: Should frequently requested data be cached on multiple servers to increase performance? Where do we place copies of the data in order to avoid bottlenecks and balance the load? System Failures: How can our system fail? How can we recover from failed system components? What are the consequences of a failure? Automation: The system should perform with minimal manual intervention. In particular when it comes to load balancing and handling failures. In the next section, we describe our technical approach to the problem and discuss some basic ideas behind our design.

Technical Approach

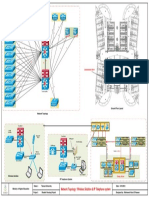

The system can be viewed as consisting of four components: routers, management servers, caching servers, and persistent content servers. This section describes each component. An overview of the system is shown in Figure 1.

2.1

Router Level

The router level is composed of the routers and network connections that bridge between the exterior network and the caching servers. The routers forward incoming requests for data to the appropriate caching servers. Some such requests are sent around the caching servers and directly to the content servers. Their routing tables can be changed dynamically and are controlled by a set of management servers.

2.2

Caching Level

The cache level is the heart of our system. It consists of a number of stateless servers that cache data requested by clients. These servers receive requests from the router level and determine, using time stamps, whether they should return their locally cached copy of the data to the client or retrieve a fresh copy from the server. Multiple caching servers may contain the same data, and some content servers may not have any caching server assigned to them. There are a variety of replacement policies, but one ultimate goal of the strategy should be to minimize a cost function; most likely, this will relate to latency or bandwidth costs. The caching servers will cache data in their persistent storage, as well, because disk latencies may be exceeded by network latencies.

2.3

Content Level

This level consists only of the persistent servers. The job of each of these servers is simply to store the data on a stable medium and serve material back to clients and cache servers. These machines are not necessarily under our control, and some may be web servers across the world. Indeed, those are the kinds of machines for which our system will be most benecial.

2.4

Management Level

The management servers control aspects of the routers and caching servers. The management servers sample requests as they are sent to the routers. The goal of the management servers is to manage routing tables to ensure that the caching servers are not overloaded, and that the appropriate data is cached. Periodically, the management servers update routing tables and instruct the caching servers to move data among themselves. The management server is aware of what caching servers are responsible for what storage servers. Each management server is mirrored by a number of hot backup servers that process all incoming requests alongside the primary server. Therefore, these backups always contain the current state of the system. In the event of a failure, the system can fail over quickly to another backup management server. When a management server is restored, it can copy the current state over from one of the active backups. The system will not be able to function optimally for very long without any management servers, but will still function. In a more geographically disperse system, there can be a number of management servers active at the same time. For example, an ISP with locations in New York and Boston may wish to have cache servers active in each location, which could each be controlled by their own collection of management servers.

Requests from Clients

Routing Tables

Internet

Routers

Files Returned to Clients

Requests for Cached Documents

Requests for Uncached Documents

Stateless Cache Machines

Primary & Back-up Load Balancers

Caching Instructions

Files returned to Clients

Server

Server

Persistent Servers

Server

Server

Server Server

Figure 1: An overview of the network architecture. The system is composed of three main components: the routers, the stateless cache machines, and the load balancers that manage the other two. Requests are made by clients of the ISP. The persistent servers may either be local to the ISP or may be arbitrarily far away. The distant servers are the ones for which caching is most benecial.

Вам также может понравиться

- Educative System Design Basic Part0Документ44 страницыEducative System Design Basic Part0Shivam JaiswalОценок пока нет

- Client-Server ReviewerДокумент7 страницClient-Server ReviewerCristy VegaОценок пока нет

- Configuration and Evaluation of Some Microsoft and Linux Proxy Servers, Security, Intrusion Detection, AntiVirus and AntiSpam ToolsОт EverandConfiguration and Evaluation of Some Microsoft and Linux Proxy Servers, Security, Intrusion Detection, AntiVirus and AntiSpam ToolsОценок пока нет

- Identify and Resolve Database Performance ProblemsДокумент18 страницIdentify and Resolve Database Performance Problemsaa wantsОценок пока нет

- SAS Programming Guidelines Interview Questions You'll Most Likely Be AskedОт EverandSAS Programming Guidelines Interview Questions You'll Most Likely Be AskedОценок пока нет

- Client-Server ReviewerДокумент7 страницClient-Server ReviewerCristy VegaОценок пока нет

- MC - Unit 5 - 31-5-2022 - FinalДокумент28 страницMC - Unit 5 - 31-5-2022 - FinalselvaperumalvijayalОценок пока нет

- Distributed Operating Systems: By: Malik AbdulrehmanДокумент27 страницDistributed Operating Systems: By: Malik AbdulrehmanMalikAbdulrehmanОценок пока нет

- What Is Hadoop Zookeeper?: Big Data Assignment Name - Vedang Kulkarni Roll No. - DS5A-1942Документ3 страницыWhat Is Hadoop Zookeeper?: Big Data Assignment Name - Vedang Kulkarni Roll No. - DS5A-1942Vedang KulkarniОценок пока нет

- Network Operating SystemДокумент7 страницNetwork Operating SystemMuñoz, Jian Jayne O.Оценок пока нет

- BCA 2nd YearДокумент31 страницаBCA 2nd YearAnas BaigОценок пока нет

- Distributed File Systems: Unit - V Essay QuestionsДокумент10 страницDistributed File Systems: Unit - V Essay Questionsdinesh9866119219Оценок пока нет

- Server Maintenance A Practical Guide - Hypertec SPДокумент2 страницыServer Maintenance A Practical Guide - Hypertec SPNnah InyangОценок пока нет

- Assg Distributed SystemsДокумент20 страницAssg Distributed SystemsDiriba SaboОценок пока нет

- Adbms: Concepts and Architectures: Unit IДокумент41 страницаAdbms: Concepts and Architectures: Unit Iabhishek920124nairОценок пока нет

- Unit V-MCДокумент20 страницUnit V-MCashmi StalinОценок пока нет

- System Design BasicsДокумент33 страницыSystem Design BasicsSasank TalasilaОценок пока нет

- Effect of Database Server Arrangement To The Performance of Load Balancing SystemsДокумент10 страницEffect of Database Server Arrangement To The Performance of Load Balancing SystemsRamana KadiyalaОценок пока нет

- Client Server and Multimedia NotesДокумент71 страницаClient Server and Multimedia NotesJesse KamauОценок пока нет

- 16 System Design Concepts I Wish I Knew Before The InterviewДокумент18 страниц16 System Design Concepts I Wish I Knew Before The InterviewZrako PrcОценок пока нет

- It4128 AbordoДокумент5 страницIt4128 AbordoJunvy AbordoОценок пока нет

- Unit 1 MergedДокумент288 страницUnit 1 MergedNirma VirusОценок пока нет

- CPT FullДокумент153 страницыCPT FullNirma VirusОценок пока нет

- 5 Common Server Setups For Your Web Application - DigitalOceanДокумент12 страниц5 Common Server Setups For Your Web Application - DigitalOceanNel CaratingОценок пока нет

- Network ArchitectureДокумент7 страницNetwork Architecturehadirehman488Оценок пока нет

- Cloud Computing Unit - Ii: Mohammed Sharfuddin, Assistant Professor, Csed, MjcetДокумент61 страницаCloud Computing Unit - Ii: Mohammed Sharfuddin, Assistant Professor, Csed, MjcetMohammed Abdul BariОценок пока нет

- Advantages of Client Server ComputerДокумент1 страницаAdvantages of Client Server ComputerRavi GajenthranОценок пока нет

- Virtualization and Cloud Computing-U3Документ8 страницVirtualization and Cloud Computing-U3Prabhash JenaОценок пока нет

- Module 1Документ34 страницыModule 1vani_V_prakashОценок пока нет

- Internet-Based Services: Load Balancing Is AДокумент7 страницInternet-Based Services: Load Balancing Is ASalman KhanОценок пока нет

- Mobile Computing - Unit 5Документ20 страницMobile Computing - Unit 5Sekar Dinesh50% (2)

- Unit 5 Database HoardingДокумент63 страницыUnit 5 Database Hoarding126 Kavya sriОценок пока нет

- Load Balancing On Multimedia Servers Karunya UniversityДокумент5 страницLoad Balancing On Multimedia Servers Karunya UniversityMangesh MandhareОценок пока нет

- The Athena Service Management System: Mark A. Rosenstein Daniel E. Geer, Jr. Peter J. LevineДокумент11 страницThe Athena Service Management System: Mark A. Rosenstein Daniel E. Geer, Jr. Peter J. LevineClarancè AnthònyОценок пока нет

- Part 2Документ7 страницPart 2Gihan GunasekeraОценок пока нет

- Realization of Web Hotspot Rescue in A Distributed System: Debashree DeviДокумент8 страницRealization of Web Hotspot Rescue in A Distributed System: Debashree DeviInternational Organization of Scientific Research (IOSR)Оценок пока нет

- Unit 1.3Документ28 страницUnit 1.3Erika CarilloОценок пока нет

- SAP Web DispatcherДокумент10 страницSAP Web DispatcherravimbasiОценок пока нет

- Database Issues in Mobile ComputingДокумент20 страницDatabase Issues in Mobile ComputingMukesh90% (51)

- VII. Database System ArchitectureДокумент15 страницVII. Database System ArchitectureNandan KumarОценок пока нет

- The System DesignДокумент135 страницThe System DesignaeifОценок пока нет

- Mobile Computing Unit 3/B: Database IssuesДокумент20 страницMobile Computing Unit 3/B: Database IssuesKenya DavisОценок пока нет

- Distributed Database Management Notes - 1Документ21 страницаDistributed Database Management Notes - 1Saurav Kataruka100% (11)

- Ebook - Cracking The System Design Interview CourseДокумент91 страницаEbook - Cracking The System Design Interview Coursesharad1987Оценок пока нет

- CC Unit 3Документ28 страницCC Unit 3Bharath KumarОценок пока нет

- 9-Database System ArchitectureДокумент37 страниц9-Database System ArchitectureAdwaithAdwaithDОценок пока нет

- Chapter 1 The ArenaДокумент45 страницChapter 1 The ArenaRaven Laurdain PasiaОценок пока нет

- Whiteboard Summary On Data Processing For Ss2, Week 3 and 4 Distributed and Parallel Database SystemsДокумент7 страницWhiteboard Summary On Data Processing For Ss2, Week 3 and 4 Distributed and Parallel Database SystemsKelvin NTAHОценок пока нет

- Answer:: I/O CPU CPU CPU I/OДокумент8 страницAnswer:: I/O CPU CPU CPU I/OEleunamme ZxОценок пока нет

- Microsoft Azure DevOps Solutions AZ 400 Practice Exam 9 Py2tzwДокумент9 страницMicrosoft Azure DevOps Solutions AZ 400 Practice Exam 9 Py2tzwkkeyn688Оценок пока нет

- 9 - The Database Design Part-5Документ15 страниц9 - The Database Design Part-5ay9993805Оценок пока нет

- AWS Questions - TomiДокумент2 страницыAWS Questions - TomiTalha BajwaОценок пока нет

- Unit V-B PDFДокумент20 страницUnit V-B PDFRajesh RajОценок пока нет

- Definition:: Single TierДокумент33 страницыDefinition:: Single TierPriy JoОценок пока нет

- Client ServerДокумент3 страницыClient ServerSubramonia Muthu Perumal PillaiОценок пока нет

- Security in Cloud Computing Project ExДокумент6 страницSecurity in Cloud Computing Project Exthen12345Оценок пока нет

- HW 1Документ21 страницаHW 1owen0928092110Оценок пока нет

- Proposed Architecture On - A Hybrid Approach For Load Balancing With Respect To Time in Content Delivery NetworkДокумент3 страницыProposed Architecture On - A Hybrid Approach For Load Balancing With Respect To Time in Content Delivery NetworkInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- DI AUDIO Datasheet - X NPMIEN54 - EN1 2 PDFДокумент2 страницыDI AUDIO Datasheet - X NPMIEN54 - EN1 2 PDFMohamed RizkОценок пока нет

- Installation Manual: Inrow™ Chilled Water Air Conditioners Inrow™ RC Acrc301S, Acrc301HДокумент66 страницInstallation Manual: Inrow™ Chilled Water Air Conditioners Inrow™ RC Acrc301S, Acrc301HMohamed RizkОценок пока нет

- AXIS T98A-VE Media Converter Cabinet A Series: Installation GuideДокумент142 страницыAXIS T98A-VE Media Converter Cabinet A Series: Installation GuideMohamed RizkОценок пока нет

- SDC Catalog 6 Remote ControlsДокумент16 страницSDC Catalog 6 Remote ControlsMohamed RizkОценок пока нет

- Fiber Optic Cable /MM 6 CoreДокумент1 страницаFiber Optic Cable /MM 6 CoreMohamed RizkОценок пока нет

- Audio Visual Supply and System Design Integration and Maintenance (AVI2003NE)Документ4 страницыAudio Visual Supply and System Design Integration and Maintenance (AVI2003NE)Mohamed RizkОценок пока нет

- Network Topology / Wireless Solution & IP Telephone System: Ground Floor LayoutДокумент1 страницаNetwork Topology / Wireless Solution & IP Telephone System: Ground Floor LayoutMohamed RizkОценок пока нет

- ASTM - A 27 - Standard Specification For Steel Castings, Carb PDFДокумент4 страницыASTM - A 27 - Standard Specification For Steel Castings, Carb PDFMohamed RizkОценок пока нет

- Selectable Output Horns, Strobes, and Horn/StrobesДокумент4 страницыSelectable Output Horns, Strobes, and Horn/StrobesMohamed RizkОценок пока нет

- FM-200 Tank and Valve Assembly: FM-200 Fire Suppression System Data SheetДокумент1 страницаFM-200 Tank and Valve Assembly: FM-200 Fire Suppression System Data SheetMohamed RizkОценок пока нет

- FM-200 Tank and Valve Assembly: FM-200 Fire Suppression System Data SheetДокумент1 страницаFM-200 Tank and Valve Assembly: FM-200 Fire Suppression System Data SheetMohamed RizkОценок пока нет

- ASTM - A 888 - Standard Specification For Hubless Cast Iron S PDFДокумент51 страницаASTM - A 888 - Standard Specification For Hubless Cast Iron S PDFMohamed Rizk100% (1)

- MTOCO001008Документ8 страницMTOCO001008Mohamed Rizk100% (1)

- Aknan PDFДокумент38 страницAknan PDFMohamed RizkОценок пока нет

- Saes L 110Документ1 страницаSaes L 110Mohamed RizkОценок пока нет

- Material Take-Off List: Excavation: Excavation in Dense SoilДокумент1 страницаMaterial Take-Off List: Excavation: Excavation in Dense SoilMohamed RizkОценок пока нет

- Water Solutions Up Stream Portfolio OldДокумент35 страницWater Solutions Up Stream Portfolio OldMohamed Rizk100% (1)

- BrandДокумент4 страницыBrandMohamed RizkОценок пока нет

- Material Take-Off List: PFE-1: 10 Lbs. HAND TYPE FireДокумент2 страницыMaterial Take-Off List: PFE-1: 10 Lbs. HAND TYPE FireMohamed RizkОценок пока нет

- DONIT IG Brosura NOVO PDFДокумент36 страницDONIT IG Brosura NOVO PDFMohamed RizkОценок пока нет

- IBM - SDLC Using DLSWДокумент3 страницыIBM - SDLC Using DLSWmana1345Оценок пока нет

- Mcqs All ComputerДокумент88 страницMcqs All ComputerQamar NangrajОценок пока нет

- CCNA 2 v6.0 Final Exam Answers 2018 - Routing & Switching Essentials-16-22 PDFДокумент7 страницCCNA 2 v6.0 Final Exam Answers 2018 - Routing & Switching Essentials-16-22 PDFMario ZalacОценок пока нет

- FortiGate-60 Administration GuideДокумент354 страницыFortiGate-60 Administration Guidelarry13804100% (3)

- Cisco - Certforall.300 415.PDF.2022 Sep 22.by - Jerome.88q.vceДокумент7 страницCisco - Certforall.300 415.PDF.2022 Sep 22.by - Jerome.88q.vcejulioОценок пока нет

- Chapter 1-Networking PDFДокумент58 страницChapter 1-Networking PDFVinod Srivastava100% (1)

- 6600 DatasheetДокумент2 страницы6600 Datasheetkbitsik33% (3)

- CV KhaleduzzamanДокумент4 страницыCV KhaleduzzamanMd KhaleduzzamanОценок пока нет

- Mini-Link E: Traffic Node Concept TechnicalДокумент60 страницMini-Link E: Traffic Node Concept Technicaldendani anfelОценок пока нет

- W4 Network Access - PresentationДокумент57 страницW4 Network Access - PresentationNaih イ ۦۦОценок пока нет

- DIR-615 X1 User-Manual 3.0.7 26.02.21 ENДокумент176 страницDIR-615 X1 User-Manual 3.0.7 26.02.21 ENZain MirzaОценок пока нет

- Pg-Diploma in Cyber Security PDFДокумент15 страницPg-Diploma in Cyber Security PDFKang DedeОценок пока нет

- Delivery and Routing of IP Packets1 (Classless)Документ28 страницDelivery and Routing of IP Packets1 (Classless)mustafa kerramОценок пока нет

- Ryo Brill It - 2204: Lab - Implement Dhcpv4Документ6 страницRyo Brill It - 2204: Lab - Implement Dhcpv4jfoexamОценок пока нет

- Ba Tim1531 76Документ168 страницBa Tim1531 76canОценок пока нет

- TD-W8968 V3 Datasheet PDFДокумент4 страницыTD-W8968 V3 Datasheet PDFSanjeev VermaОценок пока нет

- Connecting Devices: 1. RepeaterДокумент5 страницConnecting Devices: 1. RepeaterAadhya JyothiradityaaОценок пока нет

- Switch Abstraction Interface OCP Specification v0.2Документ8 страницSwitch Abstraction Interface OCP Specification v0.2vinujkatОценок пока нет

- Midlands State University: Faculty of CommerceДокумент5 страницMidlands State University: Faculty of CommerceStar69 Stay schemin2Оценок пока нет

- 4 - Spedge 1.0 - Lab GuideДокумент112 страниц4 - Spedge 1.0 - Lab GuideannajugaОценок пока нет

- Ccna NotesДокумент34 страницыCcna NotesQuarantine 2.0Оценок пока нет

- Evaluación de Habilidades Prácticas de PTДокумент18 страницEvaluación de Habilidades Prácticas de PTalvarofst100% (1)

- 1.2.1.7 Packet Tracer - Comparing 2960 and 3560 Switches InstructionsДокумент3 страницы1.2.1.7 Packet Tracer - Comparing 2960 and 3560 Switches InstructionsRoy Janssen100% (3)

- Cisco Ucertify 350-401 Exam Prep 2022-Aug-28 by Paddy 378q VceДокумент30 страницCisco Ucertify 350-401 Exam Prep 2022-Aug-28 by Paddy 378q VceMelroyОценок пока нет

- Chapter 7: Ripv2: Ccna Exploration Version 4.0 Ccna Exploration Version 4.0Документ60 страницChapter 7: Ripv2: Ccna Exploration Version 4.0 Ccna Exploration Version 4.0http://heiserz.com/Оценок пока нет

- Csc261 CCN Lab ManualДокумент125 страницCsc261 CCN Lab ManualasadhppyОценок пока нет

- CCNA Discovery 1 - FINAL Exam AnswersДокумент9 страницCCNA Discovery 1 - FINAL Exam AnswersUdesh FernandoОценок пока нет

- Network Design Proposal For 5 Star ResortДокумент5 страницNetwork Design Proposal For 5 Star Resortgsain1Оценок пока нет

- TL-WR841N ManualДокумент121 страницаTL-WR841N ManualMaftei David RaresОценок пока нет

- Chillifire Hotspot Router Installation Guide Mikrotik PDFДокумент11 страницChillifire Hotspot Router Installation Guide Mikrotik PDFPaul MoutonОценок пока нет

- Python for Beginners: A Crash Course Guide to Learn Python in 1 WeekОт EverandPython for Beginners: A Crash Course Guide to Learn Python in 1 WeekРейтинг: 4.5 из 5 звезд4.5/5 (7)

- Excel 2023 for Beginners: A Complete Quick Reference Guide from Beginner to Advanced with Simple Tips and Tricks to Master All Essential Fundamentals, Formulas, Functions, Charts, Tools, & ShortcutsОт EverandExcel 2023 for Beginners: A Complete Quick Reference Guide from Beginner to Advanced with Simple Tips and Tricks to Master All Essential Fundamentals, Formulas, Functions, Charts, Tools, & ShortcutsОценок пока нет

- ChatGPT Side Hustles 2024 - Unlock the Digital Goldmine and Get AI Working for You Fast with More Than 85 Side Hustle Ideas to Boost Passive Income, Create New Cash Flow, and Get Ahead of the CurveОт EverandChatGPT Side Hustles 2024 - Unlock the Digital Goldmine and Get AI Working for You Fast with More Than 85 Side Hustle Ideas to Boost Passive Income, Create New Cash Flow, and Get Ahead of the CurveОценок пока нет

- ChatGPT Millionaire 2024 - Bot-Driven Side Hustles, Prompt Engineering Shortcut Secrets, and Automated Income Streams that Print Money While You Sleep. The Ultimate Beginner’s Guide for AI BusinessОт EverandChatGPT Millionaire 2024 - Bot-Driven Side Hustles, Prompt Engineering Shortcut Secrets, and Automated Income Streams that Print Money While You Sleep. The Ultimate Beginner’s Guide for AI BusinessОценок пока нет

- Fundamentals of Data Engineering: Plan and Build Robust Data SystemsОт EverandFundamentals of Data Engineering: Plan and Build Robust Data SystemsРейтинг: 5 из 5 звезд5/5 (1)

- Grokking Algorithms: An illustrated guide for programmers and other curious peopleОт EverandGrokking Algorithms: An illustrated guide for programmers and other curious peopleРейтинг: 4 из 5 звезд4/5 (16)

- Design and Build Modern Datacentres, A to Z practical guideОт EverandDesign and Build Modern Datacentres, A to Z practical guideРейтинг: 3 из 5 звезд3/5 (2)

- Optimizing DAX: Improving DAX performance in Microsoft Power BI and Analysis ServicesОт EverandOptimizing DAX: Improving DAX performance in Microsoft Power BI and Analysis ServicesОценок пока нет

- Microsoft Outlook Guide to Success: Learn Smart Email Practices and Calendar Management for a Smooth Workflow [II EDITION]От EverandMicrosoft Outlook Guide to Success: Learn Smart Email Practices and Calendar Management for a Smooth Workflow [II EDITION]Рейтинг: 5 из 5 звезд5/5 (3)

- Blockchain Basics: A Non-Technical Introduction in 25 StepsОт EverandBlockchain Basics: A Non-Technical Introduction in 25 StepsРейтинг: 4.5 из 5 звезд4.5/5 (24)

- Computer Science Beginners Crash Course: Coding Data, Python, Algorithms & HackingОт EverandComputer Science Beginners Crash Course: Coding Data, Python, Algorithms & HackingРейтинг: 3.5 из 5 звезд3.5/5 (12)

- Microsoft Word Guide for Success: From Basics to Brilliance in Achieving Faster and Smarter Results [II EDITION]От EverandMicrosoft Word Guide for Success: From Basics to Brilliance in Achieving Faster and Smarter Results [II EDITION]Рейтинг: 5 из 5 звезд5/5 (3)

- Microsoft Teams Guide for Success: Mastering Communication, Collaboration, and Virtual Meetings with Colleagues & ClientsОт EverandMicrosoft Teams Guide for Success: Mastering Communication, Collaboration, and Virtual Meetings with Colleagues & ClientsРейтинг: 5 из 5 звезд5/5 (3)

- Microsoft PowerPoint Guide for Success: Learn in a Guided Way to Create, Edit & Format Your Presentations Documents to Visual Explain Your Projects & Surprise Your Bosses And Colleagues | Big Four Consulting Firms MethodОт EverandMicrosoft PowerPoint Guide for Success: Learn in a Guided Way to Create, Edit & Format Your Presentations Documents to Visual Explain Your Projects & Surprise Your Bosses And Colleagues | Big Four Consulting Firms MethodРейтинг: 5 из 5 звезд5/5 (3)

- Microsoft Excel Guide for Success: Transform Your Work with Microsoft Excel, Unleash Formulas, Functions, and Charts to Optimize Tasks and Surpass Expectations [II EDITION]От EverandMicrosoft Excel Guide for Success: Transform Your Work with Microsoft Excel, Unleash Formulas, Functions, and Charts to Optimize Tasks and Surpass Expectations [II EDITION]Рейтинг: 5 из 5 звезд5/5 (2)

- Learn Power BI: A beginner's guide to developing interactive business intelligence solutions using Microsoft Power BIОт EverandLearn Power BI: A beginner's guide to developing interactive business intelligence solutions using Microsoft Power BIРейтинг: 5 из 5 звезд5/5 (1)

- Artificial Intelligence in Practice: How 50 Successful Companies Used AI and Machine Learning to Solve ProblemsОт EverandArtificial Intelligence in Practice: How 50 Successful Companies Used AI and Machine Learning to Solve ProblemsРейтинг: 4.5 из 5 звезд4.5/5 (38)

- Excel Dynamic Arrays Straight to the Point 2nd EditionОт EverandExcel Dynamic Arrays Straight to the Point 2nd EditionРейтинг: 5 из 5 звезд5/5 (1)

![Microsoft Outlook Guide to Success: Learn Smart Email Practices and Calendar Management for a Smooth Workflow [II EDITION]](https://imgv2-2-f.scribdassets.com/img/audiobook_square_badge/728320983/198x198/d544db3174/1715163095?v=1)

![Microsoft Word Guide for Success: From Basics to Brilliance in Achieving Faster and Smarter Results [II EDITION]](https://imgv2-2-f.scribdassets.com/img/audiobook_square_badge/728320756/198x198/6f19793d5e/1715167141?v=1)

![Microsoft Excel Guide for Success: Transform Your Work with Microsoft Excel, Unleash Formulas, Functions, and Charts to Optimize Tasks and Surpass Expectations [II EDITION]](https://imgv2-1-f.scribdassets.com/img/audiobook_square_badge/728318885/198x198/86d097382f/1714821849?v=1)