Академический Документы

Профессиональный Документы

Культура Документы

TF Idf Algorithm

Загружено:

Sharat DasikaИсходное описание:

Оригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

TF Idf Algorithm

Загружено:

Sharat DasikaАвторское право:

Доступные форматы

Using TF-IDF to Determine Word Relevance in Document Queries

Juan Ramos JURAMOS@EDEN.RUTGERS.EDU Department of Computer Science, Rutgers University, 23515 BPO Way, Piscataway, NJ, 08855

Abstract

In this paper, we examine the results of applying Term Frequency Inverse Document Frequency (TF-IDF) to determine what words in a corpus of documents might be more favorable to use in a query. As the term implies, TF-IDF calculates values for each word in a document through an inverse proportion of the frequency of the word in a particular document to the percentage of documents the word appears in. Words with high TF-IDF numbers imply a strong relationship with the document they appear in, suggesting that if that word were to appear in a query, the document could be of interest to the user. We provide evidence that this simple algorithm efficiently categorizes relevant words that can enhance query retrieval.

return a subset D* of D such that for each d D*, we maximize the following probability: P(d | q, D) (1) (Berger & Lafferty, 1999). As the above notation suggests, numerous approaches to this problem involve probability and statistics, while others propose vectorbased models to enhance the retrieval. 1.2 Algorithms for Ad-Hoc Retrieval Let us briefly examine other approaches used for responding to queries. Intuitively, given the formal notation we present for the problem, the use of statistical methods has proven both popular and efficient in responding to the problem. (Berger & Lafferty, 1999) for example, propose a probabilistic framework that incorporates the users mindset at the time the query was entered to enhance their approximations. They suggest that the user has a specific information need G, which is approximated as a sequence of words q in the actual query. By accounting for this noisy transformation of G into q and applying Bayes Law to equation (1), they show good results on returning appropriate documents given q. Vector-based methods for performing query retrieval also show good promise. (Berry, Dumais & OBrien, 1994) suggest performing query retrieval using a popular matrix algorithm called Latent Semantic Indexing (LSI). In essence, the algorithm creates a reduced-dimensional vector space that captures an n-dimensional representation of a set of documents. When a query is entered, its numerical representation is compared the cosine-distance of other documents in the document space, and the algorithm returns documents where this distance is small. The authors experimental results show that this algorithm is highly effective in query retrieval, even when the problem entails performing information retrieval over documents written in different languages (Littman & Keim 1997). If certain criteria are met, they suggest that the LSI approach can be extended to more than two languages. The procedure we examine with more detail is Term Frequency Inverse Document Frequency (TF-IDF). This weighing scheme can be categorized as a statistical

1. Introduction

Before proceeding in depth into our experiments, it is useful to describe the nature of the query retrieval problem for a corpus of documents and the different approaches used to solve it, including TF-IDF. 1.1 Query Retrieval Problem The task of retrieving data from a user-defined query has become so common and natural in recent years that some might not give it a second thought. However, this growing use of query retrieval warrants continued research and enhancements to generate better solutions to the problem. Informally, query retrieval can be described as the task of searching a collection of data, be that text documents, databases, networks, etc., for specific instances of that data. First, we will limit ourselves to searching a collection of English documents. The refined problem then becomes the task of searching this corpus for documents that the query retrieval system considers relevant to what the user entered as the query. Let us describe this problem more formally. We have a set of documents D, with the user entering a query q = w1, w2, , wn for a sequence of words wi . Then we wish to

procedure, though its immediate results are deterministic in nature. Though TF-IDF is a relatively old weighing scheme, it is simple and effective, making it a popular starting point for other, more recent algorithms (Salton & Buckley, 1988). In this paper, we will examine the behavior of TF-IDF over a set of English documents from the LDCs United Nations Parallel Text Corpus. The purpose of this paper is to examine the behavior, strengths, and weaknesses of TF-IDF as a starting point for future algorithms.

pronouns, and prepositions, which by themselves hold no relevant meaning in a query (unless the user explicitly wants documents containing such common words). Such common words thus receive a very low TF-IDF score, rendering them essentially negligible in the search. Finally, suppose f w, d is large and fw, D is small. Then log (|D|/ fw, D) will be rather large, and so wd will likewise be large. This is the case were most interested in, since words with high wd imply that w is an important word in d but not common in D. This w term is said to have a large discriminatory power. Therefore, when a query contains this w, returning a document d where wd is large will very likely satisfy the user. 2.2 Encoding TF-IDF The code for TF-IDF is elegant in its simplicity. Given a query q composed of a set of words wi , we calculate wi, d for each wi for every document d D. In the simplest way, this can be done by running through the document collection and keeping a running sum of f w, d and fw, D. Once done, we can easily calculate wi d according to the mathematical framework presented before. Once all wi, ds are found, we return a set D* containing documents d such that we maximize the following equation: i wi, d (3). Either the user or the system can arbitrarily determine the size of D* prior to initiating the query. Also, documents are returned in a decreasing order according to equation (3). This is the traditional method of implementing TF-IDF. We will discuss extensions of this algorithm in later sections, along with an analysis of TF-IDF according to our own results.

2. An Overview of TF-IDF

We will now examine the structure and implementation of TF-IDF for a set of documents. We will first introduce the mathematical background of the algorithm and examine its behavior relative to each variable. We then present the algorithm as we implemented it. 2.1 Mathematical Framework We will give a quick informal explanation of TF-IDF before proceeding. Essentially, TF-IDF works by determining the relative frequency of words in a specific document compared to the inverse proportion of that word over the entire document corpus. Intuitively, this calculation determines how relevant a given word is in a particular document. Words that are common in a single or a small group of documents tend to have higher TFIDF numbers than common words such as articles and prepositions. The formal procedure for implementing TF-IDF has some minor differences over all its applications, but the overall approach works as follows. Given a document collection D, a word w, and an individual document d D, we calculate wd = fw, d * log (|D|/fw, D) (2), where fw, d equals the number of times w appears in d, |D| is the size of the corpus, and fw, D equals the number of documents in which w appears in D (Salton & Buckley, 1988, Berger, et al, 2000). There are a few different situation that can occur here for each word, depending on the values of f w, d, |D|, and fw, D, the most prominent of which well examine. Assume that |D| ~ fw, D, i.e. the size of the corpus is approximately equal to the frequency of w over D. If 1 < log (|D|/ fw, D) < c for some very small constant c, then wd will be smaller than fw, d but still positive. This implies that w is relatively common over the entire corpus but still holds some importance throughout D. For example, this could be the case if TF-IDF would examine the word Jesus over the New Testament. More relevant to us, this result would be expected of the word United in the corpus of United Nations documents. This is also the case for extremely common words such as articles,

3. Experiment

3.1 Data Collection and Formatting We tested our TF-IDF implementation on a collection of 1400 documents from the LDCs United Nations Parallel Text Corpus. These documents were gathered arbitrarily from a larger collection of documents from the UNs 1988 database. The documents were encoded with the SGML text format, so we decided to leave in the formatting tags to account for noisy data and to test the robustness of TF-IDF. We simulated more noise by enforcing case-sensitivity. Due to certain constraints, we had to limit the number of queries used to perform information retrieval on to 86. We calculate TF-IDF weights for these queries according to equation (3), and then return the first 100 documents that maximize equation (3). The returned documents were returned in descending order, with documents with higher weight sums appearing first. To compare our results, we also performed in parallel the brute force (and rather nave)

method of performing query retrieval based only on the term fw, d. Naturally, this latter method is intuitively flawed for the larger problem of query retrieval, since this approach would simply return documents where nonrelevant words appear most (i.e. long documents with plenty of articles and prepositions that might not have any relevance to the query). We will provide evidence that TF-IDF, though relatively simple, is a big improvement over this nave approach. 3.2 Experimental Results Return Pos. 1 2 3 4 5 6 7 8 Document # 64 879 1037 324 710 161 1175 402 Sum fw, d 139 136 121 107 98 93 87 86 Sum wd 1.83 4.52 2.08 0.91 7.22 3.95 0.24 5.13

Let us now consider the results from running TF-IDF on our data. In this case, documents at the top of the list have a high sum of wd, so a query containing w would likely receive document d as a return value. Return Pos. 1 2 3 4 5 6 7 8 Document # 788 426 881 253 1007 362 520 23 Sum fw, d 24 72 56 43 37 29 33 58 Sum wd 28.09 26.73 23.96 19.16 16.5 15.42 12.34 10.79

Table 2. First eight documents with highest wd returned by TFIDF for query = the trafficking of drugs in Colombia. The top document, entitled International Campaign Against Traffic in Drugs, is intuitively relevant to the query.

Table 1. First eight documents with highest fw, d returned by our nave algorithm for query = the trafficking of drugs in Colombia. The high fw, d comes mostly from long documents with plenty articles and prepositions. These documents had very low wd scores and are mostly useless to the query.

As Table 2 shows, retrieval with TF-IDF returned documents highly correlate to the given query. The top two documents make frequent use of the non-article words in the query; words that are not that frequent in other documents. This gives a high sum of wd, which in turn gives a high relevance to the document. Figure 2 shows this continuing pattern for our data.

Relevancet to Query

As expected, the nave, brute force approach of simply returning documents with high sum of fw, d given each query word was very inaccurate. Of the top eight documents shown in Table 1, none are relevant to the given query. This pattern is evident throughout the whole list of 344 words, which is summarized in Figure 1.

Naive Query Retrieval

12 10 8 6 4 2 0 1 6 11 16 21 26 31 36 41 46 51 56 61 66 71 76 81 86 91 -2 96

Query Results with TF-IDF

120 100 80 60 40 20 0 1 7 13 19 25 31 37 43 49 55 61 67 73 79 85 91

Relevance to Query

Documents

Documents

Figure 1. Results of running nave query retrieval on our data. Notice the algorithm does not consider wd, but rather returns documents based solely on fw,d. Relevant documents are scattered sporadically, so simply returning the top documents as done by this algorithm returns irrelevant documents.

Figure 2. Results of retrieval with TF-IDF on our data. High values of wd are concentrated on the beginning of the graph, so basing query retrieval on the top words here will likely return relevant documents. The two extra graphs indicate upper and lower bounds found by the retrieval engine.

Clearly, TF-IDF is much more powerful than its nave counterpart. When examining the words in the query, we see that TF-IDF can find documents that make frequent

97

use of said words and determine if they are relevant in the document. The discriminatory power of TF-IDF allows the retrieval engine to quickly find relevant documents that are likely to satisfy the user.

4. Conclusions

4.1 Advantages and Limitations We have seen that TF-IDF is an efficient and simple algorithm for matching words in a query to documents that are relevant to that query. From the data collected, we see that TF-IDF returns documents that are highly relevant to a particular query. If a user were to input a query for a particular topic, TF-IDF can find documents that contain relevant information on the query. Furthermore, encoding TF-IDF is straightforward, making it ideal for forming the basis for more complicated algorithms and query retrieval systems (Berger et al, 2000). Despite its strength, TF-IDF has its limitations. In terms of synonyms, notice that TF-IDF does not make the jump to the relationship between words. Going back to (Berger & Lafferty, 1999), if the user wanted to find information about, say, the word priest, TF-IDF would not consider documents that might be relevant to the query but instead use the word reverend. In our own experiment, TF-IDF could not equate the word drug with its plural drugs, categorizing each instead as separate words and slightly decreasing the words wd value. For large document collections, this could present an escalating problem. 4.2 Further Research Since TF-IDF is merely a staple benchmark, numerous algorithms have surfaced that take the program to the next level. (Berger et al, 2000) propose a number of these in a single paper, including a version of TF-IDF that they call Adaptive TF-IDF. This algorithm incorporates hillclimbing and gradient descent to enhance performance. They also propose an algorithm for performing TF-IDF in a cross-language retrieval setting by applying statistical translation to the benchmark TFIDF. Genetic algorithms have also been used to evolve programs that can match or beat TF-IDF schemes. (Oren, 2002) employs this method to evolve a large colony of individuals. Using the main ideas of genetic programming, mutation, crossover and copying, the author of the paper was able to evolve programs that performed slightly better than the common TF-IDF weighing scheme. Though the author felt the results were not considered significant, the paper shows that there is still interest in enhancing the simple TF-IDF scheme. Examining our data, the easiest way for us to enhance TFIDF would be to disregard case-sensitivity and equate

words with their lexical derivations and synonyms. Future research might also include employing TF-IDF to performing searches in documents written in a different language than the query. Enhancing the already powerful TF-IDF algorithm would increase the success of query retrieval systems, which have quickly risen to become a key element of present global information exchange.

References

Berger, A & Lafferty, J. (1999). Information Retrieval as nd Statistical Translation. In Proceedings of the 22 ACM Conference on Research and Development in Information Retrieval (SIGIR99), 222-229. Berger, A et al (2000). Bridging the Lexical Chasm: Statistical Approaches to Answer Finding. In Proc. Int. Conf. Research and Development in Information Retrieval, 192-199. Berry, Michael W. et al. (1995). Using Linear Algebra for Intelligent Information Retrieval. SIAM Review, 37(4):177-196. Brown, Peter F. et al. (1990). A Statistical Approach to Machine Translation. In Computational Linguistics 16(2): 79-85. Littman, M., & Keim, G. (1997). Cross-Language Text Retrieval with Three Languages. In CS-1997-16, Duke University. Oren, Nir. (2002). Reexamining tf.idf based information retrieval with Genetic Programming. In Proceedings of SAICSIT 2002, 1-10. Salton, G. & Buckley, C. (1988). Term-weighing approache sin automatic text retrieval. In Information Processing & Management, 24(5): 513-523.

Вам также может понравиться

- Lecture Notes For Algorithms For Data Science: 1 Nearest NeighborsДокумент3 страницыLecture Notes For Algorithms For Data Science: 1 Nearest NeighborsLakshmiNarasimhan GNОценок пока нет

- REUTERS-21578 TEXT MINING REPORTДокумент51 страницаREUTERS-21578 TEXT MINING REPORTsabihuddin100% (1)

- Introduction to Reliable and Secure Distributed ProgrammingОт EverandIntroduction to Reliable and Secure Distributed ProgrammingОценок пока нет

- Implement of Salary Prediction System To Improve Student Motivation Using Data Mining Technique PDFДокумент6 страницImplement of Salary Prediction System To Improve Student Motivation Using Data Mining Technique PDFMokong SoaresОценок пока нет

- Introduction to Machine Learning in the Cloud with Python: Concepts and PracticesОт EverandIntroduction to Machine Learning in the Cloud with Python: Concepts and PracticesОценок пока нет

- Data Governance and Data Management: Contextualizing Data Governance Drivers, Technologies, and ToolsОт EverandData Governance and Data Management: Contextualizing Data Governance Drivers, Technologies, and ToolsОценок пока нет

- A Hands-On Guide To Text Classification With Transformer Models (XLNet, BERT, XLM, RoBERTa)Документ9 страницA Hands-On Guide To Text Classification With Transformer Models (XLNet, BERT, XLM, RoBERTa)sita deviОценок пока нет

- Bok:978 1 4899 7218 7 PDFДокумент375 страницBok:978 1 4899 7218 7 PDFPRITI DASОценок пока нет

- My ML Lab ManualДокумент21 страницаMy ML Lab ManualAnonymous qZP5Zyb2Оценок пока нет

- ML - Lab - Classifiers BLANK PDFДокумент68 страницML - Lab - Classifiers BLANK PDFAndy willОценок пока нет

- TensorFlow With RДокумент46 страницTensorFlow With RbiondimiОценок пока нет

- PTC Mathcad Prime 3.1 Migration Guide PDFДокумент58 страницPTC Mathcad Prime 3.1 Migration Guide PDFJose DuarteОценок пока нет

- Ordinal and Multinomial ModelsДокумент58 страницOrdinal and Multinomial ModelsSuhel Ahmad100% (1)

- Machine Learning Interview QuestionsДокумент8 страницMachine Learning Interview QuestionsPriya KoshtaОценок пока нет

- 000+ +curriculum+ +Complete+Data+Science+and+Machine+Learning+Using+PythonДокумент10 страниц000+ +curriculum+ +Complete+Data+Science+and+Machine+Learning+Using+PythonVishal MudgalОценок пока нет

- Introduction to Machine Learning in 40 CharactersДокумент59 страницIntroduction to Machine Learning in 40 CharactersJAYACHANDRAN J 20PHD0157Оценок пока нет

- Core Java Assignments: Day #2: Inheritance and PolymorphismДокумент2 страницыCore Java Assignments: Day #2: Inheritance and PolymorphismSai Anil Kumar100% (1)

- Dealing With Missing Data in Python PandasДокумент14 страницDealing With Missing Data in Python PandasSelloОценок пока нет

- Self Organized Maps: Inspire Educate TransformДокумент17 страницSelf Organized Maps: Inspire Educate TransformSahil GouthamОценок пока нет

- Pro Power BI Theme Creation: JSON Stylesheets for Automated Dashboard FormattingОт EverandPro Power BI Theme Creation: JSON Stylesheets for Automated Dashboard FormattingОценок пока нет

- IE 7374 Spring SyllabusДокумент5 страницIE 7374 Spring SyllabusArnold ZhangОценок пока нет

- Statistics and Machine Learning in PythonДокумент218 страницStatistics and Machine Learning in PythonvicbaradatОценок пока нет

- RM NotesДокумент50 страницRM NotesManu YuviОценок пока нет

- Decision Tree - GeeksforGeeksДокумент4 страницыDecision Tree - GeeksforGeeksGaurang singhОценок пока нет

- Regression Analysis in Machine LearningДокумент26 страницRegression Analysis in Machine Learningvepowo LandryОценок пока нет

- Principal Components Analysis (PCA) FinalДокумент23 страницыPrincipal Components Analysis (PCA) FinalendaleОценок пока нет

- Marketing Mix v5Документ38 страницMarketing Mix v5caldevilla515Оценок пока нет

- Text Mining Techniques and ApplicationsДокумент37 страницText Mining Techniques and ApplicationsRusli TaherОценок пока нет

- OrdinalexampleR PDFДокумент9 страницOrdinalexampleR PDFDamon CopelandОценок пока нет

- DATA SCIENCE INTERVIEW PREPARATION: V-NET ARCHITECTURE FOR BIOMEDICAL IMAGE SEGMENTATIONДокумент16 страницDATA SCIENCE INTERVIEW PREPARATION: V-NET ARCHITECTURE FOR BIOMEDICAL IMAGE SEGMENTATIONJulian TolosaОценок пока нет

- ML Lecture 11 (K Nearest Neighbors)Документ13 страницML Lecture 11 (K Nearest Neighbors)Md Fazle RabbyОценок пока нет

- Statistics For Data Science - 1Документ38 страницStatistics For Data Science - 1Akash Srivastava100% (1)

- UGC MODEL CURRICULUM STATISTICSДокумент101 страницаUGC MODEL CURRICULUM STATISTICSAlok ThakkarОценок пока нет

- Machine Learning in Action: Linear RegressionДокумент47 страницMachine Learning in Action: Linear RegressionRaushan Kashyap100% (1)

- Introduction to Parallel Computing: Matrix Multiplication and Decomposition MethodsДокумент52 страницыIntroduction to Parallel Computing: Matrix Multiplication and Decomposition MethodsMumbi NjorogeОценок пока нет

- Ridge and Lasso in Python PDFДокумент5 страницRidge and Lasso in Python PDFASHISH MALIОценок пока нет

- Data Mining Lab ManualДокумент44 страницыData Mining Lab ManualAmanpreet Kaur33% (3)

- Machine LearningДокумент62 страницыMachine LearningDoan Hieu100% (1)

- DBMSДокумент240 страницDBMShdabc123Оценок пока нет

- Crime Prediction in Nigeria's Higer InstitutionsДокумент13 страницCrime Prediction in Nigeria's Higer InstitutionsBenjamin BalaОценок пока нет

- Exploratory Data AnalysisДокумент203 страницыExploratory Data AnalysisSneha miniОценок пока нет

- R Programming InterviewДокумент24 страницыR Programming InterviewRahul ShrivastavaОценок пока нет

- Random ForestДокумент32 страницыRandom ForestHJ ConsultantsОценок пока нет

- Data Science Interview Questions ExplainedДокумент55 страницData Science Interview Questions ExplainedArunachalam Narayanan100% (2)

- Data Engineering Lab: List of ProgramsДокумент2 страницыData Engineering Lab: List of ProgramsNandini ChowdaryОценок пока нет

- SAS PresentationДокумент49 страницSAS PresentationVarun JainОценок пока нет

- Retrieval Utilities Improve ResultsДокумент19 страницRetrieval Utilities Improve ResultsTaran RishitОценок пока нет

- CS699 Introduction to Data MiningДокумент50 страницCS699 Introduction to Data Miningt naОценок пока нет

- A Novel Approach For Improving Packet Loss MeasurementДокумент86 страницA Novel Approach For Improving Packet Loss MeasurementChaitanya Kumar Reddy PotliОценок пока нет

- 6 Different Ways To Compensate For Missing Values in A DatasetДокумент6 страниц6 Different Ways To Compensate For Missing Values in A DatasetichaОценок пока нет

- Artificial Neural Networks Kluniversity Course HandoutДокумент18 страницArtificial Neural Networks Kluniversity Course HandoutHarish ParuchuriОценок пока нет

- SAS Programming Guidelines Interview Questions You'll Most Likely Be Asked: Job Interview Questions SeriesОт EverandSAS Programming Guidelines Interview Questions You'll Most Likely Be Asked: Job Interview Questions SeriesОценок пока нет

- Conners 3 Parent Assessment ReportДокумент19 страницConners 3 Parent Assessment ReportSamridhi VermaОценок пока нет

- NotesДокумент100 страницNotesUgo PetrusОценок пока нет

- English: Quarter 2 - Module 7: A Venture To The Wonders of Reading and ListeningДокумент32 страницыEnglish: Quarter 2 - Module 7: A Venture To The Wonders of Reading and ListeningMercy GanasОценок пока нет

- Teacher support and student disciplineДокумент3 страницыTeacher support and student disciplineZamZamieОценок пока нет

- Module 1 - Lesson 1 - PR3Документ39 страницModule 1 - Lesson 1 - PR3Cressia Mhay BaroteaОценок пока нет

- Ged 105 - Main Topic 1Документ33 страницыGed 105 - Main Topic 1Dannica Keyence MagnayeОценок пока нет

- NSD TestScoresДокумент6 страницNSD TestScoresmapasolutionОценок пока нет

- Elderly Depression Reduced by Structured Reminiscence TherapyДокумент23 страницыElderly Depression Reduced by Structured Reminiscence TherapyRoberto Carlos Navarro QuirozОценок пока нет

- A Semi-Detailed Lesson Plan in Physical Education 7: ValuesДокумент3 страницыA Semi-Detailed Lesson Plan in Physical Education 7: ValuesRose Glaire Alaine Tabra100% (1)

- Disc Personality Test Result - Free Disc Types Test Online at 123testДокумент3 страницыDisc Personality Test Result - Free Disc Types Test Online at 123testapi-522595985Оценок пока нет

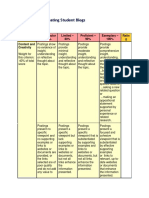

- A Rubric For Evaluating Student BlogsДокумент5 страницA Rubric For Evaluating Student Blogsmichelle garbinОценок пока нет

- Emerys IepДокумент15 страницEmerys Iepapi-300745138Оценок пока нет

- Knowledge Base Model For Call Center Department: A Literature ReviewДокумент6 страницKnowledge Base Model For Call Center Department: A Literature ReviewElma AvdagicОценок пока нет

- Writing ObjectivesДокумент32 страницыWriting ObjectivesTita RakhmitaОценок пока нет

- Mba 504Документ2 страницыMba 504api-3782519Оценок пока нет

- Cause and Effect LessonДокумент3 страницыCause and Effect Lessonapi-357598198Оценок пока нет

- Project Scheduling PERTCPMДокумент17 страницProject Scheduling PERTCPMEmad Bayoumi NewОценок пока нет

- Grade 5 Wetlands EcosystemsДокумент4 страницыGrade 5 Wetlands EcosystemsThe Lost ChefОценок пока нет

- Knu Plan 2-6 Sept 2019Документ4 страницыKnu Plan 2-6 Sept 2019tehminaОценок пока нет

- Psychology From YoutubeДокумент5 страницPsychology From YoutubeMharveeann PagalingОценок пока нет

- Theories and Models in CommunicationДокумент19 страницTheories and Models in CommunicationMARZAN MA RENEEОценок пока нет

- Learning Experience 1-14Документ125 страницLearning Experience 1-14Yvonne John PuspusОценок пока нет

- Joyce TravelbeeДокумент9 страницJoyce TravelbeeCj ScarletОценок пока нет

- Goal 3 Cspel 4Документ4 страницыGoal 3 Cspel 4api-377039370Оценок пока нет

- Course Syllabus English B2Документ8 страницCourse Syllabus English B2Ale RomeroОценок пока нет

- Satisfaction With Life ScaleДокумент7 страницSatisfaction With Life Scale'Personal development program: Personal development books, ebooks and pdfОценок пока нет

- The Actor in You Teacher's GuideДокумент9 страницThe Actor in You Teacher's GuideIonut IvanovОценок пока нет

- Introduction To LinguisticsДокумент40 страницIntroduction To LinguisticsFernandita Gusweni Jayanti0% (1)

- Barreno, Marou P.: Performance Task 1Документ3 страницыBarreno, Marou P.: Performance Task 1mars100% (2)

- General and Behavioral Interview QuestionsДокумент3 страницыGeneral and Behavioral Interview QuestionsAbhishek VermaОценок пока нет