Академический Документы

Профессиональный Документы

Культура Документы

Introduction To Machine Learning Lecture 2: Linear Regression

Загружено:

Deepa DevarajИсходное описание:

Оригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Introduction To Machine Learning Lecture 2: Linear Regression

Загружено:

Deepa DevarajАвторское право:

Доступные форматы

CSC2515 Fall 2007

Introduction to Machine Learning

Lecture 2: Linear regression

All lecture slides will be available as .ppt, .ps, & .htm at

www.cs.toronto.edu/~hinton

Many of the figures are provided by Chris Bishop

from his textbook: Pattern Recognition and Machine Learning

Linear models

It is mathematically easy to fit linear models to data.

We can learn a lot about model-fitting in this relatively simple

case.

There are many ways to make linear models more powerful while

retaining their nice mathematical properties:

By using non-linear, non-adaptive basis functions, we can get

generalised linear models that learn non-linear mappings from

input to output but are linear in their parameters only the linear

part of the model learns.

By using kernel methods we can handle expansions of the raw

data that use a huge number of non-linear, non-adaptive basis

functions.

By using large margin kernel methods we can avoid overfitting

even when we use huge numbers of basis functions.

But linear methods will not solve most AI problems.

They have fundamental limitations.

Some types of basis function in 1-D

Sigmoids Gaussians Polynomials

Sigmoid and Gaussian basis functions can also be used in

multilayer neural networks, but neural networks learn the

parameters of the basis functions. This is much more powerful but

also much harder and much messier.

Two types of linear model that are

equivalent with respect to learning

The first model has the same number of adaptive

coefficients as the dimensionality of the data +1.

The second model has the same number of adaptive

coefficients as the number of basis functions +1.

Once we have replaced the data by the outputs of the basis

functions, fitting the second model is exactly the same

problem as fitting the first model (unless we use the kernel

trick)

So its silly to clutter up the math with basis functions

) ( ... ) ( ) ( ) (

... ) (

2 2 1 1 0

2 2 1 1 0

x w x x w x,

x w w x,

u = + + + =

= + + + =

T

T

w w w y

x w x w w y

| |

bias

The loss function

Fitting a model to data is typically done by finding the

parameter values that minimize some loss function.

There are many possible loss functions. What criterion

should we use for choosing one?

Choose one that makes the math easy (squared error)

Choose one that makes the fitting correspond to

maximizing the likelihood of the training data given

some noise model for the observed outputs.

Choose one that makes it easy to interpret the learned

coefficients (easy if mostly zeros)

Choose one that corresponds to the real loss on a

practical application (losses are often asymmetric)

Minimizing squared error

t X X X w

x w

x w

T T

n

T

n

n

T

t error

y

1 *

2

) (

) (

=

=

=

optimal

weights

inverse of the

covariance

matrix of the

input vectors

the transposed

design matrix has

one input vector

per column

vector of

target values

A geometrical view of the solution

The space has one axis for

each training case.

So the vector of target values

is a point in the space.

Each vector of the values of

one component of the input is

also a point in this space.

The input component vectors

span a subspace, S.

A weighted sum of the

input component vectors

must lie in S.

The optimal solution is the

orthogonal projection of the

vector of target values onto S.

3.1 4.2

1.5 2.7

0.6 1.8

input vector

component

vector

When is minimizing the squared error equivalent to

Maximum Likelihood Learning?

Minimizing the squared

residuals is equivalent to

maximizing the log probability

of the correct answer under a

Gaussian centered at the

models guess.

t = the

correct

answer

y = models

estimate of most

probable value

2

2

2

) (

2

) (

log 2 log ) | ( log

2

1

) , | ( ) | (

) , (

2

2

o

o t

o t

o

n n

n n

y t

n n n n n

n n

y t

y t p

t noise y p y t p

y y

n n

e

+ + =

= = + =

=

w x

w x

can be ignored

if sigma is fixed

can be ignored if

sigma is same

for every case

Multiple outputs

If there are multiple outputs we can often treat the

learning problem as a set of independent problems, one

per output.

Not true if the output noise is correlated and changes

from case to case.

Even though they are independent problems we can

save work by only multiplying the input vectors by the

inverse covariance of the input components once. For

output k we have:

k

T T

k

t X X X w

1 *

) (

=

does not

depend on a

Least mean squares: An alternative approach for

really big datasets

This is called online learning. It can be more efficient if the dataset is

very redundant and it is simple to implement in hardware.

It is also called stochastic gradient descent if the training cases are

picked at random.

Care must be taken with the learning rate to prevent divergent

oscillations, and the rate must decrease at the end to get a good fit.

) (

1

t

t t

q

n

E V =

+

w w

weights after

seeing training

case tau+1

learning

rate

vector of derivatives of the

squared error w.r.t. the

weights on the training case

presented at time tau.

Regularized least squares

2

1

|| || } ) , ( { ) (

~

2

2

2

1

w w x w

+

=

=

n n

t y E

N

n

t X X X I w

T T 1 *

) (

+ =

The penalty on the squared weights is

mathematically compatible with the squared

error function, so we get a nice closed form

for the optimal weights with this regularizer:

identity

matrix

A picture of the effect of the regularizer

The overall cost function

is the sum of two

parabolic bowls.

The sum is also a

parabolic bowl.

The combined minimum

lies on the line between

the minimum of the

squared error and the

origin.

The regularizer just

shrinks the weights.

A problem with the regularizer

We would like the solution we find to be independent of the units we

use to measure the components of the input vector.

If different components have different units (e.g. age and height), we

have a problem.

If we measure age in months and height in meters, the relative

values of the two weights are very different than if we use years

and millemeters. So the squared penalty has very different

effects.

One way to avoid the units problem: Whiten the data so that the

input components all have unit variance and no covariance. This

stops the regularizer from being applied to the whitening matrix.

But this can cause other problems when two input components

are almost perfectly correlated.

We really need a prior on the weight on each input component.

T T T

whitened

X X X X

2

1

) (

=

Why does shrinkage help?

Suppose you have an unbiased estimator for the

price of corn and an unbiased estimator for the

number of fouls committed by the leafs.

You can improve each estimate by taking a

weighted average with the other:

For some positive epsilon, this estimate will have

a smaller squared error (but it will be biased).

leafs corn corn

y y y c c + = ) 1 (

~

Why shrinkage helps

residual

0

corn corn

t y

corn leafs

t y

one example of

If we move all the blue residuals towards the green arrow by an

amount proportional to their difference, we are bound to reduce the

average squared magnitudes of the residuals. So if we pick a blue

point at random, we reduce the expected residual.

only the red

points get worse

Other regularizers

We do not need to use the squared error,

provided we are willing to do more computation.

Other powers of the weights can be used.

The lasso: penalizing the absolute values of

the weights

Finding the minimum requires quadratic programming

but its still unique because the cost function is convex (a

bowl plus an inverted pyramid)

As lambda is increased, many of the weights go to

exactly zero.

This is great for interpretation, and it is also pretty

good for preventing overfitting.

=

=

i

i n n

t y E

N

n

| | } ) , ( { ) (

~

2

2

1

1

w w x w

A geometrical view of the lasso compared

with a penalty on the squared weights

Notice that w1=0

at the optimum

An example where minimizing the squared

error gives terrible estimates

Suppose we have a network of 500

computers and they all have

slightly imperfect clocks.

After doing statistics 101 we decide

to improve the clocks by averaging

all the times to get a least squares

estimate

Then we broadcast the average

to all of the clocks.

Problem: The probability of being

wrong by ten hours is more than

one hundredth of the probability of

being wrong by one hour. In fact, its

about the same!

error

0

n

e

g

a

t

i

v

e

l

o

g

p

r

o

b

o

f

e

r

r

o

r

One dimensional cross-sections of loss

functions with different powers

Negative log of Gaussian Negative log of Laplacian

Minimizing the absolute error

This minimization involves solving a linear

programming problem.

It corresponds to maximum likelihood estimation

if the output noise is modeled by a Laplacian

instead of a Gaussian.

n

n

T

n over

t | | min x w

w

const y t a y t p

e a y t p

n n n n

y t a

n n

n n

+ =

=

| | ) | ( log

) | (

| |

The bias-variance trade-off

(a figment of the frequentists lack of imagination?)

Imagine that the training set was drawn at random from a

whole set of training sets.

The squared loss can be decomposed into a bias term

and a variance term.

Bias = systematic error in the models estimates

Variance = noise in the estimates cause by sampling

noise in the training set.

There is also an additional loss due to the fact that the

target values are noisy.

We eliminate this extra, irreducible loss from the math

by using the average target values (i.e. the unknown,

noise-free values)

{ } { }

{ }

D

D n n

n

D

n

D

n n

D y D y

t D y t D y

2

2

2

) ; ( ) ; (

) ; ( ) ; (

> < +

=

x x

x x

average

target

value for

test case n

models estimate

for test case n

when trained on

dataset D

angle brackets are

physics notation

for expectation

over D

The bias term is the squared error of the

average, over all training datasets, of the

estimates.

The variance term is the variance, over all training

datasets, of the models estimate.

see Bishop page 149 for a derivation using a different notation

The bias-variance decomposition

How the regularization parameter affects the bias

and variance terms

low bias high bias

low variance high variance

4 . 2

= e

31 .

= e

6 . 2

e =

An example of the bias-variance trade-off

Beating the bias-variance trade-off

We can reduce the variance term by averaging lots of

models trained on different datasets.

This seems silly. If we had lots of different datasets it

would be better to combine them into one big training

set.

With more training data there will be much less variance.

Weird idea: We can create different datasets by bootstrap

sampling of our single training dataset.

This is called bagging and it works surprisingly well.

But if we have enough computation its better to do the

right Bayesian thing:

Combine the predictions of many models using the

posterior probability of each parameter vector as the

combination weight.

The Bayesian approach

Consider a very simple linear model that only has two

parameters:

It is possible to display the full posterior distribution over

the two-dimensional parameter space.

The likelihood term is a Gaussian, so if we use a

Gaussian prior the posterior will be Gaussian:

This is a conjugate prior. It means that the prior is just

like having already observed some data.

x w w x y

1 0

) , ( + = w

const t p

p

t p

T

N

n

n

T

n

N

n

n

T

n

+ + =

=

=

[

=

=

w w x w t | w

I w w

x w w X, t

1

2

1

1

1

2

) (

2

) ( ln

) , 0 | ( ) | (

) , | ( ) , | (

o |

o o

| |

N

likelihood

conjugate

prior

Gaussian

variance of

output noise

inverse

variance

of prior

|

o

=

The Bayesian interpretation of

the regularization parameter:

With no data we

sample lines from

the prior.

With 20 data

points, the prior

has little effect

Using the posterior distribution

If we can afford the computation, we ought to average

the predictions of all parameter settings using the

posterior distribution to weight the predictions:

w w w d D p x t p D x t p

test test test test

) , , | ( ) , , | ( ) , , , | ( | o | | o

}

=

training

data

precision

of output

noise

precision

of prior

The predictive distribution for noisy

sinusoidal data modeled by a linear

combination of nine radial basis functions.

A way to see the covariance of the

predictions for different values of x

We sample

models at

random from

the posterior

and show the

mean of the

each models

predictions

Bayesian model comparison

We usually need to decide between many different models:

Different numbers of basis functions

Different types of basis functions

Different strengths of regularizers

The frequentist way to decide between models is to hold back a

validation set and pick the model that does best on the validation

data.

This gives less training data. We can use a small validation set

and evaluate models by training many different times using

different small validation sets. But this is tedious.

The Bayesian alternative is to use all of the data for training each

model and to use the evidence to pick the best model (or to

average over models).

The evidence is the marginal likelihood with the parameters

integrated out.

Definition of the evidence

The evidence is the normalizing term in the

expression for the posterior probability of a

weight vector given a dataset and a model class

w w w d M p M D p M D p

i i i

) | ( ) , | ( ) | (

}

=

) | (

) , | ( ) | (

) , | (

i

i i

i

M D p

M D p M p

M D p

w w

w =

Using the evidence

Now we use the evidence for a model class in exactly

the same way as we use the likelihood term for a

particular setting of the parameters

The evidence gives us a posterior distribution over

model classes, provided we have a prior.

For simplicity in making predictions we often just pick

the model class with the highest posterior probability.

This is called model selection.

But we should still average over the parameter vectors for

that model class using the posterior distribution.

) | ( ) ( ) | (

i i i

M D p M p D M p

How the model complexity affects the evidence

Increasingly complicated data

Determining the hyperparameters that

specify the variance of the prior and the

variance of the output noise.

Ideally, when making a prediction, we would like to

integrate out the hyperparameters, just like we integrate

out the weights

But this is infeasible even when everything is

Gaussian.

Empirical Bayes (also called the evidence approximation)

means integrating out the parameters but maximizing over

the hyperparameters.

Its more feasible and often works well.

It creates ideological disputes.

Empirical Bayes

The equation above is the right predictive distribution (assuming we

do not have hyperpriors for alpha and beta).

The equation below is a more tractable approximation that works

well if the posterior distributions for alpha and beta are highly

peaked (so the distributions are well approximated by their most

likely values)

| o | o | o | d d d D p D p t p D t p w w w x x ) | , ( ) , , | ( ) , , | ( ) , | (

} } }

=

target and

input on

test case

training

data

point estimates of alpha and beta

that maximize the evidence

| o | o | o | | o d d d D p D p t p D t p D t p w w w x x x ) |

( ) ,

| ( ) ,

, | ( )

, , | ( ) , | (

}

= ~

precision

of output

noise

precision

of prior

Вам также может понравиться

- NA FinalExam Summer15 PDFДокумент8 страницNA FinalExam Summer15 PDFAnonymous jITO0qQHОценок пока нет

- HSC4537 SyllabusДокумент10 страницHSC4537 Syllabusmido1770Оценок пока нет

- Sword of The Spirit at Franciscan UniversityДокумент11 страницSword of The Spirit at Franciscan UniversityJohn Flaherty100% (1)

- Neural Network Lectures RBF 1Документ44 страницыNeural Network Lectures RBF 1Yekanth RamОценок пока нет

- Lec 10 SVMДокумент35 страницLec 10 SVMvijayОценок пока нет

- Unit IVДокумент100 страницUnit IVMugizhan NagendiranОценок пока нет

- Numerical Methods Chapter 1 3 4Документ44 страницыNumerical Methods Chapter 1 3 4dsnОценок пока нет

- Lecture 4Документ33 страницыLecture 4Venkat ram ReddyОценок пока нет

- Divide and ConquerДокумент50 страницDivide and Conquerjoe learioОценок пока нет

- EE5434 RegressionДокумент96 страницEE5434 Regressionrui sunОценок пока нет

- Numerical Methods - An IntroductionДокумент30 страницNumerical Methods - An IntroductionPrisma FebrianaОценок пока нет

- Exercise 4: Self-Organizing Maps: Articial Neural Networks and Other Learning Systems, 2D1432Документ7 страницExercise 4: Self-Organizing Maps: Articial Neural Networks and Other Learning Systems, 2D1432Durai ArunОценок пока нет

- Lecture1 6 PDFДокумент30 страницLecture1 6 PDFColinОценок пока нет

- MLCH9Документ45 страницMLCH9sam33rdhakalОценок пока нет

- Dynamicprogramming 160512234533Документ30 страницDynamicprogramming 160512234533Abishek joshuaОценок пока нет

- Math 243M, Numerical Linear Algebra Lecture NotesДокумент71 страницаMath 243M, Numerical Linear Algebra Lecture NotesRonaldОценок пока нет

- Linear AlgebraДокумент65 страницLinear AlgebraWilliam Hartono100% (1)

- Sparse 1Документ68 страницSparse 1abiОценок пока нет

- 5 Round-Off Errors and Truncation ErrorsДокумент35 страниц5 Round-Off Errors and Truncation ErrorsEdryan PoОценок пока нет

- Linear AlgebraДокумент65 страницLinear AlgebraMariyah ZainОценок пока нет

- Linear RegressionДокумент29 страницLinear RegressionSoubhav ChamanОценок пока нет

- Teknik Komputasi (TEI 116) : 3 Sks Oleh: Husni Rois Ali, S.T., M.Eng. Noor Akhmad Setiawan, S.T., M.T., PH.DДокумент44 страницыTeknik Komputasi (TEI 116) : 3 Sks Oleh: Husni Rois Ali, S.T., M.Eng. Noor Akhmad Setiawan, S.T., M.T., PH.DblackzenyОценок пока нет

- AI-Lecture 12 - Simple PerceptronДокумент24 страницыAI-Lecture 12 - Simple PerceptronMadiha Nasrullah100% (1)

- Unit 2Документ37 страницUnit 2PoornaОценок пока нет

- SUMSEM2022-23 CSI3020 TH VL2022230700600 2023-06-16 Reference-Material-IДокумент49 страницSUMSEM2022-23 CSI3020 TH VL2022230700600 2023-06-16 Reference-Material-IVishnu MukundanОценок пока нет

- PWD 2019 20 Class 9 Final PDFДокумент21 страницаPWD 2019 20 Class 9 Final PDFGuille FKОценок пока нет

- Discretization of EquationДокумент14 страницDiscretization of Equationsandyengineer13Оценок пока нет

- Numc PDFДокумент18 страницNumc PDFMadhur MayankОценок пока нет

- Supervised Learning 1 PDFДокумент162 страницыSupervised Learning 1 PDFAlexanderОценок пока нет

- Divide and ConquerДокумент17 страницDivide and Conquernishtha royОценок пока нет

- Lec 6 TutorialДокумент27 страницLec 6 TutorialsentryОценок пока нет

- Numerical Programming I (For CSE) : Final ExamДокумент8 страницNumerical Programming I (For CSE) : Final ExamhisuinОценок пока нет

- CmdieДокумент8 страницCmdieBeny AbdouОценок пока нет

- L5 Normal Equations For Regression PDFДокумент20 страницL5 Normal Equations For Regression PDFjoseph karimОценок пока нет

- Lecture 2 MathДокумент34 страницыLecture 2 Mathnikola001Оценок пока нет

- Integration of Ordinary Differential Equations: + Q (X) R (X) (16.0.1)Документ4 страницыIntegration of Ordinary Differential Equations: + Q (X) R (X) (16.0.1)Vinay GuptaОценок пока нет

- Week 7Документ53 страницыWeek 7Khaled TarekОценок пока нет

- Clustering (Unit 3)Документ71 страницаClustering (Unit 3)vedang maheshwari100% (1)

- Lecture 1Документ17 страницLecture 1amjadtawfeq2Оценок пока нет

- DSCI 303: Machine Learning For Data Science Fall 2020Документ5 страницDSCI 303: Machine Learning For Data Science Fall 2020Anonymous StudentОценок пока нет

- SortingДокумент57 страницSortingSanjeeb PradhanОценок пока нет

- Unit 4,5Документ13 страницUnit 4,5ggyutuygjОценок пока нет

- CIS 520, Machine Learning, Fall 2015: Assignment 2 Due: Friday, September 18th, 11:59pm (Via Turnin)Документ3 страницыCIS 520, Machine Learning, Fall 2015: Assignment 2 Due: Friday, September 18th, 11:59pm (Via Turnin)DiDo Mohammed AbdullaОценок пока нет

- Recursive Binary Search AlgorithmДокумент25 страницRecursive Binary Search AlgorithmChhaya NarvekarОценок пока нет

- Komputasi TK Kuliah 1 Genap1213Документ33 страницыKomputasi TK Kuliah 1 Genap1213Ferdian TjioeОценок пока нет

- Assignment ProblemДокумент16 страницAssignment ProblemSamia RahmanОценок пока нет

- SVM Using PythonДокумент24 страницыSVM Using PythonRavindra AmbilwadeОценок пока нет

- Chapter 8 Part 2 Distribution ModelДокумент23 страницыChapter 8 Part 2 Distribution ModelernieОценок пока нет

- Unit 8 Assignment Problem: StructureДокумент14 страницUnit 8 Assignment Problem: StructurezohaibОценок пока нет

- w3 - Linear Model - Linear RegressionДокумент33 страницыw3 - Linear Model - Linear RegressionSwastik SindhaniОценок пока нет

- CPSC 540 Assignment 1 (Due January 19)Документ9 страницCPSC 540 Assignment 1 (Due January 19)JohnnyDoe0x27AОценок пока нет

- Python Basics NympyДокумент5 страницPython Basics NympyikramОценок пока нет

- COMP2230 Introduction To Algorithmics: Lecture OverviewДокумент35 страницCOMP2230 Introduction To Algorithmics: Lecture OverviewMrZaggyОценок пока нет

- Random ForestДокумент83 страницыRandom ForestBharath Reddy MannemОценок пока нет

- ML NotesДокумент79 страницML NotesIna VladОценок пока нет

- Olympiad Combinatorics PDFДокумент255 страницOlympiad Combinatorics PDFKrutarth Patel100% (5)

- Assignment 2Документ4 страницыAssignment 210121011Оценок пока нет

- A-level Maths Revision: Cheeky Revision ShortcutsОт EverandA-level Maths Revision: Cheeky Revision ShortcutsРейтинг: 3.5 из 5 звезд3.5/5 (8)

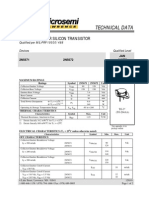

- Support Component List: Components For Spacecraft ElectronicsДокумент22 страницыSupport Component List: Components For Spacecraft ElectronicsDeepa DevarajОценок пока нет

- Recipes From Mum's Kitchen - Mutton BiryaniДокумент3 страницыRecipes From Mum's Kitchen - Mutton BiryaniDeepa DevarajОценок пока нет

- Standard Wire Gauge ListДокумент3 страницыStandard Wire Gauge ListDeepa DevarajОценок пока нет

- Spacewire PCBДокумент1 страницаSpacewire PCBDeepa DevarajОценок пока нет

- Spec - Dappro 16Mb SRAM - 5V - QFP68Документ23 страницыSpec - Dappro 16Mb SRAM - 5V - QFP68Deepa DevarajОценок пока нет

- EC Announces Dates For Polls in Five StatesДокумент3 страницыEC Announces Dates For Polls in Five StatesDeepa DevarajОценок пока нет

- Results PO 2013Документ22 страницыResults PO 2013Deepa DevarajОценок пока нет

- 2N6849 LP PMOS IR For Neg SupplyДокумент7 страниц2N6849 LP PMOS IR For Neg SupplyDeepa DevarajОценок пока нет

- Tina Ti Spice ModelsДокумент17 страницTina Ti Spice Modelsdragos_bondОценок пока нет

- Systems Advantages of 1553Документ8 страницSystems Advantages of 1553Deepa DevarajОценок пока нет

- Silicon NPN Power Transistors: Savantic Semiconductor Product SpecificationДокумент3 страницыSilicon NPN Power Transistors: Savantic Semiconductor Product SpecificationDeepa DevarajОценок пока нет

- 2n7389 Irhf9130Документ8 страниц2n7389 Irhf9130Deepa DevarajОценок пока нет

- Vehicle Rto Exam FaqДокумент1 страницаVehicle Rto Exam FaqDeepa DevarajОценок пока нет

- 2N6849Документ2 страницы2N6849Deepa DevarajОценок пока нет

- 2N5672Документ4 страницы2N5672Deepa DevarajОценок пока нет

- Technical Data: PNP High Power Silicon TransistorДокумент3 страницыTechnical Data: PNP High Power Silicon TransistorDeepa DevarajОценок пока нет

- NPN 2N5671 - 2N5672: H I G H C U R R e N T F A S T S W I T C H I N G A P P L I C A T I o N SДокумент0 страницNPN 2N5671 - 2N5672: H I G H C U R R e N T F A S T S W I T C H I N G A P P L I C A T I o N SDeepa DevarajОценок пока нет

- 2N3792Документ4 страницы2N3792Deepa DevarajОценок пока нет

- 2N5672Документ13 страниц2N5672Deepa DevarajОценок пока нет

- 2 N 5153Документ3 страницы2 N 5153Deepa DevarajОценок пока нет

- Technical Data: NPN High Power Silicon TransistorДокумент2 страницыTechnical Data: NPN High Power Silicon TransistorDeepa DevarajОценок пока нет

- 2N3792Документ4 страницы2N3792Deepa DevarajОценок пока нет

- 1 N 5822Документ3 страницы1 N 5822Deepa DevarajОценок пока нет

- 2N3700UBДокумент0 страниц2N3700UBDeepa DevarajОценок пока нет

- 2N3637Документ0 страниц2N3637Deepa DevarajОценок пока нет

- 2N3019Документ0 страниц2N3019Deepa DevarajОценок пока нет

- Description Appearance: Surface Mount Voidless-Hermetically-Sealed Ultra Fast Recovery Glass RectifiersДокумент0 страницDescription Appearance: Surface Mount Voidless-Hermetically-Sealed Ultra Fast Recovery Glass RectifiersDeepa DevarajОценок пока нет

- Diodo Shotky 1n5817-5819Документ9 страницDiodo Shotky 1n5817-5819Alejandro Esteban VilaОценок пока нет

- 1 N 5822Документ3 страницы1 N 5822Deepa DevarajОценок пока нет

- Description Appearance: Surface Mount Voidless-Hermetically-Sealed Ultra Fast Recovery Glass RectifiersДокумент0 страницDescription Appearance: Surface Mount Voidless-Hermetically-Sealed Ultra Fast Recovery Glass RectifiersDeepa DevarajОценок пока нет

- Customer IdentifyingДокумент11 страницCustomer Identifyinganon_363811381100% (2)

- Discussion Report - Language, Langue and ParoleДокумент3 страницыDiscussion Report - Language, Langue and ParoleIgnacio Jey BОценок пока нет

- Report On Descriptive Statistics and Item AnalysisДокумент21 страницаReport On Descriptive Statistics and Item Analysisapi-3840189100% (1)

- Reaction Paper On Thesis ProposalДокумент2 страницыReaction Paper On Thesis ProposalDann SarteОценок пока нет

- Explanatory Models and Practice Models of SHRDДокумент5 страницExplanatory Models and Practice Models of SHRDSonia TauhidОценок пока нет

- Critical Reflective Techniques - Ilumin, Paula Joy DДокумент2 страницыCritical Reflective Techniques - Ilumin, Paula Joy DPaula Joy IluminОценок пока нет

- Anatomy and Surgical Approaches To Lateral VentriclesДокумент39 страницAnatomy and Surgical Approaches To Lateral VentriclesKaramsi Gopinath NaikОценок пока нет

- Natural Tactical Systems (NTS) 5 Day 360° CQD Basic Instructor CourseДокумент6 страницNatural Tactical Systems (NTS) 5 Day 360° CQD Basic Instructor Coursejhon fierroОценок пока нет

- Review of Present Tense - 1st HomeworkДокумент5 страницReview of Present Tense - 1st HomeworkPaola SОценок пока нет

- Advanced Pharmaceutical Engineering (Adpharming) : Accreditation Course AimsДокумент2 страницыAdvanced Pharmaceutical Engineering (Adpharming) : Accreditation Course AimsJohn AcidОценок пока нет

- Csov HomeworkДокумент4 страницыCsov Homeworkerb6df0n100% (1)

- Naveen Reddy Billapati: Bachelor of Technology in Mechanical EngineeringДокумент2 страницыNaveen Reddy Billapati: Bachelor of Technology in Mechanical EngineeringvikОценок пока нет

- A Detailed Lesson Plan For Grade 7 Final DemoДокумент9 страницA Detailed Lesson Plan For Grade 7 Final DemoEljean LaclacОценок пока нет

- Project NEXT Accomplishment ReportДокумент5 страницProject NEXT Accomplishment ReportRodolfo NelmidaОценок пока нет

- Exam DLLДокумент2 страницыExam DLLlalhang47Оценок пока нет

- Project SynopsisДокумент7 страницProject SynopsisAbinash MishraОценок пока нет

- Course: Life and Works of Rizal: Sand Course Intended Learning RoutcomeДокумент15 страницCourse: Life and Works of Rizal: Sand Course Intended Learning RoutcomeDaryl HilongoОценок пока нет

- Project RizalДокумент2 страницыProject RizalTyrene Samantha SomisОценок пока нет

- Pricing Tutorial MEXLv2 PDFДокумент8 страницPricing Tutorial MEXLv2 PDFjegosssОценок пока нет

- Capstone IdeasДокумент9 страницCapstone IdeastijunkОценок пока нет

- Review of Effective Human Resource Management Techniques in Agile Software Project ManagementДокумент6 страницReview of Effective Human Resource Management Techniques in Agile Software Project ManagementAmjad IqbalОценок пока нет

- Broadcaster 2012 89-2 WinterДокумент25 страницBroadcaster 2012 89-2 WinterConcordiaNebraskaОценок пока нет

- How Many Hours Should One Study To Crack The Civil Services Exam?Документ27 страницHow Many Hours Should One Study To Crack The Civil Services Exam?654321Оценок пока нет

- 14thconvo Booklet at IIT GДокумент73 страницы14thconvo Booklet at IIT GMallikarjunPerumallaОценок пока нет

- Aby Warburg's, Bilderatlas Mnemosyne': Systems of Knowledge and Iconography, In: Burlington Magazine 162, 2020, Pp. 1078-1083Документ7 страницAby Warburg's, Bilderatlas Mnemosyne': Systems of Knowledge and Iconography, In: Burlington Magazine 162, 2020, Pp. 1078-1083AntonisОценок пока нет

- Sing A Joyful Song - PREVIEWДокумент5 страницSing A Joyful Song - PREVIEWRonilo, Jr. CalunodОценок пока нет

- Bank of India (Officers') Service Regulations, 1979Документ318 страницBank of India (Officers') Service Regulations, 1979AtanuОценок пока нет

- Music Discoveries: Discover More at The ShopДокумент3 страницыMusic Discoveries: Discover More at The ShopyolandaferniОценок пока нет