
Independent Component Analysis For Time Series Separation

Uploaded by: Pravin Bhole


ICA

Blind Signal Separation (BSS), or Independent Component Analysis (ICA), is the identification and separation of mixtures of sources using little prior information.

Applications include:

Audio Processing

Medical data

Finance

Array processing (beamforming)

Coding

and most applications where Factor Analysis and PCA are currently used.

While PCA seeks directions that represent the data best in a mean-squared-error sense, $\|x_0 - x\|^2$, ICA seeks directions that are maximally independent of each other.

We will concentrate on time-series separation of multiple targets.

The simple Cocktail Party Problem

[Figure: sources $s_1, s_2$ are combined by the mixing matrix $A$ into observations $x_1, x_2$.]

$$x = As$$

with n sources and m = n observations.
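To make the mixing model concrete, here is a minimal NumPy sketch of the cocktail-party setup with two synthetic "speakers". The signals, the sampling rate `fs`, and the mixing matrix `A` are hypothetical values chosen for illustration; each microphone receives $x_i(t) = a_{i1}s_1(t) + a_{i2}s_2(t)$.

```python
import numpy as np

# Hypothetical sources: a 5 Hz sinusoid and a 3 Hz square wave, sampled at fs = 1 kHz.
fs = 1000.0
T = 1000                                  # number of samples (1 second)
t = np.arange(T) / fs
s1 = np.sin(2 * np.pi * 5 * t)
s2 = np.sign(np.sin(2 * np.pi * 3 * t))
s = np.vstack([s1, s2])                   # shape (n, T), n = 2 sources

# Hypothetical mixing matrix: a_ij is the gain from speaker j to microphone i.
A = np.array([[0.8, 0.4],
              [0.3, 0.9]])
x = A @ s                                 # shape (m, T), m = n = 2 observations

print(x.shape)  # (2, 1000)
```

Each row of `x` is one microphone recording; ICA only ever sees `x`.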

Motivation

[Figure: two independent sources and their mixtures at two microphones.]

$$x_1(t) = a_{11} s_1 + a_{12} s_2$$

$$x_2(t) = a_{21} s_1 + a_{22} s_2$$

The coefficients $a_{ij}$ depend on the distances of the microphones from the speakers.

Motivation

Get the Independent Signals out of the Mixture

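ICA Model (Noise Free)

Use a statistical latent-variable model: each source is treated as a random variable $s_k$ instead of a time signal, and each observation is a linear mixture

$$x_j = a_{j1} s_1 + a_{j2} s_2 + \dots + a_{jn} s_n \quad \text{for all } j,$$

or, in matrix form,

$$x = As.$$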

The ICs $s$ are latent variables and are unknown, and the mixing matrix $A$ is also unknown.

Task: estimate $A$ and $s$ using only the observable random vector $x$.

Let us assume that the number of ICs equals the number of observed mixtures, so that $A$ is square and invertible. After estimating $A$ we can compute $W = A^{-1}$ and hence

$$s = Wx = A^{-1}x.$$
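If $A$ were known, unmixing would be a single matrix product. The sketch below assumes a known `A` (in real ICA it has to be estimated from `x` alone), uses hypothetical Laplacian sources, and checks that $W = A^{-1}$ recovers them exactly.

```python
import numpy as np

rng = np.random.default_rng(0)
s = rng.laplace(size=(2, 500))            # hypothetical non-Gaussian sources
A = np.array([[2.0, 3.0],
              [2.0, 1.0]])                # assumed known here; in ICA it is unknown
x = A @ s                                 # observed mixtures

W = np.linalg.inv(A)                      # W = A^{-1}, requires A square and invertible
s_hat = W @ x                             # s = W x

print(np.allclose(s_hat, s))              # True
```

With an estimated $W$ the recovery is only up to the scale, sign, and order ambiguities of ICA.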

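Illustration

Two ICs with the uniform distribution

$$p(s_i) = \begin{cases} \dfrac{1}{2\sqrt{3}} & \text{if } |s_i| \le \sqrt{3} \\ 0 & \text{otherwise} \end{cases}$$

which has zero mean and variance equal to 1. The mixing matrix $A$ is

$$A = \begin{pmatrix} 2 & 3 \\ 2 & 1 \end{pmatrix}$$

The joint density of $x_1$ and $x_2$ is then uniform on a parallelogram whose edges point in the directions of the columns of $A$. So if we can estimate the joint pdf of $x_1$ and $x_2$ and then locate its edges, we can estimate $A$.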
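The geometry can be checked directly: a minimal sketch, assuming the uniform ICs and the matrix $A$ above, that samples the sources, maps the corners of their square support through $A$, and confirms that the parallelogram edges are multiples of the columns of $A$.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000
r3 = np.sqrt(3.0)
s = rng.uniform(-r3, r3, size=(2, n))     # p(s_i) = 1/(2*sqrt(3)) on |s_i| <= sqrt(3)
A = np.array([[2.0, 3.0],
              [2.0, 1.0]])
x = A @ s

# Corners of the square support of s (counter-clockwise) map to the parallelogram corners.
corners_s = np.array([[-r3, -r3], [r3, -r3], [r3, r3], [-r3, r3]]).T
corners_x = A @ corners_s

# Consecutive corner differences: edge k = corner k+1 - corner k (wrapping around).
edges = np.diff(np.hstack([corners_x, corners_x[:, :1]]), axis=1)
# edges[:, 0] = 2*sqrt(3)*A[:, 0], edges[:, 1] = 2*sqrt(3)*A[:, 1]
```

Every sample of `x` lies inside this parallelogram, which is why locating its edges reveals the columns of $A$.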

Restrictions

The $s_i$ are statistically independent:

$$p(s_1, s_2) = p(s_1)\,p(s_2)$$

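Nongaussian distributions

The ICs must be nongaussian. If $s_1$ and $s_2$ are Gaussian with unit variance and $A$ is orthogonal, the mixtures are also Gaussian, uncorrelated, and of unit variance, with joint density

$$p(x_1, x_2) = \frac{1}{2\pi}\exp\left(-\frac{x_1^2 + x_2^2}{2}\right)$$

This density is rotationally symmetric, so it contains no information about the directions of the columns of the mixing matrix $A$, and $A$ cannot be estimated.

If at most one IC is Gaussian, estimation is still possible.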
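A quick numerical check of the symmetry argument: the sketch below mixes unit-variance Gaussian sources with two different rotation matrices (hypothetical choices of an orthogonal $A$). Both mixtures have identity covariance, and since a Gaussian is fully described by its mean and covariance, the data cannot tell the two mixing matrices apart.

```python
import numpy as np

def rotation(theta):
    """2-D rotation matrix, an example of an orthogonal mixing matrix."""
    c, sn = np.cos(theta), np.sin(theta)
    return np.array([[c, -sn], [sn, c]])

rng = np.random.default_rng(0)
s = rng.standard_normal((2, 200_000))     # unit-variance Gaussian sources

R_a, R_b = rotation(0.3), rotation(1.1)   # two different orthogonal mixing matrices
x_a = R_a @ s
x_b = R_b @ s

# Both mixtures have (approximately) identity covariance, so their Gaussian
# distributions coincide and nothing distinguishes R_a from R_b.
cov_a = np.cov(x_a)
cov_b = np.cov(x_b)
```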

Ambiguities

We cannot determine the variances (energies) of the ICs.

Because both $s$ and $A$ are unknown, any scalar multiplier on one of the sources can always be cancelled by dividing the corresponding column of $A$ by the same scalar.

We therefore fix the magnitudes of the ICs by assuming unit variance, $E\{s_i^2\} = 1$. Only the ambiguity of sign remains.

We cannot determine the order of the ICs.

The terms of the sum can be freely reordered, because both $s$ and $A$ are unknown, so any IC can be called the first one.
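Both ambiguities come from the identity $As = (AM^{-1})(Ms)$ for any invertible $M$ built from a permutation and a diagonal scaling. The sketch below uses a hypothetical $M$ that swaps the two ICs and scales one of them by $-2.5$ (sign flip included) and verifies that the observations are unchanged.

```python
import numpy as np

rng = np.random.default_rng(0)
s = rng.uniform(-np.sqrt(3), np.sqrt(3), size=(2, 1000))
A = np.array([[2.0, 3.0],
              [2.0, 1.0]])

# Hypothetical rescaling and reordering: swap the ICs, then scale the new first one by -2.5.
P = np.array([[0.0, 1.0],
              [1.0, 0.0]])
D = np.diag([-2.5, 1.0])
M = D @ P

s_alt = M @ s                      # reordered, rescaled sources
A_alt = A @ np.linalg.inv(M)       # columns of A permuted and divided by the scale factors
x = A @ s
x_alt = A_alt @ s_alt              # identical observations
```

Fixing $E\{s_i^2\} = 1$ forces the diagonal entries of $D$ to $\pm 1$, which is why only the sign and order remain undetermined.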

ICA Principle (Non-Gaussian is Independent)

The key to estimating $A$ is non-gaussianity.

By the central limit theorem (CLT), the distribution of a sum of independent random variables tends toward a Gaussian distribution.

[Figure: densities $f(s_1)$, $f(s_2)$ and $f(x_1) = f(s_1 + s_2)$.]

Consider

$$y = w^T x = w^T A s = z^T s, \qquad z = A^T w,$$

where $w^T$ is one of the rows of the matrix $W$. So $y$ is a linear combination of the $s_i$, with weights given by the $z_i$.

Since a sum of two independent random variables tends to be more Gaussian than either of them, $z^T s$ is more Gaussian than any single $s_i$, and it becomes least Gaussian when it equals one of the $s_i$.

So we could take $w$ as a vector that maximizes the non-gaussianity of $w^T x$. Such a $w$ corresponds to a $z$ with only one nonzero component, so $y = w^T x$ gives back one of the $s_i$.
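The argument can be tested numerically. The sketch below, assuming the uniform ICs and the matrix $A$ from the illustration, first shows that mixing shrinks $|\mathrm{kurt}|$ (about $-1.2$ for one uniform IC, about $-0.6$ for a normalized sum of two), then whitens the mixtures and does a brute-force search over unit vectors $w = (\cos t, \sin t)$ for the direction with the largest $|\mathrm{kurt}(w^T x)|$. The grid search is a stand-in chosen for clarity, not a practical ICA algorithm such as FastICA.

```python
import numpy as np

def kurt(y):
    """Excess kurtosis E{y^4} - 3 (E{y^2})^2 of a standardized sample."""
    y = (y - y.mean()) / y.std()
    return np.mean(y**4) - 3.0 * np.mean(y**2) ** 2

rng = np.random.default_rng(0)
n = 100_000
s = rng.uniform(-np.sqrt(3), np.sqrt(3), size=(2, n))   # unit-variance uniform ICs
A = np.array([[2.0, 3.0],
              [2.0, 1.0]])
x = A @ s

# 1) Mixing pushes the distribution toward Gaussian: |kurt| shrinks.
k_source = kurt(s[0])                         # about -1.2 for a uniform
k_mix = kurt((s[0] + s[1]) / np.sqrt(2))      # about -0.6 for the sum of two

# 2) Whiten x so that w can be restricted to unit vectors.
xc = x - x.mean(axis=1, keepdims=True)
d, E = np.linalg.eigh(np.cov(xc))
z = E @ np.diag(d ** -0.5) @ E.T @ xc

# 3) Brute-force search over angles for the most non-Gaussian projection w^T z.
angles = np.linspace(0.0, np.pi, 360, endpoint=False)
scores = [abs(kurt(np.cos(t) * z[0] + np.sin(t) * z[1])) for t in angles]
t_best = angles[int(np.argmax(scores))]
y = np.cos(t_best) * z[0] + np.sin(t_best) * z[1]

# y should match one source up to sign and scale: |corr| close to 1.
match = max(abs(np.corrcoef(y, s[i])[0, 1]) for i in range(2))
print(f"kurt(s1)={k_source:+.2f}  kurt(mix)={k_mix:+.2f}  best |corr|={match:.4f}")
```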

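Measures of Non-Gaussianity

We need a quantitative measure of non-gaussianity for ICA estimation.

Kurtosis: zero for a Gaussian (sensitive to outliers)

$$\mathrm{kurt}(y) = E\{y^4\} - 3\left(E\{y^2\}\right)^2$$

Entropy: largest for a Gaussian among all random variables of equal variance

$$H(y) = -\int f(y)\log f(y)\,dy$$

Negentropy: zero for a Gaussian (difficult to estimate)

$$J(y) = H(y_{\text{gauss}}) - H(y)$$

where $y_{\text{gauss}}$ is a Gaussian random variable with the same variance as $y$.

Approximations (for $y$ with zero mean and unit variance)

$$J(y) \approx \frac{1}{12}E\{y^3\}^2 + \frac{1}{48}\mathrm{kurt}(y)^2$$

$$J(y) \propto \left[E\{G(y)\} - E\{G(v)\}\right]^2$$

where $v$ is a standard Gaussian random variable and $G$ is a nonquadratic function such as

$$G_1(y) = \frac{1}{a}\log\cosh(a y), \qquad G_2(y) = -\exp\left(-\frac{a y^2}{2}\right)$$

with a constant $a$, typically $1 \le a \le 2$.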
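The measures above can be estimated from samples. This is a minimal sketch, assuming standardized data and using $G_1$ with $a = 1$; $E\{G(v)\}$ is estimated from a reference Gaussian sample (the helper names and sample sizes are hypothetical). It compares a Gaussian, a sub-Gaussian (uniform) and a super-Gaussian (Laplacian) sample.

```python
import numpy as np

def standardize(y):
    """Zero mean and unit variance, as the approximations assume."""
    y = np.asarray(y, dtype=float)
    return (y - y.mean()) / y.std()

def kurt(y):
    """kurt(y) = E{y^4} - 3 (E{y^2})^2 for a standardized sample."""
    y = standardize(y)
    return np.mean(y**4) - 3.0 * np.mean(y**2) ** 2

def negentropy_moments(y):
    """J(y) ~ (1/12) E{y^3}^2 + (1/48) kurt(y)^2."""
    y = standardize(y)
    return np.mean(y**3) ** 2 / 12.0 + kurt(y) ** 2 / 48.0

def log_cosh(u, a=1.0):
    """G_1(u) = (1/a) log cosh(a u), computed stably as logaddexp(au, -au) - log 2."""
    au = a * u
    return (np.logaddexp(au, -au) - np.log(2.0)) / a

def negentropy_logcosh(y, a=1.0, n_ref=1_000_000, seed=0):
    """(E{G(y)} - E{G(v)})^2 with G = G_1: the quantity J(y) is proportional to."""
    y = standardize(y)
    v = np.random.default_rng(seed).standard_normal(n_ref)   # reference Gaussian sample
    return (log_cosh(y, a).mean() - log_cosh(v, a).mean()) ** 2

rng = np.random.default_rng(1)
n = 200_000
samples = {
    "gaussian": rng.standard_normal(n),
    "uniform": rng.uniform(-np.sqrt(3), np.sqrt(3), n),   # sub-Gaussian, kurt = -1.2
    "laplace": rng.laplace(0.0, 1 / np.sqrt(2), n),       # super-Gaussian, kurt = 3
}
for name, y in samples.items():
    print(f"{name:9s} kurt={kurt(y):+.3f}  "
          f"J_moments={negentropy_moments(y):.4f}  J_G1={negentropy_logcosh(y):.5f}")
```

Both negentropy estimates are near zero for the Gaussian sample and clearly positive for the other two; the log-cosh version avoids the fourth powers that make kurtosis sensitive to outliers.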

Data Centering & Whitening

Centering

$$x \leftarrow x - E\{x\}$$

This does not mean that ICA cannot estimate the mean; centering just simplifies the algorithm.

The ICs are then also zero mean, because

$$E\{s\} = W E\{x\}.$$

After ICA, add $W E\{x\}$ back to the zero-mean ICs.
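A small sketch of the centering bookkeeping, assuming for illustration that the unmixing matrix $W$ is already known: sources with nonzero (hypothetical) means are mixed, the data are centered, unmixed, and the means are restored by adding $W E\{x\}$.

```python
import numpy as np

rng = np.random.default_rng(0)
A = np.array([[2.0, 3.0],
              [2.0, 1.0]])
W = np.linalg.inv(A)                      # assumed known, for illustration only

# Sources with hypothetical nonzero means 1.0 and -2.0.
s = rng.uniform(-np.sqrt(3), np.sqrt(3), (2, 1000)) + np.array([[1.0], [-2.0]])
x = A @ s

mean_x = x.mean(axis=1, keepdims=True)    # sample estimate of E{x}
x_c = x - mean_x                          # centering: x <- x - E{x}
s_c = W @ x_c                             # zero-mean ICs from the centered data
s_restored = s_c + W @ mean_x             # add W E{x} back after ICA
```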

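Whitening

We transform the observed $x$ linearly so that the new vector $\tilde{x}$ is white, i.e. its components are uncorrelated and have unit variance, $E\{\tilde{x}\tilde{x}^T\} = I$. This is done by an eigenvalue decomposition (EVD) of the covariance matrix:

$$\tilde{x} = \left(E D^{-1/2} E^T\right) x = E D^{-1/2} E^T A s = \tilde{A} s$$

where $E\{xx^T\} = E D E^T$, with $E$ the orthogonal matrix of eigenvectors and $D$ the diagonal matrix of eigenvalues.

For unit-variance ICs, $E\{\tilde{x}\tilde{x}^T\} = \tilde{A}E\{ss^T\}\tilde{A}^T = \tilde{A}\tilde{A}^T = I$, so we only have to estimate the orthogonal matrix $\tilde{A}$. An orthogonal $n \times n$ matrix has $n(n-1)/2$ degrees of freedom instead of the $n^2$ of a general matrix, so for large $n$ we have to estimate only about half as many parameters. This greatly simplifies ICA.

Reducing the dimension of the data (keeping only the dominant eigenvalues) while whitening also helps.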
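The EVD whitening step can be sketched in a few lines of NumPy, assuming centered data, the uniform ICs, and the matrix $A$ from the illustration (the helper `whiten` is a hypothetical name). The whitened data have identity covariance by construction, and the new mixing matrix $\tilde{A} = E D^{-1/2} E^T A$ comes out close to orthogonal.

```python
import numpy as np

def whiten(x):
    """Return (x_tilde, V) with V = E D^{-1/2} E^T from the EVD E{xx^T} = E D E^T.
    x has shape (m, T) and is assumed to be centered already."""
    C = x @ x.T / x.shape[1]              # sample estimate of E{xx^T}
    d, E = np.linalg.eigh(C)              # C = E diag(d) E^T
    V = E @ np.diag(d ** -0.5) @ E.T
    return V @ x, V

rng = np.random.default_rng(0)
s = rng.uniform(-np.sqrt(3), np.sqrt(3), (2, 100_000))
A = np.array([[2.0, 3.0],
              [2.0, 1.0]])
x = A @ s
x = x - x.mean(axis=1, keepdims=True)     # centering first

x_tilde, V = whiten(x)
A_tilde = V @ A                           # new mixing matrix, approximately orthogonal

n = A.shape[0]
dof_general = n * n                       # free parameters in a general n x n matrix
dof_orthogonal = n * (n - 1) // 2         # free parameters in an orthogonal one
```

For $n = 2$ the orthogonal $\tilde{A}$ is a rotation described by a single angle, which is exactly what a search over $w = (\cos t, \sin t)$ on whitened data exploits.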

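Noisy ICA Model

$$x = As + n$$

A ... $m \times n$ mixing matrix

s ... n-dimensional vector of ICs

n ... m-dimensional random noise vector

The same assumptions hold as for the noise-free model, provided we use measures of nongaussianity that are immune to Gaussian noise. Gaussian moments are therefore used as contrast functions, i.e.

$$J(y) \propto \left[E\{G(y)\} - E\{G(v)\}\right]^2, \qquad G(y) = \frac{1}{\sqrt{2\pi}\,c}\exp\left(-\frac{y^2}{2c^2}\right)$$

However, the effect of the noise must be taken into account in pre-whitening:

$$\tilde{x} = \left(E\{xx^T\} - \Sigma\right)^{-1/2} x$$

$$\tilde{x} = Bs + \tilde{n}$$

where $\Sigma = E\{nn^T\}$ is the noise covariance matrix (assumed known), $B$ is the new mixing matrix, and $\tilde{n}$ is the transformed noise.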
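Why the noise covariance must be subtracted can be seen at the population level. This sketch assumes unit-variance ICs, noise independent of the sources, and a hypothetical known $\Sigma$; it uses exact covariances $E\{xx^T\} = AA^T + \Sigma$ rather than samples. Ordinary whitening leaves $B$ non-orthogonal, while the noise-corrected version gives $BB^T = I$.

```python
import numpy as np

def inv_sqrtm_sym(M):
    """Inverse square root of a symmetric positive-definite matrix via EVD."""
    d, E = np.linalg.eigh(M)
    return E @ np.diag(d ** -0.5) @ E.T

A = np.array([[2.0, 3.0],
              [2.0, 1.0]])                # illustrative mixing matrix
Sigma = np.diag([0.5, 0.2])               # hypothetical noise covariance E{nn^T}, assumed known

# Population covariance of x = As + n for unit-variance ICs and independent noise.
C = A @ A.T + Sigma

V_naive = inv_sqrtm_sym(C)                # ordinary whitening ignores the noise
V_quasi = inv_sqrtm_sym(C - Sigma)        # (E{xx^T} - Sigma)^{-1/2}, as in the text

B_naive = V_naive @ A                     # not orthogonal: B B^T = I - C^{-1/2} Sigma C^{-1/2}
B_quasi = V_quasi @ A                     # orthogonal: B B^T = I
```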

Вам также может понравиться

- Independent Components AnalysisДокумент26 страницIndependent Components AnalysisPhuc HoangОценок пока нет

- Independent Component AnalysisДокумент16 страницIndependent Component Analysislehoangthanh100% (1)

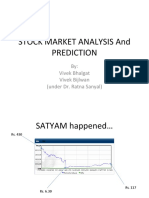

- Stock Market Analysis and PredictionДокумент26 страницStock Market Analysis and Predictionhitesh_qsОценок пока нет

- Independent Components AnalysisДокумент26 страницIndependent Components AnalysisadityavarshaОценок пока нет

- Computer Vision: Spring 2006 15-385,-685Документ58 страницComputer Vision: Spring 2006 15-385,-685minh_neuОценок пока нет

- PcaДокумент73 страницыPca1balamanianОценок пока нет

- Lecture 9 Unit2Документ168 страницLecture 9 Unit2Siddharth SidhuОценок пока нет

- Linear Algebra For Computer Vision - Part 2: CMSC 828 DДокумент23 страницыLinear Algebra For Computer Vision - Part 2: CMSC 828 DsatyabashaОценок пока нет

- Linear Algebra and Applications: Benjamin RechtДокумент42 страницыLinear Algebra and Applications: Benjamin RechtKarzan Kaka SwrОценок пока нет

- Chap 2Документ9 страницChap 2MingdreamerОценок пока нет

- SVD NoteДокумент2 страницыSVD NoteSourabh SinghОценок пока нет

- Clase08-Mlss05au Hyvarinen Ica 02Документ87 страницClase08-Mlss05au Hyvarinen Ica 02Juan AlvarezОценок пока нет

- Cos323 s06 Lecture09 SVDДокумент24 страницыCos323 s06 Lecture09 SVDRandhirKumarОценок пока нет

- Eeg SeminarДокумент23 страницыEeg SeminarsumanthОценок пока нет

- Numerical Methods Overview of The CourseДокумент93 страницыNumerical Methods Overview of The CoursechetanОценок пока нет

- Data Booklet Review-IntroДокумент20 страницData Booklet Review-IntroNorfu PIОценок пока нет

- Time, Frequency Analysis of SignalsДокумент78 страницTime, Frequency Analysis of SignalsstephanОценок пока нет

- ME401T CAD Ellipse Algorithm - 5Документ29 страницME401T CAD Ellipse Algorithm - 5AnuragShrivastavОценок пока нет

- Power Spectral DensityДокумент9 страницPower Spectral DensitySourav SenОценок пока нет

- Where To Draw A Line??Документ49 страницWhere To Draw A Line??RajaRaman.GОценок пока нет

- Singular Value Decomposition: Yan-Bin Jia Sep 6, 2012Документ9 страницSingular Value Decomposition: Yan-Bin Jia Sep 6, 2012abcdqwertyОценок пока нет

- Lucas-Kanade in A Nutshell: 1 MotivationДокумент5 страницLucas-Kanade in A Nutshell: 1 Motivationalessandro CostellaОценок пока нет

- Independent Component Analysis & Blind Source Separation: Ata Kaban The University of BirminghamДокумент23 страницыIndependent Component Analysis & Blind Source Separation: Ata Kaban The University of BirminghamMohammad Waqas Moin SheikhОценок пока нет

- Independent Component Analysis (ICA) : Adopted FromДокумент37 страницIndependent Component Analysis (ICA) : Adopted FromManavi VinodОценок пока нет

- SVD Note PDFДокумент2 страницыSVD Note PDFaliОценок пока нет

- Cs 224S / Linguist 281 Speech Recognition, Synthesis, and DialogueДокумент59 страницCs 224S / Linguist 281 Speech Recognition, Synthesis, and DialogueBurime GrajqevciОценок пока нет

- RichtungsstatistikДокумент31 страницаRichtungsstatistikBauschmannОценок пока нет

- Lecture 7: Unsupervised Learning: C19 Machine Learning Hilary 2013 A. ZissermanДокумент20 страницLecture 7: Unsupervised Learning: C19 Machine Learning Hilary 2013 A. ZissermansvijiОценок пока нет

- Solution20for20Midterm 201420fallДокумент5 страницSolution20for20Midterm 201420fall3.14159265Оценок пока нет

- Matrix-Decomposition-and-Its-application-Part IIДокумент55 страницMatrix-Decomposition-and-Its-application-Part IIปิยะ ธโรОценок пока нет

- PresentationДокумент31 страницаPresentationAileen AngОценок пока нет

- Intro SVDДокумент16 страницIntro SVDTri KasihnoОценок пока нет

- CS3220 Lecture Notes: Singular Value Decomposition and ApplicationsДокумент13 страницCS3220 Lecture Notes: Singular Value Decomposition and ApplicationsOnkar PanditОценок пока нет

- Slides Lecture7 ExtДокумент21 страницаSlides Lecture7 ExtMuhammad ZeeshanОценок пока нет

- Mathbootcamp Ampba Aug 2021Документ193 страницыMathbootcamp Ampba Aug 2021sourav abhishekОценок пока нет

- Topic 8-Mean Square Estimation-Wiener and Kalman FilteringДокумент73 страницыTopic 8-Mean Square Estimation-Wiener and Kalman FilteringHamza MahmoodОценок пока нет

- MCT Module 2Документ68 страницMCT Module 2Anish BennyОценок пока нет

- 1 - Vectors & Coordinate SystemsДокумент37 страниц1 - Vectors & Coordinate SystemsSreedevi MenonОценок пока нет

- Linear Algebra Cheat-Sheet: Laurent LessardДокумент13 страницLinear Algebra Cheat-Sheet: Laurent LessardAnantDashputeОценок пока нет

- Ss2 Post PracДокумент9 страницSs2 Post PracLenny NdlovuОценок пока нет

- Error Perfomance Analysis of Digital Modulation Based On The Union BoundДокумент10 страницError Perfomance Analysis of Digital Modulation Based On The Union BoundCanbruce LuwangОценок пока нет

- Anderson Distribution of The Correlation CoefficientДокумент14 страницAnderson Distribution of The Correlation Coefficientdauren_pcОценок пока нет

- ICA Dim RedДокумент39 страницICA Dim RedRohit SinghОценок пока нет

- Kriging: Reservoir Modeling With GSLIBДокумент16 страницKriging: Reservoir Modeling With GSLIBskywalk189Оценок пока нет

- From Signal To Vector ChannelДокумент10 страницFrom Signal To Vector ChannelpusculОценок пока нет

- Chapter 4Документ14 страницChapter 4Hamed NikbakhtОценок пока нет

- Statistics NotesДокумент15 страницStatistics NotesMarcus Pang Yi ShengОценок пока нет

- Digital Signal Processing Important Two Mark Questions With AnswersДокумент15 страницDigital Signal Processing Important Two Mark Questions With AnswerssaiОценок пока нет

- PCAДокумент45 страницPCAPritam BardhanОценок пока нет

- (El2004) W1Документ39 страниц(El2004) W1BaherFreedomОценок пока нет

- Advancedigital Communications: Instructor: Dr. M. Arif WahlaДокумент34 страницыAdvancedigital Communications: Instructor: Dr. M. Arif Wahlafahad_shamshadОценок пока нет

- Singular Value Decomposition (SVD) : - DefinitionДокумент5 страницSingular Value Decomposition (SVD) : - DefinitionMervin RodrigoОценок пока нет

- Module 2 - DS IДокумент94 страницыModule 2 - DS IRoudra ChakrabortyОценок пока нет

- Digital Signal Processing Important 2 Two Mark Question and Answer IT 1252Документ14 страницDigital Signal Processing Important 2 Two Mark Question and Answer IT 1252startedforfunОценок пока нет

- Elhabian ICP09Документ130 страницElhabian ICP09angeliusОценок пока нет

- Lecture 9Документ24 страницыLecture 9Adila AnbreenОценок пока нет

- Topic 4 - Sequences of Random VariablesДокумент32 страницыTopic 4 - Sequences of Random VariablesHamza MahmoodОценок пока нет

- Matrix Norm: A A I MJ NДокумент13 страницMatrix Norm: A A I MJ NAmelieОценок пока нет

- 03 Spring Final SolnДокумент3 страницы03 Spring Final SolnanthalyaОценок пока нет

- Procedure For Building PermissionДокумент5 страницProcedure For Building PermissionPravin BholeОценок пока нет

- Equ 1Документ2 страницыEqu 1Pravin BholeОценок пока нет

- Search Results: Searched For: in Document: Results: Document(s) With Instance(s) Saved OnДокумент4 страницыSearch Results: Searched For: in Document: Results: Document(s) With Instance(s) Saved OnPravin BholeОценок пока нет

- Wireless Soil Moisture Parameter MonitoringДокумент1 страницаWireless Soil Moisture Parameter MonitoringPravin BholeОценок пока нет

- PIC16F84 Based LC MeterДокумент3 страницыPIC16F84 Based LC MeterPravin Bhole100% (1)

- AT89C51 Microcontroller AT89C51 Microcontroller AT89C51 Microcontroller AT89C51 MicrocontrollerДокумент2 страницыAT89C51 Microcontroller AT89C51 Microcontroller AT89C51 Microcontroller AT89C51 MicrocontrollerPravin BholeОценок пока нет

- Citation in LaTeXДокумент3 страницыCitation in LaTeXPravin BholeОценок пока нет

- Optical Switching: Switch Fabrics, Techniques and ArchitecturesДокумент20 страницOptical Switching: Switch Fabrics, Techniques and ArchitecturesMona SinghОценок пока нет

- Ayurvedic Home Remedies For ParalysisДокумент2 страницыAyurvedic Home Remedies For ParalysisPravin BholeОценок пока нет

- GratingДокумент28 страницGratingPravin BholeОценок пока нет

- Remote-Controlled 6-Camera CCTV Switcher: The CircuitДокумент7 страницRemote-Controlled 6-Camera CCTV Switcher: The CircuitPravin BholeОценок пока нет

- Deep Dictionary Learning - 2016Документ9 страницDeep Dictionary Learning - 2016bamshadpotaОценок пока нет

- Synthetic Division: Example 1 - DivideДокумент4 страницыSynthetic Division: Example 1 - DivideReynand F. MaitemОценок пока нет

- Information Gain - Towards Data ScienceДокумент8 страницInformation Gain - Towards Data ScienceSIDDHARTHA SINHAОценок пока нет

- Thesis With Multiple Regression AnalysisДокумент6 страницThesis With Multiple Regression Analysiszehlobifg100% (2)

- Anshul Dyundi Machine Learning July 2022Документ46 страницAnshul Dyundi Machine Learning July 2022Anshul Dyundi0% (1)

- What Is A DAC?: - A Digital To Analog Converter (DAC) Converts A Digital Signal To An Analog Voltage or Current OutputДокумент18 страницWhat Is A DAC?: - A Digital To Analog Converter (DAC) Converts A Digital Signal To An Analog Voltage or Current OutputRojan PradhanОценок пока нет

- Turbo Codes For Multi-Hop Wireless Sensor Networks With Dandf MechanismДокумент30 страницTurbo Codes For Multi-Hop Wireless Sensor Networks With Dandf Mechanismjeya1990Оценок пока нет

- 설계기준 Korea Design Standard KDS 41 10 05: 2016 설계기준 Korean Design StandardДокумент24 страницы설계기준 Korea Design Standard KDS 41 10 05: 2016 설계기준 Korean Design Standard최광민Оценок пока нет

- BCT - Subjective QBДокумент2 страницыBCT - Subjective QBabhijit kateОценок пока нет

- SCE-Advanced PID Control S7-1500 (2016)Документ49 страницSCE-Advanced PID Control S7-1500 (2016)Jorge_Andril_5370100% (1)

- Solution Manual For Probability and Statistics With R For Engineers and Scientists 1st Edition Michael Akritas 0321852990 9780321852991Документ36 страницSolution Manual For Probability and Statistics With R For Engineers and Scientists 1st Edition Michael Akritas 0321852990 9780321852991john.rosenstock869100% (14)

- Module 4Документ64 страницыModule 4Jagadeswar Babu100% (1)

- Engineering Mathematics Ii Ras203Документ2 страницыEngineering Mathematics Ii Ras203Dhananjay ChaurasiaОценок пока нет

- Ex No 2 Implementation of Queue Using ArrayДокумент5 страницEx No 2 Implementation of Queue Using ArrayNIVAASHINI MATHAPPANОценок пока нет

- Discussion 4 CS771Документ25 страницDiscussion 4 CS771Jglewd 2641Оценок пока нет

- FEA-Academy Course On-Demand - Practical Basic FEAДокумент35 страницFEA-Academy Course On-Demand - Practical Basic FEAaxatpgmeОценок пока нет

- Clustering Approaches For Financial Data Analysis PDFДокумент7 страницClustering Approaches For Financial Data Analysis PDFNewton LinchenОценок пока нет

- Asymmetric-Key Cryptography: 19CSE311 Computer Security Jevitha KP Department of CSEДокумент32 страницыAsymmetric-Key Cryptography: 19CSE311 Computer Security Jevitha KP Department of CSEugiyuОценок пока нет

- KNN With MNIST Dataset - 2Документ2 страницыKNN With MNIST Dataset - 2Jaydev RavalОценок пока нет

- Face Recognition Based Attendance System Using OpencvДокумент64 страницыFace Recognition Based Attendance System Using OpencvsumathiОценок пока нет

- Entscheidungsproblem - WikipediaДокумент25 страницEntscheidungsproblem - WikipediablitbiteОценок пока нет

- Quiz 1: and Analysis of AlgorithmsДокумент13 страницQuiz 1: and Analysis of AlgorithmsajgeminiОценок пока нет

- Etl Testing Training in HyderabadДокумент13 страницEtl Testing Training in HyderabadsowmyavibhinОценок пока нет

- CS224n: Natural Language Processing With Deep LearningДокумент18 страницCS224n: Natural Language Processing With Deep LearningJpОценок пока нет

- SDH NotesДокумент8 страницSDH NotesGokulОценок пока нет

- Questions and Answers On Regression Models With Lagged Dependent Variables and ARMA ModelsДокумент8 страницQuestions and Answers On Regression Models With Lagged Dependent Variables and ARMA ModelsCarmen OrazzoОценок пока нет

- Cryptography: Principles of Information Security, 2nd Edition 1Документ20 страницCryptography: Principles of Information Security, 2nd Edition 1Nishanth KrishnamurthyОценок пока нет

- Skin Lesion Classification Using Deep Convolutional Neural NetworkДокумент4 страницыSkin Lesion Classification Using Deep Convolutional Neural NetworkMehera Binte MizanОценок пока нет

- Modelling For Cruise Two-Dimensional Online Revenue Management SystemДокумент7 страницModelling For Cruise Two-Dimensional Online Revenue Management SystemBysani Vinod KumarОценок пока нет

- Quiz 2.doc ReadyДокумент3 страницыQuiz 2.doc ReadyKuma AguirreОценок пока нет