Академический Документы

Профессиональный Документы

Культура Документы

Data Mining Slides Edited

Загружено:

solo_gauravАвторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Data Mining Slides Edited

Загружено:

solo_gauravАвторское право:

Доступные форматы

Data Mining

Outline

Data Mining

Data Warehousing

Knowledge Discovery in Databases (KDD)

Goals of Data Mining and Knowledge Discovery

Association Rules

Additional Data Mining Algorithms

Sequential pattern analysis

Time Series Analysis

Regression

Neural Networks

Genetic Algorithms

Definitions of Data Mining

The discovery of new information in

terms of patterns or rules from vast

amounts of data.

The process of finding interesting

structure in data.

The process of employing one or more

computer learning techniques to

automatically analyze and extract

knowledge from data.

Data Warehousing

The data warehouse is a historical

database designed for decision

support.

Data mining can be applied to the data

in a warehouse to help with certain

types of decisions.

Proper construction of a data

warehouse is fundamental to the

successful use of data mining.

Knowledge Discovery in

Databases (KDD)

Data mining is actually one step of a

larger process known as knowledge

discovery in databases (KDD).

The KDD process model comprises

six phases

Data selection

Data cleansing

Enrichment

Data transformation or encoding

Data mining

Reporting and displaying discovered

knowledge

Goals of Data Mining and

Knowledge Discovery (PICO)

Prediction:

Determine how certain attributes will

behave in the future.

Identification:

Identify the existence of an item, event, or

activity.

Classification:

Partition data into classes or categories.

Optimization:

Optimize the use of limited resources.

Types of Discovered

Knowledge

Association Rules

Classification Hierarchies

Sequential Patterns

Patterns Within Time Series

Clustering

Association Rules

Association rules are frequently used to generate

rules from market-basket data.

A market basket corresponds to the sets of items

a consumer purchases during one visit to a

supermarket.

The set of items purchased by customers is known

as an itemset.

An association rule is of the form X=>Y, where X

={x

1

, x

2

, ., x

n

}, and Y = {y

1

,y

2

, ., y

n

} are sets of

items, with x

i

and y

i

being distinct items for all i and

all j.

For an association rule to be of interest, it must

satisfy a minimum support and confidence.

Association Rules

Confidence and Support

Support:

The minimum percentage of instances in the database

that contain all items listed in a given association rule.

Support is the percentage of transactions that contain all

of the items in the itemset, LHS U RHS.

Confidence:

Given a rule of the form A=>B, rule confidence is the

conditional probability that B is true when A is known to

be true.

Confidence can be computed as

support(LHS U RHS) / support(LHS)

Generating Association Rules

The general algorithm for generating

association rules is a two-step

process.

Generate all itemsets that have a support

exceeding the given threshold. Itemsets

with this property are called large or

frequent itemsets.

Generate rules for each itemset as

follows:

For itemset X and Y a subset of X, let Z = X

Y;

If support(X)/Support(Z) > minimum

confidence, the rule Z=>Y is a valid rule.

Reducing Association Rule

Complexity

Two properties are used to reduce the

search space for association rule

generation.

Downward Closure

A subset of a large itemset must also be large

Anti-monotonicity

A superset of a small itemset is also small. This

implies that the itemset does not have sufficient

support to be considered for rule generation.

Generating Association Rules:

The Apriori Algorithm

The Apriori algorithm was the first

algorithm used to generate

association rules.

The Apriori algorithm uses the general

algorithm for creating association rules

together with downward closure and anti-

monotonicity.

Generating Association Rules:

The Sampling Algorithm

The sampling algorithm selects

samples from the database of

transactions that individually fit into

memory. Frequent itemsets are then

formed for each sample.

If the frequent itemsets form a superset of

the frequent itemsets for the entire database,

then the real frequent itemsets can be

obtained by scanning the remainder of the

database.

In some rare cases, a second scan of the

database is required to find all frequent

itemsets.

Generating Association Rules:

Frequent-Pattern Tree Algorithm

The Frequent-Pattern Tree Algorithm

reduces the total number of candidate

itemsets by producing a compressed

version of the database in terms of an

FP-tree.

The FP-tree stores relevant

information and allows for the efficient

discovery of frequent itemsets.

The algorithm consists of two steps:

Step 1 builds the FP-tree.

Step 2 uses the tree to find frequent

itemsets.

Step 1: Building the FP-Tree

First, frequent 1-itemsets along with the count of transactions

containing each item are computed.

The 1-itemsets are sorted in non-increasing order.

The root of the FP-tree is created with a null label.

For each transaction T in the database, place the frequent 1-

itemsets in T in sorted order. Designate T as consisting of a

head and the remaining items, the tail.

Insert itemset information recursively into the FP-tree as

follows:

if the current node, N, of the FP-tree has a child with an

item name = head, increment the count associated with N

by 1 else create a new node, N, with a count of 1, link N to

its parent and link N with the item header table.

if tail is nonempty, repeat the above step using only the tail,

i.e., the old head is removed and the new head is the first

item from the tail and the remaining items become the new

tail.

Step 2: The FP-growth Algorithm

For Finding Frequent Itemsets

Input: Fp-tree and minimum support, mins

Output: frequent patterns (itemsets)

procedure FP-growth (tree, alpha);

Begin

if tree contains a single path P then

for each combination, beta of the nodes in the path

generate pattern (beta U alpha)

with support = minimum support of nodes in beta

else

for each item, i, in the header of the tree do

begin

generate pattern beta = (i U alpha) with support = i.support;

construct betas conditional pattern base;

construct betas conditional FP-tree, beta_tree;

if beta_tree is not empty then

FP-growth(beta_tree, beta);

end;

End;

Generating Association Rules:

The Partition Algorithm

Divide the database into non-overlapping

subsets.

Treat each subset as a separate database

where each subset fits entirely into main

memory.

Apply the Apriori algorithm to each partition.

Take the union of all frequent itemsets from

each partition.

These itemsets form the global candidate

frequent itemsets for the entire database.

Verify the global set of itemsets by having

their actual support measured for the entire

database.

Complications seen with

Association Rules

The cardinality of itemsets in most

situations is extremely large.

Association rule mining is more difficult

when transactions show variability in

factors such as geographic location and

seasons.

Item classifications exist along multiple

dimensions.

Data quality is variable; data may be

missing, erroneous, conflicting, as well

as redundant.

Classification

Classification is the process of

learning a model that is able to

describe different classes of data.

Learning is supervised as the classes

to be learned are predetermined.

Learning is accomplished by using a

training set of pre-classified data.

The model produced is usually in the

form of a decision tree or a set of

rules.

An Example Rule

Here is one of the rules extracted from

the decision tree of Figure 28.7.

IF 50K > salary >= 20K

AND age >=25

THEN class is yes

Clustering

Unsupervised learning or clustering

builds models from data without

predefined classes.

The goal is to place records into

groups where the records in a group

are highly similar to each other and

dissimilar to records in other groups.

The k-Means algorithm is a simple yet

effective clustering technique.

Additional Data Mining

Methods

Sequential pattern analysis

Time Series Analysis

Regression

Neural Networks

Genetic Algorithms

Sequential Pattern Analysis

Transactions ordered by time of

purchase form a sequence of

itemsets.

The problem is to find all

subsequences from a given set of

sequences that have a minimum

support.

The sequence S

1

, S

2

, S

3

, .. is a

predictor of the fact that a customer

purchasing itemset S

1

is likely to buy

S

2

, and then S

3

, and so on.

Time Series Analysis

Time series are sequences of events.

For example, the closing price of a

stock is an event that occurs each day

of the week.

Time series analysis can be used to

identify the price trends of a stock or

mutual fund.

Time series analysis is an extended

functionality of temporal data

management.

Regression Analysis

A regression equation estimates a

dependent variable using a set of

independent variables and a set of

constants.

The independent variables as well as the

dependent variable are numeric.

A regression equation can be written in

the form Y=f(x

1

,x

2

,,x

n

) where Y is the

dependent variable.

If f is linear in the domain variables x

i

,

the equation is call a linear regression

equation.

Neural Networks

A neural network is a set of

interconnected nodes designed to

imitate the functioning of the brain.

Node connections have weights

which are modified during the learning

process.

Neural networks can be used for

supervised learning and unsupervised

clustering.

The output of a neural network is

quantitative and not easily

understood.

Genetic Learning

Genetic learning is based on the

theory of evolution.

An initial population of several

candidate solutions is provided to the

learning model.

A fitness function defines which

solutions survive from one generation

to the next.

Crossover, mutation and selection

are used to create new population

elements.

Data Mining Applications

Marketing

Marketing strategies and consumer

behavior

Finance

Fraud detection, creditworthiness and

investment analysis

Manufacturing

Resource optimization

Health

Image analysis, side effects of drug, and

treatment effectiveness

Recap

Data Mining

Data Warehousing

Knowledge Discovery in Databases (KDD)

Goals of Data Mining and Knowledge Discovery

Association Rules

Additional Data Mining Algorithms

Sequential pattern analysis

Time Series Analysis

Regression

Neural Networks

Genetic Algorithms

Вам также может понравиться

- Read MeДокумент3 страницыRead Mesolo_gauravОценок пока нет

- RporateДокумент7 страницRporatesolo_gauravОценок пока нет

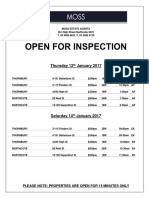

- Thursday 12 January 2017: Please Note: Properties Are Open For 15 Minutes OnlyДокумент1 страницаThursday 12 January 2017: Please Note: Properties Are Open For 15 Minutes Onlysolo_gauravОценок пока нет

- Example of Reflective Diary WritingДокумент3 страницыExample of Reflective Diary Writingsolo_gauravОценок пока нет

- Debug RFC AbapДокумент1 страницаDebug RFC Abapsolo_gauravОценок пока нет

- Debug RFC AbapДокумент1 страницаDebug RFC Abapsolo_gauravОценок пока нет

- Had OopsДокумент3 страницыHad Oopssolo_gauravОценок пока нет

- White Paper Application Performance Management (APM)Документ26 страницWhite Paper Application Performance Management (APM)solo_gauravОценок пока нет

- Comprehensive Analysis On The Indian Renewable Energy Sector."feb 3,2012)Документ3 страницыComprehensive Analysis On The Indian Renewable Energy Sector."feb 3,2012)solo_gauravОценок пока нет

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (895)

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (400)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2259)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (266)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (345)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (121)

- Catálogo Safety (Junho 2010)Документ852 страницыCatálogo Safety (Junho 2010)Robison Raniere MartinsОценок пока нет

- Belts Failure Book Final CE8149Документ16 страницBelts Failure Book Final CE8149vulpinorОценок пока нет

- GP 9, Nissan CaseДокумент17 страницGP 9, Nissan CaseHumna Amna67% (3)

- QuestionnaireДокумент4 страницыQuestionnaireShanchez JoseОценок пока нет

- Data ProcessingДокумент9 страницData ProcessingRahul NigamОценок пока нет

- Khatua Committee Report Part 1 PDFДокумент80 страницKhatua Committee Report Part 1 PDFMihir PatilОценок пока нет

- Opm IV ModuleДокумент18 страницOpm IV ModuleSharma VishnuОценок пока нет

- Figure 1: Logo of The CompanyДокумент10 страницFigure 1: Logo of The CompanyKhairil MuhamadОценок пока нет

- "Agricultural Equipment Repair & Motor Repair'': A Powerpoint Presentation OnДокумент17 страниц"Agricultural Equipment Repair & Motor Repair'': A Powerpoint Presentation OnAniruddha MОценок пока нет

- SAP MM Quick Guide PDFДокумент222 страницыSAP MM Quick Guide PDFKhadeer AhamedОценок пока нет

- Bank Statement PDFДокумент188 страницBank Statement PDFragaveyndhar maniОценок пока нет

- Univar Chemcare SolutionsДокумент4 страницыUnivar Chemcare SolutionselirancoolОценок пока нет

- Techniques of Quality Improvement 09092010Документ40 страницTechniques of Quality Improvement 09092010International-Society-for-Six-Sigma-CertificationsОценок пока нет

- Oracle Data Integrator (ODI) Online Training and SupportДокумент9 страницOracle Data Integrator (ODI) Online Training and SupportGloryittechnologiesОценок пока нет

- How To Use VLOOKUP in ExcelДокумент7 страницHow To Use VLOOKUP in Excelmbkanchan1Оценок пока нет

- Product Manual 02879 (Revision B) : 723PLUS Digital Speed Control For Reciprocating Engines - Analog Load SharingДокумент176 страницProduct Manual 02879 (Revision B) : 723PLUS Digital Speed Control For Reciprocating Engines - Analog Load SharingmasudalamОценок пока нет

- Hiab Xs322 CWДокумент12 страницHiab Xs322 CWrolo_consultas2956Оценок пока нет

- As 1121.1-2007 Agricultural Tractor Power Take-Offs Rear-Mounted Power Take-Off Types 1 2 and 3 - General SpeДокумент6 страницAs 1121.1-2007 Agricultural Tractor Power Take-Offs Rear-Mounted Power Take-Off Types 1 2 and 3 - General SpeSAI Global - APACОценок пока нет

- 2011 Fiat SpA Sustainability ReportДокумент268 страниц2011 Fiat SpA Sustainability ReportAbarth USAОценок пока нет

- Turbocharger CatalogДокумент16 страницTurbocharger CatalogAdi PoianaОценок пока нет

- Oracle UnifierДокумент16 страницOracle UnifierahhanyОценок пока нет

- Tebo ICTviewДокумент17 страницTebo ICTviewNgọc Thạnh Nguyễn0% (1)

- COREWIND D4.1 Identification of Floating Wind Specific O&M Requirements and Monitoring TechnologiesДокумент98 страницCOREWIND D4.1 Identification of Floating Wind Specific O&M Requirements and Monitoring TechnologiesadeshinexxОценок пока нет

- KPI's - CUTE Handbook PDFДокумент19 страницKPI's - CUTE Handbook PDFpatriciolaria100% (1)

- Mumbai Port Trust: Civil Engineering DepartmentДокумент96 страницMumbai Port Trust: Civil Engineering Departmentsuji91Оценок пока нет

- Experiment About CNC MachineДокумент4 страницыExperiment About CNC MachineMohammed Al-saa'baryОценок пока нет

- Will Agile Development Change The Way We ManageДокумент7 страницWill Agile Development Change The Way We ManageKovags DiegoОценок пока нет

- Development of Oil-Pump For Lubrication of Turbocharger in Wankel Rotary EngineДокумент3 страницыDevelopment of Oil-Pump For Lubrication of Turbocharger in Wankel Rotary EnginefullkuleОценок пока нет

- Radio Navigation QBДокумент64 страницыRadio Navigation QBVijay Samuel J100% (3)

- WEF Technology and Innovation The Next Economic Growth Engine PDFДокумент33 страницыWEF Technology and Innovation The Next Economic Growth Engine PDFPiroch RudolfОценок пока нет