Академический Документы

Профессиональный Документы

Культура Документы

2.review Techniques

Загружено:

arunlalds0 оценок0% нашли этот документ полезным (0 голосов)

187 просмотров21 страницаReview Techniques

Оригинальное название

2.Review Techniques

Авторское право

© © All Rights Reserved

Доступные форматы

PPT, PDF, TXT или читайте онлайн в Scribd

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документReview Techniques

Авторское право:

© All Rights Reserved

Доступные форматы

Скачайте в формате PPT, PDF, TXT или читайте онлайн в Scribd

0 оценок0% нашли этот документ полезным (0 голосов)

187 просмотров21 страница2.review Techniques

Загружено:

arunlaldsReview Techniques

Авторское право:

© All Rights Reserved

Доступные форматы

Скачайте в формате PPT, PDF, TXT или читайте онлайн в Scribd

Вы находитесь на странице: 1из 21

Review Techniques

Primary objective of formal technical reviews

Find errors during the process so that they do

not become defects after release of the

software.

Benefit - the early discovery of errors so they

do not propagate to the next step in the

software process.

What Are Reviews?

a meeting conducted by technical people for

technical people

a technical assessment of a work product

created during the software engineering

process

a software quality assurance mechanism

a training ground

What Do We Look For?

• Errors and defects

• Error—a quality problem found before the

software is released to end users

• Defect—a quality problem found only after

the software has been released to end-users

Defect Amplification

• Defect Amplification

• A defect amplification model [IBM81] can be

used to illustrate the generation and detection

of errors during the design and code generation

actions of a software process.

Defect Amplification Model

• A box represents a software development step. During the

step, errors may be inadvertently generated.

• Review may fail to uncover newly generated errors and errors

from previous steps, resulting in some number of errors that

are passed through.

• In some cases, errors passed through from previous steps are

amplified (amplification factor, x) by current work.

• box subdivisions represent each of these characteristics and the

percent of efficiency for detecting errors, a function of the

thoroughness of the review.

Defect amplification –no reviews

• Hypothetical example of defect amplification

for a software development process in which

no reviews are conducted.

• Each test step uncovers and corrects 50

percent of all incoming errors without

introducing any new errors.

• Ten preliminary design defects are amplified

to 94 errors before testing commences.

Twelve latent errors are released to the field.

Defect amplification –Reviews Conducted

• Same conditions except that design and code

reviews are conducted as part of each

development step.

• Ten initial preliminary design errors are

amplified to 24 errors before testing

commences.

• Only three latent errors exist.

Review metrics and their use

• Preparation effort Ep- the effort in person

hours required to review a work product prior

to actual review meeting

• Assessment effort Ea- the effort in person –

hours that is expended during actual review

• Rework effort Er the effort that is dedicated to

the correction of those errors uncovered

during the review

• Work product size WPS a measure of the size

of work product that has been reviewed

• Minor errors found Errminor –the number of

errors found that can be categorized as minor

• Major errors found Errmajor the number of

errors found that can be categorized as major

Analyzing Metrics

• The total review effort and the total number

of errors discovered are defined as

• Ereview =Ep + Ea + Er

• Errtot =errminor+errmajor

• Error density = err tot/WPS

• Error density represents the errors found per

unit of work product.

Reference Model for technical reviews

Informal Reviews

• Informal reviews include:

a simple desk check of a software engineering work product

with a colleague

a casual meeting (involving more than 2 people) for the

purpose of reviewing a work product, or the review-oriented

aspects of pair programming

pair programming encourages continuous review as a work

product (design or code) is created.

• The benefit is immediate discovery of errors and better work

product quality as a consequence.

Formal Technical Reviews

• The objectives of an FTR are:

• to uncover errors in function, logic, or implementation for

• any representation of the software

• to verify that the software under review meets its

• requirements

• to ensure that the software has been represented according

• to predefined standards

• to achieve software that is developed in a uniform manner

• to make projects more manageable

• The FTR is actually a class of reviews that includes

• walkthroughs and inspections

The review meeting

• Regardless of the FTR format that is chosen every review

meeting should abide by the following constraints

• Between three and five people should be involved in the

review

• Advance preparation should occur but should require no

more than two hours of work for each person

• The duration of the review meeting should be less than two

hours

• Focus is on a work product (e.g., a portion of a

• requirements model, a detailed component design,

• source code for a component

The Players

• Producer—the individual who has developed the work

• Product informs the project leader that the work product is

complete and that a review is required

• Review leader—evaluates the product for readiness,

• generates copies of product materials, and distributes

• them to two or three reviewers for advance preparation.

• Reviewer(s)—expected to spend between one and two

• hours reviewing the product, making notes, and

• otherwise becoming familiar with the work.

• Recorder—reviewer who records (in writing) all important

• issues raised during the review.

Review guidelines

• Review the product, not the producer.

• Set an agenda and maintain it.

• Limit debate and rebuttal.

• Enunciate problem areas, but don't attempt to solve

every problem noted.

• Take written notes.

• Limit the number of participants and insist upon

advance Preparation.

• Develop a checklist for each product that is

likely to be reviewed.

• Allocate resources and schedule time for FTRs.

• Conduct meaningful training for all reviewers.

• Review your early reviews

Вам также может понравиться

- Icmp Error ReportingДокумент58 страницIcmp Error ReportingSanjeev SubediОценок пока нет

- BayesianNetworks ReducedДокумент14 страницBayesianNetworks Reducedastir1234Оценок пока нет

- Face Recognition Using Neural NetworkДокумент31 страницаFace Recognition Using Neural Networkvishnu vОценок пока нет

- Sample Introduction and ObjectivesДокумент9 страницSample Introduction and Objectivesyumira khateОценок пока нет

- The Embedded Design Life Cycle: - Product Specification - Hardware/software Partitioning - Hardware/software IntegrationДокумент18 страницThe Embedded Design Life Cycle: - Product Specification - Hardware/software Partitioning - Hardware/software IntegrationTejinder Singh SagarОценок пока нет

- Metrics For Process and Projects Chapter 15 & 22: Roger S. 6 Edition)Документ25 страницMetrics For Process and Projects Chapter 15 & 22: Roger S. 6 Edition)farhan sdfОценок пока нет

- Introduction To CMOS VLSI Design (E158) Final ProjectДокумент4 страницыIntroduction To CMOS VLSI Design (E158) Final ProjectbashasvuceОценок пока нет

- Software Engineering - Functional Point (FP) Analysis - GeeksforGeeksДокумент12 страницSoftware Engineering - Functional Point (FP) Analysis - GeeksforGeeksGanesh PanigrahiОценок пока нет

- Capstone Project ReportДокумент45 страницCapstone Project ReportPrabh JeetОценок пока нет

- Chapter 22 - Software Configuration ManagementДокумент15 страницChapter 22 - Software Configuration Managementmalik assadОценок пока нет

- Chap 6 - Software ReuseДокумент51 страницаChap 6 - Software ReuseMohamed MedОценок пока нет

- SE - Unit 1 - The Process and The Product - PPTДокумент15 страницSE - Unit 1 - The Process and The Product - PPTZameer Ahmed SaitОценок пока нет

- Realtime Operating SystemsДокумент1 страницаRealtime Operating Systemssarala20021990100% (1)

- PDC 1 - PD ComputingДокумент12 страницPDC 1 - PD ComputingmishaОценок пока нет

- The Systems Development EnvironmentДокумент6 страницThe Systems Development EnvironmentAhimbisibwe BakerОценок пока нет

- IT2403 Systems Analysis and Design: (Compulsory)Документ6 страницIT2403 Systems Analysis and Design: (Compulsory)Akber HassanОценок пока нет

- CW COMP1631 59 Ver1 1617Документ10 страницCW COMP1631 59 Ver1 1617dharshinishuryaОценок пока нет

- Cse345p Bi LabДокумент30 страницCse345p Bi LabkamalОценок пока нет

- CS2301-Software Engineering 2 MarksДокумент17 страницCS2301-Software Engineering 2 MarksDhanusha Chandrasegar SabarinathОценок пока нет

- 2.1 Basic Design Concepts in TQMДокумент6 страниц2.1 Basic Design Concepts in TQMDr.T. Pridhar ThiagarajanОценок пока нет

- Ee382M - Vlsi I: Spring 2009 (Prof. David Pan) Final ProjectДокумент13 страницEe382M - Vlsi I: Spring 2009 (Prof. David Pan) Final ProjectsepritaharaОценок пока нет

- Android Malware Detection Using Machine LearningДокумент31 страницаAndroid Malware Detection Using Machine LearningNALLURI PREETHIОценок пока нет

- Unit 7Документ56 страницUnit 7Abhinav Abz100% (1)

- Lesson Plan ESДокумент5 страницLesson Plan ESsureshvkumarОценок пока нет

- Seham Ali 2021: Chapter-1Документ33 страницыSeham Ali 2021: Chapter-1سميره شنيمرОценок пока нет

- KPIT-A Development Tools PartnerДокумент23 страницыKPIT-A Development Tools PartnerNaeem PatelОценок пока нет

- Cs8494 Software Engineering: Course Instructor Mrs.A.Tamizharasi, AP/CSE, RMDEC Mrs. Remya Rose S, AP/CSE, RMDECДокумент23 страницыCs8494 Software Engineering: Course Instructor Mrs.A.Tamizharasi, AP/CSE, RMDEC Mrs. Remya Rose S, AP/CSE, RMDECTamizharasi AОценок пока нет

- Lisa - Add New Front: Process Matching/Installation and Qualification (IQ)Документ62 страницыLisa - Add New Front: Process Matching/Installation and Qualification (IQ)Thanh Vũ NguyễnОценок пока нет

- Software Engineering and Project ManagementДокумент23 страницыSoftware Engineering and Project ManagementUmair Aziz KhatibОценок пока нет

- Embedded System (9168)Документ5 страницEmbedded System (9168)Victoria FrancoОценок пока нет

- Digital Camera CasestudyДокумент57 страницDigital Camera CasestudyAnonymous aLRiBR100% (1)

- Reduced Instruction Set Computer 1 PDFДокумент6 страницReduced Instruction Set Computer 1 PDFKunal PawarОценок пока нет

- 17CS62 CGVДокумент297 страниц17CS62 CGVCHETHAN KUMAR SОценок пока нет

- Microprocessors Lab Manual 408331: Hashemite UniversityДокумент63 страницыMicroprocessors Lab Manual 408331: Hashemite UniversitytargetОценок пока нет

- 156 - Engineering - Drawing - EE-155 - Lab - Manual Final-2Документ24 страницы156 - Engineering - Drawing - EE-155 - Lab - Manual Final-2Hamza AkhtarОценок пока нет

- Speech Recognition Calculator: ECE 4007 Section L02 - Group 08Документ23 страницыSpeech Recognition Calculator: ECE 4007 Section L02 - Group 08GJ CarsonОценок пока нет

- Data Scientist Test Task V2Документ1 страницаData Scientist Test Task V2suryanshОценок пока нет

- Pressman CH 23 Estimation For Software Projects 2Документ37 страницPressman CH 23 Estimation For Software Projects 2Suman MadanОценок пока нет

- Prototyping: Prototyping: Prototypes and Production - Open Source Versus Closed SourceДокумент14 страницPrototyping: Prototyping: Prototypes and Production - Open Source Versus Closed Sourcerajeswarikannan0% (1)

- Pressman CH 25 Risk ManagementДокумент35 страницPressman CH 25 Risk ManagementSuma H R hr100% (1)

- 04 - 05-AI-Knowledge and ReasoningДокумент61 страница04 - 05-AI-Knowledge and ReasoningderbewОценок пока нет

- MaualTesting QuestionsДокумент23 страницыMaualTesting QuestionsRohit KhuranaОценок пока нет

- Computer Science Capstone Project: Course DescriptionДокумент7 страницComputer Science Capstone Project: Course DescriptionGrantham UniversityОценок пока нет

- 5 - Field Technician Networking and StorageДокумент12 страниц5 - Field Technician Networking and StoragenaveenОценок пока нет

- Power PC vs. MIPS: by Saumil Shah and Joel MartinДокумент31 страницаPower PC vs. MIPS: by Saumil Shah and Joel MartinbaptbaptОценок пока нет

- Digital Signal Processors and Architectures (DSPA) Unit-2Документ92 страницыDigital Signal Processors and Architectures (DSPA) Unit-2Chaitanya DuggineniОценок пока нет

- The Object-Oriented Approach To Design: Use Case RealizationДокумент59 страницThe Object-Oriented Approach To Design: Use Case Realizationmohamed187Оценок пока нет

- INF305 Notes (Pressman)Документ57 страницINF305 Notes (Pressman)dinesh_panchariaОценок пока нет

- SQA MidTermExam Online Paper 03122020 105129pmДокумент2 страницыSQA MidTermExam Online Paper 03122020 105129pmAisha ArainОценок пока нет

- ISR Case StudyДокумент48 страницISR Case StudysnehalОценок пока нет

- Final Project Synopsis "Age and Gender Detection"Документ17 страницFinal Project Synopsis "Age and Gender Detection"Ankit KumarОценок пока нет

- SPM PPT U1Документ29 страницSPM PPT U1Venkata Nikhil Chakravarthy KorrapatiОценок пока нет

- SWT3 TimДокумент75 страницSWT3 TimNguyen Minh Hieu (K17 HL)100% (1)

- Compiler Construction: Chapter 1: Introduction To CompilationДокумент65 страницCompiler Construction: Chapter 1: Introduction To CompilationazimkhanОценок пока нет

- Ad SW Final Revision Essay QuestionДокумент4 страницыAd SW Final Revision Essay QuestionKAREEM Abo ELsouDОценок пока нет

- Advanced JAVA ProgrammingДокумент7 страницAdvanced JAVA ProgrammingSaurabh TargeОценок пока нет

- Speech Emotion Detection (CNN Algorithm)Документ29 страницSpeech Emotion Detection (CNN Algorithm)narendra rahmanОценок пока нет

- AnswerДокумент4 страницыAnswerFernando SalasОценок пока нет

- Module 4Документ31 страницаModule 4arunlaldsОценок пока нет

- System Software Module 1Документ67 страницSystem Software Module 1arunlalds0% (1)

- System Software Module 3Документ109 страницSystem Software Module 3arunlaldsОценок пока нет

- Module 3Документ23 страницыModule 3arunlaldsОценок пока нет

- Module 2Документ35 страницModule 2arunlaldsОценок пока нет

- System Software Module 2Документ156 страницSystem Software Module 2arunlalds0% (1)

- Module 3Документ23 страницыModule 3arunlaldsОценок пока нет

- Ss Module 1Документ24 страницыSs Module 1noblesivankuttyОценок пока нет

- Assembler Module 1-1Документ23 страницыAssembler Module 1-1arunlaldsОценок пока нет

- AssemblerДокумент23 страницыAssemblerarunlaldsОценок пока нет

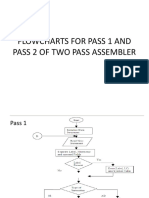

- Flowcharts For Pass 1 and Pass 2 ofДокумент4 страницыFlowcharts For Pass 1 and Pass 2 ofarunlaldsОценок пока нет

- Ss Module 1Документ24 страницыSs Module 1noblesivankuttyОценок пока нет

- 4 ProjectschedulingДокумент21 страница4 ProjectschedulingarunlaldsОценок пока нет

- 5 RiskmanagementДокумент40 страниц5 RiskmanagementarunlaldsОценок пока нет

- 4 TestingwebapplicationsДокумент34 страницы4 TestingwebapplicationsarunlaldsОценок пока нет

- 2.testing Conventional Applications1Документ56 страниц2.testing Conventional Applications1arunlaldsОценок пока нет

- Project Management ConceptsДокумент19 страницProject Management ConceptsarunlaldsОценок пока нет

- Estimation For Software ProjectsДокумент57 страницEstimation For Software ProjectsarunlaldsОценок пока нет

- 2 Project&ProcessmetricsДокумент30 страниц2 Project&ProcessmetricsarunlaldsОценок пока нет

- 3.OO TestingДокумент9 страниц3.OO TestingarunlaldsОценок пока нет

- 4.software Configuration Management-s3MCAДокумент25 страниц4.software Configuration Management-s3MCAarunlaldsОценок пока нет

- 1.software TestingДокумент40 страниц1.software TestingarunlaldsОценок пока нет

- Product Metrics For SoftwareДокумент26 страницProduct Metrics For SoftwarearunlaldsОценок пока нет

- 1.quality ConceptsДокумент12 страниц1.quality ConceptsarunlaldsОценок пока нет

- Pressman 7 CH 8Документ31 страницаPressman 7 CH 8arunlaldsОценок пока нет

- 3.software Quality AssuranceДокумент22 страницы3.software Quality AssurancearunlaldsОценок пока нет

- Pressman 7 CH 15Документ23 страницыPressman 7 CH 15arunlaldsОценок пока нет

- Chapter 5 - Understanding RequirementsДокумент6 страницChapter 5 - Understanding RequirementsDanish PawaskarОценок пока нет

- Chapter 05 1Документ20 страницChapter 05 1arunlaldsОценок пока нет

- (Solution Manual) Fundamentals of Electric Circuits 4ed - Sadiku-Pages-774-800Документ35 страниц(Solution Manual) Fundamentals of Electric Circuits 4ed - Sadiku-Pages-774-800Leo AudeОценок пока нет

- VP5 Datasheet Infusion Pump PDFДокумент2 страницыVP5 Datasheet Infusion Pump PDFsafouen karrayОценок пока нет

- Preboard Questions: Multiple ChoiceДокумент3 страницыPreboard Questions: Multiple ChoiceAlfredo CondeОценок пока нет

- Hybrid ModelДокумент9 страницHybrid ModelanjulОценок пока нет

- Investment Model QuestionsДокумент12 страницInvestment Model Questionssamuel debebe0% (1)

- DNV RP F401Документ23 страницыDNV RP F401Christopher BlevinsОценок пока нет

- Zott Business ModelДокумент25 страницZott Business ModelNico LightОценок пока нет

- Preserving and Randomizing Data Responses in Web Application Using Differential PrivacyДокумент9 страницPreserving and Randomizing Data Responses in Web Application Using Differential PrivacyInternational Journal of Innovative Science and Research Technology100% (1)

- Soil Bearing Capacity CalculationДокумент29 страницSoil Bearing Capacity CalculationJohn Jerome TerciñoОценок пока нет

- Contoh SRSДокумент46 страницContoh SRSFatur RachmanОценок пока нет

- Notebook PC Service Manual System Disassembly Model: - AMILO D 1845 (257SA0)Документ16 страницNotebook PC Service Manual System Disassembly Model: - AMILO D 1845 (257SA0)Robert DumitrescuОценок пока нет

- Introduction To GIS (Geographical Information System)Документ20 страницIntroduction To GIS (Geographical Information System)pwnjha100% (3)

- SDS-PAGE PrincipleДокумент2 страницыSDS-PAGE PrincipledhashrathОценок пока нет

- Mv324 Data SheetДокумент17 страницMv324 Data SheetGianmarco CastilloОценок пока нет

- Abstract of Talk by Dr. Nitin Oke For National Conference RLTДокумент2 страницыAbstract of Talk by Dr. Nitin Oke For National Conference RLTnitinОценок пока нет

- Electricity Markets PDFДокумент2 страницыElectricity Markets PDFAhmed KhairiОценок пока нет

- Anomaly Detection Principles and Algorithms: Mehrotra, K.G., Mohan, C.K., Huang, HДокумент1 страницаAnomaly Detection Principles and Algorithms: Mehrotra, K.G., Mohan, C.K., Huang, HIndi Wei-Huan HuОценок пока нет

- Database Management Systems: Lecture - 5Документ37 страницDatabase Management Systems: Lecture - 5harisОценок пока нет

- Tài Liệu CAT Pallet Truck NPP20NДокумент9 страницTài Liệu CAT Pallet Truck NPP20NJONHHY NGUYEN DANGОценок пока нет

- Kinetic Energy Recovery SystemДокумент2 страницыKinetic Energy Recovery SystemInternational Journal of Innovative Science and Research TechnologyОценок пока нет

- Fds 8878Документ12 страницFds 8878Rofo2015Оценок пока нет

- Isentropic ExponentДокумент2 страницыIsentropic ExponentAlf OtherspaceОценок пока нет

- NEW Handbook UG 160818 PDFДокумент118 страницNEW Handbook UG 160818 PDFHidayah MutalibОценок пока нет

- Structural Design 2Документ43 страницыStructural Design 2Meymuna AliОценок пока нет

- Supercritical CO2: Properties and Technological Applications - A ReviewДокумент38 страницSupercritical CO2: Properties and Technological Applications - A ReviewXuân ĐứcОценок пока нет

- Lorian Meyer-Wendt - Anton Webern - 3 Lieder Op.18Документ136 страницLorian Meyer-Wendt - Anton Webern - 3 Lieder Op.18Daniel Fuchs100% (1)

- A) I) Define The Term Variable Costs Variable Costs Are Costs That Change With The Quantity of Products SoldДокумент2 страницыA) I) Define The Term Variable Costs Variable Costs Are Costs That Change With The Quantity of Products SoldAleksandra LukanovskaОценок пока нет

- Opposite Corners CourseworkДокумент8 страницOpposite Corners Courseworkpqltufajd100% (2)

- 02 Sub-Surface Exploration 01Документ24 страницы02 Sub-Surface Exploration 01kabir AhmedОценок пока нет

- Elasticity Measurement of Local Taxes and Charges in Forecast of Own-Source Revenue (PAD) of Provincial Government in IndonesiaДокумент27 страницElasticity Measurement of Local Taxes and Charges in Forecast of Own-Source Revenue (PAD) of Provincial Government in Indonesiaayu desiОценок пока нет