Академический Документы

Профессиональный Документы

Культура Документы

Principal Component Analysis (PCA) : Dimensionality Reduction Using Pca

Загружено:

neeraj12121Исходное описание:

Оригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Principal Component Analysis (PCA) : Dimensionality Reduction Using Pca

Загружено:

neeraj12121Авторское право:

Доступные форматы

Principal Component

Analysis (PCA)

DIMENSIONALITY REDUCTION USING PCA 1

Introduction to PCA

PCA (Principal Component Analysis) Characteristics:

◦ An effective method for reducing a ◦ For unlabeled data

dataset’s dimensionality while ◦ A linear transform with solid

keeping spatial characteristics as mathematical foundation

much as possible

Applications

◦ Line/plane fitting

◦ Face recognition

◦ Machine learning

◦ ...

DIMENSIONALITY REDUCTION USING PCA 2

Story

DIMENSIONALITY REDUCTION USING PCA 3

Story Starts

I heard you are studying "Pee-See-Ay". I wonder what

that is...

Ah, it's just a method of summarizing some data.

• Look, we have some wine bottles standing here on the table. We can describe

each wine by its colour, by how strong it is, by how old it is

• We can compose a whole list of different characteristics of each wine in our

cellar.

• But many of them will measure related properties and so will be redundant.

• If so, we should be able to summarize each wine with fewer characteristics!

This is what PCA does.

DIMENSIONALITY REDUCTION USING PCA 4

Story Continues

This is interesting! So this PCA thing checks what

characteristics are redundant and discards them?

Excellent question, granny!

• Nooooo, PCA is not selecting some characteristics and discarding the others.

Instead, it constructs some new characteristics that turn out to summarize our

list of wines well.

• Of course these new characteristics are constructed using the old ones

• for example, a new characteristic might be computed as wine age minus wine

acidity level or some other combination like that (we call them linear

combinations).

• In fact, PCA finds the best possible characteristics, the ones that summarize

the list of wines as well as only possible (among all conceivable linear

combinations). This is why it is so useful.

DIMENSIONALITY REDUCTION USING PCA 5

Story Continues

Hmmm, this certainly sounds good, but I am not sure I understand. What do you

actually mean when you say that these new PCA characteristics "summarize" the

list of wines?

I guess I can give two different answers to this question MOM

• First answer is that you are looking for some wine properties (characteristics)

that strongly differ across wines. Indeed, imagine that you come up with a

property that is the same for most of the wines. This would not be very useful,

wouldn't it? Wines are very different, but your new property makes them all

look the same! This would certainly be a bad summary. Instead, PCA looks for

properties that show as much variation across wines as possible.

DIMENSIONALITY REDUCTION USING PCA 6

Story Continues

Hmmm, this certainly sounds good, but I am not sure I understand. What do you

actually mean when you say that these new PCA characteristics "summarize" the

list of wines?

• The second answer is that you look for the properties that would allow you to

predict, or "reconstruct", the original wine characteristics.

• Again, imagine that you come up with a property that has no relation to the

original characteristics; if you use only this new property, there is no way you

could reconstruct the original ones!

• This, again, would be a bad summary. So PCA looks for properties that allow to

reconstruct the original characteristics as well as possible.

DIMENSIONALITY REDUCTION USING PCA 7

Story Continues

Hey, these two "goals" of PCA sound so different! Why would they be equivalent?

• Hmmm. Perhaps I should make a little drawing (takes a napkin and starts

scribbling).

• Let us pick two wine characteristics, perhaps wine darkness and alcohol

content -- I don't know if they are correlated, but let's imagine that they are.

• Here is what a scatter plot of different wines could look like:

DIMENSIONALITY REDUCTION USING PCA 8

Story Continues

• Each dot in this "wine cloud" shows one particular wine. You see that the two

properties (x and y on this figure) are correlated.

• A new property can be constructed by drawing a line through the center of

this wine cloud and projecting all points onto this line.

• This new property will be given by a linear combination w1x+w2y, where each

line corresponds to some particular values of w1 and w2.

• Now look here very carefully -- here is how these projections look like for

different lines (red dots are projections of the blue dots):

DIMENSIONALITY REDUCTION USING PCA 9

Story Continues

• PCA will find the "best" line according to two different criteria of what is the

"best".

• First, the variation of values along this line should be maximal. Pay attention to

how the "spread" (we call it "variance") of the red dots changes while the line

rotates; can you see when it reaches maximum?

• Second, if we reconstruct the original two characteristics (position of a blue

dot) from the new one (position of a red dot), the reconstruction error will be

given by the length of the connecting red line. Observe how the length of

these red lines changes while the line rotates; can you see when the total

length reaches minimum?

If you stare at this animation for some

time, you will notice that "the maximum

"first principal component" variance" and "the minimum error" are

reached at the same time, namely when

the line points to the magenta ticks

DIMENSIONALITY REDUCTION USING PCA 10

Story Continues

Very nice, papa! I think I can see why the two goals yield the same result: it is

essentially because of the Pythagoras theorem, isn't it?

• Mathematically, the spread of the red dots is measured as the average squared distance

from the center of the wine cloud to each red dot - as you know, it is called the variance.

• On the other hand, the total reconstruction error is measured as the average squared

length of the corresponding red lines. But as the angle between red lines and the black

line is always 90∘, the sum of these two quantities is equal to the average squared

distance between the center of the wine cloud and each blue dot; this is precisely

Pythagoras theorem.

• Of course this average distance does not depend on the orientation of the black line, so

the higher the variance the lower the error (because their sum is constant).

• By the way, you can imagine that the black line is a solid rod and each red line is a spring.

• The energy of the spring is proportional to its squared length (this is known in physics as

the Hooke's law), so the rod will orient itself such as to minimize the sum of these

squared distances.

DIMENSIONALITY REDUCTION USING PCA 11

PCA

Common goal: Reduction of unlabeled data

◦ PCA: dimensionality reduction

◦ Objective function: Variance ↑

| | |

X x1 x 2 x n

| | |

DIMENSIONALITY REDUCTION USING PCA 12

Examples of PCA Projections

PCA projections

◦ 2D 1D

◦ 3D 2D

DIMENSIONALITY REDUCTION USING PCA 13

Quiz!

Problem Definition

Input Output

◦ A dataset X of n d-dim points which ◦ A unity vector u such that the

are zero justified: square sum of the dataset’s

projection onto u is maximized.

Dataset : X x1 , x 2 ,..., x n

n

Zero justified : x i 0

i 1

DIMENSIONALITY REDUCTION USING PCA

14

Projection

Angle between vectors Projection of x onto u

x u T

xT u

cos x cos xT u if u 1

Quiz! x u u

Extension: What is the projection of x onto

the subspace spanned by u1, u2, …, um?

x

u

x cos xT u if u 1

DIMENSIONALITY REDUCTION USING PCA

15

Steps for PCA

1 n

1. Find the sample mean: μ xi

n i 1

2. Compute the covariance matrix:

1 1 n

C XX (xi μ )(xi μ )T

T

n n i 1

3. Find the eigenvalues of C and arrange them into descending order,

1 2 d

with the corresponding eigenvectors {u1, u2 ,, ud }

4. The transformation is y UT x , with

| | |

U u1 u2 ud

| | |

DIMENSIONALITY REDUCTION USING PCA

16

Example of PCA

IRIS dataset projection

DIMENSIONALITY REDUCTION USING PCA

17

Weakness of PCA for

Classification

Not designed for classification problem (with labeled

training data)

Ideal situation Adversary situation

DIMENSIONALITY REDUCTION USING PCA

18

Is rotation necessary in PCA? If yes, Why?

What will happen if you don’t rotate the

components?

Yes, rotation (orthogonal) is necessary because it maximizes the

difference between variance captured by the component. This makes

the components easier to interpret. Not to forget, that’s the motive of

doing PCA where, we aim to select fewer components (than features)

which can explain the maximum variance in the data set. By doing

rotation, the relative location of the components doesn’t change, it only

changes the actual coordinates of the points.

If we don’t rotate the components, the effect of PCA will diminish and

we’ll have to select more number of components to explain variance in

the data set.

DIMENSIONALITY REDUCTION USING PCA 19

DIMENSIONALITY REDUCTION USING PCA 20

DIMENSIONALITY REDUCTION USING PCA 21

Вам также может понравиться

- Pca - Making Sense of Principal Component Analysis, Eigenvectors & Eigenvalues - Cross ValidatedДокумент5 страницPca - Making Sense of Principal Component Analysis, Eigenvectors & Eigenvalues - Cross ValidatedsharmiОценок пока нет

- Computer Vision: Spring 2006 15-385,-685 Instructor: S. Narasimhan Wean 5403 T-R 3:00pm - 4:20pmДокумент34 страницыComputer Vision: Spring 2006 15-385,-685 Instructor: S. Narasimhan Wean 5403 T-R 3:00pm - 4:20pmramnbantunОценок пока нет

- CS434a/541a: Pattern Recognition Prof. Olga VekslerДокумент42 страницыCS434a/541a: Pattern Recognition Prof. Olga Vekslerabhas kumarОценок пока нет

- Curse of Dimensionality, Dimensionality Reduction With PCAДокумент36 страницCurse of Dimensionality, Dimensionality Reduction With PCAYonatanОценок пока нет

- Hw4 ProbsДокумент12 страницHw4 ProbssfaawrgawgОценок пока нет

- Lec 5,6Документ46 страницLec 5,6ABHIRAJ EОценок пока нет

- 20 - 1 - ML - Unsup - 03 - Dbscan HdbscanДокумент21 страница20 - 1 - ML - Unsup - 03 - Dbscan HdbscanMohitKhemkaОценок пока нет

- Statistical Data Mining: Edward J. WegmanДокумент70 страницStatistical Data Mining: Edward J. Wegmansertse26Оценок пока нет

- Curse of Dimensionality, Dimensionality Reduction With PCAДокумент36 страницCurse of Dimensionality, Dimensionality Reduction With PCAAditya ChristianОценок пока нет

- Deep Learning Basics Lecture 7 Factor AnalysisДокумент32 страницыDeep Learning Basics Lecture 7 Factor AnalysisbarisОценок пока нет

- Blob Detection: - Matching Whole Images - Matching Small Regions - Matching ObjectsДокумент11 страницBlob Detection: - Matching Whole Images - Matching Small Regions - Matching ObjectsSajal JainОценок пока нет

- Lecture14 PcaДокумент63 страницыLecture14 PcaAbhishekОценок пока нет

- CMSC 671 Chapter 6 ConstraintsДокумент42 страницыCMSC 671 Chapter 6 ConstraintsSamir SabryОценок пока нет

- A Recommender System: John UrbanicДокумент36 страницA Recommender System: John Urbaniclevi_sum2008Оценок пока нет

- PCA, Eigenfaces, and Face Detection: CSC320: Introduction To Visual Computing Michael GuerzhoyДокумент30 страницPCA, Eigenfaces, and Face Detection: CSC320: Introduction To Visual Computing Michael GuerzhoyApricot BlueberryОценок пока нет

- 14: Dimensionality Reduction (PCA) : Motivation 1: Data CompressionДокумент7 страниц14: Dimensionality Reduction (PCA) : Motivation 1: Data CompressionmarcОценок пока нет

- Stereo Vision: The Correspondence ProblemДокумент23 страницыStereo Vision: The Correspondence ProblemGauri DeshmukhОценок пока нет

- 15 Unsupervised LearningДокумент8 страниц15 Unsupervised LearningJiahong HeОценок пока нет

- Dimensionality Reduction Using Principal Component AnalysisДокумент32 страницыDimensionality Reduction Using Principal Component Analysissai varunОценок пока нет

- Lecture 2 - Nearest-Neighbors MethodsДокумент57 страницLecture 2 - Nearest-Neighbors MethodsAli RazaОценок пока нет

- 3 Regression DiagnosticsДокумент53 страницы3 Regression DiagnosticsArda Hüseyinoğlu100% (1)

- CSE605 Computer Graphics Scan ConversionДокумент22 страницыCSE605 Computer Graphics Scan Conversionanithamary100% (1)

- Non-Parametric MethodsДокумент51 страницаNon-Parametric Methodsbill.morrissonОценок пока нет

- GIMP - Basic Color CurvesДокумент1 страницаGIMP - Basic Color CurvesNassro BolОценок пока нет

- Statistics Part1 2013Документ17 страницStatistics Part1 2013Victor De Paula VilaОценок пока нет

- Exploratory Data Analysis ReferenceДокумент49 страницExploratory Data Analysis ReferenceafghОценок пока нет

- PixelProjectedReflectionsAC V 1.92 WithnotesДокумент85 страницPixelProjectedReflectionsAC V 1.92 WithnotesStar ZhangОценок пока нет

- WINSEM2022-23 MEE3502 ETH VL2022230500702 Reference Material I 14-12-2022 3 - COMPUTER AIDED DESIGN (CAD) 14-DEC-22Документ17 страницWINSEM2022-23 MEE3502 ETH VL2022230500702 Reference Material I 14-12-2022 3 - COMPUTER AIDED DESIGN (CAD) 14-DEC-22Hariharan SОценок пока нет

- Accuracy Precision and RecallДокумент12 страницAccuracy Precision and Recallksoleti8254Оценок пока нет

- Dimensionality ReductionДокумент41 страницаDimensionality Reductionh_mursalinОценок пока нет

- Introduction To Data Science Unsupervised Learning: CS 194 Fall 2015 John CannyДокумент54 страницыIntroduction To Data Science Unsupervised Learning: CS 194 Fall 2015 John CannyPedro Jesús García RamosОценок пока нет

- 8.2 Graphsandconvergence: Discrete Mathematics: Functions On The Set of Natural NumbersДокумент9 страниц8.2 Graphsandconvergence: Discrete Mathematics: Functions On The Set of Natural NumbersVarun SinghОценок пока нет

- Lecture8 2015Документ51 страницаLecture8 2015hu jackОценок пока нет

- Dim Reduction & Pattern RecognitionДокумент63 страницыDim Reduction & Pattern RecognitionDharaneesh .R.PОценок пока нет

- Knn-Experiments - Jupyter NotebookДокумент10 страницKnn-Experiments - Jupyter Notebookapi-526286119Оценок пока нет

- Introduction-To-Ml-Part-3 EditedДокумент73 страницыIntroduction-To-Ml-Part-3 EditedHana AlhomraniОценок пока нет

- MidtermДокумент12 страницMidtermEman AsemОценок пока нет

- ch01 PDFДокумент17 страницch01 PDFlmORphlОценок пока нет

- CNN Basics and Techniques ExplainedДокумент19 страницCNN Basics and Techniques Explainedseul aloneОценок пока нет

- 03 Image Formation 2Документ64 страницы03 Image Formation 2herusyahputraОценок пока нет

- EECE 5639 Computer Vision I: Descriptors, Feature Matching, Hough TransformДокумент68 страницEECE 5639 Computer Vision I: Descriptors, Feature Matching, Hough TransformSourabh Sisodia SrßОценок пока нет

- Create Your Own CamScanner Using Python and OpenCVДокумент20 страницCreate Your Own CamScanner Using Python and OpenCVShirish GuptaОценок пока нет

- STAT 535 Final Exam: Efficient Algorithms for Santa's ElvesДокумент11 страницSTAT 535 Final Exam: Efficient Algorithms for Santa's Elvessykim657Оценок пока нет

- GIS Remote Sensing LectureДокумент27 страницGIS Remote Sensing LectureaddОценок пока нет

- Probability NotesДокумент70 страницProbability Notespeterwanjiru1738Оценок пока нет

- Feature Matching and RANSAC: 15-463: Computational Photography Alexei Efros, CMU, Fall 2005Документ36 страницFeature Matching and RANSAC: 15-463: Computational Photography Alexei Efros, CMU, Fall 2005shaguftahumaОценок пока нет

- Min ImДокумент17 страницMin ImRam Charan KonidelaОценок пока нет

- Vlsi Cad:: Logic To LayoutДокумент45 страницVlsi Cad:: Logic To LayoutSohini RoyОценок пока нет

- CS 4650/7650: Natural Language Processing: Neural Text ClassificationДокумент85 страницCS 4650/7650: Natural Language Processing: Neural Text ClassificationRahul GautamОценок пока нет

- Wjarosz Convolution 2001Документ16 страницWjarosz Convolution 2001_leviathan_Оценок пока нет

- Stable Diffusion A TutorialДокумент66 страницStable Diffusion A Tutorialmarkus.aurelius100% (1)

- Predictive Analytics - Session 3: Associate Professor Ole ManeesoonthornДокумент30 страницPredictive Analytics - Session 3: Associate Professor Ole ManeesoonthornSope DalleyОценок пока нет

- RegressionДокумент17 страницRegressionRenhe SONGОценок пока нет

- 07-Réseaux-convolutifs-v2.10Документ57 страниц07-Réseaux-convolutifs-v2.10Mathy Aristide GnabroОценок пока нет

- Lec 5Документ51 страницаLec 5Vân AnhОценок пока нет

- 07 PartitioningДокумент10 страниц07 PartitioningBruhath KotamrajuОценок пока нет

- 2014 Phillip Reu All About Speckles Speckle Size MeasurementДокумент3 страницы2014 Phillip Reu All About Speckles Speckle Size MeasurementGilberto LovatoОценок пока нет

- Brain Benders, Grades 3 - 5: Challenging Puzzles and Games for Math and Language ArtsОт EverandBrain Benders, Grades 3 - 5: Challenging Puzzles and Games for Math and Language ArtsОценок пока нет

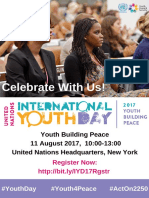

- International Youth Day 2017 FlyerДокумент1 страницаInternational Youth Day 2017 Flyerneeraj12121Оценок пока нет

- Metabolic Denitrosation of jY-Nitrosodimethylamine in Vivo in The RatДокумент8 страницMetabolic Denitrosation of jY-Nitrosodimethylamine in Vivo in The Ratneeraj12121Оценок пока нет

- A Glimpse of July 2018Документ1 страницаA Glimpse of July 2018neeraj12121Оценок пока нет

- CPC Cooperative Patent ClassificationДокумент11 страницCPC Cooperative Patent Classificationneeraj12121Оценок пока нет

- Y Division Nformation: EmissionДокумент4 страницыY Division Nformation: Emissionneeraj12121Оценок пока нет

- Interglobe Technologies: About The CompanyДокумент3 страницыInterglobe Technologies: About The Companyneeraj12121Оценок пока нет

- Genus Statistics of The Virgo N-Body Simulations and The 1.2-Jy Redshift SurveyДокумент20 страницGenus Statistics of The Virgo N-Body Simulations and The 1.2-Jy Redshift Surveyneeraj12121Оценок пока нет

- DS18B20 (Temperature Sensor)Документ20 страницDS18B20 (Temperature Sensor)Kroos ZumОценок пока нет

- Data Science Interview Questions: Answer HereДокумент54 страницыData Science Interview Questions: Answer Hereneeraj12121Оценок пока нет

- Storage Choke, Fully Potted Resign: Description ApplicationsДокумент3 страницыStorage Choke, Fully Potted Resign: Description Applicationsneeraj12121Оценок пока нет

- Early Active Zone Assembly in Drosophila: DSyd-1 and DLiprin-α RoleДокумент1 страницаEarly Active Zone Assembly in Drosophila: DSyd-1 and DLiprin-α Roleneeraj12121Оценок пока нет

- IJCB2020 For Review TemplateДокумент4 страницыIJCB2020 For Review Templateneeraj12121Оценок пока нет

- Pinki Ke Joote PDFДокумент28 страницPinki Ke Joote PDFneeraj12121100% (1)

- Reliable 4K Decoder Series with HDMI, VGA, BNC OutputsДокумент8 страницReliable 4K Decoder Series with HDMI, VGA, BNC Outputsneeraj12121Оценок пока нет

- DataДокумент5 страницDataneeraj12121Оценок пока нет

- Tune: A Research Platform For Distributed Model Selection and TrainingДокумент8 страницTune: A Research Platform For Distributed Model Selection and Trainingneeraj12121Оценок пока нет

- Machine Learning Interviews - Lessons From Both Sides - FSDLДокумент70 страницMachine Learning Interviews - Lessons From Both Sides - FSDLneeraj12121100% (2)

- Chart Is Right For You? PDFДокумент17 страницChart Is Right For You? PDFميلاد نوروزي رهبرОценок пока нет

- Functional Flow PDFДокумент1 страницаFunctional Flow PDFneeraj12121Оценок пока нет

- Ebook Getting Started With Design SystemsДокумент24 страницыEbook Getting Started With Design SystemsJuan Carlos AcostaОценок пока нет

- Machine Learning Model Optimization with Gradient BoostingДокумент17 страницMachine Learning Model Optimization with Gradient Boostingneeraj12121Оценок пока нет

- AI in Indian Governance: Opportunities, Challenges and a Path ForwardДокумент64 страницыAI in Indian Governance: Opportunities, Challenges and a Path Forwardneeraj12121Оценок пока нет

- Human Activity RecoДокумент17 страницHuman Activity Reconeeraj12121Оценок пока нет

- Ray: A Distributed Framework For Emerging AI ApplicationsДокумент19 страницRay: A Distributed Framework For Emerging AI Applicationsneeraj12121Оценок пока нет

- Artgan Content: Bout RojectДокумент2 страницыArtgan Content: Bout Rojectneeraj12121Оценок пока нет

- Getting Started Data Science GuideДокумент76 страницGetting Started Data Science GuideKunal LangerОценок пока нет

- Gradient BoostingДокумент80 страницGradient BoostingV Prasanna ShrinivasОценок пока нет

- SVM PresentationДокумент27 страницSVM Presentationneeraj12121Оценок пока нет

- Riccati EquationДокумент4 страницыRiccati Equationletter_ashish4444Оценок пока нет

- 2130002Документ19 страниц2130002ahmadfarrizОценок пока нет

- Appli DerivativesДокумент31 страницаAppli DerivativesBảo KhangОценок пока нет

- Manula of Functional AnalysisДокумент1 страницаManula of Functional AnalysisGhulam Muhammad BismilОценок пока нет

- Fantasy Theme Analysis MethodДокумент11 страницFantasy Theme Analysis Methodjakebobb7084Оценок пока нет

- Optimization TechniqueДокумент30 страницOptimization Techniquerahuljiit100% (6)

- 9709 s13 QP 33Документ4 страницы9709 s13 QP 33salak946290Оценок пока нет

- Ma MSC MathsДокумент42 страницыMa MSC Mathskaif0331Оценок пока нет

- HPLC method for pantoprazole and impuritiesДокумент9 страницHPLC method for pantoprazole and impuritiesJesus Barcenas HernandezОценок пока нет

- Functional AnalysisДокумент8 страницFunctional AnalysisJunaidvali ShaikОценок пока нет

- Sample Exam 1 EE 210Документ6 страницSample Exam 1 EE 210doomachaleyОценок пока нет

- WHO Lab Quality Management SystemДокумент246 страницWHO Lab Quality Management SystemMigori Art100% (1)

- 12MSD-complex Numbers Test-S1-2020Документ3 страницы12MSD-complex Numbers Test-S1-2020JerryОценок пока нет

- Analisis Swot Implementasi Sistem Penyediaan Air Minum Berkelanjutan (Green Spam) Pada Spam Regional Keburejo Di Jawa TengahДокумент17 страницAnalisis Swot Implementasi Sistem Penyediaan Air Minum Berkelanjutan (Green Spam) Pada Spam Regional Keburejo Di Jawa TengahJo BangОценок пока нет

- Cert BA UCTДокумент12 страницCert BA UCTfreedomОценок пока нет

- 3.1.decision TheoryДокумент43 страницы3.1.decision Theoryusnish banerjeeОценок пока нет

- Research: A Review and A PreviewДокумент79 страницResearch: A Review and A PreviewJonathan SiguinОценок пока нет

- Wxmaxima For Calculus I CQДокумент158 страницWxmaxima For Calculus I CQRisdita Putra ArfiyanОценок пока нет

- 16.323 Principles of Optimal Control: Mit OpencoursewareДокумент18 страниц16.323 Principles of Optimal Control: Mit Opencoursewaremousa bagherpourjahromiОценок пока нет

- Digital Forensics Using Data Mining: AbstractДокумент13 страницDigital Forensics Using Data Mining: AbstractTahir HussainОценок пока нет

- Stochastic ProcessДокумент24 страницыStochastic ProcessAnil KumarОценок пока нет

- Baldwinm J. D. e Baldin, J. L. (1998) - Princípios Do Comportamento Na Vida DiáriaДокумент800 страницBaldwinm J. D. e Baldin, J. L. (1998) - Princípios Do Comportamento Na Vida Diáriaifmelojr100% (1)

- Data Analytics VsДокумент3 страницыData Analytics VsJoel DsouzaОценок пока нет

- Chapter 1 - Perspective DrawingДокумент23 страницыChapter 1 - Perspective DrawingryanОценок пока нет

- STAT 200 Quiz 3Документ5 страницSTAT 200 Quiz 3ldlewisОценок пока нет

- 3 Properties of Real Numbers ChartДокумент1 страница3 Properties of Real Numbers ChartwlochОценок пока нет

- 065 - PR 12 - Jerky Lagrangian MechanicsДокумент2 страницы065 - PR 12 - Jerky Lagrangian MechanicsBradley Nartowt100% (1)

- Ortho PolyДокумент20 страницOrtho PolyRenganathanMuthuswamyОценок пока нет

- Taylors/Trial/9709/3/March/April/2008/Q9: Daily Questions 23/11/15 - SimonДокумент40 страницTaylors/Trial/9709/3/March/April/2008/Q9: Daily Questions 23/11/15 - SimonmengleeОценок пока нет